Firefox Performance Testing : A Python framework for Windows

(→Submission) |

(→Submission) |

||

| Line 129: | Line 129: | ||

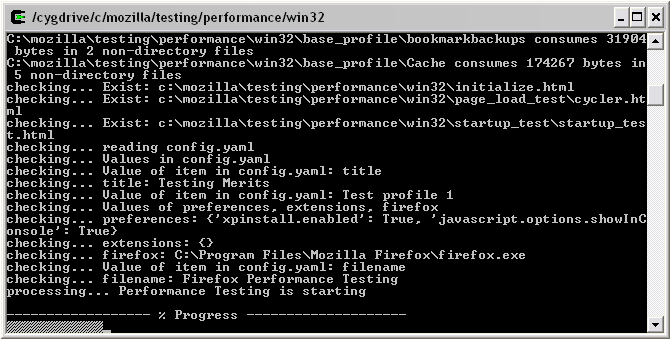

Some of the things it does:

- Validates files and directories needed for Performance Testing
- Displays informative messages to allow users to fix any invalidity in files or directories
- Displays an informative progress/status bar that informs users how far into the testing they are

Things that Alice and I discussed are reflected in this file:

- Users are not left staring at the console wondering what is happening when they run the Performance Tests
  - Progress bar and informative messages
- Users don't have to waste hours/days/weeks debugging the code to find out why they are having problems configuring the framework
  - I spent 2 weeks configuring the ~~bloody~~ framework
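The progress bar mentioned above could look roughly like the sketch below. It is not the project's pb.py, which isn't reproduced on this page; the `ProgressBar` class and its parameters are hypothetical. Writing a carriage return redraws the bar on the same console line, and flushing after each write makes it show up immediately instead of when the output buffer happens to fill.

```python
import sys


class ProgressBar:
    """Minimal text progress bar (hypothetical sketch, not the project's pb.py)."""

    def __init__(self, total, width=40, stream=None):
        self.total = total
        self.width = width
        self.stream = stream if stream is not None else sys.stdout
        self.done = 0

    def render(self):
        # Fraction of the work completed; an empty job counts as finished
        fraction = self.done / self.total if self.total else 1.0
        filled = int(round(fraction * self.width))
        return "[%s%s] %3d%%" % ("#" * filled, "-" * (self.width - filled),
                                 int(fraction * 100))

    def update(self, step=1, message=""):
        if self.done >= self.total:
            return  # already finished; nothing more to draw
        self.done = min(self.done + step, self.total)
        line = self.render() + (" " + message if message else "")
        # "\r" moves back to the start of the line so the bar redraws in place
        self.stream.write("\r" + line)
        # Flush so the user sees progress now rather than when the buffer fills
        self.stream.flush()
        if self.done == self.total:
            self.stream.write("\n")
            self.stream.flush()
```

For example, a test loop would create `ProgressBar(len(tests))` once and call `update(message=name)` after each test finishes.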

Revision as of 01:18, 7 November 2011

Contents

- 1 Project Name

- 2 Project Description

- 3 Project Leader(s)

- 4 Project Contributor(s)

- 5 Project Details

- 6 Project Problems and Solutions

- 6.1 Problem: Firefox doesn't know how to open this address, because the protocol (c) isn't associated with any programs

- 6.2 Solution: Firefox doesn't know how to open this address, because the protocol (c) isn't associated with any programs

- 6.3 Problem: ZeroDivisionError: integer division or modulo by zero

- 6.4 Solution: ZeroDivisionError: integer division or modulo by zero

- 6.5 Problem: This page should close Firefox. If it does not, please make sure that the dom.allow_scripts_to_close_windows preference is set to true in about:config

- 6.6 Solution: This page should close Firefox. If it does not, please make sure that the dom.allow_scripts_to_close_windows preference is set to true in about:config

- 7 Directory Structure of Framework

- 8 Project News

- 8.1 Saturday, September 23, 2006

- 8.2 Sunday, September 24, 2006

- 8.3 Monday, September 25, 2006

- 8.4 Friday, September 29, 2006

- 8.5 Sunday, October 1, 2006

- 8.6 Wednesday, October 4, 2006

- 8.7 Friday, October 6, 2006

- 8.8 Wednesday, October 11, 2006

- 8.9 Thursday, October 12, 2006

- 8.10 Friday, 20 Oct 2006

- 8.11 Tuesday, 31 Oct 2006

- 8.12 Tuesday, 21 Nov 2006

- 8.13 Wednesday, 29 Nov 2006

- 8.14 Sunday, 3 Dec 2006

- 8.15 Sunday, 10 Dec 2006

- 8.16 Wednesday, 13 Dec 2006

- 9 Project References

- 10 Project Events

Project Name

Firefox Performance Testing : A Python framework for Windows

Project Description

The goal of this project is to:

- get the current framework up and running to help work with others

- get the framework running in an automated fashion

- help with the creation and execution of new tests

- upgrade the framework to work with a Mozilla graph server

- work with the Mozilla community and contribute to an open source project

From this project, you will:

- learn Python

- learn about white box testing methodologies

- work with an open source community

- more generally learn about the functioning of QA in an open source community

This will benefit you in the future: when presented with a new program, you'll be able to outline how to approach testing it, give it adequate coverage, and provide some metric of program stability and functionality.

Note: This is NOT the typical mundane black box testing.

Project Leader(s)

Project Contributor(s)

Dean Woodside (dean)

- Submitted a shell (.sh) script that automates the tedious configuration of the performance testing framework

Alice Nodelman

- Discussed the things that need to be fixed to improve and strengthen the framework

- Gave suggestions on the new Performance Testing framework

Ben Hearsum (bhearsum)

- Set up the VM for performance testing

- Helped get me started with the debugging process for report.py, run_tests.py and ts.py

Michael Lau (mylau)

- Added comments on the documentation for setting up the Performance Testing framework for Windows

- Tested the new and improved Performance Testing framework (version 1)

- Gave constructive feedback on the documentation (version 1)

Eva Or (eor)

- Tested the new and improved Performance Testing framework (version 1)

- Gave constructive feedback on the new documentation (version 1)

David Hamp Gonsalves (inveigle)

- Gave pointers on flushing the buffer

- Helped with some grammar and sentence structuring for the documentation (version 1)

- Tested and gave constructive feedback on the framework (version 1)

- Looked into a batch file for automating the configuration and gave pointers

- Tested and commented on the new framework (version 2)

Rob Campbell (robcee)

- Gave me a number of python tips :)

Tom Aratyn (mystic)

- Introduced closures in Python

In-Class Contributors

Please let me know if I missed you. I've only listed the people from whom I received comments. If you participated but aren't listed as an in-class contributor, please add your comments here.

- Mark D'Souza (mdsouza)

- Sherman Fernandes (sjfern)

- Aditya Nanda Kuswanto (vipers101)

- Dave Manley (seneManley)

- Colin Guy (Guiness)

- Mohamed Attar (mojo)

- Man Choi Kwan (mckwan)

- Mark Paruzel (RealMarkP or FakeMarkP)

- Jeff Mossop (JBmossop)

- Melissa Peh (melz)

- Paul Yanchun Gu (gpaul)

- Vanessa Miranda (vanessa)

- Philip Vitorino (philly)

- Paul St-Denis (pstdenis)

- Mohammad Tirtashi (moe)

- Cesar Oliveira (cesar)

- Andrew Smith (andrew)

- Ben Hearsum (bhearsum)

- Erin Davey (davey_girl)

- Tom Aratyn (mystic)

- David Hamp

- Dean Woodside (dean)

Project Details

Improved Documentation

Latest

New Firefox Performance Testing Documentation

First Attempt

Performance Testing Setup Configuration Documentation

Details

This is different from Tinderbox. Two major differences are:

- First, it doesn't build; it just runs the performance tests given a path to the executable. This is helpful if you're testing the performance of an extension or a build from another server. (You could build on a fast server and then run the performance tests on a machine with low memory.)

- Second, it measures performance characteristics while it's running the page load tests: you can track CPU speed, memory, or any of the other counters listed here.
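The idea of recording counters while a test is running can be sketched as a background sampler thread. This is a minimal illustration, not the framework's code: it assumes each counter can be read through a zero-argument function, and `CounterSampler` is a hypothetical name. How the real framework reads Windows counters is not shown on this page.

```python
import threading
import time


class CounterSampler:
    """Sample counter functions in a background thread (hypothetical helper)."""

    def __init__(self, counters, interval=1.0):
        # counters: dict mapping a counter name to a zero-argument callable
        self.counters = counters
        self.interval = interval
        self.samples = {name: [] for name in counters}
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        # Take one reading immediately, then one per interval until stopped
        while True:
            for name, read in self.counters.items():
                self.samples[name].append(read())
            if self._stop.wait(self.interval):
                break

    def __enter__(self):
        self._thread.start()
        return self

    def __exit__(self, *exc):
        self._stop.set()
        self._thread.join()
        return False  # never swallow exceptions from the test run
```

For example, wrapping the page load run in `with CounterSampler({"clock": time.perf_counter}, interval=0.5) as sampler:` leaves one list of readings per counter in `sampler.samples` once the block exits.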

Web logs

- Mozilla Quality Assurance and Testing Blog for Mozilla Firefox and Thunderbird

- Mozilla Quality Assurance Performance Testing

Submission

Still in progress!

While it's sizzling, try out the Firefox Performance Testing Framework (version 2) using the three easy steps in the Quick Start section of the New Firefox Performance Testing Documentation.

| Artifact | Details | Links |
|---|---|---|
| run_tests.py | | run_tests.py |
| perfconfig.sh | This script is used to automate the tedious Firefox Performance Testing configuration | perfconfig.sh |
| Progress bar class | | pb.py |
| paths.py | | paths.py |
| Firefox Performance Testing Documentation (Version 1) | Firefox Performance Testing Framework (refer to Readme.txt) | Performance Testing Setup Configuration Documentation |
| Firefox Performance Testing Documentation (Version 2) | Goals achieved: / Additional: | New Firefox Performance Testing Documentation |
| Status Documentation | | |
| Firefox Performance Testing Framework Directory Structure | | Performance Testing Framework Directory Structure |
| Screen shots | | Not Applicable |
| Next Steps | | Next Big Steps |
| Reflections | | Reflections |

Next Steps

Extended Progress Chart (Version 2)

Progress (Version 1)

Comments on the README.TXT Documentation (By Mike Lau)

Getting Started

After following the setup procedures, I typed the following command:

    c:\> run_tests.py config.YAML

And I got an error message in the command prompt and a popup window (Liz Chak: solution to this problem).

Project Problems and Solutions

Problem: Firefox doesn't know how to open this address, because the protocol (c) isn't associated with any programs

If you didn't configure the paths in paths.py correctly, you may run into this problem when you run the Performance Testing Framework.

Solution: Firefox doesn't know how to open this address, because the protocol (c) isn't associated with any programs

In paths.py, INIT_URL, TS_URL and TP_URL have to be local file URLs (starting with file:///c:/), not plain file paths. Given a plain path such as c:\..., Firefox reads the drive letter "c" as the protocol, which produces this error:

    # The file URL to load when initializing a new profile
    INIT_URL = 'file:///c:/project/mozilla/testing/performance/win32/initialize.html'
    # The file URL to load for the startup test (Ts)
    TS_URL = 'file:///c:/project/mozilla/testing/performance/win32/startup_test/startup_test.html?begin='
    # The file URL to load for the page load test (Tp)
    TP_URL = 'file:///c:/project/mozilla/testing/performance/win32/page_load_test/cycler.html'

Problem: ZeroDivisionError: integer division or modulo by zero

    Traceback (most recent call last):
      File "C:\proj\mozilla\testing\performance\win32\run_tests.py", line 129, in ?
        test_file(sys.argv[i])
      File "C:\proj\mozilla\testing\performance\win32\run_tests.py", line 122, in test_file
        TP_RESOLUTION)
      File "C:\proj\mozilla\testing\performance\win32\report.py", line 152, in GenerateReport
        mean = mean / len(ts_times[i])
    ZeroDivisionError: integer division or modulo by zero

Solution: ZeroDivisionError: integer division or modulo by zero

Check that the base_profile directory you have set for BASE_PROFILE_DIR in paths.py actually has contents.
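A pre-flight check along these lines would catch the empty-profile case before run_tests.py reaches the division in report.py, and could also populate the profile. This is a sketch with hypothetical function names. The default paths are assumptions taken from this page's October 12, 2006 news entry, which says the contents of C:\proj\mozilla\testing\performance\win32\base_profile should also be in C:\extension_perf_testing\base_profile.

```python
import os
import shutil

# Assumed defaults from the October 12, 2006 news entry; adjust to your own setup.
FRAMEWORK_PROFILE = r"C:\proj\mozilla\testing\performance\win32\base_profile"
BASE_PROFILE_DIR = r"C:\extension_perf_testing\base_profile"


def check_base_profile(base_profile_dir=BASE_PROFILE_DIR):
    """Return a list of readable problems with the base profile directory ([] means OK)."""
    if not os.path.isdir(base_profile_dir):
        return ["BASE_PROFILE_DIR does not exist or is not a directory: %s" % base_profile_dir]
    if not os.listdir(base_profile_dir):
        return ["BASE_PROFILE_DIR is empty: %s" % base_profile_dir]
    return []


def sync_base_profile(source=FRAMEWORK_PROFILE, target=BASE_PROFILE_DIR):
    """Copy the framework's base_profile contents into target (needs Python 3.8+)."""
    if check_base_profile(source):
        raise FileNotFoundError("source profile is missing or empty: %s" % source)
    # dirs_exist_ok=True merges into an existing target instead of raising
    shutil.copytree(source, target, dirs_exist_ok=True)
    # Re-check the target so the caller gets the same style of report as before
    return check_base_profile(target)
```

Printing the messages from `check_base_profile()` before starting the tests gives users an actionable hint instead of a traceback.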

Problem: This page should close Firefox. If it does not, please make sure that the dom.allow_scripts_to_close_windows preference is set to true in about:config

Solution: This page should close Firefox. If it does not, please make sure that the dom.allow_scripts_to_close_windows preference is set to true in about:config
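The original solution text for this problem isn't preserved here. One way to apply the preference that the message asks for, without editing about:config by hand, is to add it to the profile's user.js file, which Firefox reads at startup. The sketch below assumes the framework creates its test profiles from BASE_PROFILE_DIR, so that is the directory you would pass in; the function name is hypothetical.

```python
import os

PREF_NAME = "dom.allow_scripts_to_close_windows"
PREF_LINE = 'user_pref("%s", true);' % PREF_NAME


def allow_scripts_to_close_windows(profile_dir):
    """Append the pref to <profile_dir>/user.js unless that exact line is present.

    Returns True if the file was changed, False if the line was already there.
    """
    user_js = os.path.join(profile_dir, "user.js")
    existing = ""
    if os.path.exists(user_js):
        with open(user_js, encoding="utf-8") as f:
            existing = f.read()
    if PREF_LINE in existing:
        return False  # already set; avoid duplicate lines
    with open(user_js, "a", encoding="utf-8") as f:
        # Start on a fresh line if the file doesn't end with a newline
        if existing and not existing.endswith("\n"):
            f.write("\n")
        f.write(PREF_LINE + "\n")
    return True
```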

Directory Structure of Framework

Overview of Structure

A glance at the framework file structure (CVS files not included):

    win32
    |
    |__ base_profile (dir)
    |   |
    |   |__ bookmarkbackups (dir)
    |   |   |
    |   |   |__ .html files
    |   |
    |   |__ Cache (dir)
    |   |
    |   |__ .bak, .html, .ini, .dat, .txt, .js, .rdf, .mfl files
    |
    |__ page_load_test (dir)
    |   |
    |   |__ base (dir)
    |   |   |
    |   |   |__ other dirs and .html files
    |   |
    |   |__ cycler.html & report.html
    |
    |__ startup_test
    |   |
    |   |__ startup_test.html
    |
    |__ extension_perf_reports (dir for generated reports)
    |
    |__ run_tests.py, paths.py, config.yaml and other .py, .html files
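The layout above can also be checked programmatically before a run, in the spirit of the framework's file and directory validation. This sketch treats the entries in the diagram as required; that list and the `missing_entries` helper are assumptions for illustration, not the framework's actual checks.

```python
import os

# Entries taken from the diagram above; whether the real framework requires
# all of them (e.g. extension_perf_reports before a first run) is an assumption.
REQUIRED_DIRS = ["base_profile", "page_load_test", "startup_test", "extension_perf_reports"]
REQUIRED_FILES = [
    "run_tests.py",
    "paths.py",
    "config.yaml",
    os.path.join("page_load_test", "cycler.html"),
    os.path.join("startup_test", "startup_test.html"),
]


def missing_entries(win32_dir):
    """Return the required directories (with a trailing separator) and files that are missing."""
    missing = [d + os.sep for d in REQUIRED_DIRS
               if not os.path.isdir(os.path.join(win32_dir, d))]
    missing += [f for f in REQUIRED_FILES
                if not os.path.isfile(os.path.join(win32_dir, f))]
    return missing
```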

base_profile/

page_load_test/

startup_test/

extension_perf_reports/

run_tests.py, paths.py

Project News

Saturday, September 23, 2006

Performance tests didn't run successfully.

    Traceback (most recent call last):
      File "C:\proj\mozilla\testing\performance\win32\run_tests.py", line 129, in ?
        test_file(sys.argv[i])
      File "C:\proj\mozilla\testing\performance\win32\run_tests.py", line 122, in test_file
        TP_RESOLUTION)
      File "C:\proj\mozilla\testing\performance\win32\report.py", line 152, in GenerateReport
        mean = mean / len(ts_times[i])
    ZeroDivisionError: integer division or modulo by zero

Sunday, September 24, 2006

Gained a further understanding of the approach to testing with the Python framework.

Monday, September 25, 2006

elichak will be working with Alice on a resolution so that the results are generated in the extension_perf_testing\base_profile and extension_perf_reports folders.

Friday, September 29, 2006

elichak reconfigured the machine's environment to run the tests again. She cleaned up old files to do a clean test, reinstalled Cygwin (replacing Make 3.80 with Make 3.81) and updated the testing files through CVS.

Sunday, October 1, 2006

Alice has successfully run the tests. The ZeroDivisionError didn't occur again after she updated her test files, and results were generated in the extension_perf_testing\base_profile and extension_perf_reports folders. elichak attempted to run the tests with Alice's code, but the ZeroDivisionError still occurred on her machine.

Wednesday, October 4, 2006

Elichak consulted Robcee about the ZeroDivisionError, and he suggested a few things, such as debugging the script. Elichak found that the value of ts_time in report.py is empty but couldn't find out why it isn't assigned. According to Alice, she didn't debug the scripts; she only had to update the files to make them work.

Friday, October 6, 2006

Ben set up a VM for elichak to run her performance tests in.

Wednesday, October 11, 2006

    for ts_time in ts_times[i]:
        mean += float(ts_time)
    mean = mean / len(ts_times[i])
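The loop above divides by len(ts_times[i]) without checking it, so an empty list of startup times surfaces only as a bare ZeroDivisionError. A guarded variant, sketched here as a hypothetical standalone helper rather than a patch to report.py, reports the likely cause instead:

```python
def mean_startup_time(ts_times_for_run):
    """Average one run's startup (Ts) times, given as strings or numbers.

    Hypothetical helper: raises a descriptive ValueError instead of
    ZeroDivisionError when no times were recorded.
    """
    if not ts_times_for_run:
        raise ValueError("no startup (Ts) times were recorded; check that "
                         "BASE_PROFILE_DIR in paths.py points to a populated "
                         "base_profile directory")
    total = 0.0
    for ts_time in ts_times_for_run:
        total += float(ts_time)
    return total / len(ts_times_for_run)
```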

Thursday, October 12, 2006

Work completed

The ZeroDivisionError is solved. It turned out to be just a configuration problem: the documentation for setting up the environment was too subtle on this point and needs a rework.

Solution

The contents of C:\proj\mozilla\testing\performance\win32\base_profile should also be in the C:\extension_perf_testing\base_profile directory.

All work for this project is done on the VM, hera.senecac.on.ca.

Work in progress

Friday, 20 Oct 2006

Last week, elichak decided to work on automating the environment setup and the performance testing. The performance testing and environment setup are currently scattered all over the place, which makes them tedious for a developer to set up. The automation will entail generating directories, dropping files into directories, installing libraries, providing options to configure the performance tests, etc.

Tuesday, 31 Oct 2006

Alice and Liz had a meeting and established the key things that need to be done.

What needs to be done:

How do we fix this?

Tuesday, 21 Nov 2006

Refer to the progress chart: Performance Testing Framework progress chart

Wednesday, 29 Nov 2006

In-class Performance Testing Framework Configuration

Sunday, 3 Dec 2006

Sunday, 10 Dec 2006

Wednesday, 13 Dec 2006

The current Firefox Performance Testing Framework is effective and efficient.

Goals achieved:

Things To-do (Immediately)

Project References

Project Events

In-class Firefox Performance Testing

Before you begin

Things to look out for

Comments on the Firefox Performance Testing Framework

Instructions:

List of comments:

Reflections on the project

Now that the first phase of the project is done, I would like to sit back and reflect on some of the experiences I have had with this project. Not only did this project grow, I grew with it. I've learned an endless number of things by working on this project (not just technical stuff). And as the saying goes, "you only learn from experience"!

Configuration frustration

Words can't express how frustrated I initially was with the framework. I hit brick walls countless times when I tried to get the framework up and running, and I was on the verge of giving up. It was only through my own perseverance and determination that I got it to work.

Outcome: A list of things to fix in the framework to ease the configuration, strengthen the framework and improve the documentation!!

First deliverable

I asked the class to test my first deliverable. I had faith that my framework would delight my testers, but I was proven wrong. Even with all the effort I put into my first deliverable, it still left a group of frustrated and agonized users. Many of them ranted about what could be improved in the framework, hence:

Outcome: A list of things to automate in the framework to EASE THE CONFIGURATION

Final deliverable

I'm glad that my first tester was thrilled with my Performance Testing framework. There is nothing better than a happy user. What I've learned is that TESTING is the KEY to a successful application. Why do so many of us developers neglect it??

Credits

Dean Woodside, Alice Nodelman, Ben Hearsum, Michael Lau, Eva Or, David Hamp Gonsalves, Dave Humphrey, Rob Campbell and, of course, the DPS909 class who tested my framework. These are the individuals who played a significant part in steering this framework toward success!

Bon Echo Community Test Day
