GPU621/The Chapel Programming Language


Project Description

Chapel is a portable, scalable, open-source parallel programming language designed for productivity. It is a high-level language that aims to be easy to read and write, with some similarity to Python. Its performance can compete with, and in some cases surpass, MPI/OpenMP. This project introduces the main functionality of the Chapel programming language, compares its code and performance to C++ MPI/OpenMP, and analyzes the pros and cons of Chapel.

Team Members

Installation

Chapel is an open-source language that is still actively updated. The latest version as of the creation of this project is 1.23.0, released on October 15, 2020.

There is currently no official support for Chapel in major IDEs such as Visual Studio, which is a usability downside compared to other languages.

Select one of the following options to install The Chapel Programming Language.

Pre-packaged Chapel installations are available for:

- Cray® XC™ systems

- Docker users (requires Docker Engine, which is available on Linux, macOS, and Windows 10)

- Mac OS X Homebrew users

GitHub source code download available here.

Users can also try Chapel online here, though the online version is currently stuck on Chapel 1.20.

The Chapel Programming Language

Main Functionality

//https://chapel-lang.org/docs/primers/

Language Basics

Variables

A variable declaration requires a type or an initializer.

var: declares a variable.

const: declares a constant whose value may be computed at run time. Must be initialized.

param: declares a compile-time constant. Its value must be known at compile time.

var myVar1: real;

const myVar2: real = sqrt(myVar1);

param myVar3 = 3.14;

config: placed before the var, const, or param keyword to allow the value to be overridden on the command line (at compile time for a config param).

config var myVar4: bool = false; // override with: ./variable --myVar4=true

Procedures:

A procedure is like a function in C++: it can be called from elsewhere in the program.

proc plus(x: int) : int

{

return x + 1;

}

Classes:

A class is a type that groups variables (fields), procedures (methods) and iterators. The class keyword declares a new class type, and the new keyword creates an instance of it.

class myClass {

var a, b: int;

proc print() {

writeln("a = ", a, " b = ", b);

}

}

var foo = new myClass(0, 1);

foo.print();

Records:

Records are similar to classes, but they do not support inheritance or virtual dispatch. They do support copy initialization and copy assignment, and each record variable refers to its own distinct memory.
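The difference between a record and a class can be sketched as follows. This is a minimal illustration, assuming Chapel 1.23; the type names PointRec and PointCls are hypothetical and do not come from the project.

```chapel
// A record and a class with the same fields (hypothetical names).
record PointRec {
  var x, y: int;
}

class PointCls {
  var x, y: int;
}

// Assigning a record copies it: r2 is a separate value.
var r1 = new PointRec(1, 2);
var r2 = r1;
r2.x = 10;
writeln(r1.x);          // 1: r1 is unaffected by the change to r2

// A class variable refers to an object: c2 borrows the object owned by c1.
var c1 = new PointCls(1, 2);
var c2 = c1.borrow();
c2.x = 10;
writeln(c1.x);          // 10: both names refer to the same object
```

The borrow() call is used here so that c1 keeps ownership of the object while c2 refers to it.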

Iterators

Iterators are declared like procedures in Chapel, but with the iter keyword. Unlike procedures, iterators typically return a sequence of values.

The yield statement is used inside an iterator body: it returns a value and suspends execution of the iterator until the next value is requested.

iter fibonacci(n: int): int {

var i1 = 0, i2 = 1;

for i in 1..#n {

yield i1;

var i3 = i1 + i2;

i1 = i2;

i2 = i3;

}

}

config const n = 10;

for idVar in fibonacci(n) do

writeln(" - ", idVar);

Parallel Iterators

There are two types of parallel iterator in Chapel:

Leader-follower iterators: implement zippered forall loops.

Standalone parallel iterators: implement non-zippered forall loops.

Example code (a serial zippered loop over a range and an iterator):

iter fibonacci(n: int): int {

var i1 = 0, i2 = 1;

for i in 1..#n {

yield i1;

var i3 = i1 + i2;

i1 = i2;

i2 = i3;

}

}

config const n = 10;

// 0..#n has exactly n elements, matching the n values yielded by fibonacci(n)
for (i,idVar) in zip(0..#n, fibonacci(n)) do

writeln("#", i, " - ", idVar);

Leader-follower iterator

forall (a, b, c) in zip(A, B, C) do

//here, A is the leader, and A, B, C are all followers

The leader iterator creates the parallel tasks for the forall loop and assigns a portion of the iterations to each task.

Each follower iterator takes the work assigned by the leader as input and yields the corresponding elements in order.
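A zippered forall loop over three arrays might look like the sketch below. It assumes Chapel 1.23 and uses the built-in array iterators; the problem size n and the arithmetic in the loop body are hypothetical and chosen only for illustration.

```chapel
config const n = 8;          // hypothetical problem size

var A, B, C: [1..n] real;
B = 1.0;
C = 2.0;

// A is the leader: it decides how 1..n is split among tasks.
// A, B and C all act as followers and yield matching elements.
forall (a, b, c) in zip(A, B, C) do
  a = b + 3.0 * c;

writeln(A);                  // every element is now 7.0
```

Because A, B and C share the same index set, each follower can yield the elements that correspond to the chunk handed out by the leader.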

Task Parallelism

Basic

Three basic parallel features:

begin: creates an independent task that runs concurrently with the task that created it. The order in which the two execute is not determined.

cobegin: creates one task per statement in its block. The parent task does not continue until all the statements inside have finished.

coforall: a loop form of cobegin in which each iteration is a separate task. The parent task likewise does not continue until the loop has finished. Statements within a single iteration execute in order, but the order in which the iterations run is not determined.

Example code:

const numTasks = here.numPUs();

cobegin {

writeln("Hello from task 1 of 2");

writeln("Hello from task 2 of 2");

}

coforall tid in 1..numTasks do

writef("Hello from task %n of %n\n", tid, numTasks);
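The example above covers cobegin and coforall. A begin statement could be sketched as follows, assuming Chapel 1.23. The sync block used to wait for the tasks is not covered in the text above and is an assumption of this sketch, as are the variable names.

```chapel
var a = 0, b = 0;

// A sync block waits for every task started inside it.
sync {
  // Each begin starts a task and returns immediately.
  begin with (ref a) { a = 1; }
  begin with (ref b) { b = 2; }
}

// Both tasks have finished once the sync block ends.
writeln(a + b);   // 3
```

Without the sync block, the parent task would not wait, and the final writeln could run before either task had stored its value.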

Sync/Single

sync and single are type qualifiers. By convention, the names of sync and single variables end with $.

Each such variable has an associated state (full or empty). When it is declared with an initializing expression, its state is full; otherwise it is empty and its value is the default value of its type.

A value can normally be written only when the state is empty; after the write, the state changes to full.

A value can normally be read only when the state is full; after the read, a sync variable changes to empty, while a single variable stays full.

Methods for sync/single variables:

- For both sync and single variables:

- isFull - returns true if the variable's state is full.

- writeEF() - blocks until the variable's state is empty, then sets the value to the argument and leaves the state full.

- readFF() - blocks until the variable's state is full, then returns the value and leaves the state full. A sync or single variable cannot be output directly; use this method to output the value.

- readXX() - returns the value regardless of the variable's state.

- For sync variables only:

- reset() - sets the value to the default value of its type and sets the state to empty.

- readFE() - blocks until the variable's state is full, then returns the value and leaves the state empty. This method can also be used to output the value.

- writeXF() - sets the value to the argument without blocking, regardless of the state, and leaves the state full.

- writeFF() - blocks until the variable's state is full, then sets the value to the argument without changing the state.

Example code:

var sy$: sync int = 2;

var getSy = sy$;

writeln("Default value: ", getSy);

var done$: sync bool;

writeln("Create thread");

begin {

var getNewsy = sy$;

writeln("In child thread, sy=", getNewsy);

done$ = true;

}

writeln("Thread created");

var result = done$.readXX();

writeln("Thread finished? ", result);

sy$ = 20;

writeln("Thread finished? ", done$.readFE());
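The explicit methods listed above can also be used directly instead of plain reads and assignments. The following is a minimal producer/consumer sketch, assuming Chapel 1.23; the variable names slot$ and sum are hypothetical.

```chapel
var slot$: sync int;            // declared without an initializer: empty

// Producer task: each write blocks until the slot is empty.
begin {
  for i in 1..3 do
    slot$.writeEF(i);           // empty -> full
}

// Consumer (main task): each read blocks until the slot is full.
var sum = 0;
for i in 1..3 do
  sum += slot$.readFE();        // full -> empty

writeln(sum);                   // 6
```

The full/empty state forces the two tasks to alternate, so every value written by the producer is read exactly once.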

Locality

The built-in type locale is used to represent locales in Chapel. When a task is trying to access a variable within the same locale, the cost is less compared to accessing a variable from another locale.

numLocales: a built-in variable which returns the number of locales for the current program as an integer.

Locales array: a built-in array, which stores the locale values for the program as elements in the array. The ID of each locale value is its index in the Locales array.

here: a built-in variable which returns the locale value that the current task is running on. In Chapel, all programs begin execution from locale #0.
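These built-ins can be combined as in the sketch below, assuming Chapel 1.23. The on statement, which moves execution to a given locale, is not described in the text above and is an assumption of this sketch.

```chapel
// The program starts on locale #0.
writeln("started on locale ", here.id, " of ", numLocales);

// Visit every locale in the built-in Locales array.
for loc in Locales do
  on loc do
    writeln("hello from locale ", here.id);
```

On a single-locale build, numLocales is 1 and the loop prints one line.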

Data Parallelism

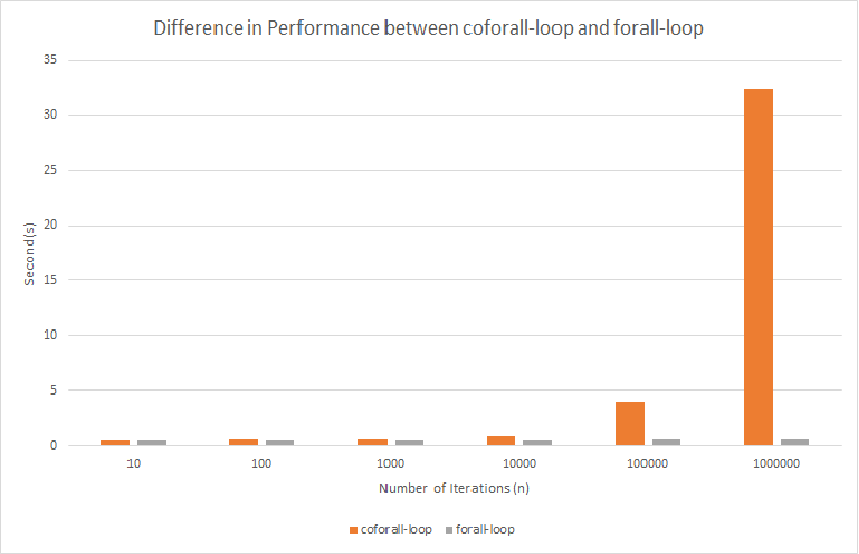

Forall-loop: important concept in Chapel for data parallelism. Like the coforall-loops, the forall-loop is also a parallel version of a for-loop in Chapel. The motivation behind forall-loops is to be able to handle a large number of iterations without having to create a task for every iteration like the coforall-loop.

const n = 1000000;

var iterations: [1..n] real;

With a coforall-loop, each task is responsible for a single iteration. Creating, scheduling, and destroying one task per iteration causes a significant performance hit, which outweighs the benefit of parallelism when each task does so little computation.

coforall it in iterations do {

it += 1.0;

}

A forall-loop automatically creates an appropriate number of tasks for the system and assigns a roughly equal share of the loop iterations to each task. The number of tasks created is generally based on the number of cores that the system’s processor has. For example, a quad-core processor running this program with 1,000,000 iterations would create 4 tasks and assign 250,000 iterations to each task.

forall it in iterations do {

it += 1.0;

}
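The arithmetic above can be checked on a given machine with a sketch like the following, assuming Chapel 1.23. It uses here.maxTaskPar as an estimate of how many tasks a forall-loop would use on the current locale; the actual split is decided by the runtime and may differ. The reduction at the end is not covered above and is used only to confirm that every element was updated.

```chapel
const n = 1000000;
var iterations: [1..n] real;

forall it in iterations do
  it += 1.0;

// Estimate of the task count and the share of iterations per task.
const numTasks = here.maxTaskPar;
writeln("up to ", numTasks, " tasks, about ", n / numTasks,
        " iterations each");

// Every element was incremented exactly once, so the sum equals n.
const total = + reduce iterations;
writeln(total == n:real);
```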

Library Utilities

Time Module

Timer: a timer is part of the Time module, which can be imported with a use statement as follows:

use Time;

/* Create a Timer t */

var t: Timer;

t.start();

writeln("Operation start");

sleep(5);

writeln("Operation end");

t.stop();

/* return the time in milliseconds that was recorded by the timer */

writeln(t.elapsed(TimeUnits.milliseconds));

t.clear();

List Module

List: the list type can be imported using the following statement:

use List;

var new_list: list(int) = 1..5;

writeln(new_list);

Output: [1, 2, 3, 4, 5]

for i in 6..10 do {

new_list.append(i);

}

writeln(new_list);

Output: [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

/* parSafe needs to be set to true if the list is going to be used in parallel */

var new_list2: list(int, parSafe=true);

forall i in 0..5 with (ref new_list2) {

new_list2.append(i);

}

writeln(new_list2);

Output: [0, 1, 2, 3, 4, 5] (the order of the elements may vary between runs, because the appends happen in parallel)

sort(): used to sort the list in ascending order.

pop(index): used to pop the element at the specified index.

clear(): used to clear all elements from the list.

indexOf(value): used to retrieve the index of the value specified, returns -1 if not found.

insert(index, value): used to insert the value at the specified index.
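The methods above could be used together as in the following sketch. It assumes the Chapel 1.23 List module, where lists are 0-indexed and the methods have the names given above (later releases may rename or deprecate some of them); the variable names are hypothetical.

```chapel
use List;

var nums: list(int);
nums.append(3);
nums.append(1);
nums.append(2);

nums.sort();                      // ascending order: 1, 2, 3
nums.insert(0, 0);                // insert value 0 at index 0: 0, 1, 2, 3

const missing = nums.indexOf(42); // -1, because 42 is not in the list

// Assuming 0-based indexing, size - 1 is the index of the last element.
const last = nums.pop(nums.size - 1);
writeln(last);

nums.clear();
writeln(nums.size);               // 0 after clear()
```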

Comparison to OpenMP

// Chapel is short and concise.

// OpenMP code

for(i = 0 ; i<niter; i++) {

start_time();

#pragma omp parallel for

for(…) {}

}

stop_time();

// Chapel code

for i in 1..niter {

start_time();

forall .. {}

}

stop_time();


//Hello World OpenMP

#include <cstdio>

#include <iostream>

#include <omp.h>

#include <chrono>

using namespace std::chrono;

// report system time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<milliseconds>(span);

std::cout << msg << " - took - " <<

ms.count() << " milliseconds" << std::endl;

}

int main() {

steady_clock::time_point ts, te;

ts = steady_clock::now();

#pragma omp parallel

{

int tid = omp_get_thread_num();

int nt = omp_get_num_threads();

printf("Hello from task %d of %d \n", tid, nt);

}

te = steady_clock::now();

reportTime("Hello World", te - ts);

}

//Hello World Chapel

use Time;

var t: Timer;

//const numTasks = here.numPUs();

const numTasks = 8;

t.start();

coforall tid in 1..numTasks do

writef("Hello from task %n of %n \n", tid, numTasks);

t.stop();

writeln(t.elapsed(TimeUnits.milliseconds));

Pros and Cons of Using The Chapel

Pros

- Open source, hosted on GitHub.

- About as readable and writable as Python.

- The compiler is getting faster and producing faster code, with performance comparable to OpenMP/MPI.

- There are lots of examples, tutorials and documentation available.

- A global namespace supporting direct access to local or remote variables.

- Data parallelism and task parallelism.

- Friendly community.

Cons

- Lack of a native Windows version.

- Adoption: it is hard to gain users compared to other parallel programming platforms.

- The user base is very small.

- It is unlikely to be used in the IT industry in the near future (or ever), unless you are starting your own company and decide to try Chapel.

- A small number of contributors to this open-source project, with much less support and fewer updates than projects backed by IT giants.

- Very few projects are based on Chapel.

- It is more suited to research projects than to products.

- The package is easy to install, but not as easy as other tools like OpenMP/MPI.

- There is no central place where other people could look for your work if you wanted to publish it as an external package.

- Users do not have much reason to start trying the language, given better options like C++ with OpenMP/MPI.

References

- The Chapel Programming Language official page: https://chapel-lang.org/

- The Chapel Documentation: https://chapel-lang.org/docs/#

- Full-Length Chapel Tutorial: https://chapel-lang.org/tutorials.html

- Learn Chapel in Y Minutes: https://learnxinyminutes.com/docs/chapel/

- Specific Chapel Concepts or Features: https://chapel-lang.org/docs/primers/

- October 2018 Chapel Tutorial: https://chapel-lang.org/tmp/OctTut2018/slides.html

- The Chapel Promotional Video: https://youtu.be/2yye1yJPcsg

- The Chapel Overview Talk Video: https://youtu.be/ko11tLuchvg

- The Chapel Overview Talk Slide: https://chapel-lang.org/presentations/ChapelForHPCKM-presented.pdf

- Comparative Performance and Optimization of Chapel: https://chapel-lang.org/CHIUW/2017/kayraklioglu-slides.pdf

- The Parallel Research Kernels: https://www.nas.nasa.gov/assets/pdf/ams/2016/AMS_20161013_VanDerWijngaart.pdf