DPS921/ND-R&D


C++11 Threads Library Comparison to OpenMP

Group Members

Daniel Bogomazov

Nick Krillis

How Threads Work

OpenMP Threads

Threading in OpenMP works through compiler directives. Each directive names a construct, and the parallel construct creates a parallel region in which multiple threads execute.

The general form of a directive is:

#pragma omp construct [clause, ...]

structured block

The construct selects the parallel programming command to apply. A construct is mandatory: without one, the directive gives OpenMP nothing to execute.

For example:

#pragma omp parallel

The above code uses the parallel construct, which identifies a block of code to be executed by multiple threads (a parallel region).

Implicit Barrier

In OpenMP, a parallel region ends with an implicit barrier by default. At the barrier, every thread in the team waits until all threads have finished the region. The team then collapses back into one thread, the master thread, which continues executing.

// OpenMP - Parallel Construct

// omp_parallel.cpp

#include <iostream>

#include <omp.h>

int main() {

#pragma omp parallel

{

std::cout << "Hello\n";

}

std::cout << "Fin\n";

return 0;

}

Output:

Hello
Hello
Hello
Hello
Hello
Hello
Fin

C++11 Threads

C++11 introduced threading through the thread library.

Unlike OpenMP, C++11 does not use parallel regions as barriers for its threading. When a thread is started with the C++11 thread library, we must consider the scope of the parent thread. If a std::thread object is destroyed while its thread is still joinable (neither joined nor detached), for example because the parent leaves the scope first, std::terminate is called and the program aborts.

Join and Detach

When using the join function on the child thread, the parent thread will be blocked until the child thread returns.

                    t2
           ____________________
          /                    \
__________/\___________________|/\__________
    t1        t1     t2.join() |     t1

When using the detach function on the child thread, the two threads split and run independently, and the std::thread object no longer refers to the child. The child can keep running after the parent thread leaves the scope in which it was created, and its resources are released automatically when it finishes. Note that once main returns the process ends, which also ends any detached threads that are still running.

OpenMP does not have this functionality: its threads cannot execute instructions outside of the parallel region in the way a detached C++11 thread can.

                          t2
           ________________________________
          /
__________/\_______________________
    t1        t1     t2.detach()

Creating a Thread

The following is the template for the thread constructor that launches a thread. The thread begins to run as soon as it is constructed.

f is the function, functor, or lambda expression to be executed in the thread. args are the arguments to pass to f.

template<class Function, class... Args> explicit thread(Function&& f, Args&&... args);

How Multithreading Works

Multithreading With OpenMP

#include <iostream>

#include <omp.h>

int main() {

#pragma omp parallel

{

int tid = omp_get_thread_num();

std::cout << "Hi from thread "<< tid << '\n';

}

return 0;

}

Output:

Hi from thread Hi from thread 2 0 Hi from thread 1 Hi from thread 3

In the code above, the threads' writes intermingle and create jumbled output. All threads write to the cout stream at the same time, and because each statement chains several << operations, the pieces written by one thread can be interleaved with the pieces written by another.

Threading with C++11

Unlike OpenMP, C++11 threads are created by the programmer instead of the compiler.

std::this_thread::get_id() is similar to OpenMP's omp_get_thread_num(), but instead of an int it returns a std::thread::id.

// cpp11.multithreading.cpp

#include <iostream>

#include <vector>

#include <thread>

void func1(int index) {

std::cout << "Index: " << index << " - ID: " << std::this_thread::get_id() << std::endl;

}

int main() {

int numThreads = 10;

std::vector<std::thread> threads;

std::cout << "Creating threads...\n";

for (int i = 0; i < numThreads; i++)

threads.push_back(std::thread(func1, i));

std::cout << "All threads have launched!\n";

std::cout << "Synchronizing...\n";

for (auto& thread : threads)

thread.join();

std::cout << "All threads have synchronized!\n";

return 0;

}

Since all threads are using the std::cout stream, the output can appear jumbled and out of order. The solution to this problem will be presented in the next section.

Creating threads... Index: 0 - ID: Index: 1 - ID: Index: 2 - ID: 0x70000b57e000 0x70000b4fb000 0x70000b601000Index: 3 - ID: 0x70000b684000 Index: 4 - ID: 0x70000b707000 Index: 5 - ID: 0x70000b78a000 Index: 6 - ID: 0x70000b80d000 Index: 7 - ID: 0x70000b890000 Index: All threads have launched! 8 - ID: 0x70000b913000 Index: Synchronizing... 9 - ID: 0x70000b996000 All threads have synchronized!

How Synchronization Works

Synchronization With OpenMP

critical

#include <iostream>

#include <omp.h>

int main()

{

#pragma omp parallel

{

int tid = omp_get_thread_num();

#pragma omp critical

std::cout << "Hi from thread "<< tid << '\n';

}

return 0;

}

Using the critical construct, we limit access to the stream to one thread at a time. critical defines a region that only one thread is allowed to execute at a time. In this case it is the statement writing to cout that we limit to one thread. The revised code now produces output like this:

Hi from thread 0
Hi from thread 1
Hi from thread 2
Hi from thread 3

parallel for

In OpenMP, a for loop can be parallelized by combining the parallel construct with the for construct (parallel for). The iterations are automatically distributed among the threads.

Example:

void simple(int n, float *a, float *b) {

int i;

#pragma omp parallel for

for (i = 1; i < n; i++)

b[i] = (a[i] + a[i-1]) / 2.0;

}

Synchronization with C++11

mutex

To allow for thread synchronization, we can use the mutex library to lock specific sections of code from being used by multiple threads at once.

// cpp11.mutex.cpp

#include <iostream>

#include <vector>

#include <thread>

#include <mutex>

std::mutex mu;

void func1(int index) {

std::lock_guard<std::mutex> lock(mu);

// mu.lock();

std::cout << "Index: " << index << " - ID: " << std::this_thread::get_id() << std::endl;

// mu.unlock();

}

int main() {

int numThreads = 10;

std::vector<std::thread> threads;

std::cout << "Creating threads...\n";

for (int i = 0; i < numThreads; i++)

threads.push_back(std::thread(func1, i));

std::cout << "All threads have launched!\n";

std::cout << "Synchronizing...\n";

for (auto& thread : threads)

thread.join();

std::cout << "All threads have synchronized!\n";

return 0;

}

Using mutex, we can lock the section of code that touches shared data so that only one thread executes it at a time (mutual exclusion). std::lock_guard locks the mutex on construction and unlocks it automatically when it goes out of scope, which replaces the manual mu.lock() and mu.unlock() calls shown in the comments. This is similar to OpenMP's critical in that it only allows one thread to execute a block of code at a time.

Creating threads...
Index: 0 - ID: 0x70000aa29000
Index: 4 - ID: 0x70000ac35000
Index: 5 - ID: 0x70000acb8000
Index: 1 - ID: 0x70000aaac000
Index: 6 - ID: 0x70000ad3b000
Index: 7 - ID: 0x70000adbe000
Index: 8 - ID: 0x70000ae41000
Index: 3 - ID: 0x70000abb2000
All threads have launched!
Synchronizing...
Index: 9 - ID: 0x70000aec4000
Index: 2 - ID: 0x70000ab2f000
All threads have synchronized!

How Data Sharing Works

Data Sharing With OpenMP

In OpenMP, variables declared outside a parallel region are shared among the threads by default, as if passed by reference. Therefore, we must be careful how such data is handled within the parallel region when multiple threads access it at once.

For example:

#include <iostream>

#include <omp.h>

int main() {

int i = 12;

#pragma omp parallel

{

#pragma omp critical

std::cout << "\ni = " << ++i;

}

std::cout << "\ni = " << i << std::endl;

return 0;

}

Output:

i = 13
i = 14
i = 15
i = 16
i = 16

The output shows that after the parallel region closes, i holds a different value than it did originally, because the variable is shared inside and outside the parallel region. To pass the variable by value to each thread, we must make it non-shared. This is done with firstprivate(), a clause, which comes after a construct. firstprivate(i) gives each thread its own private copy of i, initialized with the value i had before the region.

For example:

#include <iostream>

#include <omp.h>

int main() {

int i = 12;

#pragma omp parallel firstprivate(i)

{

#pragma omp critical

std::cout << "\ni = " << ++i;

}

std::cout << "\ni = " << i << std::endl;

}

New Output:

i = 13
i = 13
i = 13
i = 13
i = 12

Here each thread starts with its own copy of i at 12 and increments it to 13. The last line of the output shows i = 12: the parallel region did not change the value of i outside the region.

Data Sharing with C++11

The C++11 thread library copies the arguments passed to a thread by default. To share data between threads, the programmer must explicitly pass a reference to it, wrapped in std::ref, or pass its address.

// cpp11.datasharing.cpp

#include <iostream>

#include <vector>

#include <thread>

#include <mutex>

std::mutex mu;

void func1(int value) {

std::lock_guard<std::mutex> lock(mu);

std::cout << "func1 start - value = " << value << std::endl;

value = 0;

std::cout << "func1 end - value = " << value << std::endl;

}

void func2(int& value) {

std::lock_guard<std::mutex> lock(mu);

std::cout << "func2 start - value = " << value << std::endl;

value *= 2;

std::cout << "func2 end - value = " << value << std::endl;

}

int main() {

int numThreads = 5;

int value = 1;

std::vector<std::thread> threads;

for (int i = 0; i < numThreads; i++) {

if (i == 2) threads.push_back(std::thread(func1, value));

else threads.push_back(std::thread(func2, std::ref(value)));

}

for (auto& thread : threads)

thread.join();

return 0;

}

func2 start - value = 1
func2 end - value = 2
func2 start - value = 2
func2 end - value = 4
func1 start - value = 1
func1 end - value = 0
func2 start - value = 4
func2 end - value = 8
func2 start - value = 8
func2 end - value = 16

How Synchronization Works Continued

Synchronization Continued With OpenMP

atomic

The atomic construct is OpenMP's way of making a single memory update, such as an increment, indivisible. Its advantage in the example below is that it performs the increment with less overhead than critical. atomic ensures that only that one operation is performed by one thread at a time.

#include <iostream>

#include <omp.h>

int main() {

int i = 0;

#pragma omp parallel num_threads(10)

{

#pragma omp atomic

i++;

}

std::cout << i << std::endl;

return 0;

}

10

Synchronization Continued with C++11

atomic

Another way to ensure synchronization of data between threads is to use the atomic library.

// cpp11.atomic.cpp

#include <iostream>

#include <vector>

#include <thread>

#include <atomic>

std::atomic<int> value(1);

void add() {

++value;

}

void sub() {

--value;

}

int main() {

int numThreads = 5;

std::vector<std::thread> threads;

for (int i = 0; i < numThreads; i++) {

if (i == 2) threads.push_back(std::thread(sub));

else threads.push_back(std::thread(add));

}

for (auto& thread : threads)

thread.join();

std::cout << value << std::endl;

return 0;

}

Operations on an atomic value are indivisible, so two threads can never interleave in the middle of ++value or --value. The effect is similar to guarding the variable with a mutex, except that the protection is provided by the atomic wrapper instead of by the programmer.

4

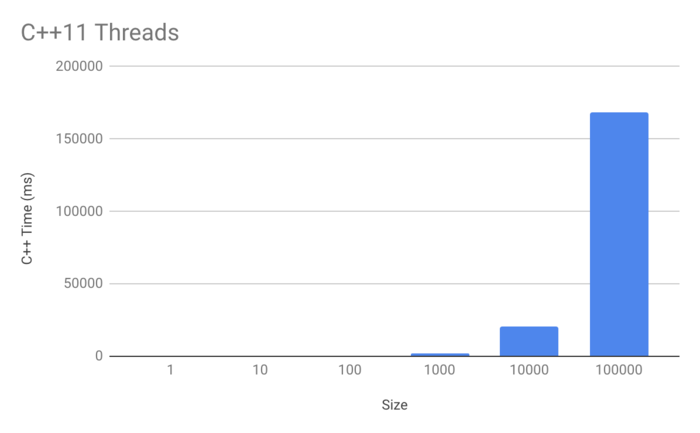

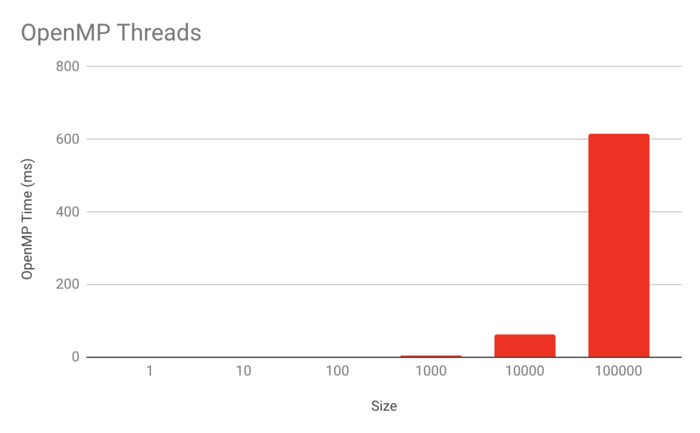

Thread Creation Test

#include <iostream>

#include <string>

#include <chrono>

#include <vector>

#include <thread>

#include <omp.h>

using namespace std::chrono;

void reportTime(const char* msg, int size, steady_clock::duration span) {

auto ms = duration_cast<milliseconds>(span);

std::cout << msg << "- size : " << std::to_string(size) << " - took - " << ms.count() << " milliseconds" << std::endl;

}

void empty() {}

void cpp(int size) {

steady_clock::time_point ts, te;

ts = steady_clock::now();

for (int i = 0; i < size; i++) {

std::vector<std::thread> threads;

for (int j = 0; j < 10; j++) threads.push_back(std::thread(empty));

for (auto& thread : threads) thread.join();

}

te = steady_clock::now();

reportTime("C++11 Threads", size, te - ts);

}

void omp(int size) {

steady_clock::time_point ts, te;

ts = steady_clock::now();

for (int i = 0; i < size; i++) {

#pragma omp parallel for num_threads(10)

for (int j = 0; j < 10; j++) empty();

}

te = steady_clock::now();

reportTime("OpenMP", size, te - ts);

}

int main() {

// Test C++11 Threads

cpp(1);

cpp(10);

cpp(100);

cpp(1000);

cpp(10000);

cpp(100000);

std::cout << std::endl;

// Test OpenMP

omp(1);

omp(10);

omp(100);

omp(1000);

omp(10000);

omp(100000);

return 0;

}

C++11 Threads- size : 1 - took - 1 milliseconds
C++11 Threads- size : 10 - took - 10 milliseconds
C++11 Threads- size : 100 - took - 125 milliseconds
C++11 Threads- size : 1000 - took - 1703 milliseconds
C++11 Threads- size : 10000 - took - 20760 milliseconds
C++11 Threads- size : 100000 - took - 168628 milliseconds

OpenMP- size : 1 - took - 0 milliseconds
OpenMP- size : 10 - took - 0 milliseconds
OpenMP- size : 100 - took - 0 milliseconds
OpenMP- size : 1000 - took - 6 milliseconds
OpenMP- size : 10000 - took - 62 milliseconds
OpenMP- size : 100000 - took - 616 milliseconds