GPU621/Analyzing False Sharing


Group Members

Preface

In multicore concurrent programming, if contention on mutual-exclusion locks is a "performance killer", then false sharing is a "performance assassin". The difference is that a killer is visible: when we meet one, we can choose to fight, run, take a detour, or beg for mercy. An assassin hides in the shadows and waits for the chance to strike a fatal blow, which makes it very hard to guard against. When lock contention hurts concurrency performance, we can respond with well-known measures (such as shortening the critical section or using atomic operations). False sharing, by contrast, is not visible in the code we write, so the problem is hard to find and therefore hard to fix. It stays "in the dark", seriously dragging down concurrency performance while giving us little to act on.

What to know before understanding false sharing

Cache Lines

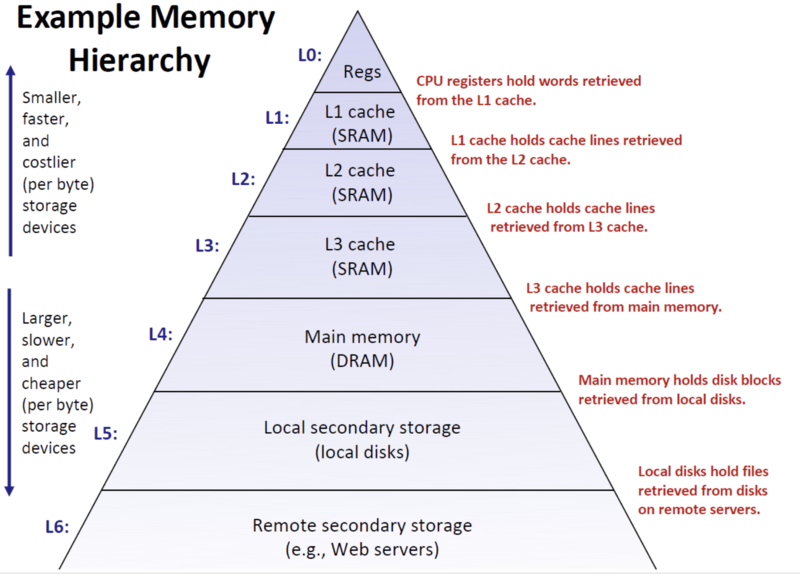

To follow the discussion below, we first need to be familiar with the concept of cache lines. Students who have studied storage architecture in an OS course will remember the pyramid model of the memory hierarchy: moving from top to bottom, the storage medium becomes cheaper and larger; moving from bottom to top, access becomes faster. At the top of the pyramid are the CPU registers, followed by the CPU caches (L1, L2, L3), then main memory, and at the bottom the disk. Computer systems use this hierarchy mainly to bridge the gap between the high speed of the CPU and the low speed of memory and disk. The CPU keeps recently used data in the cache, so the next time the same data is accessed it can be read directly from the faster CPU cache instead of from memory or disk, which would slow down overall execution.

The smallest unit of the CPU cache is the cache line. Its size depends on the architecture; the most common sizes are 64 bytes and 32 bytes. The cache transfers data in units of whole cache lines: each read brings in the entire cache line that contains the requested data, so adjacent data is cached as well, even if it is never used.
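To make the cache line granularity concrete, the following minimal sketch maps addresses to cache line indices. It assumes a 64-byte cache line (the actual size depends on the CPU), and the names `kCacheLineSize`, `cache_line_of`, `same_cache_line`, `AlignedInts`, and `print_layout` are hypothetical helpers introduced only for this illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <iostream>

// Assumption: 64-byte cache lines. This is common on x86-64,
// but other architectures may use a different size.
constexpr std::size_t kCacheLineSize = 64;

// Hypothetical helper: index of the cache line containing address p.
std::uintptr_t cache_line_of(const void* p) {
    return reinterpret_cast<std::uintptr_t>(p) / kCacheLineSize;
}

// Hypothetical helper: true if both addresses fall in the same cache line.
bool same_cache_line(const void* a, const void* b) {
    return cache_line_of(a) == cache_line_of(b);
}

// An array whose first element starts exactly on a cache line boundary.
// With 4-byte elements, 16 of them fit in one assumed 64-byte line.
struct alignas(kCacheLineSize) AlignedInts {
    std::int32_t values[32];
};

// Prints which (relative) cache line each element of the array lands on.
void print_layout(const AlignedInts& data) {
    const std::uintptr_t first = cache_line_of(&data.values[0]);
    for (std::size_t i = 0; i < 32; ++i) {
        std::cout << "values[" << i << "] -> line "
                  << (cache_line_of(&data.values[i]) - first) << '\n';
    }
}
```

Calling `print_layout` on an `AlignedInts` object from `main` should, under the 64-byte assumption, report `values[0]` through `values[15]` on relative line 0 and `values[16]` through `values[31]` on relative line 1. Reading any one element of a line therefore brings its 15 neighbours into the cache as well.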