GPU610/DPS915 Team 7 Project Page

GPU610/DPS915 | Student List | Group and Project Index | Student Resources | Glossary

Contents

Team 7

Team Members

Assignment 1

Creating ray-traced images by Alek Minassian

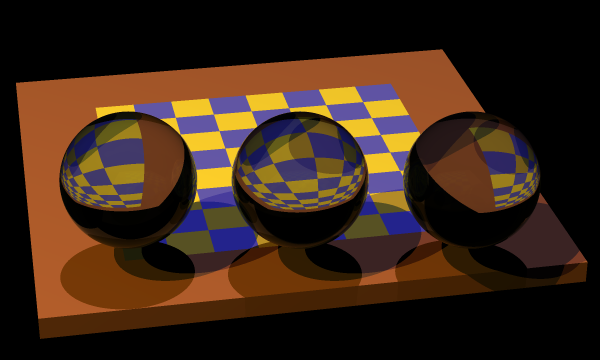

This is an open-source program available from here. This program can be built and run on both Windows and Linux. A copy of the source code in ZIP format is available in the section below as well as instructions for building and running. The program works by creating one or more objects and placing them on a "Scene". It then adds one or more light sources and traces them as they reflect off or refract through objects. As an example, the following chessboard is generated as follows:

- A chessboard is created, rotated, and added to the scene.

- Three spheres are created and added to the scene.

- Three light sources are added.

- Finally, the new image is saved.

Downloading the source code

A modified version of the project is available from here. The following modifications were made:

- The raytrace/raytrace/build file used for building on Linux is modified to enable profiling as well as using version 7.2.0 of g++ on Matrix.

- The Visual Studio solution raytrace/raytrace.sln is upgraded to Visual Studio 2017.

- Some code has been added to scene.cpp in order to report the time spent in certain parts of the code. This is so that we can get a more granular timing than what is available through profiling.

Building and running on Windows

- Unzip the source from the previous step and open raytrace/raytrace.sln.

- Set the active configuration and platform:

- From the "Build" menu item, select "Configuration Manager..."

- Change the Active solution platform to be x64.

- Change the Active solution configuration to be Release.

- If you run into build issues, you may have to re-target the solution as follows:

- In the Solution Explorer pane, right-click on the solution and select "Retarget solution".

- Select the latest Windows SDK version available to you.

- You can now build the solution from the build menu.

- Run the program by selecting "Start Without Debugging" from the Debug menu. You should now see the following screen:

- The chessboard image chessboard.png, that was displayed above, is now generated and can be found in the raytrace/raytrace folder.

Building and running on Linux (Matrix)

- Download the source: curl https://wiki.cdot.senecacollege.ca/w/imgs/Raytrace.a1.zip -o rtsource.zip

- Unzip the source: unzip rtsource.zip

- cd raytrace/raytrace

- chmod a+x build

- Build the source code: ./build

- Run the application: ./raytrace chessboard

Profiling and Analysis

The following shows the results of profiling in Visual Studio:

Profiling on Matrix

The results of the profiling done on Matrix can be found in this flat profile and this call graph.

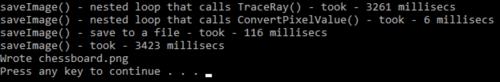

The following shows some timings reported by code that was added to the application.

Analysis of the code shows that main calls ChessBoardTest which calls SaveImage. Based on the profiling results above, the application spends the majority of its time in SaveImage. Analyzing the code also shows that SaveImage calls TraceRay in a nested loop as follows:

for (size_t i=0; i < largePixelsWide; ++i)

{

......

for (size_t j=0; j < largePixelsHigh; ++j)

{

......

TraceRay();

......

}

}

The remainder of the functions where the majority of the time is spent are called from TraceRay. The timing statements added to the code show that 3261 milliseconds are spent in this nested loop. The total time spent in the application is 3658 milliseconds. Therefore, we can conclude that the majority of the time is spent in the above nested loop which is 89%. Since one iteration of the loop does not depend on another iteration, the calls to TraceRay can be parallelized. Assuming an Nvidia GPU with 192 cores (Nvidia Quadro K1000M), Amdahl's Law predicts a speedup by a factor of 8.72:

S192 = 1 / ( 1 - 0.89 + 0.89 / 192 ) = 8.72

Sudoku Solver by

This is an open source project that I found on someone's github page which can be found here. This program can be compiled with the GNU C++ compiler.

The program works by first defining what the sudoku board looks like. It sets each value. It checks a value and makes sure it fits based on Sudoku rules. Everytime a value is set, we backtrack to ensure that the rules are kept across the board.

The main chunk of code that seemily would run the longest would be in the verifyValue function.

for (int y_verify=box_y * 3; y_verify < box_y * 3 + 3; y_verify++) {

// For each x in the same box

for (int x_verify=box_x * 3; x_verify < box_x * 3 + 3; x_verify++) {

// Skip self.

if (x_verify == x_cord && y_verify == y_cord) {

continue;

}

// If same value, failed

int verifyValue = board[x_verify][y_verify];

if (verifyValue == value) {

return false;

}

}

}

This part runs at O(xy) time complexity.

Profiling and Call Graph

After further analysis, the initial solution is already fast.

Flat profile: Each sample counts as 0.01 seconds.

no time accumulated

% cumulative self self total time seconds seconds calls Ts/call Ts/call name 0.00 0.00 0.00 44317 0.00 0.00 SudokuPuzzle::verifyValue(int, int) 0.00 0.00 0.00 1 0.00 0.00 _GLOBAL__sub_I__ZN12SudokuPuzzleC2Ev 0.00 0.00 0.00 1 0.00 0.00 _GLOBAL__sub_I_main 0.00 0.00 0.00 1 0.00 0.00 SudokuPuzzle::solve(int, int)

Call graph

granularity: each sample hit covers 4 byte(s) no time propagated

index % time self children called name

0.00 0.00 44317/44317 SudokuPuzzle::solve(int, int) [10]

[7] 0.0 0.00 0.00 44317 SudokuPuzzle::verifyValue(int, int) [7]

0.00 0.00 1/1 __do_global_ctors_aux [18]

[8] 0.0 0.00 0.00 1 _GLOBAL__sub_I__ZN12SudokuPuzzleC2Ev [8]

0.00 0.00 1/1 __do_global_ctors_aux [18]

[9] 0.0 0.00 0.00 1 _GLOBAL__sub_I_main [9]

4701 SudokuPuzzle::solve(int, int) [10]

0.00 0.00 1/1 SudokuPuzzle::solve() [15]

[10] 0.0 0.00 0.00 1+4701 SudokuPuzzle::solve(int, int) [10]

0.00 0.00 44317/44317 SudokuPuzzle::verifyValue(int, int) [7]

4701 SudokuPuzzle::solve(int, int) [10]

Index by function name

[8] _GLOBAL__sub_I__ZN12SudokuPuzzleC2Ev (SudokuPuzzle.cpp) [7] SudokuPuzzle::verifyValue(int, int) [9] _GLOBAL__sub_I_main (main.cpp) [10] SudokuPuzzle::solve(int, int)

Assessment

This code would not benefit from parallelism as it is already fast, and each result relies on a previous result. This would make the code incredibly complex to parallelize and it would not benefit as such. Perhaps if the Sudoku board was larger than 9x9 the solution could be faster.

Image Processing by

I found a sample of image processing code located here: http://www.dreamincode.net/forums/topic/76816-image-processing-tutorial/.

The code uses PGM files (P5 type is the standard for the code). For more information about PGM files, here is a link: http://netpbm.sourceforge.net/doc/pgm.html.

There were a few functions that I felt could be parallelized a significant amount. These functions were enlargeImage, reflectImage, and rotateImage.

Functions

Enlarge Image

int rows, cols, gray;

int pixel;

int enlargeRow, enlargeCol;

rows = oldImage.N * value;

cols = oldImage.M * value;

gray = oldImage.Q;

Image tempImage(rows, cols, gray);

for(int i = 0; i < oldImage.N; i++)

{

for(int j = 0; j < oldImage.M; j++)

{

pixel = oldImage.pixelVal[i][j];

enlargeRow = i * value;

enlargeCol = j * value;

for(int c = enlargeRow; c < (enlargeRow + value); c++)

{

for(int d = enlargeCol; d < (enlargeCol + value); d++)

{

tempImage.pixelVal[c][d] = pixel;

}

}

}

}

oldImage = tempImage;

Reflect Image

int rows = oldImage.N;

int cols = oldImage.M;

Image tempImage(oldImage);

if(flag == true) //horizontal reflection

{

for(int i = 0; i < rows; i++)

{

for(int j = 0; j < cols; j++)

tempImage.pixelVal[rows - (i + 1)][j] = oldImage.pixelVal[i][j];

}

}

else //vertical reflection

{

for(int i = 0; i < rows; i++)

{

for(int j = 0; j < cols; j++)

tempImage.pixelVal[i][cols - (j + 1)] = oldImage.pixelVal[i][j];

}

}

oldImage = tempImage;

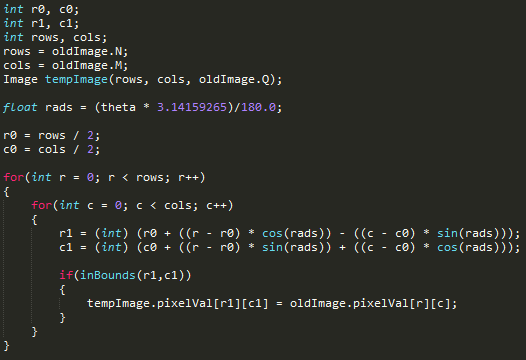

Rotate Image

int r0, c0;

int r1, c1;

int rows, cols;

rows = oldImage.N;

cols = oldImage.M;

Image tempImage(rows, cols, oldImage.Q);

float rads = (theta * 3.14159265)/180.0;

r0 = rows / 2;

c0 = cols / 2;

for(int r = 0; r < rows; r++)

{

for(int c = 0; c < cols; c++)

{

r1 = (int) (r0 + ((r - r0) * cos(rads)) - ((c - c0) * sin(rads)));

c1 = (int) (c0 + ((r - r0) * sin(rads)) + ((c - c0) * cos(rads)));

if(inBounds(r1,c1))

{

tempImage.pixelVal[r1][c1] = oldImage.pixelVal[r][c];

}

}

}

for(int i = 0; i < rows; i++)

{

for(int j = 0; j < cols; j++)

{

if(tempImage.pixelVal[i][j] == 0)

tempImage.pixelVal[i][j] = tempImage.pixelVal[i][j+1];

}

}

oldImage = tempImage;

Profiling

I started with a PGM file that contained 512 width and height (in ASCII decimal), and 255 gray value (in ASCII decimal). This totaled to 257 KB as the file size.

I enlarged the image by 5 times its original size. I then reflected the image horizontally. Finally, I rotated the image 90 degrees.

real 0m35.904s user 0m0.520s sys 0m22.781s

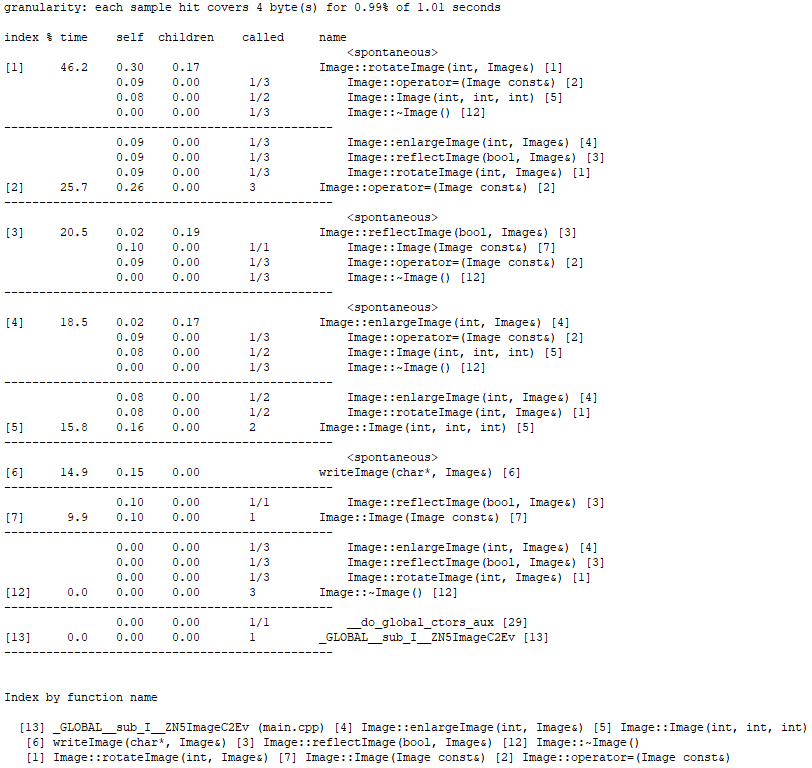

Flat Profile

Each sample counts as 0.01 seconds.

% cumulative self self total time seconds seconds calls ms/call ms/call name 29.70 0.30 0.30 Image::rotateImage(int, Image&) 25.74 0.56 0.26 3 86.67 86.67 Image::operator=(Image const&) 15.84 0.72 0.16 2 80.00 80.00 Image::Image(int, int, int) 14.85 0.87 0.15 writeImage(char*, Image&) 9.90 0.97 0.10 1 100.00 100.00 Image::Image(Image const&) 1.98 0.99 0.02 Image::enlargeImage(int, Image&) 1.98 1.01 0.02 Image::reflectImage(bool, Image&) 0.00 1.01 0.00 3 0.00 0.00 Image::~Image() 0.00 1.01 0.00 1 0.00 0.00 _GLOBAL__sub_I__ZN5ImageC2Ev

Call Graph

Assessment

Judging from the flat profile and call graph, rotateImage takes the longest to complete. EnlargeImage and reflectImage both take less time to complete than rotateImage. They also are completed at very similar times. Therefore, the best function to look into parallizing would be the rotateImage function.

Final Decision

The group has decided to proceed with the "Creating ray-traced images" application as it has good potential for parallelization. Although the total time taken to generate one image is somewhat fast already (3.6 seconds), however, if generating multiple images (10, 100, or even more), then parallelization would offer great savings in the total time.

Update: After reaching the above decision, the team started looking into the effort required to parallelize the program. The TraceRay() method called in the nested loop is a member of the Scene class which has several lists of objects that are created on the heap. It is a major effort to copy these to the device as there are many dependent classes. In addition, the program uses the standard template library which would have to be converted to be using the Thrust library. Given the large number of classes involved, the effort required to do these is big and error-prone. As a result, the team has now decided to work on the Image Processing program.

Assignment 2

Rotate Image

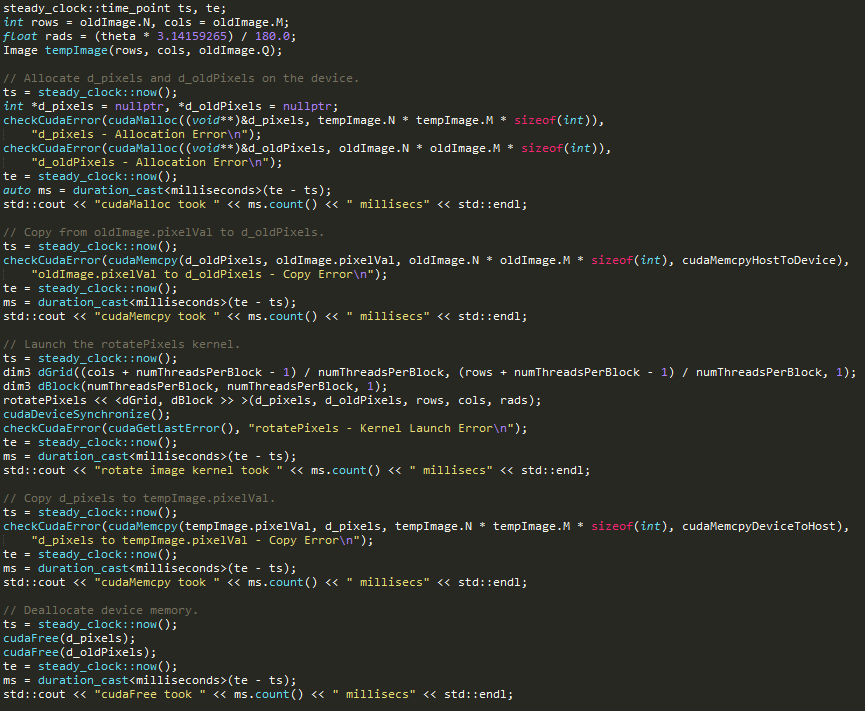

The following code shows part of the serial version of the rotateImage method. The parallelized version of this code is also shown below.

Serial Version:

Parallelized Version:

The following shows the kernel definition as well as launching of the kernel. Another modification that was made to the serial version was to convert pixelVal (a member of the Image class) from an int** to an int* and allocate it as a linearized matrix. This was done in order to make it easier to do the allocations and the copying to the device.

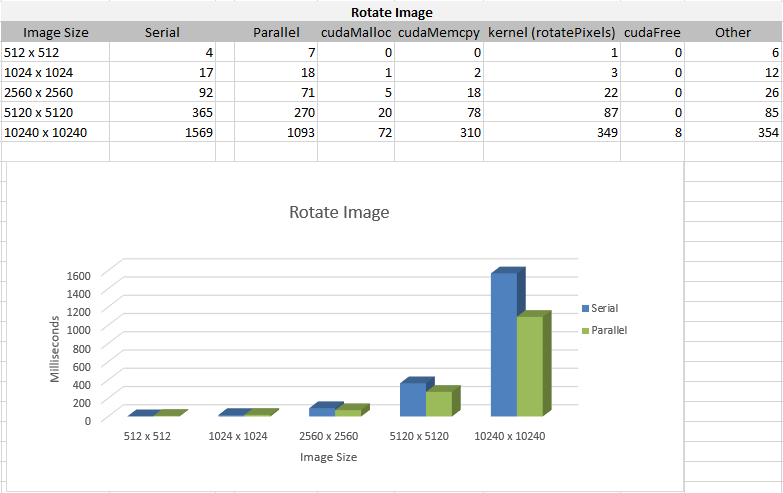

The following table shows the timings (in milliseconds) for various image sizes for the serialized and parallelized versions of the program. The parallelized version of the code is faster for larger image sizes as can be seen in the graph below.

Assignment 3

For the Optimization Phase, multiple techniques were considered but not all of them worked. For example:

- Using shared memory does not help since dst is only being assigned to once and src is only being accessed once.

- Considered using constant memory for the source array, however, because of the max limitation of constant memory, which is 65536, I was not able to allocate enough space to accommodate for large images.

- There is one if-statement in the kernel (if (inBounds(r1, c1, maxRows, maxCols))) that has the potential for thread divergence. Removing that if-statement, however, for angles that are not multiples of 90 degrees, will result in a memory access exception as some of the pixels would be rotated out of the original matrix. There are ways of avoiding this exception. For example, you could use a destination matrix that is large enough to hold the rotated image but then when saving, only save based on the boundaries of the original image, as illustrated in the figure below. However, first I removed the if-statement and rotated by 90 degrees, and there was only a slight improvement in the timing. Therefore, it was not worth implementing a method such as this in order to remove the if-statement.

- Tried using pre-fetching by changing this statement (dst[r1 * maxCols + c1] = src[r * maxCols + c];) to dst[r1 * maxCols + c1] = srcVal; and adding int srcVal = src[r * maxCols + c]; before other statements. However, this did not result in any timing improvements.

The techniques that improved timings are discussed below:

Changed access to matrix of pixels in global memory from column-major to row-major so that memory accessed is coalesced. This resulted in timing improvements as shown below. The timing for the kernel has gone down from 349 ms to 206 ms.

In the kernel, the two calls to sin and cos were replaced by a single call to __sincosf(rads, &sine, &cosine); which calculated both the sine and the cosine at the same time. This resulted in timing improvements as shown below. The timing for the kernel has gone down from 206 ms to 179 ms.

The initial block size was 32 x 32 = 1024 which on devices with compute capability 3.0 is the maximum number of threads per block. In an attempt to find an optimal block size, I tried the following block sizes and calculated their thread occupancy using the CUDA Occupancy Calculator spreadsheet:

- 8 x 8: 50% thread occupancy

- 16 x 16: 100% thread occupancy

- 24 x 24: 84% thread occupancy

- 32 x 32: 100% thread occupancy

Running the tests with the various block sizes revealed that the optimal block size is 16 x 16, timings below. The timing for the kernel has gone down from 179 ms to 106 ms. Observation: Although block sizes of 16 x 16 and 32 x 32 both have 100% thread occupancy, the 16 x 16 turned out to be optimal. This is probably due to the fact that less warps are competing for access to global memory.

The following image shows the final optimized kernel:

The following shows the comparison between the serial version vs parallelized and optimized version:

The final version of the code is available from here. To run the program, clone the repository and open the Visual Studio solution. If prompted to retarget, click Cancel. The project folder has an ZIP file containing some sample images. These should be extracted into the project folder. To run the program, you need to specify two arguments: the name of the source file and the name of the target file.