Alpha Centauri

"The world is one big data problem." (Andrew McAfee)


Intel Data Analytics Acceleration Library

Team Members

Introduction

Intel Data Analytics Acceleration Library, also known as Intel DAAL, is a library created by Intel in 2015 to solve problems associated with Big Data. Intel DAAL is available for Linux, OS X, and Windows, and it supports the C++, Python, and Java programming languages. Intel DAAL is optimized to run on a wide range of devices, from home computers to data centers, and it uses vectorization to deliver high performance.

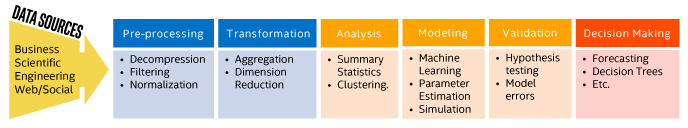

Intel DAAL helps speed big data analytics by providing highly optimized algorithmic building blocks for all data analysis stages and by supporting different processing modes.

The data analysis stages covered are:

- Pre-processing

- Transformation

- Analysis

- Modeling

- Validation

- Decision Making

The different processing modes are:

- Batch processing - Data is stored in memory and processed all at once.

- Online processing - Data is processed in chunks, and the partial results are combined during the finalizing stage. This is also called streaming.

- Distributed processing - Similar to MapReduce: consumers in a cluster process local data (map stage), and then the producer process collects and combines the partial results from the consumers (reduce stage). Developers can rely on the data movement provided by a framework such as Hadoop or Spark, or code the communication explicitly using MPI.

How Intel DAAL Works

Intel DAAL comes pre-bundled with Intel® Parallel Studio XE and Intel® System Studio. It is also available as a stand-alone version and can be installed by following these instructions.

Intel DAAL is a simple and efficient solution for problems related to Big Data, Machine Learning, and Deep Learning.

This is because it implements the complex and tedious algorithms for you, so software developers only have to feed in the data and follow the Data Analytics Ecosystem Flow.

The following pictures show how Intel DAAL works in greater detail.

This picture shows the Intel DAAL Model (Data Management, Algorithms, and Services).

This model covers all the functionality that Intel DAAL offers, from acquiring the data to making a final decision.

This picture shows the Data Flow in Intel DAAL.

The picture shows the data being fed to the program and all the steps that Intel DAAL goes through when processing the data.

This picture shows how Intel DAAL processes the data.

Intel DAAL in handwritten digit recognition

Handwritten digit recognition is a classic machine learning problem. Intel DAAL is well suited to it because it provides several relevant algorithms, such as Support Vector Machine (SVM), Principal Component Analysis (PCA), Naïve Bayes, and Neural Networks. Below is an example that uses SVM to solve this problem.

Recognition is essentially the prediction or inference stage in the machine learning pipeline. In simple words, given a handwritten digit, the system should be able to recognize or infer what digit was written. For a system to be able to predict the output with a given input, it needs a trained model learned from the training data set that provides the system with the capability to make an inference or prediction. The first step before constructing a training model is to collect training data.

Loading Data in Intel DAAL

Setting Training and Testing Files:

```cpp
string trainDatasetFileName = "digits_tra.csv";
string trainGroundTruthFileName = "digits_tra_labels.csv";
string testDatasetFileName = "digits_tes.csv";
string testGroundTruthFileName = "digits_tes_labels.csv";
```

The object trainDataSource is a FileDataSource that uses a CSVFeatureManager to load the data from a CSV file into memory.

```cpp
FileDataSource<CSVFeatureManager> trainDataSource(trainDatasetFileName,
    DataSource::doAllocateNumericTable, DataSource::doDictionaryFromContext);
```

In memory, the data is stored as a numeric table. Because DataSource::doAllocateNumericTable is passed to the constructor, the table is allocated automatically. Load the data from the CSV file by calling the member function loadDataBlock():

```cpp
trainDataSource.loadDataBlock(nTrainObservations);
```

Training Data in Intel DAAL

With the training data in memory, DAAL can use it for training. The training data numeric table, returned by trainDataSource.getNumericTable(), is passed to an algorithm as input.

First, create the binary SVM training algorithm that the multi-class classifier uses internally:

```cpp
services::SharedPtr<svm::training::Batch<> > training(new svm::training::Batch<>());
```

Create the algorithm object for multi-class SVM training:

```cpp
multi_class_classifier::training::Batch<> algorithm;
algorithm.parameter.nClasses = nClasses;
algorithm.parameter.training = training;
```

Pass the training dataset and the dependent values (the digit labels) to the algorithm:

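```cpp
FileDataSource<CSVFeatureManager> trainGroundTruthSource(trainGroundTruthFileName, DataSource::doAllocateNumericTable, DataSource::doDictionaryFromContext);
trainGroundTruthSource.loadDataBlock(nTrainObservations);
algorithm.input.set(classifier::training::data, trainDataSource.getNumericTable());
algorithm.input.set(classifier::training::labels, trainGroundTruthSource.getNumericTable());
```

Build the multi-class SVM model by calling compute() on the algorithm: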

```cpp
algorithm.compute();
```

Retrieve the algorithm results and store them in the trainingResult object:

```cpp
trainingResult = algorithm.getResult();
```

Serialize the learned model to a file on disk. The trained model stored in trainingResult is written to ./model:

```cpp
ModelFileWriter writer("./model");
writer.serializeToFile(trainingResult->get(classifier::training::model));
```

Testing The Trained Model

First, create the binary SVM prediction algorithm that the multi-class classifier will use:

```cpp
services::SharedPtr<svm::prediction::Batch<> > prediction(new svm::prediction::Batch<>());
```

Next, initialize testDataSource and load the test data from the CSV file. Then run the multi-class SVM prediction on it. This code declares another variable named algorithm, so it must live in a different function or scope from the training code.

```cpp
/* Initialize FileDataSource<CSVFeatureManager> to retrieve the test data from the .csv file */
FileDataSource<CSVFeatureManager> testDataSource(testDatasetFileName,
    DataSource::doAllocateNumericTable,
    DataSource::doDictionaryFromContext);
testDataSource.loadDataBlock(nTestObservations);

/* Create algorithm object for prediction of multi-class SVM values */
multi_class_classifier::prediction::Batch<> algorithm;
algorithm.parameter.nClasses = nClasses;  /* same number of classes as in training */
algorithm.parameter.prediction = prediction;

/* Pass testing dataset and trained model to the algorithm */
algorithm.input.set(classifier::prediction::data, testDataSource.getNumericTable());
algorithm.input.set(classifier::prediction::model,
    trainingResult->get(classifier::training::model));

/* Predict multi-class SVM values */
algorithm.compute();

/* Retrieve algorithm results */
predictionResult = algorithm.getResult();
```