Team Darth Vector


Join me, and together we can fork the problem as master and thread

Members

Alistair Godwin

Giorgi Osadze

Leonel Jara


Generic Programming

Generic programming is an approach to writing code that makes algorithms reusable while keeping type-specific code to a minimum. An example is the STL's function templates, which can be used with many different types without extra code for each type (a single addition template could serve int, double, float, short, etc. without being re-coded). A non-generic library requires the types to be specified, meaning more type-specific code has to be written.
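As a minimal sketch of the addition example above, a single function template can serve every arithmetic type. The names `add` and `check_add` are made up for illustration and are not part of the STL.

```cpp
#include <cassert>

// One generic definition; the compiler generates a version per type used.
template <typename T>
T add(T a, T b) {
    return a + b;
}

// A non-generic library would need one function per type instead, e.g.
// int add_int(int, int); double add_double(double, double); and so on.

// Small self-check showing the same template used with several types.
inline void check_add() {
    assert(add(2, 3) == 5);          // T deduced as int
    assert(add(1.5, 2.25) == 3.75);  // T deduced as double
    assert(add<short>(4, 6) == 10);  // T given explicitly
}
```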

TBB Background

Threading Building Blocks (TBB) is an attempt by Intel to push the development of multi-threaded programs. The library implements containers and algorithms that make it easier for the programmer to create multi-threaded applications, including parallel versions of the for, reduce and scan patterns. TBB was originally developed by Intel, but the concepts it uses are derived from a diverse range of sources. TBB was created in 2004 and open sourced in 2007; at the time of writing, the latest release was in 2017.

STL Background

The STL was created as a general-purpose computation library with a focus on generic programming. The STL uses templates extensively to achieve compile-time polymorphism. In general, the library provides four components: algorithms, containers, functions and iterators.

The library was created mostly by Alexander Stepanov, driven by his ideas about generic programming and its potential to revolutionize software development. Stepanov chose C++ because it provides access to storage through pointers, even though the language was still relatively young at the time.

After a long period of engineering and development of the library, it obtained final approval in July 1994 to become part of the language standard.

List of STL Functions:

Algorithms: The STL provides algorithms for tasks such as sorting, searching and accumulation. Most are found in the header "<algorithm>"; accumulate() is found in "<numeric>". Examples include the sort() and reverse() functions.
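A short sketch of these algorithms in use follows. The helper name `sort_reverse_sum` is hypothetical; `std::sort` and `std::reverse` come from `<algorithm>`, while `std::accumulate` comes from `<numeric>`.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Sorts the values, reverses them, and returns their sum.
inline int sort_reverse_sum(std::vector<int>& v) {
    std::sort(v.begin(), v.end());     // ascending order
    std::reverse(v.begin(), v.end());  // now descending order
    // Sanity check: a descending sequence is sorted when read backwards.
    assert(std::is_sorted(v.rbegin(), v.rend()));
    return std::accumulate(v.begin(), v.end(), 0);  // sum, starting from 0
}
```

The same three calls work unchanged on other containers with suitable iterators, which is the generic programming idea described earlier.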

Iterators: The STL provides iterators for serial traversal. Should you use an iterator in parallel, you must be careful not to change the data while a thread is going through the container. They are defined within the header "<iterator>" and are coded as:

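#include <iterator>

#include <vector>

void bar(); // defined elsewhere

void foo(){

std::vector<int> myVector;

std::vector<int>::iterator i;

// Compare with != so the same loop works for every iterator category

for( i = myVector.begin(); i != myVector.end(); i++){

bar();

}

}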

Containers: The STL supports a variety of containers for data storage. Generally these containers can be read safely by several threads in parallel, but they do not safely support writing to the container, with or without reading at the same time. The containers live in several header files, such as "<vector>", "<queue>", and "<deque>". They are coded as:

#include <vector>

#include <deque>

#include <queue>

int main(){

std::vector<int> myVector;

std::deque<int> cards;

std::queue<int> SenecaYorkTimHortons;

}

List of TBB containers

concurrent_queue : This is the concurrent version of the STL container queue. This container supports first-in-first-out data storage like its STL counterpart. Multiple threads may simultaneously push and pop elements from the queue. It does NOT support front() or back(), because the front could change while it is being accessed in concurrent operations. It also supports iteration, but this is slow and only intended for debugging. This is defined within the header "tbb/concurrent_queue.h" and is coded as:

#include <tbb/concurrent_queue.h>

//...//

tbb::concurrent_queue<typename> name;

concurrent_vector : This is a container class for vectors with concurrent (parallel) support. These vectors do not support insert or erase operations, but elements can be appended and accessed by multiple threads at once. Note that once elements are inserted, they cannot be removed without calling the clear() member function, which removes every element in the vector. This is defined within the header "tbb/concurrent_vector.h" and is coded as:

#include <tbb/concurrent_vector.h>

//...//

tbb::concurrent_vector<typename> name;

concurrent_hash_map : A container class for a hash table that supports concurrent access. The keys are not ordered and there is at most 1 element for each key. This is defined within the header "tbb/concurrent_hash_map.h".

List of TBB Algorithms

parallel_for: Provides concurrent support for for loops. This allows the data to be divided up into chunks that each thread can work on. The code is defined in "tbb/parallel_for.h" and takes the form of:

tbb::parallel_for(firstPos, lastPos, increment, [&](int i){ boo(i); });
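To illustrate what dividing the data into chunks means, the sketch below splits an index range across a few std::thread workers by hand. It is only an illustration of the idea under assumed names (`chunked_for`, `numThreads`); it is not how TBB is implemented, and TBB's own scheduler is not reproduced here.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical helper: calls body(i) for every i in [first, last),
// giving each thread one contiguous chunk of the range.
inline void chunked_for(int first, int last, int numThreads,
                        const std::function<void(int)>& body) {
    assert(numThreads > 0);
    const int total = std::max(0, last - first);
    const int chunk = (total + numThreads - 1) / numThreads;  // round up
    std::vector<std::thread> workers;
    for (int t = 0; t < numThreads; ++t) {
        const int begin = std::min(last, first + t * chunk);
        const int end = std::min(last, begin + chunk);
        if (begin >= end) break;  // nothing left to hand out
        workers.emplace_back([begin, end, &body] {
            for (int i = begin; i < end; ++i) body(i);
        });
    }
    for (auto& w : workers) w.join();  // wait for every chunk to finish
}
```

Each index is visited exactly once. As long as every call to `body` touches a different element, as in `chunked_for(0, n, 4, [&](int i){ out[i] = i * i; })`, no lock is needed.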

parallel_scan: Provides concurrent support for a scan (prefix) operation. According to Intel, the parallel version may invoke the function up to twice as many times as the serial algorithm. The code is defined in "tbb/parallel_scan.h" and, according to Intel, takes the form of:

void parallel_scan( const Range& range, Body& body [, partitioner] );
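For readers unfamiliar with the pattern, a scan (prefix sum) replaces each element with the running total up to that position. The serial sketch below uses `std::partial_sum` from `<numeric>`; it shows the result a parallel scan has to reproduce, not how TBB computes it, and the function names are made up for illustration.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Serial inclusive scan: out[i] = in[0] + in[1] + ... + in[i].
inline std::vector<int> running_totals(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    std::partial_sum(in.begin(), in.end(), out.begin());
    return out;
}

// Small self-check with a hand-computed result.
inline void check_running_totals() {
    assert((running_totals({1, 2, 3, 4}) == std::vector<int>{1, 3, 6, 10}));
}
```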

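parallel_invoke: Runs several functions in parallel and returns once all of them have finished. The code is defined in "tbb/parallel_invoke.h" and is coded as:

tbb::parallel_invoke(myFuncA, myFuncB, myFuncC);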

Lock Convoying Problem

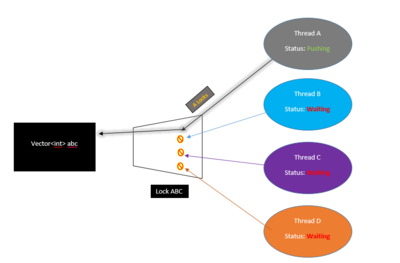

What is a Lock?

A lock (also called a "mutex") is a way for programmers to protect code that, when executed in parallel, could cause multiple threads to fight over a resource or container. When threads work in parallel on a task involving a container, there is no way to know when each thread will reach the container and need to perform an operation on it. This causes problems when multiple threads access the same place. When inserting into a container from several threads, we must ensure only 1 thread is able to push to it at a time, or else the threads may fight for control. By "locking" the container, we ensure only 1 thread accesses it at any given time.

To use a lock, your program must be working in parallel (e.g. #include <thread>) and should be completing some task in parallel. C++11 locks are found in #include <mutex>.


#include <iostream>

#include <thread>

#include <mutex>

class GameOfThronesClass {

std::mutex NightsWatch; // guards the counter below

int DaysWithoutWhiteWalkerAttack = 0;

public:

void GuardTheWall();

};

//Some threads are spawned which call this function

void GameOfThronesClass::GuardTheWall(){

//Protect until unlock() is called. Only 1 thread at a time may run the code below. It is "locked"

NightsWatch.lock();

//Increment

DaysWithoutWhiteWalkerAttack++;

std::cout << "It has been " << DaysWithoutWhiteWalkerAttack << " days since the last attack at Castle Black!\n";

//Allow the next thread to execute the code above

NightsWatch.unlock();

}

Note that there can be problems with locks. If a lock is taken but never released, any other threads will be forced to wait, which may cause performance issues or stall the program entirely. Another problem is called "deadlock", where two threads each wait for the other to release a lock, so neither can continue and the program waits forever.
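Both problems can be reduced with standard C++11 tools. The sketch below assumes two hypothetical accounts, each protected by its own mutex. `std::lock_guard` releases a mutex automatically when it goes out of scope, so an unlock cannot be forgotten, and `std::lock` acquires several mutexes together without deadlocking, even when two threads name them in opposite order.

```cpp
#include <cassert>
#include <mutex>
#include <thread>

// Hypothetical example type: a balance guarded by its own mutex.
struct Account {
    std::mutex m;
    int balance = 0;
};

// Moves `amount` between two different accounts while holding both locks.
inline void transfer(Account& from, Account& to, int amount) {
    // std::lock takes both mutexes using a deadlock-avoidance algorithm.
    std::lock(from.m, to.m);
    // adopt_lock: the guards take ownership of the already-held mutexes
    // and unlock them on scope exit, even if an exception is thrown.
    std::lock_guard<std::mutex> g1(from.m, std::adopt_lock);
    std::lock_guard<std::mutex> g2(to.m, std::adopt_lock);
    from.balance -= amount;
    to.balance += amount;
}

// Two threads transfer in opposite directions, the classic deadlock setup.
inline int run_transfers(int rounds) {
    Account a, b;
    a.balance = 1000;
    b.balance = 1000;
    std::thread t1([&] { for (int i = 0; i < rounds; ++i) transfer(a, b, 1); });
    std::thread t2([&] { for (int i = 0; i < rounds; ++i) transfer(b, a, 1); });
    t1.join();
    t2.join();
    assert(a.balance == 1000 && b.balance == 1000);  // transfers cancel out
    return a.balance + b.balance;
}
```

Had each thread locked its `from` mutex first and its `to` mutex second with plain lock() calls, the two threads could each hold one mutex and wait forever for the other.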

Parallelism Problems & Convoying in STL

Within the STL, issues arise when you attempt to access containers in parallel. When threads update a container, say with push_back, it is difficult to determine where the insertion occurred within the container, since each thread updates it in no particular order. Additionally, the size of the container is unknown, as each thread may be updating the size as it goes (thread A may see a size of 4 while thread B sees a size of 9). For example, suppose several threads push data to a vector: knowing where that data ended up requires us to iterate through the vector to find its exact location. If we attempt to find the data in parallel with other ongoing operations, 1 thread could be searching for the data while another updates the vector's size. This causes problems for the first thread's search, as the memory location may change should the vector need to grow (it copies its elements to new memory).
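The reallocation hazard can be observed even in serial code. Below is a minimal sketch, using a made-up helper name, that reports whether a vector's storage moved during a push_back. Any pointer or iterator taken before such a move no longer refers to the vector's elements.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Returns true if appending one element moved the vector's storage.
inline bool push_back_moves_storage(std::vector<int>& v) {
    // Addresses are stored as integers so no stale pointer is used later.
    const auto addressBefore = reinterpret_cast<std::uintptr_t>(v.data());
    const auto capacityBefore = v.capacity();
    v.push_back(0);
    const auto addressAfter = reinterpret_cast<std::uintptr_t>(v.data());
    // Storage can only move when the capacity had to grow.
    assert(v.capacity() != capacityBefore || addressAfter == addressBefore);
    return addressAfter != addressBefore;
}
```

Calling the helper on a vector whose size equals its capacity reports a move, while calling it after `reserve()` has set aside spare room does not.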

Locks can solve the above issue but cause significant performance issues as the threads are forced to wait for each other. This performance hit is known as Lock Convoying.
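A minimal sketch of the locking approach, assuming a plain std::vector shared by several std::thread workers (the helper name is made up). Every push_back is serialized by one mutex, which keeps the vector consistent but also means the threads spend much of their time waiting for each other.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Each of `numThreads` threads appends `perThread` values to one shared vector.
inline std::size_t locked_push_back(int numThreads, int perThread) {
    std::vector<int> shared;
    std::mutex guard;  // protects every access to `shared`
    std::vector<std::thread> workers;
    for (int t = 0; t < numThreads; ++t) {
        workers.emplace_back([&shared, &guard, perThread] {
            for (int i = 0; i < perThread; ++i) {
                std::lock_guard<std::mutex> lock(guard);  // one thread at a time
                shared.push_back(i);
            }
        });
    }
    for (auto& w : workers) w.join();
    // With the lock in place no element is lost.
    assert(shared.size() == static_cast<std::size_t>(numThreads) * perThread);
    return shared.size();
}
```

The order of the elements still depends on how the threads were scheduled; the lock only guarantees that each individual push_back completes without interference.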

Lock Convoying in TBB

TBB attempts to mitigate the performance issue that parallel code faces when accessing or operating on a container through its own containers, such as concurrent_vector. With concurrent_vector, every time an element is pushed, the location of the new element is returned. TBB promises that once an element is pushed, it will always remain in the same location, even if the vector grows in memory. With a standard vector, when the vector grows, the data is copied over to new memory. If any threads are traversing the vector when this happens, their iterators may no longer be valid.

Study Ref: https://software.intel.com/en-us/blogs/2008/10/20/tbb-containers-vs-stl-performance-in-the-multi-core-age

A Comparison between Serial Vector and TBB concurrent_vector

If only you knew the power of the Building Blocks

Using the code below, we will compare the speed of some vector operations using the STL's vector and TBB's concurrent_vector. The code performs push back operations both serially and concurrently, then measures the time taken to complete n push back operations.

#include <iostream>

#include <tbb/tbb.h>

#include <tbb/concurrent_vector.h>

#include <vector>

#include <fstream>

#include <cstdlib>

#include <chrono>

#include <string>

using namespace std::chrono;

// define a stl and tbb vector

tbb::concurrent_vector<std::string> con_vector_string;

std::vector<std::string> s_vector_string;

tbb::concurrent_vector<int> con_vector_int;

std::vector<int> s_vector_int;

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<milliseconds>(span);

std::cout << msg << " - took - " <<

ms.count() << " milliseconds" << std::endl;

}

int main(int argc, char** argv){

if(argc != 2) { return 1; }

int size = std::atoi(argv[1]);

steady_clock::time_point ts, te;

/*

TEST WITH STRING OBJECT

*/

ts = steady_clock::now();

// serial for loop

for(int i = 0; i < size; ++i)

s_vector_string.push_back(std::string());

te = steady_clock::now();

reportTime("Serial vector speed - STRING: ", te-ts);

ts = steady_clock::now();

// concurrent for loop

tbb::parallel_for(0, size, 1, [&](int i){

con_vector_string.push_back(std::string());

});

te = steady_clock::now();

reportTime("Concurrent vector speed - STRING: ", te-ts);

/*

TEST WITH INT DATA TYPE

*/

std::cout<< "\n\n";

ts = steady_clock::now();

// serial for loop

for(int i = 0; i < size; ++i)

s_vector_int.push_back(i);

te = steady_clock::now();

reportTime("Serial vector speed - INT: ", te-ts);

ts = steady_clock::now();

// concurrent for loop

tbb::parallel_for(0, size, 1, [&](int i){

con_vector_int.push_back(i);

});

te = steady_clock::now();

reportTime("Concurrent vector speed - INT: ", te-ts);

}

The Speed Improvement

As the table suggests, completing push back operations using TBB's concurrent_vector allows for increased performance compared with the serial version. TBB additionally provides the benefit that it never needs to move existing elements as push back operations are completed. In the STL, the vector is dynamically allocated, which requires it to reallocate and copy its memory over as it grows, and this may further slow down the push back operation.

Why was TBB slower for Int?

When dealing with primitive types like int, the push back operation itself is not very complex, meaning that a serial process can complete it quite quickly. The vector can also likely hold a lot more data before it needs to reallocate its memory. TBB's practicality shows when performing a more complex action on a primitive type or a simple action on a more complex type. Parallel overhead (the resources required to run TBB in parallel) may also increase the time required, which could be the cause of the slowdown in the above comparison.