GPU621/Intel Advisor

Intel Parallel Studio Advisor

Group Members

Introduction

Intel Advisor is a software tool bundled with Intel Parallel Studio that is used to analyze a program to...

Vectorization

Vectorization is the process of utilizing vector registers to perform a single instruction on multiple values all at the same time.

A CPU register is a very small block of storage located inside the CPU itself. A 64-bit CPU can store 8 bytes of data in a single register.

A vector register is an expanded version of a CPU register. A 128-bit vector register can store 16 bytes of data. A 256-bit vector register can store 32 bytes of data.

The vector register can then be divided into lanes, where each lane stores a single value of a certain data type.

A 128-bit vector register can be divided in the following ways:

- 16 lanes: 16x characters (1 byte each)

- 8 lanes: 8x shorts (2 bytes each)

- 4 lanes: 4x integers / floats (4 bytes each)

- 2 lanes: 2x doubles (8 bytes each)
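The lane counts in the list above follow from dividing the register width by the element size. A minimal sketch of that arithmetic is shown here; `lanes_in_register` is a hypothetical helper written for illustration and is not part of any Intel API. It assumes the usual sizes of 4 bytes for `float` and 8 bytes for `double`.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical helper: number of lanes of type T that fit in a vector
// register that is `register_bits` bits wide.
template <typename T>
constexpr std::size_t lanes_in_register(std::size_t register_bits) {
    return (register_bits / 8) / sizeof(T);
}

// The 128-bit cases from the list, checked at compile time.
static_assert(lanes_in_register<char>(128) == 16, "16 lanes of 1 byte");
static_assert(lanes_in_register<std::int16_t>(128) == 8, "8 lanes of 2 bytes");
static_assert(lanes_in_register<std::int32_t>(128) == 4, "4 lanes of 4 bytes");
static_assert(lanes_in_register<float>(128) == 4, "assumes 4-byte float");
static_assert(lanes_in_register<double>(128) == 2, "assumes 8-byte double");
```

The same helper gives 8 float lanes for a 256-bit register, which matches the 32 bytes mentioned earlier.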

a | b | c | d | e | f | g | h

1 | 2 | 3 | 4 | 5 | 6 | 7 | 8

10 | 20 | 30 | 40

1.5 | 2.5 | 3.5 | 4.5

3.14159 | 3.14159

Instruction Set Architecture

- SSE

- SSE2

- SSE3

- SSSE3

- SSE4.1

- SSE4.2

- AVX

- AVX2

Example

For SSE >= SSE4.1, to multiply two 128-bit vectors of signed 32-bit integers, you would use the following Intel intrinsic function:

__m128i _mm_mullo_epi32(__m128i a, __m128i b)

Prior to SSE4.1, the same thing can be done with the following sequence of function calls.

// Vec4i operator * (Vec4i const & a, Vec4i const & b) {

// #ifdef

__m128i a13 = _mm_shuffle_epi32(a, 0xF5); // (-,a3,-,a1)

__m128i b13 = _mm_shuffle_epi32(b, 0xF5); // (-,b3,-,b1)

__m128i prod02 = _mm_mul_epu32(a, b); // (-,a2*b2,-,a0*b0)

__m128i prod13 = _mm_mul_epu32(a13, b13); // (-,a3*b3,-,a1*b1)

__m128i prod01 = _mm_unpacklo_epi32(prod02, prod13); // (-,-,a1*b1,a0*b0)

__m128i prod23 = _mm_unpackhi_epi32(prod02, prod13); // (-,-,a3*b3,a2*b2)

__m128i prod = _mm_unpacklo_epi64(prod01, prod23); // (ab3,ab2,ab1,ab0)

Intel Intrinsics SSE,SSE2,SSE3,SSSE3,SSE4.1,SSE4.2
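To try the pre-SSE4.1 sequence on real data, it can be wrapped in a small function. The sketch below is one possible wrapper, not code from the original source: `multiply4` is a hypothetical name, the unaligned load and store intrinsics are an assumption made so that any array can be passed in, and the scalar branch is only there so the file still builds on targets without SSE2.

```cpp
#include <cstdint>
#if defined(__SSE2__) || defined(_M_X64)
#include <emmintrin.h>  // SSE2 intrinsics
#define HAVE_SSE2_SKETCH 1
#endif

// Hypothetical wrapper: multiplies four signed 32-bit integers lane by lane
// and keeps the low 32 bits of each product.
void multiply4(const std::int32_t* a, const std::int32_t* b, std::int32_t* out) {
#ifdef HAVE_SSE2_SKETCH
    __m128i va = _mm_loadu_si128(reinterpret_cast<const __m128i*>(a));
    __m128i vb = _mm_loadu_si128(reinterpret_cast<const __m128i*>(b));
    __m128i a13    = _mm_shuffle_epi32(va, 0xF5);          // (-,a3,-,a1)
    __m128i b13    = _mm_shuffle_epi32(vb, 0xF5);          // (-,b3,-,b1)
    __m128i prod02 = _mm_mul_epu32(va, vb);                // (-,a2*b2,-,a0*b0)
    __m128i prod13 = _mm_mul_epu32(a13, b13);              // (-,a3*b3,-,a1*b1)
    __m128i prod01 = _mm_unpacklo_epi32(prod02, prod13);   // (-,-,a1*b1,a0*b0)
    __m128i prod23 = _mm_unpackhi_epi32(prod02, prod13);   // (-,-,a3*b3,a2*b2)
    __m128i prod   = _mm_unpacklo_epi64(prod01, prod23);   // (ab3,ab2,ab1,ab0)
    _mm_storeu_si128(reinterpret_cast<__m128i*>(out), prod);
#else
    // Scalar fallback for non-SSE2 targets; unsigned math avoids signed overflow.
    for (int i = 0; i < 4; ++i) {
        out[i] = static_cast<std::int32_t>(static_cast<std::uint32_t>(a[i]) *
                                           static_cast<std::uint32_t>(b[i]));
    }
#endif
}
```

Although `_mm_mul_epu32` is an unsigned multiply, the low 32 bits of the product are the same for signed and unsigned operands, which is why the sequence also works for negative inputs.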

Examples

[INSERT IMAGE HERE]

int a[8] = { 1, 2, 3, 4, 5, 6, 7, 8 };

for (int i = 0; i < 8; i++) {

a[i] *= 10;

}

Intel Advisor Tutorial Example

You can find the sample code in the directory of your Intel Parallel Studio installation. Just unzip the file and you can build the code on the command line or in Visual Studio.

Typically: C:\Program Files (x86)\IntelSWTools\Advisor 2019\samples\en\C++\vec_samples.zip

Intel® Advisor Tutorial: Add Efficient SIMD Parallelism to C++ Code Using the Vectorization Advisor

Loop Unrolling

The compiler can "unroll" a loop so that the body of the loop is duplicated a number of times per iteration. This reduces the number of conditional checks and counter increments that are executed over the course of the loop.

Warning: Do not write your code like this. The compiler will do it for you, unless you tell it not to.

#pragma nounroll

for (int i = 0; i < 50; i++) {

foo(i);

}

for (int i = 0; i < 50; i+=5) {

foo(i);

foo(i+1);

foo(i+2);

foo(i+3);

foo(i+4);

}

// foo(0)

// foo(1)

// foo(2)

// foo(3)

// foo(4)

// ...

// foo(45)

// foo(46)

// foo(47)

// foo(48)

// foo(49)

Dependencies

Pointer Alias

A pointer alias means that two pointers point to the same location in memory or the two pointers overlap in memory.

If you compile the vec_samples project with the `NOALIAS` macro, the `matvec` function declaration will include the `restrict` keyword on parameter `b`. The `restrict` keyword tells the compiler that the memory accessed through `b` is not also accessed through another pointer such as `a`, so the compiler is free to optimize the code blocks that use those pointers.

[INSERT IMAGE HERE]

multiply.c

#ifdef NOALIAS

void matvec(int size1, int size2, FTYPE a[][size2], FTYPE b[restrict], FTYPE x[], FTYPE wr[])

#else

void matvec(int size1, int size2, FTYPE a[][size2], FTYPE b[], FTYPE x[], FTYPE wr[])

#endif

To learn more about the `restrict` keyword and how the compiler can optimize code if it knows that two pointers do not overlap, you can visit this StackOverflow thread: What does the restrict keyword mean in C++?
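The `restrict` keyword is part of C, not standard C++. In C++ code a similar promise is usually made with the `__restrict` compiler extension, which GCC, Clang, and MSVC accept. The sketch below illustrates that idea; `add_scaled` and its parameters are hypothetical and do not come from the vec_samples project.

```cpp
#include <cstddef>

// Hypothetical example. `__restrict` is a compiler extension, not standard C++.
// It promises the compiler that `dst` and `src` never refer to overlapping
// memory, so loads from `src` can be reordered around stores to `dst`.
void add_scaled(float* __restrict dst, const float* __restrict src,
                float factor, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        dst[i] += factor * src[i];
    }
}
```

The promise is not checked. Calling such a function with overlapping arrays is undefined behaviour, so it should only be added when the caller can guarantee that the arrays are distinct.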

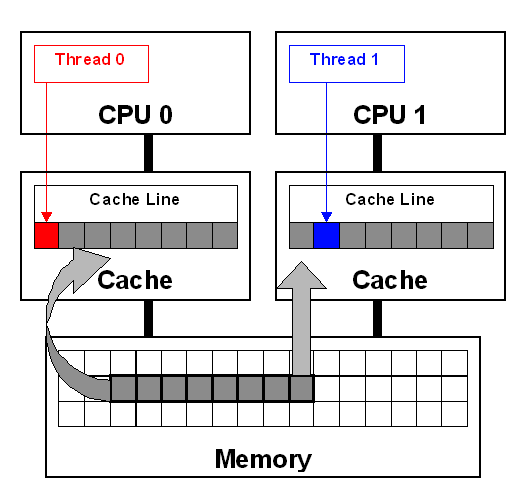

Loop-Carried Dependency

Pointers that overlap one another may introduce a loop-carried dependency when those pointers point to an array of data. Unless it can prove otherwise, the vectorizer assumes that the pointers may overlap and, as a result, will not auto-vectorize the code.

In the code example below, `a` is a function of `b`. If pointers `a` and `b` overlap, then writing to `a` may also modify an element of `b` that a later iteration reads, which creates a possible loop-carried dependency. The compiler therefore cannot safely auto-vectorize the loop.

void func(int* a, int* b) {

...

for (i = 0; i < size1; i++) {

for (j = 0; j < size2; j++) {

a[i] = foo(b[j]);

}

}

}

The following image illustrates the loop-carried dependency when two pointers overlap.

[INSERT IMAGE HERE]
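The effect of overlapping pointers can also be observed by running the same loop twice, once on separate arrays and once on overlapping ones. The following sketch uses hypothetical names (`add_one`, `run_separate`, `run_overlapping`) and a simpler loop than the one above; it only illustrates why the compiler has to be cautious.

```cpp
#include <cstddef>
#include <vector>

// Writes src[i] + 1 into dst[i]. Whether iterations are independent
// depends entirely on whether dst and src overlap.
void add_one(int* dst, const int* src, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        dst[i] = src[i] + 1;
    }
}

// Separate arrays: every iteration reads an untouched element of src.
std::vector<int> run_separate() {
    std::vector<int> src(4, 0);
    std::vector<int> dst(4, 0);
    add_one(dst.data(), src.data(), 4);
    return dst;  // every element is 1
}

// Overlapping arrays: dst is src shifted by one element, so iteration i
// reads the value that iteration i - 1 just wrote.
std::vector<int> run_overlapping() {
    std::vector<int> buf(5, 0);
    add_one(buf.data() + 1, buf.data(), 4);
    return buf;  // 0, 1, 2, 3, 4
}
```

In the overlapping case each result depends on the previous iteration, so processing four elements at once would give a different answer from the sequential loop.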

Memory Alignment

Intel Advisor can detect if there are any memory alignment issues that may produce inefficient vectorization code.

A loop can be vectorized if there are no data dependencies across loop iterations. However, if the data is not aligned, the vectorizer may have to use a "peeled" loop to address the misalignment. Instead of vectorizing the entire loop, it inserts an extra loop that performs the operations on the front end of the array, which is not aligned in memory, before the vectorized loop starts.

[INSERT IMAGE HERE]
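As a rough illustration of what peeling involves, the sketch below computes how many leading elements of a float array would have to be handled one at a time before the first aligned address is reached. `peel_count` is a hypothetical helper, not something Intel Advisor or the compiler exposes, and it assumes the pointer is at least aligned to `sizeof(float)`.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>

// Hypothetical helper: number of leading floats that come before the first
// address that is a multiple of `boundary` bytes, capped at the array length n.
std::size_t peel_count(const float* p, std::size_t n, std::size_t boundary) {
    const std::size_t misalign =
        reinterpret_cast<std::uintptr_t>(p) % boundary;
    if (misalign == 0) {
        return 0;  // already aligned, nothing to peel
    }
    const std::size_t elems = (boundary - misalign) / sizeof(float);
    return std::min(elems, n);
}
```

For a 16-byte boundary, an array that starts 4 bytes past an aligned address needs 3 peeled floats, after which the remaining elements can be loaded with aligned instructions.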

Alignment

To align data elements on an `x`-byte boundary in memory, use the compiler's `align` attribute: `_declspec(align(...))` on Windows and `__attribute__((align(...)))` elsewhere.
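In standard C++11 and later, the portable `alignas` specifier gives a similar result to the compiler-specific attributes. The sketch below is an alternative for illustration only and is not taken from the vec_samples project; `kAlignBoundary`, `AlignedRow`, and `is_aligned` are hypothetical names. Note that `alignas` has no equivalent of the offset argument.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical boundary, chosen to match a 128-bit vector register.
constexpr std::size_t kAlignBoundary = 16;

// The array member, and therefore the whole struct, starts on a
// 16-byte boundary.
struct AlignedRow {
    alignas(kAlignBoundary) float values[8];
};

// Returns true if the address p is a multiple of `boundary` bytes.
bool is_aligned(const void* p, std::size_t boundary) {
    return reinterpret_cast<std::uintptr_t>(p) % boundary == 0;
}
```

A check such as `is_aligned(row.values, kAlignBoundary)` can be used in a debug build to confirm that the data really starts where the vectorizer expects.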

The following code snippet is used to align the data elements in the `vec_samples` project.

// Tell the compiler to align the a, b, and x arrays

// boundaries. This allows the vectorizer to use aligned instructions

// and produce faster code.

#ifdef _WIN32

_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE a[ROW][COLWIDTH];

_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE b[ROW];

_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE x[COLWIDTH];

_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE wr[COLWIDTH];

#else

FTYPE a[ROW][COLWIDTH] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));

FTYPE b[ROW] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));

FTYPE x[COLWIDTH] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));

FTYPE wr[COLWIDTH] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));

#endif // _WIN32

Padding

Even if the array elements are aligned in memory, say at 16-byte boundaries, you might still encounter a "remainder" loop that deals with the back end of the array that cannot be included in the vectorized code. The vectorizer has to insert an extra loop after the vectorized loop to perform operations on those trailing elements.

To address this issue, add some padding.

For example, if you have a `4 x 19` array of floats, and your system has access to 128-bit vector registers, then you should add 1 column to make the array `4 x 20`, so that the number of columns is evenly divisible by the number of floats that can be loaded into a 128-bit vector register, which is 4.

[INSERT IMAGE HERE]
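The padded width in the example above is simply the column count rounded up to the next multiple of the lane count. A minimal sketch of that calculation follows; `padded_columns` is a hypothetical helper name.

```cpp
#include <cstddef>

// Hypothetical helper: rounds `columns` up to the next multiple of `lanes`.
constexpr std::size_t padded_columns(std::size_t columns, std::size_t lanes) {
    return (columns + lanes - 1) / lanes * lanes;
}

// The 4 x 19 float array from the text, with 4 floats per 128-bit register.
static_assert(padded_columns(19, 4) == 20, "19 columns pad to 20");
```

With 256-bit registers, which hold 8 floats, the same 19 columns would need to be padded to 24.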