DPS921/Franky

Contents

Intel vTune Amplifier

Team Members

Introduction

Intel vTune Amplifier is a performance profiler. It is specifically designed to analyze various aspect of the run-time environment and provide a snapshot of how the program performed with respect to the hardware. Some of the information include the time consumed, percentage of time used per function call, and cpu core usage. It also performs few automated analysis to suggests different ways to improve the performance, especially when using TBB.

Our project aims to leverage the power of vTune Amplifier to improve a solution to a trivial challenge.

Details

Intel vTune Amplifier is a part of the Intel Parallel Studio package as well as a standalone package. It provides a comprehensive report of resource usage by the target executable. The information of the report includes Thread usage, Memory usage, Storage usage, and other system-level resource usage. It provides useful suggestions on how how to further maximize the performance, such as increasing the grainsize or increasing the scope of data. It also provides information on how different function calls performed during run-time. This is a powerful tool to perform a data-driven system improvement. Using this tool, the developer can quickly and accurately point out the source of bottleneck and resolve it to provide an increase in scalability.

To demonstrate its usability, our team will take on a trivial challenge of finding the line-of-best-fit using linear regression method on a biased data points. We will compare a single-threaded approach, optimized using Intel DAAL, and TBB on the first code, each being analyzed by vTune to compare the performance and resource utilization.

Case-study

The test was performed over 99999999 data points with learn rate of .001 and 100 times calculation.

(i.e.$<cmd> 99999999 .001 100Single-thread

Code

#include <iostream>

#include <random>

#include <chrono>

void gradient_descent(const double x[], const double y[], const size_t& epoches, const size_t& N, const double& learn_rate, double& m, double& b) {

double p;

double err;

size_t idx;

for(size_t i = 0; i < epoches * N; i++) {

idx = i % N;

p = b + m * x[idx];

err = p - y[idx];

b = b - learn_rate * err;

m = m - learn_rate * err * x[idx];

}

}

int main(int argc, char* argv[]) {

size_t N;

double learn_rate;

size_t epoches;

double correct_b = 1.0;

double correct_m = 0.5;

if (argc != 4) {

N = 1000;

learn_rate = 1.0e-3;

epoches = 5;

} else {

N = std::strtoul(argv[1], NULL, 10);

learn_rate = std::strtod(argv[2], NULL);

epoches = std::strtoul(argv[3], NULL,10);

std::cout << "N = " << N << ", learn_rate = " << learn_rate << ", epoches = " << epoches << std::endl;

}

std::chrono::steady_clock::time_point tStart, tStop;

tStart = std::chrono::steady_clock::now();

double* m_real = new double[N];

double* b_real = new double[N];

double* x = new double[N];

double* y = new double[N];

// setting up random generators

std::default_random_engine generator;

std::normal_distribution<double> m_dist(correct_m,0.2);

std::normal_distribution<double> b_dist(correct_b,0.2);

std::normal_distribution<double> x_dist(0.0,1);

// creating random dataset

for(size_t i = 0; i < N; i++) {

m_real[i] = m_dist(generator);

b_real[i] = b_dist(generator);

x[i] = x_dist(generator);

y[i] = m_real[i] * x[i] + b_real[i];

}

// estimated b, m

double b = 0;

double m = 0;

// gradient descent

gradient_descent(x, y, epoches, N, learn_rate, m, b);

tStop = std::chrono::steady_clock::now();

auto ms = std::chrono::duration_cast<std::chrono::milliseconds>(tStop - tStart);

std::cout << "Execution Time: " << ms.count() << " milliseconds" << std::endl;

std::cout << "Predicted:\nb = " << b << ", m = " << m << std::endl;

std::cout << "Correct:\nb = " << correct_b << ", m = " << correct_m << std::endl;

std::cout << std::endl;

delete[] m_real;

delete[] b_real;

delete[] x;

delete[] y;

return 0;

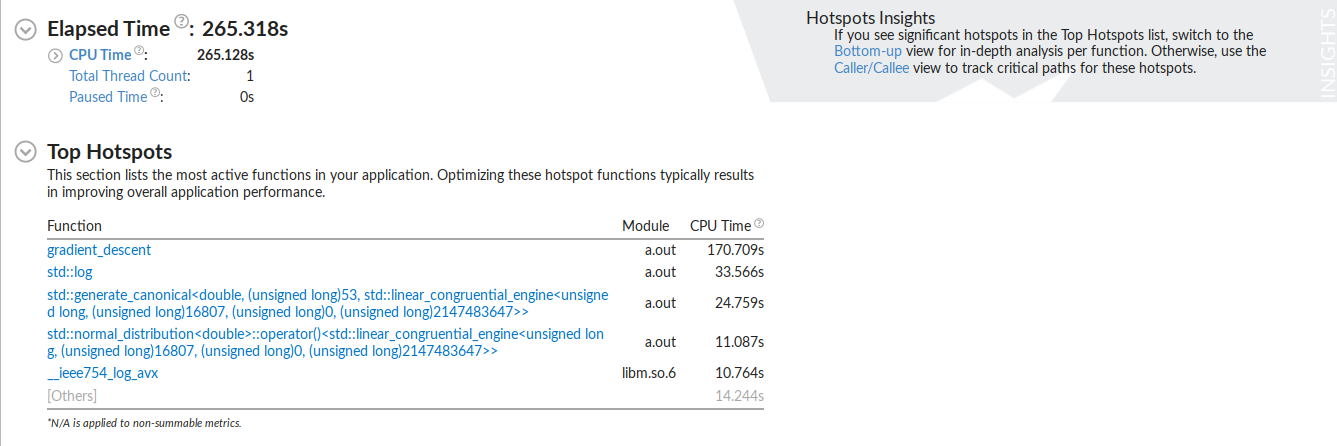

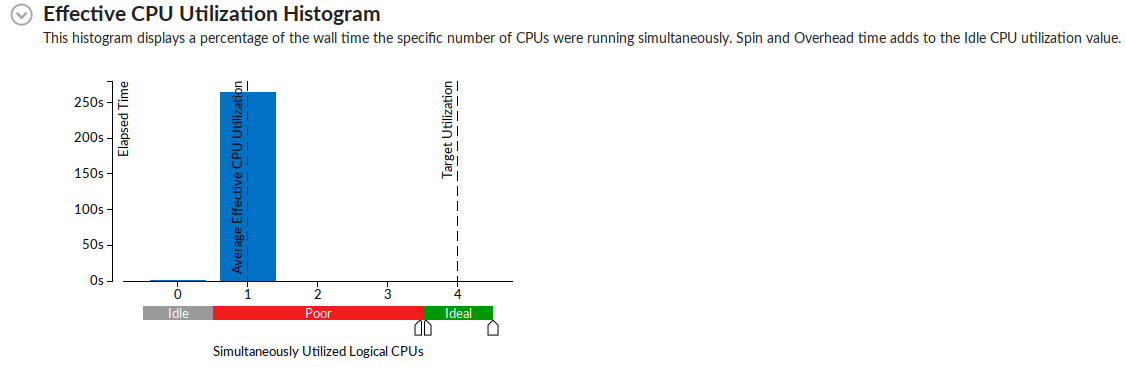

}Performance

Optimized with DAAL

Code

Performance

Multi-thread

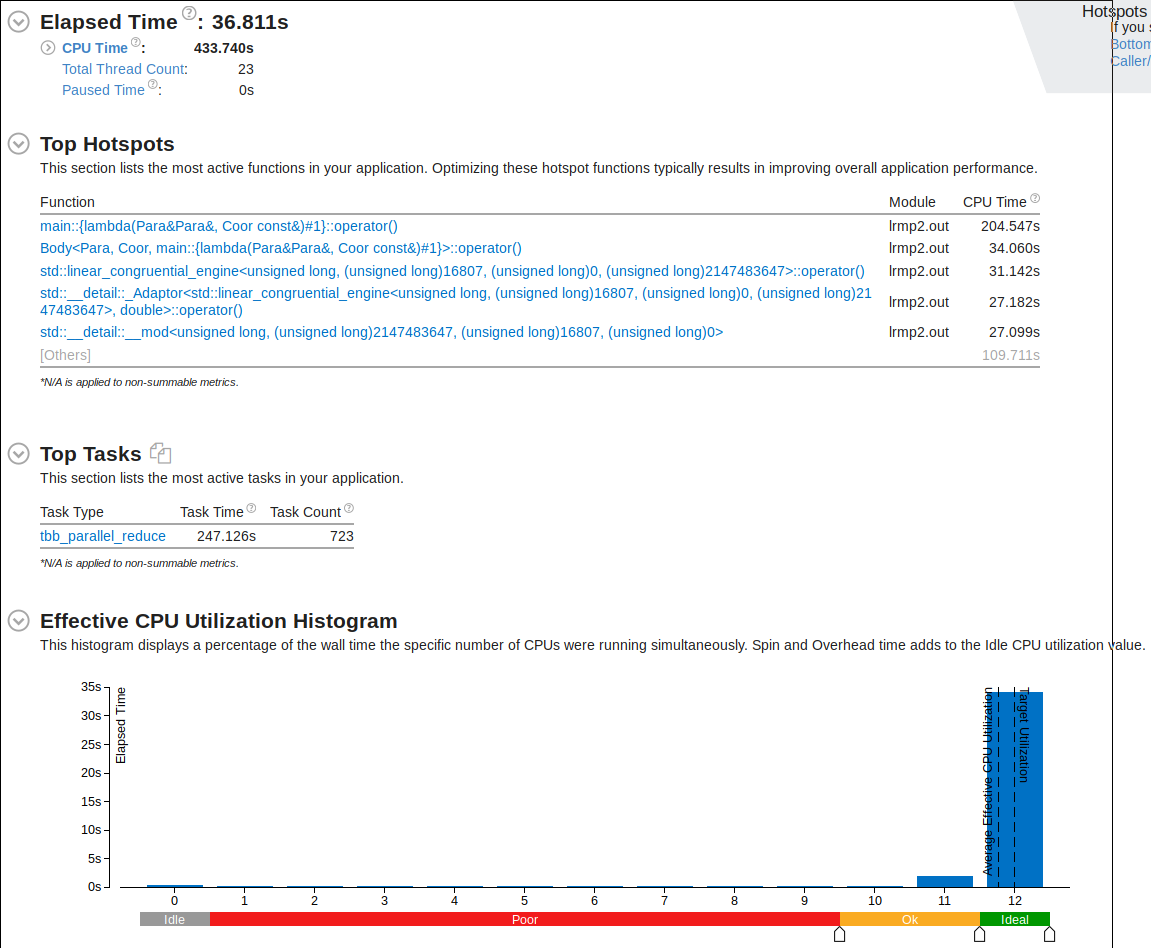

This code takes the single-threaded version above and applies TBB to leverage the power of threading to increase performance.

Code

#include <iostream>

#include <random>

#include <tbb/tbb.h>

struct Para{

double s_m;

double s_b;

};

struct Coor{

double s_x;

double s_y;

};

template<typename T,typename R, typename C>

class Body{

T m_acc;

const R* m_coor;

T* m_out;

T m_i;

C m_c;

public:

Body(T* out,R* co, T i, C c): m_acc(i),m_coor(co), m_out(out), m_i(i), m_c(c) {}

T get_accumul() const { return m_acc; }

void operator()(tbb::blocked_range<std::size_t>& r){

T temp = m_acc;

for (std::size_t i = r.begin(); i != r.end(); i++)

temp = m_c(temp, m_out[i],m_coor[i]);

m_acc = temp;

}

template<typename Tag>

void operator()(tbb::blocked_range<std::size_t>& r, Tag){

T temp = m_acc;

for (std::size_t i = r.begin(); i != r.end(); i++){

temp = m_c(temp, m_out[i],m_coor[i]);

if (Tag::is_final_scan()){

m_out[i] = temp;

}

}

m_acc = temp;

}

Body(Body& b, tbb::split) : m_acc(b.m_i), m_coor(b.m_coor), m_out(b.m_out), m_i(b.m_i), m_c(b.m_c){}

void reverse_join(Body& a){

m_acc.s_m = (m_acc.s_m + a.m_acc.s_m)/2;

m_acc.s_b = (m_acc.s_b + a.m_acc.s_b)/2;

}

void join(Body& a){

m_acc.s_m = (m_acc.s_m + a.m_acc.s_m)/2;

m_acc.s_b = (m_acc.s_b + a.m_acc.s_b)/2;

}

void assign(Body& b) { m_acc = b.m_acc ; }

};

template<typename T,typename R, typename C>

T scan( T* out, R* co, std::size_t n, T identity, C combine){

Body<T,R,C> body(out, co, identity, combine);

tbb::parallel_reduce ( tbb::blocked_range<std::size_t>(0,n,5000), body );

return body.get_accumul();

}

int main(int argc, char* argv[]) {

std::size_t N;

double learn_rate;

std::size_t epoches;

if (argc != 4) {

N = 1000;

learn_rate = 1.0e-3;

epoches = 5;

} else {

N = std::strtoul(argv[1], NULL, 10);

learn_rate = std::strtod(argv[2], NULL);

epoches = std::strtoul(argv[3], NULL,10);

std::cout << "N = " << N << ", learn_rate = " << learn_rate << ", epoches" << epoches << std::endl;

}

// creating random dataset

Coor* c = new Coor[N]; /* coordinates */

double* m_real = new double[N];

double* b_real = new double[N];

std::default_random_engine generator;

std::normal_distribution<double> m_dist(0.5,0.2);

std::normal_distribution<double> b_dist(1.0,0.2);

std::normal_distribution<double> x_dist(0.0,1);

#pragma omp parallel for

for(std::size_t i = 0; i < N; i++) {

m_real[i] = m_dist(generator);

b_real[i] = b_dist(generator);

c[i].s_x = x_dist(generator);

c[i].s_y = m_real[i] * c[i].s_x + b_real[i];

}

Para* a = new Para[N]; /* parameters */

auto calc = [&](Para& temp, Para& a, const Coor& c ) {

double p = temp.s_b + temp.s_m * c.s_x;

double err = p - c.s_y;

a.s_b = temp.s_b - learn_rate * err;

a.s_m = temp.s_m - learn_rate * err * c.s_x;

return a;

};

Para final;

for(std::size_t i = 0 ; i < epoches ; i++){

final = scan(a,c,N,final,calc);

}

std::cout << "b = " << a[N-1].s_b << ", m = " << a[N-1].s_m << std::endl;

delete [] m_real;

delete [] b_real;

delete [] a;

delete [] c;

return 0;

}