Difference between revisions of "Team False Sharing"

Msivanesan4 (talk | contribs) (→Identifying False Sharing)


Revision as of 16:35, 16 December 2017

Cache Coherence

In Symmetric Multiprocessor (SMP) systems, each processor has a local cache. The local cache is a smaller, faster memory that stores copies of data from frequently used main memory locations. The cache is closer to the CPU than main memory and is intended to make memory access more efficient. In a shared memory multiprocessor system with a separate cache for each processor, there can be many copies of shared data: one copy in main memory and one in the local cache of each processor that requested it. When one of the copies is changed, the other copies must reflect that change. Cache coherence is the discipline that ensures changes to the values of shared operands (data) are propagated throughout the system in a timely fashion.

To ensure data consistency across multiple caches, multiprocessor-capable Intel® processors follow the MESI (Modified/Exclusive/Shared/Invalid) protocol. On the first load of a cache line, the processor marks the line as ‘Exclusive’. As long as the line is marked ‘Exclusive’, subsequent loads are free to use the existing data in cache. If the processor sees the same cache line loaded by another processor on the bus, it marks the line as ‘Shared’. If the processor stores to a cache line marked ‘Shared’, the line is marked ‘Modified’ and all other processors are sent an ‘Invalid’ cache line message. If the processor sees a cache line that it holds as ‘Modified’ being accessed by another processor, it writes the line back to memory and marks its copy as ‘Shared’. The other processor accessing that cache line incurs a cache miss.
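The transitions described above can be sketched as a small state tracker for a single cache line. This is only an illustrative model under simplifying assumptions, not how any real processor implements MESI: the names `Mesi` and `LineTracker` and the `writebacks` and `misses` counters are hypothetical, and bus traffic is reduced to direct state updates.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// The four MESI states a cached copy of a line can be in.
enum class Mesi { Modified, Exclusive, Shared, Invalid };

// Hypothetical model: the state of ONE cache line in each of N processor caches.
template <std::size_t N>
struct LineTracker {
    std::array<Mesi, N> state;
    int writebacks = 0;  // times a Modified copy was written back to memory
    int misses = 0;      // times a processor had to fetch the line

    LineTracker() { state.fill(Mesi::Invalid); }

    // Processor `cpu` reads from the line.
    void load(std::size_t cpu) {
        assert(cpu < N);
        if (state[cpu] != Mesi::Invalid) return;  // hit: use the cached copy
        ++misses;
        bool others_hold = false;
        for (std::size_t p = 0; p < N; ++p) {
            if (p == cpu || state[p] == Mesi::Invalid) continue;
            if (state[p] == Mesi::Modified) ++writebacks;  // flush dirty data first
            state[p] = Mesi::Shared;
            others_hold = true;
        }
        state[cpu] = others_hold ? Mesi::Shared : Mesi::Exclusive;
    }

    // Processor `cpu` writes to the line.
    void store(std::size_t cpu) {
        assert(cpu < N);
        if (state[cpu] == Mesi::Invalid) load(cpu);  // write miss: fetch the line first
        for (std::size_t p = 0; p < N; ++p) {
            if (p != cpu) state[p] = Mesi::Invalid;  // invalidate every other copy
        }
        state[cpu] = Mesi::Modified;
    }
};
```

In this model, calling `store(0)` and `store(1)` alternately on a `LineTracker<2>` makes every store after the first a miss with a write-back, which is the ping-pong pattern that false sharing produces.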

Identifying False Sharing

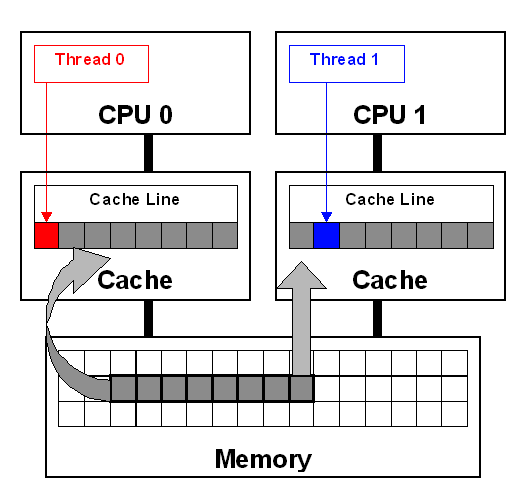

False sharing is a well-known performance issue on SMP systems, where each processor has a local cache. It occurs when threads on different processors modify variables that reside on the same cache line, as illustrated in the figure above. Each write invalidates the other processors' copies of the line and forces an update, which hurts performance.

When a cache line is marked ‘Invalid’, the processors must coordinate: the modified line is written back to memory and then loaded again by the processor that needs it. False sharing makes this coordination far more frequent and can significantly degrade application performance.

In the figure above, threads 0 and 1 require variables that are adjacent in memory and reside on the same cache line. The cache line is loaded into the caches of CPU 0 and CPU 1. Even though the threads modify different variables, the cache line is invalidated, forcing a memory update to maintain cache coherency.
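One rough way to check whether two variables are candidates for false sharing is to compare the cache-line index of their addresses. The sketch below assumes a 64-byte cache line, which is common but not universal; `kAssumedLineSize`, `same_cache_line`, and the two counter structs are hypothetical names introduced for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumption: 64-byte cache lines. Check the target CPU before relying on this.
constexpr std::size_t kAssumedLineSize = 64;

// Returns true when both addresses fall in the same line-sized block of memory.
inline bool same_cache_line(const void* p, const void* q,
                            std::size_t line_size = kAssumedLineSize) {
    assert(line_size > 0);
    const auto a = reinterpret_cast<std::uintptr_t>(p);
    const auto b = reinterpret_cast<std::uintptr_t>(q);
    return a / line_size == b / line_size;
}

// Two per-thread counters packed next to each other, as in the figure.
struct alignas(kAssumedLineSize) AdjacentCounters {
    int thread0;
    int thread1;
};

// The same counters, each forced onto its own line-sized block.
struct PaddedCounters {
    alignas(kAssumedLineSize) int thread0;
    alignas(kAssumedLineSize) int thread1;
};
```

With these definitions, the two members of an `AdjacentCounters` object report the same line, while the members of a `PaddedCounters` object do not. The check only says that false sharing is possible; whether it actually costs time depends on how often different threads write to the line.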

    #include <iostream>
    #include <omp.h>
    #include "timer.h"
    #define NUM_THREADS 1
    #define SIZE 1000000

    int main(int argc, const char * argv[]) {
        int* a = new int [SIZE];
        int* b = new int [SIZE];
        long long sum = 0; // 64-bit: the sum of squares up to SIZE overflows int
        int threadsUsed = 0;
        Timer stopwatch;

        for(int i = 0; i < SIZE; i++){ // initialize arrays
            a[i] = i;
            b[i] = i;
        }

        omp_set_num_threads(NUM_THREADS);
        stopwatch.start();
        #pragma omp parallel
        {
            // record the team size once instead of writing it on every iteration
            #pragma omp single
            threadsUsed = omp_get_num_threads();

            #pragma omp for reduction(+:sum)
            for(int i = 0; i < SIZE; i++){ // calculate sum of products of the arrays
                sum += static_cast<long long>(a[i]) * b[i];
            }
        }
        stopwatch.stop();

        std::cout<<sum<<std::endl;
        std::cout<<"Threads Used: "<<threadsUsed<<std::endl;
        std::cout<<"Time: "<<stopwatch.currtime()<<std::endl;

        delete[] a;
        delete[] b;
        return 0;
    }
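The program above uses an OpenMP `reduction`, which gives each thread a private copy of `sum`. For contrast, the sketch below shows the kind of hand-rolled variant in which false sharing tends to appear: each worker accumulates into its own element of a small array, and those elements are adjacent in memory. The function name `dot_adjacent_partials` and the worker count `kWorkers` are hypothetical and not part of the original program.

```cpp
#include <cassert>
#include <vector>

constexpr int kWorkers = 4;  // hypothetical worker count, chosen for illustration

// Dot product where each worker writes to its own slot of `partial`.
// The slots are adjacent, so they most likely sit on the same cache line.
long long dot_adjacent_partials(const std::vector<int>& a, const std::vector<int>& b) {
    assert(a.size() == b.size());
    const long long n = static_cast<long long>(a.size());
    long long partial[kWorkers] = {};  // 4 x 8 bytes: fits within one 64-byte line

    // Without OpenMP enabled this pragma is ignored and the loop runs serially.
    #pragma omp parallel for num_threads(kWorkers)
    for (int w = 0; w < kWorkers; ++w) {
        const long long begin = n * w / kWorkers;
        const long long end = n * (w + 1) / kWorkers;
        for (long long i = begin; i < end; ++i) {
            // Different workers write different slots, but possibly the same cache line.
            partial[w] += static_cast<long long>(a[i]) * b[i];
        }
    }

    long long sum = 0;
    for (long long p : partial) sum += p;
    return sum;
}
```

The result matches the reduction version; only the memory traffic differs. An optimizing compiler may keep `partial[w]` in a register for the duration of the inner loop, which hides the effect, so any timing comparison between the two versions should be measured rather than assumed.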