Difference between revisions of "Team Darth Vector"

(→List of TBB containers) |

(→Efficiency Comparison Parallel for and concurrent_vector) |

||

| Line 59: | Line 59: | ||

tbb:concurrent_vector<typename> name; </pre> | tbb:concurrent_vector<typename> name; </pre> | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | '''<u>concurrent_hash_map</u>''' : A container class that supports hashing in parallel. The generated keys are not ordered and there will always be at least 1 element for a key. Defined within "'''tbb/concurrent_hash_map.h'''" | ||

| + | |||

| + | ==List of TBB Algorithms== | ||

| + | <u>'''parallel_for:'''</u> Provides concurrent support for for loops. This allows data to be divided up into chunks that each thread can work on. The code is defined in "'''tbb/parallel_for.h'''" and takes the template of: | ||

| + | <pre> | ||

| + | foo parallel_for(firstPos, lastPos, increment { boo()} | ||

| + | |||

| + | </pre> | ||

| + | |||

| + | <u>'''parallel_scan:'''</u> Provides concurrent support for a parallel scan. Intel promises it may invoke the function up to 2 times the amount when compared to the serial algorithm. The code is defined in "'''tbb/parallel_scan.h'''" and according to intel takes the template of: | ||

| + | <pre> | ||

| + | void parallel_scan( const Range& range, Body& body [, partitioner] ); | ||

| + | |||

| + | </pre> | ||

| + | |||

| + | |||

| + | <u>'''parallel_invoke:'''</u> Provides support for parallel calling to functions provided in the arguments. It is defined within the header "'''tbb/parallel_invoke.h'''" and is coded as: <pre> | ||

| + | tbb:parallel_invoke(myFuncA, myFuncB, myFuncC); | ||

| + | </pre> | ||

| + | |||

| + | ==Lock Convoying Problem== | ||

| + | ===What is a Lock?=== | ||

| + | |||

| + | A Lock(also called "mutex") is a method for programmers to secure code that when executing in parallel can cause multiple threads to fight for a resource/container for some operation. When threads work in parallel to complete a task with containers, there is no indication when the thread reach the container and need to perform an operation on it. This causes problems when multiple threads are accessing the same place. When doing an insertion on a container with threads, we must ensure only 1 thread is capable of pushing to it or else threads may fight for control. By "Locking" the container, we ensure only 1 thread accesses at any given time. | ||

| + | |||

| + | To use a lock, you program must be working in parallel(ex #include <thread>) and should be completing something in parallel. You can find c++11 locks with #include <mutex> | ||

| + | |||

| + | Code example or Picture here ^_^ | ||

| + | <pre> | ||

| + | #include <iostream> | ||

| + | #include <thread> | ||

| + | #include <mutex> | ||

| + | |||

| + | //Some threads are spawned which call this function | ||

| + | //Declared the following within the class std::mutex NightsWatch; | ||

| + | void GameOfThronesClass::GuardTheWall(){ | ||

| + | |||

| + | //Protect until Unlock() is called. Only 1 thread may do this below at a time. It is //"locked" | ||

| + | NightsWatch.Lock(); | ||

| + | |||

| + | //Increment | ||

| + | DaysWithoutWhiteWalkerAttack++; | ||

| + | std::cout << "It has been " << DaysWithoutWhiteWalkerAttack << " since the last attack at Castle Black!\n"; | ||

| + | |||

| + | //Allow Next thread to execute the above iteration | ||

| + | NightsWatch.Unlock(); | ||

| + | |||

| + | |||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Note that there can be problems with locks. If a thread is locked but it is never unlocked, any other threads will be forced to wait which may cause performance issues. Another problem is called "Dead Locking" where each thread may be waiting for another to unlock (and vice versa) and the program is forced to wait and wait . | ||

| + | |||

| + | ===Parallelism Problems & Convoying in STL=== | ||

| + | |||

| + | Within STL, issues arise when you attempt to access containers in parallel. With containers, when threads update the container say with push back, it is difficult to determine where the insertion occurred within the container(each thread is updating this container in any order) additionally, the size of the container is unknown as each thread may be updating the size as it goes (thread A may see a size of 4 while thread B a size of 9). | ||

| + | For example, in a vector we can push some data to it in parallel but knowing where that data was pushed to requires us to iterate through the vector for the exact location . | ||

| + | If we attempt to find the data in parallel with other operations ongoing, 1 thread could search for the data, but another could update the vector size during that time which causes problems with thread 1's search as the memory location may change should the vector need to grow(performs a deep copy to new memory). | ||

| + | |||

| + | Locks can solve the above issue but cause significant performance issues as the threads are forced to wait for each other. This performance hit is known as '''Lock Convoying'''. | ||

| + | |||

| + | [[File:DarthVector ThreadLock.PNG |thumb|center|400px| Performance issues inside STL]] | ||

| + | |||

| + | ===Lock Convoying in TBB=== | ||

| + | TBB attempts to mitigate the performance issue from parallel code when accessing or completing an operation on a container through its own containers such as concurrent_vector. | ||

| + | Through '''concurrent_vector''', every time an element is accessed/changed, a return of the index location is given. TBB promises that any time an element is pushed, it will always be in the same location, no matter if the size of the vector changes in memory. With a standard vector, when the size of the vector changes, the data is copied over. If any threads are currently traversing this vector when the size changes, any iterators may no longer be valid. | ||

| + | |||

| + | |||

| + | |||

| + | Study Ref: https://software.intel.com/en-us/blogs/2008/10/20/tbb-containers-vs-stl-performance-in-the-multi-core-age | ||

| + | This leads into Concurrent_vector growing below.. | ||

| + | |||

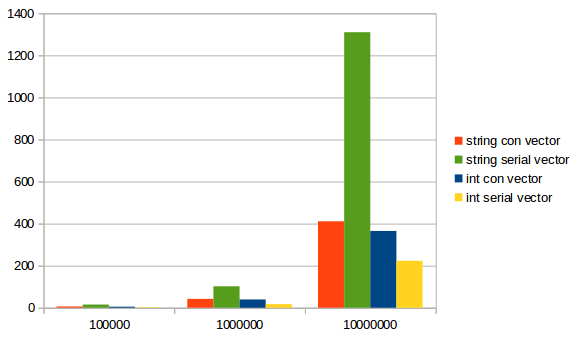

| + | ==Efficiency Comparison Parallel for and concurrent_vector== | ||

| + | |||

| Line 151: | Line 229: | ||

[[File:Vector_speed.png]] | [[File:Vector_speed.png]] | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

''If only you knew the power of the Building Blocks'' | ''If only you knew the power of the Building Blocks'' | ||

Revision as of 22:31, 14 December 2017

TEAM, use this for formatting. Wiki Editing Cheat Sheet

Join me, and together we can fork the problem as master and thread

Members

Alistair Godwin

Giorgi Osadze

Leonel Jara

Contents

[hide]Generic Programming

Generic Programming is a an objective when writing code to make algorithms reusable and with the least amount of specific code. An example of generic code is STL's templating functions which provide generic code that can be used with many different types without requiring much specific coding for the type( an addition template could be used for int, double, float, short, etc without requiring re-coding). A non-generic library requires types to be specified, meaning more type-specific code has to be created.

TBB Background

<TODO>

STL Background

<Todo>

List of STL Functions:

Algorithms Are supported by STL for various algorithms such as sorting, searching and accumulation. All can be found within the header "<algorithm>". Examples include sort() and reverse functions()

STL iterators Are supported for serial traversal. Should you use an iterator in parallel, you must be cautious to not change the data while a thread is going through the iterator. They are defined within the header "<iterator>" and is coded as

#include<iterator>

foo(){

vector<type> myVector;

vector<type>::iterator i;

for( i = myVector.begin(); i < myVector.end(); i++){

bar();

}

}

Containers STL supports a variety of containers for data storage. Generally these containers are supported in parallel for read actions, but does not safely support writing to the container with or without reading at the same time. There are several header files that are included such as "<vector>", "<queue>", and "<deque>".

List of TBB containers

concurrent_queue : This is the concurrent version of the STL container Queue. This container supports first-in-first-out data storage like its STL counterpart. Multiple threads may simultaneously push and pop elements from the queue. Queue does NOT support and front() or back() in concurrent operations(the front could change while accessing). Also supports iterations, but are slow and are only intended for debug. This is defined within the header "tbb/concurrent_queue.h" and is coded as:#include <tbb/concurrent_queue.h> //....// tbb:concurrent_queue<typename> name;concurrent_vector : This is a container class for vectors with concurrent(parallel) support. These vectors do not support insertion or erase operations but support operations done by multiple threads. Note that when elements are inserted, they cannot be removed without calling the clear() member function on it, which removes every element in the array. This is defined within the header "tbb/concurrent_vector.h" and is coded as:

#include <tbb/concurrent_vector.h> //...// tbb:concurrent_vector<typename> name;

concurrent_hash_map : A container class that supports hashing in parallel. The generated keys are not ordered and there will always be at least 1 element for a key. Defined within "tbb/concurrent_hash_map.h"

List of TBB Algorithms

parallel_for: Provides concurrent support for for loops. This allows data to be divided up into chunks that each thread can work on. The code is defined in "tbb/parallel_for.h" and takes the template of:

foo parallel_for(firstPos, lastPos, increment { boo()}

parallel_scan: Provides concurrent support for a parallel scan. Intel promises it may invoke the function up to 2 times the amount when compared to the serial algorithm. The code is defined in "tbb/parallel_scan.h" and according to intel takes the template of:

void parallel_scan( const Range& range, Body& body [, partitioner] );

tbb:parallel_invoke(myFuncA, myFuncB, myFuncC);

Lock Convoying Problem

What is a Lock?

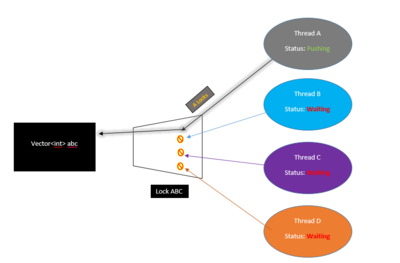

A Lock(also called "mutex") is a method for programmers to secure code that when executing in parallel can cause multiple threads to fight for a resource/container for some operation. When threads work in parallel to complete a task with containers, there is no indication when the thread reach the container and need to perform an operation on it. This causes problems when multiple threads are accessing the same place. When doing an insertion on a container with threads, we must ensure only 1 thread is capable of pushing to it or else threads may fight for control. By "Locking" the container, we ensure only 1 thread accesses at any given time.

To use a lock, you program must be working in parallel(ex #include <thread>) and should be completing something in parallel. You can find c++11 locks with #include <mutex>

Code example or Picture here ^_^

#include <iostream>

#include <thread>

#include <mutex>

//Some threads are spawned which call this function

//Declared the following within the class std::mutex NightsWatch;

void GameOfThronesClass::GuardTheWall(){

//Protect until Unlock() is called. Only 1 thread may do this below at a time. It is //"locked"

NightsWatch.Lock();

//Increment

DaysWithoutWhiteWalkerAttack++;

std::cout << "It has been " << DaysWithoutWhiteWalkerAttack << " since the last attack at Castle Black!\n";

//Allow Next thread to execute the above iteration

NightsWatch.Unlock();

}

Note that there can be problems with locks. If a thread is locked but it is never unlocked, any other threads will be forced to wait which may cause performance issues. Another problem is called "Dead Locking" where each thread may be waiting for another to unlock (and vice versa) and the program is forced to wait and wait .

Parallelism Problems & Convoying in STL

Within STL, issues arise when you attempt to access containers in parallel. With containers, when threads update the container say with push back, it is difficult to determine where the insertion occurred within the container(each thread is updating this container in any order) additionally, the size of the container is unknown as each thread may be updating the size as it goes (thread A may see a size of 4 while thread B a size of 9). For example, in a vector we can push some data to it in parallel but knowing where that data was pushed to requires us to iterate through the vector for the exact location . If we attempt to find the data in parallel with other operations ongoing, 1 thread could search for the data, but another could update the vector size during that time which causes problems with thread 1's search as the memory location may change should the vector need to grow(performs a deep copy to new memory).

Locks can solve the above issue but cause significant performance issues as the threads are forced to wait for each other. This performance hit is known as Lock Convoying.

Lock Convoying in TBB

TBB attempts to mitigate the performance issue from parallel code when accessing or completing an operation on a container through its own containers such as concurrent_vector. Through concurrent_vector, every time an element is accessed/changed, a return of the index location is given. TBB promises that any time an element is pushed, it will always be in the same location, no matter if the size of the vector changes in memory. With a standard vector, when the size of the vector changes, the data is copied over. If any threads are currently traversing this vector when the size changes, any iterators may no longer be valid.

Study Ref: https://software.intel.com/en-us/blogs/2008/10/20/tbb-containers-vs-stl-performance-in-the-multi-core-age This leads into Concurrent_vector growing below..

Efficiency Comparison Parallel for and concurrent_vector

#include <iostream>

#include <tbb/tbb.h>

#include <tbb/concurrent_vector.h>

#include <vector>

#include <fstream>

#include <cstring>

#include <chrono>

#include <string>

using namespace std::chrono;

// define a stl and tbb vector

tbb::concurrent_vector<std::string> con_vector_string;

std::vector<std::string> s_vector_string;

tbb::concurrent_vector<int> con_vector_int;

std::vector<int> s_vector_int;

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<milliseconds>(span);

std::cout << msg << " - took - " <<

ms.count() << " milliseconds" << std::endl;

}

int main(int argc, char** argv){

if(argc != 2) { return 1; }

int size = std::atoi(argv[1]);

steady_clock::time_point ts, te;

/*

TEST WITH STRING OBJECT

*/

ts = steady_clock::now();

// serial for loop

for(int i = 0; i < size; ++i)

s_vector_string.push_back(std::string());

te = steady_clock::now();

reportTime("Serial vector speed - STRING: ", te-ts);

ts = steady_clock::now();

// concurrent for loop

tbb::parallel_for(0, size, 1, [&](int i){

con_vector_string.push_back(std::string());

});

te = steady_clock::now();

reportTime("Concurrent vector speed - STRING: ", te-ts);

/*

TEST WITH INT DATA TYPE

*/

std::cout<< "\n\n";

ts = steady_clock::now();

// serial for loop

for(int i = 0; i < size; ++i)

s_vector_int.push_back(i);

te = steady_clock::now();

reportTime("Serial vector speed - INT: ", te-ts);

ts = steady_clock::now();

// concurrent for loop

tbb::parallel_for(0, size, 1, [&](int i){

con_vector_int.push_back(i);

});

te = steady_clock::now();

reportTime("Concurrent vector speed - INT: ", te-ts);

}

If only you knew the power of the Building Blocks

Concept: Fine-grained locking

Multiple threads operate on the container by locking only those portions they really need to lock.

Concept: Lock-free algorithms

Bits of knowledge:

STL interfaces are inherently not thread-safe.

Threading Building Blocks containers are not templated with an allocator argument.

Links

http://www.cs.northwestern.edu/~riesbeck/programming/c++/stl-summary.html

http://www.cplusplus.com/reference/stl/

https://www.inf.ed.ac.uk/teaching/courses/ppls/TBBtutorial.pdf

Business Point of View Comparison for STL and TBB

Which library is better depending the on the use case?

One major aspect to look for when parallelizing a piece of code is the Cost- Benefit. Is it worth the time and effort to parallelize a part of your software only to get a small performance gain? Or is just faster to keep in serial? Many of these questions must be answered when deciding to parallelize your code or not.

TBB helps to lower the cost of smaller performance benefit. Due to TBB requiring less effort to implement. Compared to other multi-threading libraries

- If are just trying to parallelize a section of your code with a simple map, scan, or reduce pattern. Without much thought TBB has you covered.

- When working with large collections of data TBB with its use of block range coupled with it algorithms makes it simpler to come up with solutions for the collection

TBB enables you to specify logical parallelism instead of threads. It eliminates the time needed when developing a backbone for your code when working with threads. For quick and easy solutions for parallelize your code TBB is the way to go.

STL stands on its own right, with its well-designed serial features. When trying to have more control near hardware level, or you need to work near hardware level, the STL library with the threading libraries is the way to go. Though there are thread safety issues.

Conclusion

TBB only gives you parallel solutions, STL gives you the foundations for many serial algorithms for sorting, searching, and a verity of containers.

Implementation Safety for TBB and STL

We are all human and we do make mistakes. Less mistakes done by developers will equal to less wasted time.

- TBB specifically makes concurrent_vector container not to support insert and erase operations. Only new items can be pushed back, and cannot be shrunk.

- This prevents developers to write bad code. If for example, we would allow insert and erase operations on concurrent_vector, it could cause a big performance hit. This performance hit can burden both iterating and growing operations which will not only make the concurrent containers in TBB unless, but also your program inefficient.

- As already stated most of the STL containers are not thread safe. Though some operations in TBB containers are also not thread safe, like reserve() and clear() in concurrent_vector.

- Thread Creation, terminating, and synchronizing, partitioning is managed by TBB. This creates a layer of safety on the programmer’s end, has they do not have to deal with the threads themselves, making a developer less prone to make mistakes in their code.

Identifying the worries and responsibilities

The increasing complexity of your code is a natural problem when working in parallel. Knowing the responsibilities as in what you must worry about as a developer is key. When trying quickly implement parallel regions in your code, or to just to keep your code serial.

STL

If you are going to try to parallelize your code using STL coupled with the threading libraries this is what you must worry

- Thread Creation, terminating, and synchronizing, partitioning, thread creation, and management must be handled by you. This increases the work load and the complexity.

- The thread creation and overall resource for STL is managed by a combination of libraries.

- Dividing collection of data is more of the problem when using the STL containers. Also mentioning that is not thread safe.

- C++11 does not have any parallel algorithms. So, any common parallel patterns such as; map, scan, reduce, must be implemented by yourself, or by another library. But the latest STL C++17, will have some parallel algorithms like scan, map, and reduce.

The benefit of STL is due to the fact that you must manage the thread/ resources yourself which give you more control on the code, and fine tuning optimizations. Nonetheless, managing the thread yourself can be a double edge sword since with more control, it will take time implementing the code and the level of complexity will increase.

What you don’t need to worry about

- Making sorting, searching algorithms.

- Partitioning data.

- Array algorithms; like copying, assigning, and checking data

- Types of data storage

- Value pairing

Note all algorithms is done in serial, and may not be thread safe

TBB

Thread Creation, terminating, and synchronizing, partitioning, thread creation, and management is managed by TBB. This make you need not to worry about the heavy constructs of threads which are close to the hardware level.

- Making a solution from close to hardware level allows you to be flexible to the solution you are wanting to make. But the major downside is the requirement of implementing the foundations first to make your solution work. It also has the potential of making your program inefficient if not done correctly.

TBB does have Parallel Algorithms support, that has been already mentioned.

Benefit

The benefit of TBB is that it is made in such a way that you as a programmer can only worry about one thing; how to parallelize your serial code. You do not need to worry about the resource management. The TBB model allows you to make quick parallel implementation solutions with less amount of effort.

Downside

The downside of TBB is since much of the close to hard hardware management is done be hide the scenes, it makes you has a developer have less control on finetuning your program. Unlike how STL with the threading library allows you to do.