DPS921/Halt and Catch Fire

Revision as of 19:34, 1 December 2016

Halt and Catch Fire

Project: Parallelism with Go

Group Members

- Colin Paul [1] Research etc.

Progress

Oct 31st:

- Picked topic

- Picked presentation date.

- Created Wiki page

Nov 28th:

- Presented topic

Parallel Programming in Go

What is Go?

- The Go language is an open source project to make programmers more productive.

- It is expressive, concise, clean, and efficient. Its concurrency mechanisms make it easy to write programs that get the most out of multi-core and networked machines, while its novel type system enables flexible and modular program construction. Go compiles quickly to machine code yet has the convenience of garbage collection and the power of run-time reflection. It's a fast, statically typed, compiled language that feels like a dynamically typed, interpreted language.

By what means does Go allow parallelism?

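- Go supports multi-core programming through its concurrency primitives, goroutines and channels. The Go runtime can schedule goroutines across multiple cores, which is what makes parallel execution possible.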
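As a minimal sketch of those concurrency mechanisms, the program below splits a slice into chunks, sums the squares of each chunk in its own goroutine, and collects the partial sums over a channel. The helper name `sumSquares` and its `workers` parameter are assumptions made for illustration; they are not part of the original demo.

```go
package main

import "fmt"

// sumSquares is a hypothetical helper: it splits nums into at most
// `workers` chunks, sums the squares of each chunk in a separate
// goroutine, and adds up the partial sums received over a channel.
func sumSquares(nums []int, workers int) int {
	if workers < 1 {
		workers = 1
	}
	partial := make(chan int, workers)
	chunk := (len(nums) + workers - 1) / workers // ceiling division
	launched := 0
	for start := 0; start < len(nums); start += chunk {
		end := start + chunk
		if end > len(nums) {
			end = len(nums)
		}
		launched++
		go func(part []int) {
			s := 0
			for _, n := range part {
				s += n * n
			}
			partial <- s // send this chunk's result to the collector
		}(nums[start:end])
	}
	total := 0
	for i := 0; i < launched; i++ {
		total += <-partial // receive one partial sum per goroutine
	}
	return total
}

func main() {
	fmt.Println(sumSquares([]int{1, 2, 3, 4, 5, 6, 7, 8}, 4)) // prints 204
}
```

The code only describes independent pieces of work. Whether those pieces actually run at the same time is up to the runtime and the number of cores available.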

TL;DR

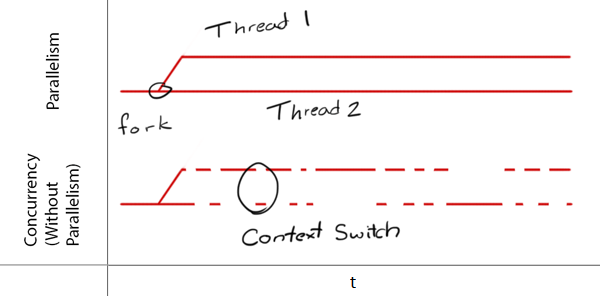

What is parallelism?

- Parallelism is the simultaneous execution of (possibly related) computations.

What is concurrency?

- Concurrency is the composition of independently executing computations.

- It is a way to structure software, particularly as a way to write clean code that interacts well with the real world.

- It is not parallelism, but it enables it.

- If you have only one processor, your program can still be concurrent but it cannot be parallel.

- On the other hand, a well-written concurrent program might run efficiently in parallel on a multi-processor.
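The single-processor point can be illustrated with a small sketch. The function `runTasks` and its parameters are hypothetical names chosen for this example. It limits the scheduler with `runtime.GOMAXPROCS`, starts several goroutines, and waits for them with a `sync.WaitGroup`. With one processor the goroutines are still concurrent, but they take turns instead of running in parallel.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// runTasks is a hypothetical helper: it starts n goroutines while the
// scheduler is limited to `procs` threads executing Go code at once,
// and returns the value computed by each goroutine.
func runTasks(n, procs int) []int {
	prev := runtime.GOMAXPROCS(procs) // returns the previous setting
	defer runtime.GOMAXPROCS(prev)    // restore it when we are done

	results := make([]int, n)
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			results[id] = id * id // each goroutine writes only its own slot
		}(i)
	}
	wg.Wait() // block until every goroutine has finished
	return results
}

func main() {
	// Concurrent but not parallel: one processor runs all goroutines.
	fmt.Println(runTasks(4, 1)) // prints [0 1 4 9]
	// Same program structure, now allowed to run in parallel.
	fmt.Println(runTasks(4, runtime.NumCPU())) // prints [0 1 4 9]
}
```

The result is the same in both calls because the structure of the program does not change. Only the way it is executed changes.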

Concurrency vs parallelism

- Concurrency is about dealing with lots of things at once.

- Parallelism is about doing lots of things at once.

- Not the same, but related.

- Concurrency is about structure, parallelism is about execution.

- Concurrency provides a way to structure a solution to solve a problem that may (but not necessarily) be parallelizable.

Examples of things that use a concurrent model

- I/O - Mouse, keyboard, display, and disk drivers.

Examples of things that use a parallel model

- GPU - performing vector dot products.

Up and running with Go

Download and installation

Documentation

Playground

Demo: Concurrency and parallelism in Go

Monte Carlo Simulations

- A probabilistic way to answer a mathematical question by running a large number of simulations, used when you cannot get a closed-form solution or want to double-check one.

- Here we use it to estimate the value of π (pi), approximately 3.1415926535.

Method

- Draw a square, then inscribe a circle within it.

- Uniformly scatter some objects of uniform size (grains of rice or sand) over the square.

- Count the number of objects inside the circle and the total number of objects.

- The ratio of the two counts is an estimate of the ratio of the two areas, which is π/4. Multiply the result by 4 to estimate π.

Pseudo implementation

- To keep the simulation simple, we'll use a unit square with sides of length 1 and the quarter circle of radius 1 centered on one of its corners.

- That makes our final ratio: (π × 1² / 4) / 1² = π/4

- We just need to multiply by 4 to get π.
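The ratio above can be written out as a short derivation. The symbols N (total number of points) and N_in (points with x² + y² ≤ 1) are introduced here for illustration and do not appear in the original notes.

```latex
\[
P\bigl(x^2 + y^2 \le 1\bigr)
  = \frac{\text{area of quarter circle}}{\text{area of unit square}}
  = \frac{\pi \cdot 1^2 / 4}{1^2}
  = \frac{\pi}{4}
\]
\[
\frac{N_{\text{in}}}{N} \approx \frac{\pi}{4}
\quad\Longrightarrow\quad
\pi \approx 4 \cdot \frac{N_{\text{in}}}{N}
\]
```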

Implementation

Serial

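    import (
        "math/rand"
        "time"
    )

    // PI estimates π by sampling points uniformly in the unit square and
    // counting how many fall inside the quarter circle of radius 1.
    func PI(samples int) float64 {
        inside := 0
        // Private generator seeded from the clock, so each run differs.
        r := rand.New(rand.NewSource(time.Now().UnixNano()))
        for i := 0; i < samples; i++ {
            x := r.Float64()
            y := r.Float64()
            if x*x+y*y <= 1 {
                inside++
            }
        }
        ratio := float64(inside) / float64(samples)
        return ratio * 4
    }

Serial execution CPU profile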

As you can see, only one core is working to compute the value of π: CPU8 at 100%.

Parallel

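    import (
        "math/rand"
        "runtime"
        "time"
    )

    // MultiPI estimates π like PI, but splits the samples across one
    // goroutine per CPU and averages the per-goroutine estimates.
    func MultiPI(samples int) float64 {
        // Since Go 1.5 GOMAXPROCS defaults to NumCPU; kept for older versions.
        runtime.GOMAXPROCS(runtime.NumCPU())
        cpus := runtime.NumCPU()
        // Integer division: up to cpus-1 samples are dropped.
        threadSamples := samples / cpus
        results := make(chan float64, cpus)
        seed := time.Now().UnixNano()
        for j := 0; j < cpus; j++ {
            // Offset the seed per goroutine so goroutines started within the
            // same clock tick do not draw identical random sequences.
            go func(seed int64) {
                inside := 0
                r := rand.New(rand.NewSource(seed))
                for i := 0; i < threadSamples; i++ {
                    x, y := r.Float64(), r.Float64()
                    if x*x+y*y <= 1 {
                        inside++
                    }
                }
                results <- float64(inside) / float64(threadSamples) * 4
            }(seed + int64(j))
        }
        // Collect one estimate per goroutine and average them.
        var total float64
        for i := 0; i < cpus; i++ {
            total += <-results
        }
        return total / float64(cpus)
    }

Parallel execution CPU profile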

As you can see, all cores are working to compute the value of π: CPU1-8 at 100%.

Results - Efficiency profile

Profiling the execution time at a computation hot spot for both the serial and parallel versions of the Monte Carlo simulation program.

We can see that the parallel version gives us roughly a 4x performance gain.

Results - Serial vs parallel Performance

Evaluating the time it takes the serial and parallel versions of the program to run 1,000,000,000 (1 billion) Monte Carlo iterations.

We can see that the parallel version gives us roughly a 4x performance gain, matching our benchmark results.
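A comparison like this could be timed with a small harness along the following lines. This is a sketch under stated assumptions, not the code used to produce the results above. The names `countInside`, `serialPi`, `parallelPi`, and `timeIt` are hypothetical, the seeds are fixed so runs are repeatable, and the sample count is reduced so the program finishes quickly. The measured speedup depends on the machine and is not guaranteed to match the figures reported here.

```go
package main

import (
	"fmt"
	"math/rand"
	"runtime"
	"sync"
	"time"
)

// countInside draws `samples` points in the unit square and counts
// those that fall inside the quarter circle of radius 1.
func countInside(samples int, seed int64) int {
	r := rand.New(rand.NewSource(seed))
	inside := 0
	for i := 0; i < samples; i++ {
		x, y := r.Float64(), r.Float64()
		if x*x+y*y <= 1 {
			inside++
		}
	}
	return inside
}

// serialPi estimates π on a single goroutine.
func serialPi(samples int) float64 {
	return 4 * float64(countInside(samples, 1)) / float64(samples)
}

// parallelPi splits the samples across `workers` goroutines and
// combines the raw counts, so no samples are lost to rounding.
func parallelPi(samples, workers int) float64 {
	if workers < 1 {
		workers = 1
	}
	counts := make([]int, workers)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		n := samples / workers
		if w == 0 {
			n += samples % workers // first worker takes the remainder
		}
		wg.Add(1)
		go func(w, n int) {
			defer wg.Done()
			counts[w] = countInside(n, int64(w)+1) // distinct seed per worker
		}(w, n)
	}
	wg.Wait()
	total := 0
	for _, c := range counts {
		total += c
	}
	return 4 * float64(total) / float64(samples)
}

// timeIt runs f once and returns its result and wall-clock duration.
func timeIt(f func() float64) (float64, time.Duration) {
	start := time.Now()
	v := f()
	return v, time.Since(start)
}

func main() {
	const samples = 10000000 // far fewer than the 1 billion used above
	workers := runtime.NumCPU()
	s, ts := timeIt(func() float64 { return serialPi(samples) })
	p, tp := timeIt(func() float64 { return parallelPi(samples, workers) })
	fmt.Printf("serial:   pi ~ %.6f in %v\n", s, ts)
	fmt.Printf("parallel: pi ~ %.6f in %v (%d workers)\n", p, tp, workers)
	fmt.Printf("speedup:  %.2fx\n", ts.Seconds()/tp.Seconds())
}
```

Summing the raw counts and dividing once avoids the small bias that comes from averaging per-goroutine estimates when the sample count does not divide evenly.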