Difference between revisions of "A-Team"

Spdjurovic (talk | contribs) (→Sebastian's findings) |

Spdjurovic (talk | contribs) (→Sebastian's findings) |

||

| Line 11: | Line 11: | ||

=====Neural Network===== | =====Neural Network===== | ||

======Sebastian's findings====== | ======Sebastian's findings====== | ||

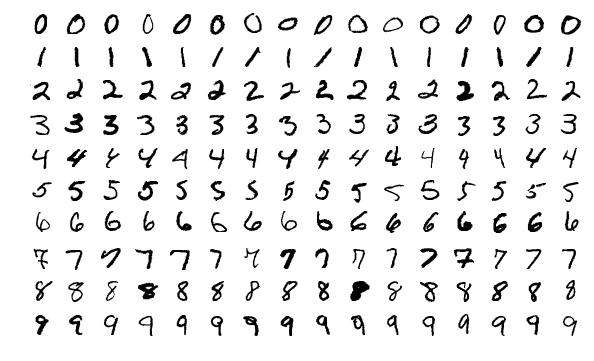

| − | I found a simple [https://gist.github.com/sbugrov/7f373f0e4788f8e076b8efa2abfd227a.js neural network] that takes a MNIST data set and preforms training on batches of the data. For a quick illustration MNIST is a numerical data set that contains many written numbers | + | I found a simple [https://gist.github.com/sbugrov/7f373f0e4788f8e076b8efa2abfd227a.js neural network] that takes a MNIST data set and preforms training on batches of the data. For a quick illustration MNIST is a numerical data set that contains many written numbers --in a gray scale format at 28 x28 pixels in size. As well as the corresponding numerical values; between 0 and 9. The reason for this data set is to train networks such that they will be able to recognize written numbers when they are confronted with them.[[File:MnistExamples.png]] |

=====Initial Profile===== | =====Initial Profile===== | ||

Revision as of 15:33, 7 March 2019


Back Propagation Acceleration

Team Members

- Sebastian Djurovic, Team Lead and Developer

- Henry Leung, Developer and Quality Control

- ...

Progress

Assignment 1

Our group decided to profile a couple of different solutions, starting with a simple neural network and a ray tracing solution, in order to determine which project is the best candidate to build our solution on.

Neural Network

Sebastian's findings

I found a simple [neural network](https://gist.github.com/sbugrov/7f373f0e4788f8e076b8efa2abfd227a.js) that takes the MNIST data set and performs training on batches of the data. As a quick illustration, MNIST is a data set of handwritten digits stored as 28 x 28 pixel grayscale images, together with the corresponding numerical value of each digit, between 0 and 9. The purpose of this data set is to train networks so that they can recognize handwritten digits when they are confronted with them.

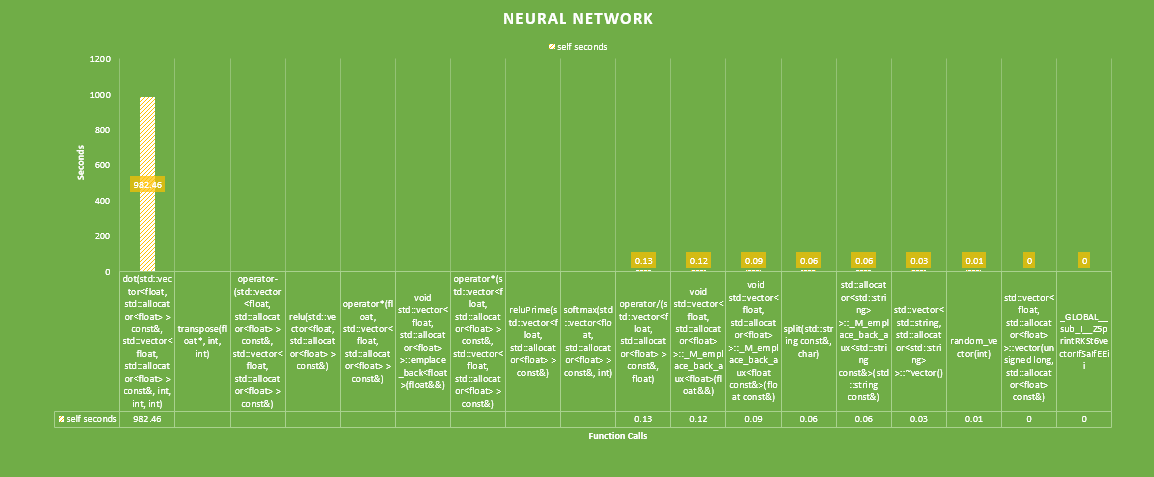
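To make the data layout concrete, here is a minimal sketch of how one MNIST sample could be held in memory: a 28 x 28 image flattened to 784 floats and a digit label expanded to a 10-element one-hot vector. The helper names (`normalize_pixels`, `one_hot`) and the choice to scale pixels by 255 are assumptions for illustration and are not taken from the profiled network.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical constants and helpers, for illustration only.
constexpr int kImageSide = 28;
constexpr int kPixels = kImageSide * kImageSide;  // 784 values per image
constexpr int kClasses = 10;                      // digits 0 through 9

// Scale raw 8-bit grayscale pixels to floats in [0, 1] (assumed preprocessing).
std::vector<float> normalize_pixels(const std::vector<std::uint8_t>& raw) {
    assert(raw.size() == static_cast<std::size_t>(kPixels));
    std::vector<float> out;
    out.reserve(raw.size());
    for (std::uint8_t p : raw) {
        out.push_back(p / 255.0f);
    }
    return out;
}

// One-hot encode a digit label: all zeros except a 1 at index `label`.
std::vector<float> one_hot(int label) {
    assert(label >= 0 && label < kClasses);
    std::vector<float> out(kClasses, 0.0f);
    out[label] = 1.0f;
    return out;
}
```

A training batch would then be a set of such 784-float inputs paired with their 10-float targets.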

Initial Profile

    Flat profile:

    Each sample counts as 0.01 seconds.
       %  cumulative     self                self    total
      time   seconds  seconds      calls  ns/call  ns/call  name
     97.94    982.46   982.46                               dot(std::vector<float, std::allocator<float> > const&, std::vector<float, std::allocator<float> > const&, int, int, int)
      1.45    997.05    14.58                               transpose(float*, int, int)
      0.15    998.56     1.51                               operator-(std::vector<float, std::allocator<float> > const&, std::vector<float, std::allocator<float> > const&)
      0.15   1000.06     1.50                               relu(std::vector<float, std::allocator<float> > const&)
      0.15   1001.55     1.49                               operator*(float, std::vector<float, std::allocator<float> > const&)
      0.07   1002.27     0.72  519195026     1.39     1.39  void std::vector<float, std::allocator<float> >::emplace_back<float>(float&&)
      0.06   1002.91     0.63                               operator*(std::vector<float, std::allocator<float> > const&, std::vector<float, std::allocator<float> > const&)
      0.05   1003.37     0.46                               reluPrime(std::vector<float, std::allocator<float> > const&)
      0.02   1003.62     0.25                               softmax(std::vector<float, std::allocator<float> > const&, int)
      0.01   1003.75     0.13                               operator/(std::vector<float, std::allocator<float> > const&, float)
      0.01   1003.87     0.12     442679   271.35   271.35  void std::vector<float, std::allocator<float> >::_M_emplace_back_aux<float>(float&&)
      0.01   1003.96     0.09   13107321     6.87     6.87  void std::vector<float, std::allocator<float> >::_M_emplace_back_aux<float const&>(float const&)
      0.01   1004.02     0.06                               split(std::string const&, char)
      0.01   1004.08     0.06     462000   130.00   130.00  void std::vector<std::string, std::allocator<std::string> >::_M_emplace_back_aux<std::string const&>(std::string const&)
      0.00   1004.11     0.03                               std::vector<std::string, std::allocator<std::string> >::~vector()
      0.00   1004.12     0.01                               random_vector(int)
      0.00   1004.12     0.00          3     0.00     0.00  std::vector<float, std::allocator<float> >::vector(unsigned long, std::allocator<float> const&)
      0.00   1004.12     0.00          1     0.00     0.00  _GLOBAL__sub_I__Z5printRKSt6vectorIfSaIfEEii
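The profile attributes 97.94% of the samples to `dot`, whose signature (two flat `std::vector<float>` arguments plus three `int` arguments) suggests a matrix product over flattened matrices. The following is a minimal sketch of such a routine, assuming row-major storage and assuming the three integers are the rows of the first matrix, its columns, and the columns of the second matrix. The name `dot_sketch` and the parameter names are hypothetical; only the argument types come from the profile.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Naive row-major matrix product: (m1_rows x m1_cols) * (m1_cols x m2_cols).
// Parameter meanings are assumptions; the profile only shows the types.
std::vector<float> dot_sketch(const std::vector<float>& m1,
                              const std::vector<float>& m2,
                              int m1_rows, int m1_cols, int m2_cols) {
    assert(m1.size() == static_cast<std::size_t>(m1_rows) * m1_cols);
    assert(m2.size() == static_cast<std::size_t>(m1_cols) * m2_cols);

    std::vector<float> out(static_cast<std::size_t>(m1_rows) * m2_cols, 0.0f);
    for (int r = 0; r < m1_rows; ++r) {
        for (int c = 0; c < m2_cols; ++c) {
            // Each output element is an independent sum of products.
            float sum = 0.0f;
            for (int k = 0; k < m1_cols; ++k) {
                sum += m1[r * m1_cols + k] * m2[k * m2_cols + c];
            }
            out[r * m2_cols + c] = sum;
        }
    }
    return out;
}
```

If the real `dot` has this shape, its cost grows with `m1_rows * m1_cols * m2_cols`, and every output element can be computed independently of the others, which would make it the natural first target for acceleration.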