Difference between revisions of "DPS921/Group 8"

Revision as of 09:36, 26 November 2018

Group 8

Our Project: Analyzing False Sharing - Case Studies

https://wiki.cdot.senecacollege.ca/wiki/DPS921/Group_8

Group Members

False Sharing in Parallel Programming

Introduction

Location of the problem - Local cache

Signs of false sharing

Solutions

We will now explore two typical ways to deal with false sharing in an OMP environment.

Padding

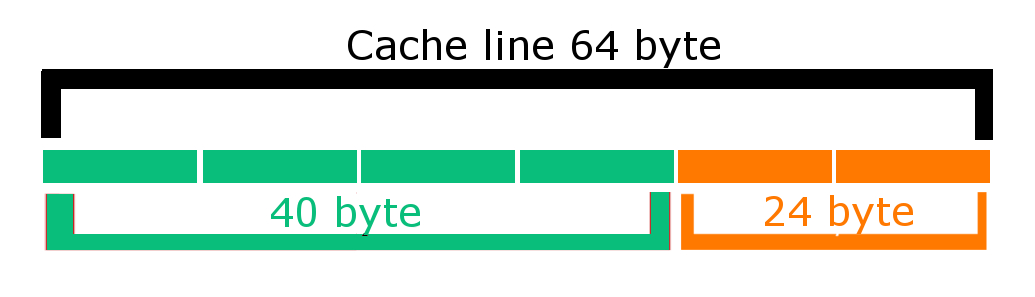

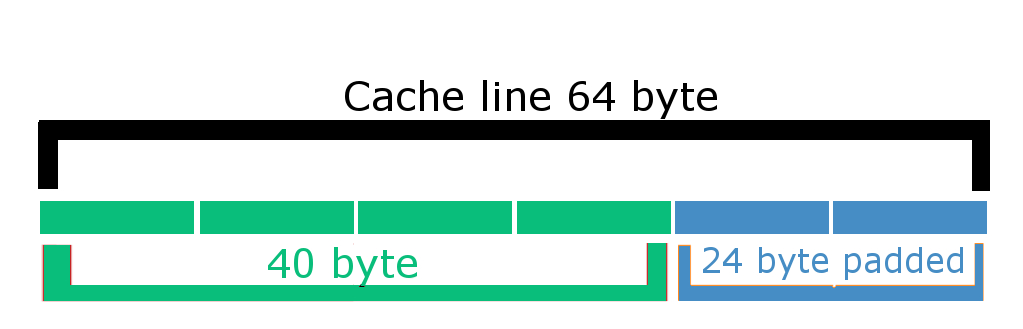

One way to eliminate false sharing is to add padding to the data. Padding is normally used for memory alignment; here it spaces the elements out so that each one occupies its own cache line, which stops a write by one thread from invalidating the cache line that another thread is reading or writing.

How padding works: Suppose we have an array of 10 int elements, int num[10]. With 4-byte ints it occupies 40 bytes in memory (10 * 4 bytes), while a typical cache line is 64 bytes. The remaining 24 bytes need to be padded; otherwise another variable will occupy that region, and two or more threads accessing the same cache line will cause false sharing.

____________________________________________________________________________________________________________________________________________________________

    // Test.cpp: test padding execution time
    #include "pch.h"  // Visual Studio precompiled header; must come first (remove on other toolchains)
    #include <iostream>
    #include <iomanip>
    #include <cstdlib>
    #include <chrono>
    #include <algorithm>
    #include <omp.h>
    using namespace std::chrono;

    struct MyStruct
    {
        float value;
        int padding[24];  // 96 filler bytes (4-byte int): elements sit 100 bytes apart, more than one 64-byte line
    };

    int main(int argc, char** argv)
    {
        MyStruct testArr[4] = {};  // zero-initialize so value is never read uninitialized

        omp_set_num_threads(3);
        double start_time = omp_get_wtime();

    #pragma omp parallel for
        for (int i = 0; i < 4; i++) {
            for (int j = 0; j < 10000; j++) {
                //#pragma omp critical
                testArr[i].value = testArr[i].value + 2;
            }
        }

        double time = omp_get_wtime() - start_time;
        std::cout << "Execution Time: " << time << std::endl;

        return 0;
    }

____________________________________________________________________________________________________________________________________________________________

Synchronization

The other way to eliminate false sharing is to use a mutual exclusion construct. This can be a better method than padding, because no memory is wasted on filler bytes and data access is not hindered by cache line invalidation. In an OpenMP environment, mutual exclusion is implemented with the critical construct, which restricts the enclosed statements to one thread at a time. Making the working variables local to each thread, and entering the critical section only to combine the results, ensures that multiple threads do not write data to the same cache line.