Difference between revisions of "GPU621/Fighting Mongooses"

Kyle Klerks (→Fighting Mongooses: Added content)


Revision as of 14:47, 5 December 2016

Contents

- 1 Fighting Mongooses

- 1.1 Group Members

- 1.2 Introduction

- 1.3 STL

- 1.4 TBB

- 1.5 BOOST

- 1.6 STD(PPL) – since Visual Studio 2015

- 1.7 Concurrency Runtime

- 1.8 Task Scheduler

- 1.9 Resource Manager

- 1.10 Asynchronous Agents Library

- 1.11 Auto-Parallelizer

- 1.12 C++ AMP (C++ Accelerated Massive Parallelism)

- 1.13 AMP Tiling

- 1.14 *A note on AMP and tiling

- 1.15 A simple for_Each Comparison

- 1.16 Comparing STL/PPL to TBB: Sorting Algorithm

- 1.17 Conclusion

- 1.18 References

Fighting Mongooses

Group Members

- Mike Doherty, Everything.

- Kyle Klerks, Everything.

Entry on: October 31st 2016

The group page (Fighting Mongooses) has been created. The suggested project topic is being considered:

- C++11 STL Comparison to TBB

Once the project topic is confirmed by Professor Chris Szalwinski, I will be able to proceed with topic research.

Entry on: November 30th 2016

Introduction

Before parallelizing a program, there are decisions to make: should you parallelize it at all, and if so, which method or libraries should you use? To compare two specific possibilities: is it better to use standardized C++ solutions, or Threading Building Blocks?

The main issue with parallelism as it exists currently in C++ is that it is very much a Wild West scenario where external companies are the ones spearheading the parallel movement. That isn’t to say that there are no native options for parallelization in C++, but aside from experimental and non-finalized solutions there is not much to work with other than std::thread, which creates threads manually, or the Boost library.
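To make the "manual" option concrete, the following is a minimal sketch of splitting a sum across threads with std::thread. The function name `parallel_sum` and its `num_threads` parameter are hypothetical, chosen only for this illustration; a real program would also need to consider chunk sizes and false sharing.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Sums `data` by giving each thread one contiguous chunk.
// `parallel_sum` and `num_threads` are hypothetical names for this sketch.
long long parallel_sum(const std::vector<int>& data, std::size_t num_threads) {
    assert(num_threads > 0);
    std::vector<long long> partial(num_threads, 0);  // one slot per thread
    std::vector<std::thread> workers;
    const std::size_t chunk = (data.size() + num_threads - 1) / num_threads;

    for (std::size_t t = 0; t < num_threads; ++t) {
        const std::size_t begin = std::min(t * chunk, data.size());
        const std::size_t end = std::min(begin + chunk, data.size());
        workers.emplace_back([&data, &partial, t, begin, end] {
            const auto first = data.begin() + static_cast<std::ptrdiff_t>(begin);
            const auto last = data.begin() + static_cast<std::ptrdiff_t>(end);
            partial[t] = std::accumulate(first, last, 0LL);
        });
    }
    // Every thread must be joined before the partial results are read.
    for (auto& w : workers) w.join();
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

All of the partitioning, thread creation, and joining is written by hand here, which is the bookkeeping that libraries such as TBB and PPL are meant to take over.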

That said, while the C++11 standard solutions are considered ‘experimental’, they are largely functional and comparable to TBB functionality in most cases in terms of efficiency.

STL

The Standard Template Library (STL) is a software library for the C++ programming language that influenced many parts of the C++ Standard Library. It provides four components called algorithms, containers, functions, and iterators. Parallelization has been proposed for inclusion in the STL since as early as 2006, with some success (more on this later).

TBB

TBB (Threading Building Blocks) is a high-level, general-purpose, feature-rich C++ template library for parallel programming using threads. It includes a variety of containers and algorithms that execute in parallel and has been designed to work without requiring any change to the compiler. It uses task parallelism; vectorization is not supported.

BOOST

Boost provides free, peer-reviewed, portable C++ source libraries. Boost emphasizes libraries that work well with the C++ Standard Library. Boost libraries are intended to be widely useful, and usable across a broad spectrum of applications. Ten Boost libraries are included in the C++ Standards Committee's Library Technical Report (TR1) and in the new C++11 Standard. C++11 also includes several more Boost libraries in addition to those from TR1. More Boost libraries are proposed for standardization in C++17.

Since 2006, a week-long annual conference related to Boost called C++ Now has been held in Aspen, Colorado each May. Boost has been a participant in the annual Google Summer of Code since 2007.

STD(PPL) – since Visual Studio 2015

The Parallel Patterns Library (PPL) provides general-purpose containers and algorithms for performing fine-grained parallelism. The PPL enables imperative data parallelism by providing parallel algorithms that distribute computations on collections or on sets of data across computing resources. It also enables task parallelism by providing task objects that distribute multiple independent operations across computing resources. To use PPL classes and functions, simply include the ppl.h header file.
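PPL itself requires the Microsoft toolchain, so the sketch below uses std::async from standard C++ as a portable stand-in to illustrate the task-parallel idea of running independent operations at the same time. It is not PPL code; with ppl.h, facilities such as concurrency::parallel_invoke or concurrency::task_group would play this role. The function name `sum_and_max` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <future>
#include <numeric>
#include <utility>
#include <vector>

// Runs two independent operations (a sum and a maximum) as separate tasks.
// Stand-in sketch using std::async, not the PPL API.
std::pair<long long, int> sum_and_max(const std::vector<int>& values) {
    assert(!values.empty());
    auto sum_task = std::async(std::launch::async, [&values] {
        return std::accumulate(values.begin(), values.end(), 0LL);
    });
    auto max_task = std::async(std::launch::async, [&values] {
        return *std::max_element(values.begin(), values.end());
    });
    // get() blocks until the corresponding task has finished.
    const long long sum = sum_task.get();
    const int max = max_task.get();
    return {sum, max};
}
```

Both tasks only read `values`, so they can run concurrently without synchronization.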

Concurrency Runtime

The Concurrency Runtime provides classes that simplify the writing of programs that use data parallelism or task parallelism.

Task Scheduler

The Task Scheduler schedules and coordinates tasks at run time. The Task Scheduler is cooperative and uses a work-stealing algorithm to achieve maximum usage of processing resources. The Concurrency Runtime provides a default scheduler so that you do not have to manage infrastructure details. However, to meet the quality needs of your application, you can also provide your own scheduling policy or associate specific schedulers with specific tasks.
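The work-stealing idea can be sketched in portable C++ as follows. This is an illustration under simplifying assumptions, not the Concurrency Runtime's implementation: each worker owns a mutex-protected deque, takes its own work from the back, and steals from the front of another worker's deque when it runs dry. The names `WorkQueue` and `run_with_stealing` are hypothetical, tasks are assumed not to spawn further tasks, and all work is deliberately placed on worker 0 so that the other workers have to steal.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

using Task = std::function<void()>;

// One queue per worker (hypothetical class for this sketch).
class WorkQueue {
public:
    void push(Task task) {
        std::lock_guard<std::mutex> lock(mutex_);
        tasks_.push_back(std::move(task));
    }
    // The owner takes the most recently pushed task (back of the deque).
    bool pop_local(Task& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (tasks_.empty()) return false;
        out = std::move(tasks_.back());
        tasks_.pop_back();
        return true;
    }
    // A thief takes the oldest task (front of the deque).
    bool steal(Task& out) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (tasks_.empty()) return false;
        out = std::move(tasks_.front());
        tasks_.pop_front();
        return true;
    }
private:
    std::mutex mutex_;
    std::deque<Task> tasks_;
};

// Runs all tasks on `num_workers` threads; returns how many were stolen.
std::size_t run_with_stealing(std::vector<Task> tasks, std::size_t num_workers) {
    assert(num_workers > 0);
    std::vector<WorkQueue> queues(num_workers);
    for (auto& t : tasks) queues[0].push(std::move(t));  // unbalanced on purpose

    std::atomic<std::size_t> stolen{0};
    std::vector<std::thread> workers;
    for (std::size_t id = 0; id < num_workers; ++id) {
        workers.emplace_back([&queues, &stolen, id, num_workers] {
            Task task;
            for (;;) {
                bool found = queues[id].pop_local(task);
                // Own queue is empty: try each other worker in turn.
                for (std::size_t k = 1; !found && k < num_workers; ++k) {
                    found = queues[(id + k) % num_workers].steal(task);
                    if (found) ++stolen;
                }
                if (!found) return;  // no work anywhere; tasks never spawn more
                task();
            }
        });
    }
    for (auto& w : workers) w.join();
    return stolen.load();
}
```

How many tasks end up stolen depends on thread timing, so the returned count varies from run to run. Production schedulers typically use lock-free deques rather than a mutex per queue.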

Resource Manager

The role of the Resource Manager is to manage computing resources, such as processors and memory. The Resource Manager responds to workloads as they change at runtime by assigning resources to where they can be most effective. The Resource Manager serves as an abstraction over computing resources and primarily interacts with the Task Scheduler. Although you can use the Resource Manager to fine-tune the performance of your libraries and applications, you typically use the functionality that is provided by the Parallel Patterns Library, the Agents Library, and the Task Scheduler. These libraries use the Resource Manager to dynamically rebalance resources as workloads change.

Asynchronous Agents Library

Not relevant to this comparison, .NET Framework.

Auto-Parallelizer

The /Qpar compiler switch enables automatic parallelization of loops in your code. When you specify this flag without changing your existing code, the compiler evaluates the code to find loops that might benefit from parallelization. Because it might find loops that don't do much work and therefore won't benefit from parallelization, and because every unnecessary parallelization can engender the spawning of a thread pool, extra synchronization, or other processing that would tend to slow performance instead of improving it, the compiler is conservative in selecting the loops that it parallelizes. Multiple example loops are available at https://msdn.microsoft.com/en-ca/library/hh872235.aspx
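As a rough illustration of what the auto-parallelizer looks for, the sketch below contrasts a loop with independent iterations against one with a loop-carried dependency. The function names are hypothetical. The `#pragma loop(hint_parallel(n))` hint is specific to the Microsoft compiler and is guarded so that other compilers skip it; whether a given loop is actually parallelized remains the compiler's decision.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Independent iterations: each out[i] depends only on in[i], so this loop is
// a plausible candidate for /Qpar.
void scale(std::vector<double>& out, const std::vector<double>& in, double factor) {
    assert(out.size() >= in.size());
    const int n = static_cast<int>(in.size());
#ifdef _MSC_VER
#pragma loop(hint_parallel(4))  // MSVC-only hint; has no effect without /Qpar
#endif
    for (int i = 0; i < n; ++i) {
        out[i] = in[i] * factor;
    }
}

// Loop-carried dependency: iteration i reads the value written by iteration
// i - 1, so the iterations cannot simply run in parallel as written.
void prefix_sum(std::vector<double>& data) {
    for (std::size_t i = 1; i < data.size(); ++i) {
        data[i] += data[i - 1];
    }
}
```

With the Microsoft compiler, the /Qpar-report switch can be used to see which loops were parallelized.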

C++ AMP (C++ Accelerated Massive Parallelism)

C++ AMP accelerates the execution of your C++ code by taking advantage of the data-parallel hardware that's commonly present as a graphics processing unit (GPU) on a discrete graphics card. The C++ AMP programming model includes support for multidimensional arrays, indexing, memory transfer, and tiling. It also includes a mathematical function library. You can use C++ AMP language extensions to control how data is moved from the CPU to the GPU and back.

AMP Tiling

Tiling divides threads into equal rectangular subsets or tiles. If you use an appropriate tile size and tiled algorithm, you can get even more acceleration from your C++ AMP code.
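The index arithmetic behind tiling can be sketched in plain C++ without the AMP headers. The struct and function below are hypothetical names for this illustration; in C++ AMP itself, a tiled_index object exposes comparable global, tile, and local coordinates to each thread.

```cpp
#include <cassert>

// Tile and in-tile coordinates of one thread (hypothetical type).
struct TiledIndex2D {
    int tile_row;   // which tile, counted in tiles
    int tile_col;
    int local_row;  // position inside that tile
    int local_col;
};

// Splits a global (row, col) position into tile and local coordinates for
// rectangular tiles of size tile_rows x tile_cols.
TiledIndex2D to_tiled(int row, int col, int tile_rows, int tile_cols) {
    assert(tile_rows > 0 && tile_cols > 0);
    assert(row >= 0 && col >= 0);
    return {row / tile_rows, col / tile_cols, row % tile_rows, col % tile_cols};
}
```

For example, with 2 x 4 tiles, the thread at global position (5, 7) falls in tile (2, 1) at local position (1, 3). Threads that share a tile can then cooperate on a common block of data, which is where the additional speed-up comes from.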

*A note on AMP and tiling

In our testing, AMP did not compile properly on the Visual Studio 2015 platform; it had to be built using libraries from before VS2015. Tiling also does not seem to be supported by the Intel compiler.

A simple for_Each Comparison

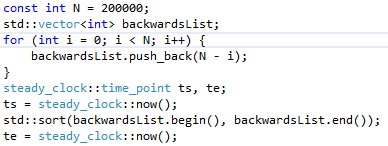

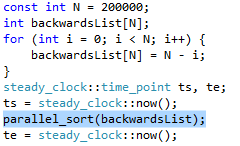

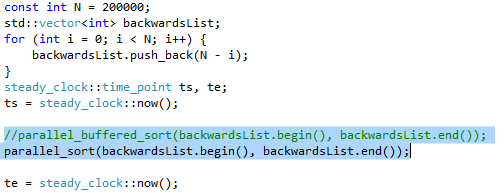

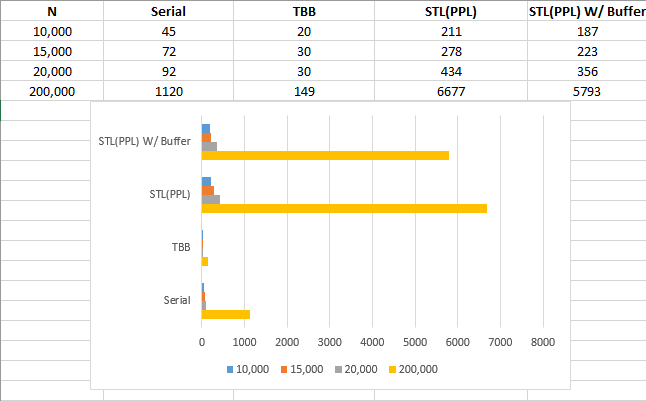

Comparing STL/PPL to TBB: Sorting Algorithm

Let’s compare a simple sorting algorithm between TBB and STL/PPL.

Serial

TBB

STL

Results

The notable difference in the code is the container being sorted: the TBB version sorts a simple array, while STL’s parallel sort (and the serial version) operates on a vector through its random access iterators. If the TBB sort is run using a vector instead of a simple array, you will see more even times.
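To make the array-versus-vector distinction concrete, here is a minimal serial sketch using std::sort on both kinds of storage. It is not the benchmark code from this page, and the function names are hypothetical. Raw pointers into an array and vector iterators are both random access iterators, so the same algorithm accepts either; tbb::parallel_sort is called with a begin/end pair in the same way.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Sorts a plain array in place; the raw pointers act as the iterators.
void sort_array(int* data, std::size_t count) {
    std::sort(data, data + count);
}

// Sorts a vector in place through its own iterators.
void sort_vector(std::vector<int>& data) {
    std::sort(data.begin(), data.end());
}
```

Timing both variants on the same input is one way to check how much of a measured difference comes from the container rather than from the sorting library.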

Conclusion

The conclusion to draw when comparing TBB to STL, in their current states, is that you ideally should use TBB over STL. STL parallelism is still very experimental and unrefined, and will likely remain that way until we see the release of C++17. However, following C++17’s release, using the native parallel library solution will likely be the ideal road to follow.

References

http://www.boost.org/

https://scs.senecac.on.ca/~gpu621/pages/content/tbb__.html

Auto-Parallelization and Auto-Vectorization: https://msdn.microsoft.com/en-ca/library/hh872235.aspx

Concurrency Runtime: https://msdn.microsoft.com/en-ca/library/dd504870.aspx

Accelerated Massive Parallelism (AMP): https://msdn.microsoft.com/en-ca/library/hh265137.aspx

Using Lambdas, Function objects and Restricted functions: https://msdn.microsoft.com/en-ca/library/hh873133.aspx

Using Tiles: https://msdn.microsoft.com/en-ca/library/hh873135.aspx

Concurrency Runtime Overview: https://msdn.microsoft.com/en-us/library/ee207192.aspx