Difference between revisions of "AAA Adrina Arsa Andriy"

(→Progress) |

|||

| Line 52: | Line 52: | ||

Because the data would be split into smaller pieces by the explode function, this could be paralleled. Since this takes almost 55% of the time of the program, it would be worth speeding up. | Because the data would be split into smaller pieces by the explode function, this could be paralleled. Since this takes almost 55% of the time of the program, it would be worth speeding up. | ||

| + | |||

| + | ====Andriy Guzenko’s Findings==== | ||

| + | |||

| + | I found a jpeg-compressor of a relatively small size written in C++. The core compression algorithm is about 900 lines plus the header file. You can find the code online here: [ https://code.google.com/p/jpeg-compressor/ ] | ||

| + | |||

| + | It is a console application which requires three command line arguments for successful execution. The first is the source ‘jpg’ file with appropriate ‘read’ permissions. The second is the destination file in any format. The third argument is the quality factor which is a number between 0 and 100. The 0 quality factor will produce a better quality compressed image. | ||

| + | |||

| + | The application also contains the decompression module which allows to decompress files back into ‘jpg’ format. | ||

| + | |||

| + | Finally, the application provides a number of options for compression and decompression such as: | ||

| + | |||

| + | ‘-o’ – enables optimized Huffman tables and makes compression slower but produces smaller files | ||

| + | |||

| + | ‘-x’ – exhaustive compression test | ||

| + | |||

| + | Profiling of this application revealed a number of hotspots in both compression and decompression algorithms. | ||

| + | |||

| + | The compression profile for 3.3 Mb ‘Jpg’ image which produced 0.7 Mb compressed file: | ||

| + | |||

| + | <pre> | ||

| + | > a.out img.jpg result 50 | ||

| + | |||

| + | Each sample counts as 0.01 seconds. | ||

| + | |||

| + | % cumulative self self total | ||

| + | |||

| + | time seconds seconds calls s/call s/call name | ||

| + | |||

| + | 12.18 1.66 1.66 image_compare(image_compare_results&, int, int, unsigned char const*, int, unsigned char const*, int, bool) | ||

| + | |||

| + | 8.90 2.87 1.21 YCbCr_to_RGB_row(unsigned char*, unsigned char const*, unsigned char const*, unsigned char const*, int, int) | ||

| + | |||

| + | 8.61 4.04 1.17 jpge::DCT2D(int*) | ||

| + | |||

| + | 8.46 5.18 1.15 jpge::RGB_to_YCC(unsigned char*, unsigned char const*, int) | ||

| + | |||

| + | 8.02 6.28 1.09 idct_block(unsigned char*, int, short*, unsigned char*) | ||

| + | |||

| + | 6.48 7.16 0.88 3456 0.00 0.00 jpgd::jpeg_decoder::expanded_convert() | ||

| + | |||

| + | 5.30 7.88 0.72 4214032 0.00 0.00 jpgd::Col<4>::idct(unsigned char*, int const*) | ||

| + | |||

| + | 4.12 8.44 0.56 373248 0.00 0.00 jpge::jpeg_encoder::load_quantized_coefficients(int) | ||

| + | |||

| + | 4.01 8.98 0.55 get_pixel(int*, unsigned char const*, bool, int) | ||

| + | |||

| + | 3.16 9.41 0.43 resample_row_h_2(unsigned char*, unsigned char*, unsigned char*, int, int) | ||

| + | |||

| + | 2.58 9.76 0.35 2140439 0.00 0.00 jpgd::Row<4>::idct(int*, short const*) | ||

| + | |||

| + | 2.43 10.09 0.33 373248 0.00 0.00 jpge::jpeg_encoder::code_coefficients_pass_two(int) | ||

| + | |||

| + | 2.02 10.37 0.28 decode_block(jpeg*, short*, huffman*, huffman*, int) | ||

| + | </pre> | ||

| + | |||

| + | And produced the output : | ||

| + | |||

| + | <pre> | ||

| + | jpge/jpgd example app | ||

| + | |||

| + | Source file: "img.JPG", image resolution: 4608x3456, actual comps: 3 | ||

| + | |||

| + | Writing JPEG image to file: comp | ||

| + | |||

| + | Compressed file size: 799619, bits/pixel: 0.402 | ||

| + | |||

| + | Compression time: 4609.175ms, Decompression time: 6812.550ms | ||

| + | |||

| + | Error Max: 71.000000, Mean: 5.951586, Mean^2: 20.892606, RMSE: 4.570843, PSNR: 3 | ||

| + | |||

| + | 4.930877 | ||

| + | |||

| + | Success. | ||

| + | </pre> | ||

| + | |||

| + | According to the profiling data such methods as “jpge::DCT2D(int*)” and “jpge::RGB_to_YCC” can be parallelized to improve the application performance which will be particularly useful for compressing large ‘jpg’ files at a better quality factor. | ||

=== Assignment 2 === | === Assignment 2 === | ||

=== Assignment 3 === | === Assignment 3 === | ||

Revision as of 10:04, 3 October 2014

Contents

To Be Determined

Team Members

- Adrian Sauvageot, Developer

- ...

Progress

Assignment 1

About

Our group has decided to each look at a different programming problem that could be sped up through paralysing it.

Adrian Sauvageot's Findings

Program To Parallelize

I chose to look into a cryptography library. Upon searching for libraries, I was able to find an open source library called CRYPTO++. This library is able to encrypt lines of text, and then decrypt them, based on a key, which is also a string.

The library allows the programmer to select a string. The string would then be secretly shared across two platforms, where it would be stored. The data to be sent would be encrypted on a computer, sent over the network, and then decrypted on the other computer.

I decided to look at the application as if someone was to send a large amount of encrypted data in a large string. To encrypt the string, there is data dependency; encrypting "The Quick Brown Fox Jumped Over The Moon" could give an encrypted code of:

C:ÖÜ+┘~ ╦÷bܼ↨▲+¶.f¾W.▀#§cÆ▼H╣♥¿aOv:Ss╚Ðr│y!%·∟

If you typed the same thing twice, you would get:

C:ÖÜ+┘~ ╦÷bܼ↨▲+¶.f¾W.▀#§cÆ▼H╣♥éý┌ï0샨ï╩↑R┘^õ¡#£2ÌÕøÈ榪ç}FÉñW{òpÂ╩☺üqÿgG‗iã$(♦G]<}*▲`eJÔÓW╗õí

However, it would be possible take a large string, break it into several pieces, and encrypt each piece separately.

For example, if a string was to be broken up after each ".", each part of the large string would be encoded separately, and sent over the network along with a header that contained the strings order. IE:

encryptedPacket{

int id;

string encodedString;

}

The strings could be encoded parallely, and then again decoded parallely.

This would be able to decrease the time taken to encode the strings if they were large. The data would still be encoded while being sent over the network.

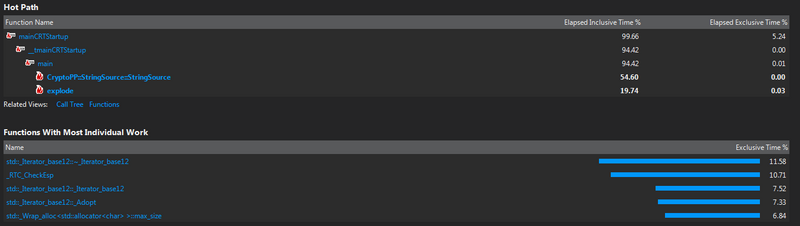

Hot Spot Identification By Profiling

In order to identify the hot spots of the program, I used Visual Studio. (Unfortunately, for this library I could not use the Seneca Matrix system, as my account did not have sufficient privileges to install the library.)

This program was called with a very large string that was split into pieces by spiting it on the "."

The explode function (which is a function I created) is the function that splits the string into pieces. This function could be paralleled to speed up the process of spiting up the string. Since this takes almost 20% of the total time it would be worth speeding up.

The other function that takes up a lot of resources is the Cryptopp::StringSource::StringSource function. This is part of the function that takes the String and encodes it. In the StringSource function, the strings that are being encrypted, or decrypted are being copied, being encrypted/decrypted, and stored.

Because the data would be split into smaller pieces by the explode function, this could be paralleled. Since this takes almost 55% of the time of the program, it would be worth speeding up.

Andriy Guzenko’s Findings

I found a jpeg-compressor of a relatively small size written in C++. The core compression algorithm is about 900 lines plus the header file. You can find the code online here: [ https://code.google.com/p/jpeg-compressor/ ]

It is a console application which requires three command line arguments for successful execution. The first is the source ‘jpg’ file with appropriate ‘read’ permissions. The second is the destination file in any format. The third argument is the quality factor which is a number between 0 and 100. The 0 quality factor will produce a better quality compressed image.

The application also contains the decompression module which allows to decompress files back into ‘jpg’ format.

Finally, the application provides a number of options for compression and decompression such as:

‘-o’ – enables optimized Huffman tables and makes compression slower but produces smaller files

‘-x’ – exhaustive compression test

Profiling of this application revealed a number of hotspots in both compression and decompression algorithms.

The compression profile for 3.3 Mb ‘Jpg’ image which produced 0.7 Mb compressed file:

> a.out img.jpg result 50 Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls s/call s/call name 12.18 1.66 1.66 image_compare(image_compare_results&, int, int, unsigned char const*, int, unsigned char const*, int, bool) 8.90 2.87 1.21 YCbCr_to_RGB_row(unsigned char*, unsigned char const*, unsigned char const*, unsigned char const*, int, int) 8.61 4.04 1.17 jpge::DCT2D(int*) 8.46 5.18 1.15 jpge::RGB_to_YCC(unsigned char*, unsigned char const*, int) 8.02 6.28 1.09 idct_block(unsigned char*, int, short*, unsigned char*) 6.48 7.16 0.88 3456 0.00 0.00 jpgd::jpeg_decoder::expanded_convert() 5.30 7.88 0.72 4214032 0.00 0.00 jpgd::Col<4>::idct(unsigned char*, int const*) 4.12 8.44 0.56 373248 0.00 0.00 jpge::jpeg_encoder::load_quantized_coefficients(int) 4.01 8.98 0.55 get_pixel(int*, unsigned char const*, bool, int) 3.16 9.41 0.43 resample_row_h_2(unsigned char*, unsigned char*, unsigned char*, int, int) 2.58 9.76 0.35 2140439 0.00 0.00 jpgd::Row<4>::idct(int*, short const*) 2.43 10.09 0.33 373248 0.00 0.00 jpge::jpeg_encoder::code_coefficients_pass_two(int) 2.02 10.37 0.28 decode_block(jpeg*, short*, huffman*, huffman*, int)

And produced the output :

jpge/jpgd example app Source file: "img.JPG", image resolution: 4608x3456, actual comps: 3 Writing JPEG image to file: comp Compressed file size: 799619, bits/pixel: 0.402 Compression time: 4609.175ms, Decompression time: 6812.550ms Error Max: 71.000000, Mean: 5.951586, Mean^2: 20.892606, RMSE: 4.570843, PSNR: 3 4.930877 Success.

According to the profiling data such methods as “jpge::DCT2D(int*)” and “jpge::RGB_to_YCC” can be parallelized to improve the application performance which will be particularly useful for compressing large ‘jpg’ files at a better quality factor.