Difference between revisions of "GPU610/Cosmosis"

(→Team Members) |

(→Dynamic Shared Memory (not implemented)) |

||

| (30 intermediate revisions by 4 users not shown) | |||

| Line 2: | Line 2: | ||

= Cosmosis = | = Cosmosis = | ||

== Team Members == | == Team Members == | ||

| − | # [mailto:cfbale@myseneca.ca?subject=gpu610 Clinton Bale], | + | # [mailto:cfbale@myseneca.ca?subject=gpu610 Clinton Bale], Developer |

| − | # [mailto:acraig1@myseneca.ca?subject=dps915 Alex Craig], | + | # [mailto:acraig1@myseneca.ca?subject=dps915 Alex Craig], Developer |

| − | # [mailto:jsantos13@myseneca.ca?subject=gpu610 Jesse Santos], | + | # [mailto:jsantos13@myseneca.ca?subject=gpu610 Jesse Santos], Developer |

| − | # [mailto:nbguzman@myseneca.ca?subject=dps915 Neil Guzman], | + | # [mailto:nbguzman@myseneca.ca?subject=dps915 Neil Guzman], Developer |

[mailto:nbguzman@myseneca.ca,jsantos13@myseneca.ca,acraig1@myseneca.ca,cfbale@myseneca.ca?subject=dps915-gpu610 Email All] | [mailto:nbguzman@myseneca.ca,jsantos13@myseneca.ca,acraig1@myseneca.ca,cfbale@myseneca.ca?subject=dps915-gpu610 Email All] | ||

| + | |||

| + | == Links == | ||

| + | * Repo - [https://code.google.com/p/gpu-nbody/ code.google.com/p/gpu-nbody] | ||

| + | * SFML - [http://www.sfml-dev.org/ www.sfml-dev.org/] | ||

| + | * SSE - [https://en.wikipedia.org/wiki/Streaming_SIMD_Extensions en.wikipedia.org/wiki/Streaming_SIMD_Extensions] | ||

| + | * N-Body - [http://www.cs.princeton.edu/courses/archive/fall07/cos126/assignments/nbody.html cs.princeton.edu/courses/archive/fall07/cos126/assignments/nbody.html] | ||

| + | * Grids - [http://www.resultsovercoffee.com/2011/02/cuda-blocks-and-grids.html resultsovercoffee.com/2011/02/cuda-blocks-and-grids.html] | ||

== Progress == | == Progress == | ||

=== Assignment 1 === | === Assignment 1 === | ||

| + | For our assignment 1, we are looking into finding and N-body simulator. All of us in the group have agreed to find 1 each, and after profiling them, we will choose the most inefficient one to parallelize. | ||

| + | |||

| + | ====Alex's Profiling Findings==== | ||

| + | I have found a Java applet that uses a brute force method for an N-Body simulator. It also has an improved algorithm to speed up the simulation and allow for a lot more bodies to be added. I will profile and show some sample run results for both, and see which one may be more fitting to try and parallelize. | ||

| + | |||

| + | =====Example Profiles of Brute Force Algorithm===== | ||

| + | When run with 1000 bodies: | ||

| + | [[File:1000BodyProfile.png]] | ||

| + | |||

| + | |||

| + | When run with 2500 bodies: | ||

| + | [[File:2500_bodies_profile.PNG]] | ||

| + | |||

| + | |||

| + | When run with 10000 bodies: | ||

| + | [[File:10000_bodies_profile.PNG]] | ||

| + | |||

| + | |||

| + | All of these profiles were ran for about 4 to 5 minutes each. It seems that when the body count is low (1000) the program spends most of its time in the '''addforces()''' function, which is a user defined function within one of the source files. However, when the body count gets higher, it seems that the program slows down so much from having to draw all of the bodies, it spends most of its time in the Java defined '''fillOval()''' function, rather than the '''addforces()''' function. I'm not entirely sure if we would be able to parrallelize that function, since it is in a library. It may be possible to simply define a function that does the same thing and put it in the program. | ||

| + | |||

| + | =====Example Profiles of Barnes Hut Algorithm===== | ||

| + | When run with 10000 bodies: | ||

| + | [[File:10000_bodies_BH_profile.PNG]] | ||

| + | |||

| + | |||

| + | When run with 25000 bodies: | ||

| + | [[File:25000_bodies_BH_profile.PNG]] | ||

| + | |||

| + | |||

| + | When run with 50000 bodies: | ||

| + | [[File:50000_bodies_BH_profile.PNG]] | ||

| + | |||

| + | |||

| + | All of these profiles were ran for about 5 minutes each. The percentage of time the program spends in all of the functions is pretty consistent. Again, the Java defined '''fillOval()''' function is taking the longest amount of time. I'll have to look into adding that function into the main class. Although the '''updateForce()''' and '''insert()''' functions combined take over 25% of the execution time, so something can be done about that. | ||

| + | |||

| + | If someone knows how to add spoiler tags, it would be much appreciated if you could add them to my 2 groups of pictures. | ||

| + | |||

| + | ====Clinton's Profiling Findings==== | ||

| + | |||

| + | I decided to code my own N-Body simulator using the instructions and data found at [http://www.cs.princeton.edu/courses/archive/fall07/cos126/assignments/nbody.html cs.princeton.edu]. I have created both a Windows and Linux version of the simulation, the Windows version supports drawing graphics to the screen while the Linux version does not. The implementation is coded in C++ and uses the "brute force" algorithm to calculate the bodies. While this implementation is "perfect", the run-time for it is O(n^2). I have also tried to implement a basic form of SSE ([https://en.wikipedia.org/wiki/Streaming_SIMD_Extensions Streaming SIMD Extensions]) which should increase the speed of the simulation. I will provide profiling for both SSE and non-SSE versions. | ||

| + | |||

| + | The profiling seen has been run with the following properties: | ||

| + | *Windows: i5 2500K @ 4.5Ghz | ||

| + | *Linux: Seneca Matrix | ||

| + | *Both drawing no graphics to the screen for unbiased results. | ||

| + | *Random position, velocity and mass for each body. | ||

| + | *Brute force algorithm for calculating the forces (O(n^2)). | ||

| + | |||

| + | =====Profile #1: 1000 Bodies CPU Usage===== | ||

| + | |||

| + | During this profile, the simulations are run for 240 seconds to determine which functions have the most CPU load. Inline functions are also disabled for this test so that we can properly determine where the bottlenecks of the code are. | ||

| + | |||

| + | '''Windows:''' | ||

| + | |||

| + | [[File:gpu670_cfbale_prof1_1.png|border]] | ||

| + | |||

| + | As expected, the main source of CPU load is coming from the function that does all the work computing the forces of the planets. This function takes 88.46% of the entire application's worth in computing power for four minutes. The hotspots for the AddForces function can be seen here: | ||

| + | |||

| + | [[File:gpu670_cfbale_prof1_2.png|border]] | ||

| + | |||

| + | This picture shows that the majority of the computing involved comes from the square root function and computing the gravitational constant. Both of which require heavy floating point math to complete. | ||

| + | |||

| + | '''Linux:''' | ||

| + | |||

| + | Linux shows very similar results for 1000 elements, most, if not all the cpu usuage has gone to the AddForces function. With just over 3.7 billion calls to the AddForces function, we can see the slowdown of the O(n2) run-time immediately. | ||

| + | |||

| + | [[File:gpu670_cfbale_prof1_3.png|border]] | ||

| + | |||

| + | =====Profile #2: 1000 Bodies Timing (SSE and non-SSE)===== | ||

| + | |||

| + | For this test, the simulations are run for 240 seconds to determine the amount of time it takes to calculate one “sample” which is one whole brute-force pass of all the bodies. | ||

| + | |||

| + | '''Windows (non-SSE):''' | ||

| + | |||

| + | [[File:gpu670_cfbale_prof2_1.png|border]] | ||

| + | |||

| + | This screenshot is a picture of the simulation running in the console. I added some information to show exactly how much information is being processed per second. Above you can see that on average it takes about 19.89 m/s to process one sample (full brute-force calculation). A nice speed which gives us about 50 samples per second. During the entire four minute long test, my Windows machine was able to execute 12067 samples. | ||

| + | |||

| + | '''Linux (non-SSE):''' | ||

| + | |||

| + | [[File:gpu670_cfbale_prof2_2.png|border]] | ||

| + | |||

| + | On Seneca's Matrix server, the results are surprisingly much slower, about half the speed of my Windows machine using full optimizations. You can see that in the time it took for my machine to run 12067 samples, Matrix only completed 6359. | ||

| + | |||

| + | '''Windows (SSE):''' | ||

| + | |||

| + | I rewrote some of the functions for calculating the forces on the bodies to use SSE code. This is the first time I have ever written SSE code and may not be properly optimized for what it's doing. The performance increases of my implementation is negligible, but I'm sure that If I had more knowledge in the SSE architecture the performance difference would be much more noticeable. | ||

| + | |||

| + | [[File:gpu670_cfbale_prof2_3.png|border]] | ||

| + | |||

| + | After the rewrite of my calculation functions, I only gained about a 2.5% increase in speed, which is definitely not worth it. | ||

| + | |||

| + | '''Linux (SSE):''' | ||

| + | |||

| + | To enable SSE on Linux, you can use the built in compiler parameters that g++ has to automatically generate SSE instructions for you: | ||

| + | |||

| + | <pre>-march=native -mfpmath=sse</pre> | ||

| + | |||

| + | [[File:gpu670_cfbale_prof2_4.png|border]] | ||

| + | |||

| + | Enabling this gave me a small performance boost of about 5 samples per second. Increasing my total sample count over four minutes to 7468 from 6359, that's a 15% increase in speed from just adding two compiler parameters, not bad. | ||

| + | |||

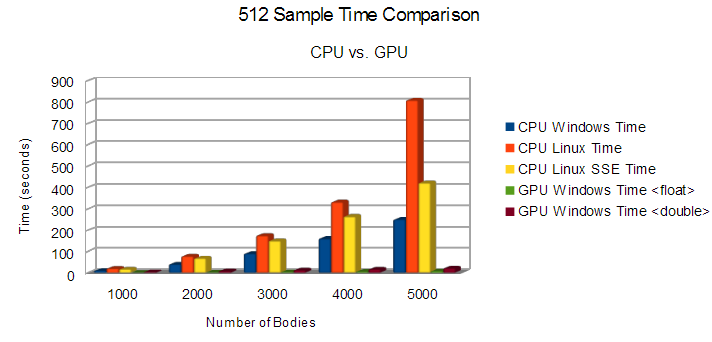

| + | =====Profile #3: 512 Samples 1000-5000 Bodies Comparison===== | ||

| + | |||

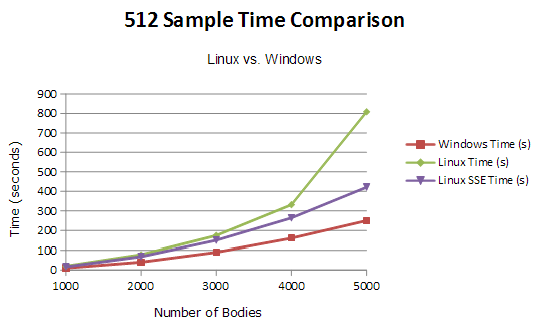

| + | For this final profile, I sampled the time difference between Linux and Windows. I include The Linux SSE and non-SSE versions, but only the standard Windows implementation due to the fact that the speed increase is next to nothing with my SSE version. The following test was timing the amount of seconds it took to compute 512 brute-force samples of the N-Body simulation, lower the better. | ||

| + | |||

| + | [[File:gpu670_cfbale_prof3_1.png|border]] | ||

| + | |||

| + | =====Parallelizable?===== | ||

| + | |||

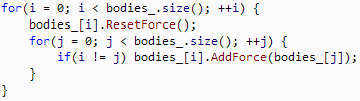

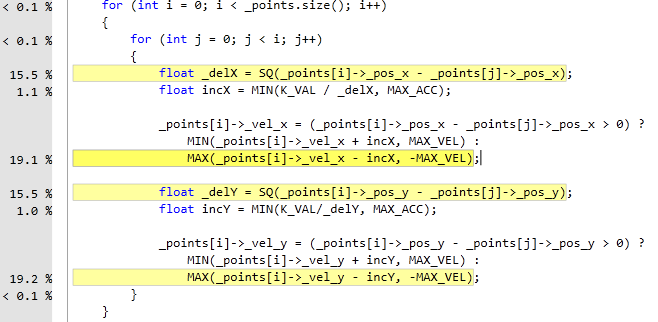

| + | For my 2D N-Body simulation, you can spot the section of code where parallelization would give massive speedups. Since processors do things in a serial order, the following double-for loop is the cause for most of the delay in the application: | ||

| + | |||

| + | [[File:gpu670_cfbale_last.png|border]] | ||

| + | |||

| + | If I was to parallelize this code using CUDA, I would put the ResetForce and AddForce function calls into their own thread on the GPU. Therefore, instead of computing all the forces sequentially, they all get computed at once. | ||

| + | |||

| + | ====Neil's Profiling Findings==== | ||

| + | The program I found is an n-body simulation using a brute force algorithm. It essentially does an n-body simulation of O(n^2) efficiency and displays the visual output onto the screen. For testing purposes-as well as for compatibility issues-I disabled the visual output and redirected the function call, vga_setmode, to text mode (0)). | ||

| + | |||

| + | |||

| + | '''Testing Environment:''' | ||

| + | |||

| + | *Operating System: Ubuntu 12.10, 32-bit | ||

| + | |||

| + | *Compiler: GNU C++, version 4:4.7.2-1ubuntu2 | ||

| + | |||

| + | *Processor: Intel(c) Core(tm) i5 CPU M 480 @ 2.67GHz x 4 | ||

| + | |||

| + | *Graphics Card: Intel(c) HD Graphics 2000 | ||

| + | |||

| + | *Memory: 5.7GB | ||

| + | |||

| + | |||

| + | '''How to Setup (on Linux machines with admin priveleges only):''' | ||

| + | * Download source file: [http://sourceforge.net/projects/nbodysim01/files/nbodysim01/C%2B%2B_code/galaxy_0.1.cpp.gz/download | n-body-simulation] | ||

| + | * Install required libraries/utils: | ||

| + | <pre>sudo apt-get install g++ | ||

| + | sudo apt-get install libsvgal-dev | ||

| + | </pre> | ||

| + | * Compile and run: | ||

| + | <pre>g++ galaxy_0.1.cpp -lvga -pg | ||

| + | sudo ./a.out | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | '''Analysis:''' | ||

| + | |||

| + | The program contains 1 main function that takes up 90% of the execution time: Calculate_Gravity(). The reason being that the function has 3 for loops. 1 for loop to reinitialize the bodies' locations. The other 2 for loops are nested and is what does the main calculations of the simulation. | ||

| + | |||

| + | <pre> | ||

| + | |||

| + | for (i=0; i<=NumP-1; i++) { xa[i]=0; ya[i]=0; za[i]=0; } | ||

| + | for (i=0; i<=NumP-1; i++) | ||

| + | { | ||

| + | for (j=i+1; j<=NumP-1; j++) | ||

| + | { | ||

| + | // calculating statements | ||

| + | </pre> | ||

| + | |||

| + | Another hot spot in the code was the function planet::Interact(...) because it had to do a lot of calculations for each body | ||

| + | |||

| + | <pre> | ||

| + | for (i=0;i<= NumP-1;i++) | ||

| + | if (P[i].exist) P[i].Interact(xa[i],ya[i],za[i],swx,swy,swz); | ||

| + | </pre> | ||

| + | |||

| + | and then update the visual of each position. | ||

| + | |||

| + | <pre> | ||

| + | if (ins) | ||

| + | { | ||

| + | vga_setcolor(colr); | ||

| + | vga_drawpixel (xpos,ypos); | ||

| + | if (prj) Projection(7,sc); | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Right now, the functions are running once at a time, making the program run slower. If both Calculate_Gravity() and planet::Interact(...)'s for loops were parallelized, there would be a significant speedup. | ||

| + | |||

| + | Using Amdahl's Law: | ||

| + | |||

| + | <pre> | ||

| + | S1344 = 1 / ( 1 - 0.9982 + 0.9982 / 1344) = 393.28 | ||

| + | </pre> | ||

| + | |||

| + | A ~393 times speedup would make a function that took 24.16 seconds to execute, only 0.06 seconds. | ||

| + | |||

| + | |||

| + | '''Difficulties Met:''' | ||

| + | |||

| + | The main difficulty was trying to find and install the library that would let the code compile. The library that the program uses, svgalibs, is an old library meant for displaying images on linux machines. This means that the program itself would try and access the /dev/tty of the linux system-this is also why you needed admin privileges to run the program. | ||

| + | |||

| + | Another difficulty was the initial reading of the code and trying to understand it. After a couple more reading, it becomes easier to understand and it looks like it could be improved much more by changing the graphics used and the structure of the code. | ||

| + | |||

| + | If we end up choosing this, the difficulty might light in the fact that we have to update the technologies used (graphics library), restructure the code, and possibly change the algorithm to Barnes-hut. | ||

| + | |||

| + | |||

| + | '''Summary:''' | ||

| + | * Algorithm used: Brute force O(n^2), is way too ineffecient for high numbers of n | ||

| + | ** Can be improved upto O(n log n) through use of Barnes-hut algorithm | ||

| + | * Main function to parallelize: Calculate_Gravity(), due to these for loops: | ||

| + | |||

| + | <pre> | ||

| + | |||

| + | for (i=0; i<=NumP-1; i++) { xa[i]=0; ya[i]=0; za[i]=0; } | ||

| + | for (i=0; i<=NumP-1; i++) | ||

| + | { | ||

| + | for (j=i+1; j<=NumP-1; j++) | ||

| + | { | ||

| + | // calculating statements | ||

| + | </pre> | ||

| + | * Potential speedup, based on Amdahl's Law and with 1344 cores (GTX670): ~393 times | ||

| + | ** This means going from 24.16 seconds of execution time to 0.06 seconds | ||

| + | |||

| + | |||

| + | '''Resources:''' | ||

| + | |||

| + | [http://zenit.senecac.on.ca/wiki/imgs/Dps915_neil_flat_profiles.pdf Flat Profiles for 100,500,1000,5000,10000 bodies] | ||

| + | |||

| + | <gallery> | ||

| + | File:Dps915_neil_exec_calc.png|Execution Time | ||

| + | File:Dps915_neil_self_calc.png|Self Seconds | ||

| + | File:Dps915_neil_calls_calc.png|Self Calls | ||

| + | </gallery> | ||

| + | |||

| + | ====Jesse's Profiling Findings==== | ||

| + | I found an iterated approximation of an N-Body project on GitHub at: https://github.com/jrupac/N-body-Simulation. The simulation uses the brute force algorithm with a run-time of O(n^2). It uses SDL to draw squares which represent each body. I have removed all SDL so it only does the calculations. It also has collision detection with other bodies. It still collides with the window sides even though I have removed the window. I also included a high resolution timer by Song Ho Ahn. The timer class can be found at: http://www.cnblogs.com/JohnShao/archive/2011/10/14/2211085.html | ||

| + | |||

| + | '''Testing Environment:''' | ||

| + | |||

| + | *Windows: i5 3570K @ 5Ghz | ||

| + | |||

| + | *Raw computations, no graphical visualization | ||

| + | |||

| + | *The velocity of the point is modified. Not a proper force. | ||

| + | |||

| + | *Brute force algoritim for calculation the forces O(N^2) | ||

| + | |||

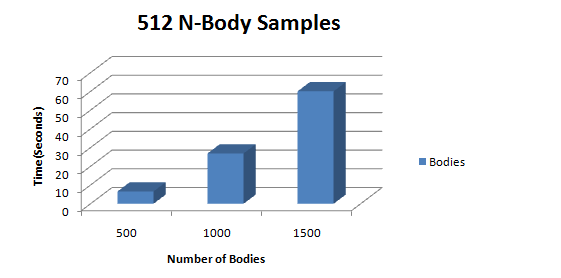

| + | =====Profile: 512 Samples of 500-1500 Bodies===== | ||

| + | I ran a series of N-Body simulations and sampled each run 512 times. The results are exactly as you would expect for an O(n^2) algorithm; a quadratic increase in time. | ||

| + | |||

| + | [[File:gpu610_jsantos13_samples.png|border]] | ||

| + | |||

| + | =====Analysis===== | ||

| + | Using Visual Studio 10 profiler, it is clear that the update function is an expensive call path. | ||

| + | |||

| + | [[File:gpu610_jsantos13_vsprofile.png|border]] | ||

| + | |||

| + | Although the program is an iterated approximation of an N-Body simulation, it is slower than a more proper N-Body simulation. The calculations are incorrect and the majority of them are unnecessary. The update function uses a double for loop to calculate the forces for each particle amongst one another. This is of O(n^2) runtime. | ||

| + | |||

| + | [[File:gpu610_jsantos13_code.png|border]] | ||

| + | |||

| + | =====Summary===== | ||

| + | You can see that the majority of the processing time is used on SQ(square) and MAX(which value is bigger) calculations. The point calculation can be done independently and therefore can be parallelized with CUDA. This program can be speed up even more if we utilize the Barnes-hut algorithm for calculating N-Bodies using quad trees and spatial partitions. | ||

| + | |||

=== Assignment 2 === | === Assignment 2 === | ||

| + | We decided to use the N-Body simulator that Clinton profiled for assignment 2. | ||

| + | |||

| + | ==== Baseline ==== | ||

| + | The following profiles were made under the following compiler and computer settings: | ||

| + | |||

| + | <pre>nvcc main.cpp timer.cpp sim\simbody.cu sim\simulation.cu -DWIN32 -O3</pre> | ||

| + | |||

| + | * i5 2500K @ 4.5Ghz | ||

| + | * Nvidia GTX 560Ti | ||

| + | * Both drawing no graphics to the screen for unbiased results. | ||

| + | * Random position, velocity and mass for each body. | ||

| + | * Brute force algorithm for calculating the forces (O(n^2)). | ||

| + | |||

| + | ==== Profiles ==== | ||

| + | ===== Profile #1 ===== | ||

| + | |||

| + | [[File:gpu670_cosmo_a2_prof1.png|border]] | ||

| + | |||

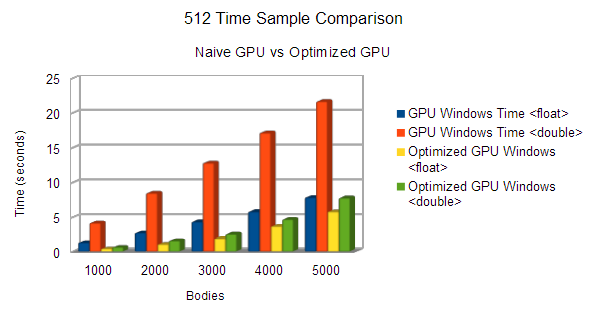

| + | For our initial profile we sampled the time difference from the CPU and GPU implementation of the N-Body brute force algorithm. The chart above describes the amount of seconds it took to compute 512 brute-force samples of the N-Body simulation, lower is better. Our findings proved to be quite impressive with a 3097% speed up on 5000 bodies using the GPU to simulate. According to Amdahl’s Law, there would be an ~8.5x speedup for a function taking up 88.46% of an application’s execution time with a graphics card that has 384 cuda cores. | ||

| + | |||

| + | ===== Profile #2 ===== | ||

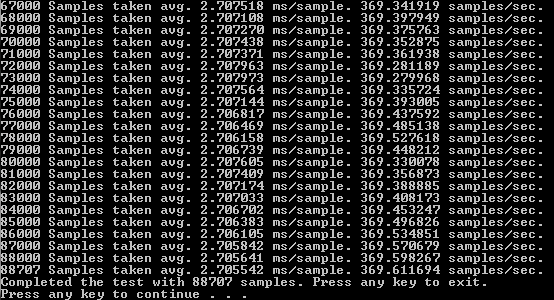

| + | Our second profile consists of running simulations for 240 seconds to determine how many samples we achieve per second, and how many total samples we end up with after four minutes. | ||

| + | |||

| + | [[File:gpu670_cosmo_a2_prof2.png|border]] | ||

| + | |||

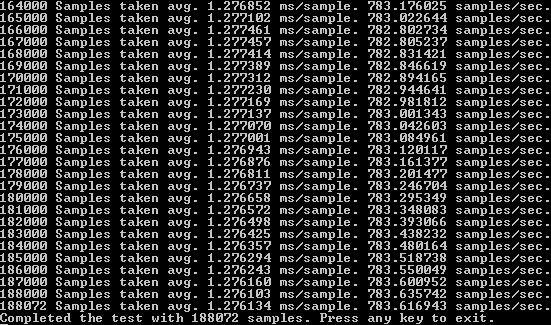

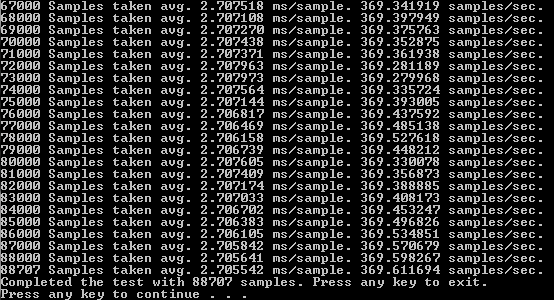

| + | This profile shows the unbelievable amount of speedup that we can achieve with simple parallelization. Using the CPU on Windows with SSE, we achieved an average of about 51 samples per second for a total of 12359 samples taken over a period of four minutes. With the GPU parallelization we achieved an average of 370 samples per second, with a total of 88707 samples over a period of four minutes. Therefore, giving us an average speed increase of about 7.25x per sample. | ||

| + | |||

| + | ==== Difficulties ==== | ||

| + | We faced many discrepant difficulties in our endeavor to transfer the code from being executed on the host to being executed on the GPU. One of the challenges faced was due to the fact that the image’s functions were within a library. Because of this we had to take the image management out of the ''Body ''class, as we could not use a thrust device vector to store the bodies because we could not make the functions the image used callable on the device. This was an annoying hurdle as we had to restructure one of our classes (''Body''), and a portion of the code within other classes (''BodyManager'' & ''Game''). | ||

| + | |||

| + | Another challenge we were presented with was getting nvcc to compile and link in 64-bit while using our static 32-bit SFML libraries. We ended up reverting to a dynamic-linking version of SFML and a 32-bit version of our executable. This change is only temporary until we can safely and more stably compile SFML and all of it’s dependencies''' '''using a 64-bit architecture. | ||

| + | |||

| + | ==== Code ==== | ||

| + | |||

| + | ===== Old CPU Code ===== | ||

| + | |||

| + | <div style='color:#000020;background:#f6f8ff;'> | ||

| + | <span style='color:#200080; font-weight:bold; '>void</span> Simulation<span style='color:#406080; '>::</span>Tick<span style='color:#308080; '>(</span><span style='color:#200080; font-weight:bold; '>double</span> dt<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#003060; '>size_t</span> i <span style='color:#308080; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#308080; '>,</span> j <span style='color:#308080; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>for</span><span style='color:#308080; '>(</span>i <span style='color:#308080; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#406080; '>;</span> i <span style='color:#308080; '><</span> bodies_<span style='color:#308080; '>.</span>size<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> <span style='color:#308080; '>+</span><span style='color:#308080; '>+</span>i<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | bodies_<span style='color:#308080; '>[</span>i<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>ResetForce<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>for</span><span style='color:#308080; '>(</span>j <span style='color:#308080; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#406080; '>;</span> j <span style='color:#308080; '><</span> bodies_<span style='color:#308080; '>.</span>size<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> <span style='color:#308080; '>+</span><span style='color:#308080; '>+</span>j<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span><span style='color:#308080; '>(</span>i <span style='color:#308080; '>!</span><span style='color:#308080; '>=</span> j<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | bodies_<span style='color:#308080; '>[</span>i<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>AddForce<span style='color:#308080; '>(</span>bodies_<span style='color:#308080; '>[</span>j<span style='color:#308080; '>]</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>for</span><span style='color:#308080; '>(</span>i <span style='color:#308080; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#406080; '>;</span> i <span style='color:#308080; '><</span> bodies_<span style='color:#308080; '>.</span>size<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> <span style='color:#308080; '>+</span><span style='color:#308080; '>+</span>i<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | bodies_<span style='color:#308080; '>[</span>i<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Tick<span style='color:#308080; '>(</span>dt<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | </div> | ||

| + | |||

| + | ===== Updated Kernel Code ===== | ||

| + | |||

| + | <div style='color:#000020;background:#f6f8ff;'> | ||

| + | <span style='color:#200080; font-weight:bold; '>void</span> __global__ SimCalc<span style='color:#308080; '>(</span>BodyArray a<span style='color:#308080; '>)</span><span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>int</span> idx <span style='color:#308080; '>=</span> blockIdx<span style='color:#308080; '>.</span>x <span style='color:#308080; '>*</span> blockDim<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span> threadIdx<span style='color:#308080; '>.</span>x<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span> <span style='color:#308080; '>(</span>idx <span style='color:#308080; '><</span> a<span style='color:#308080; '>.</span>size<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>ResetForce<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>for</span> <span style='color:#308080; '>(</span><span style='color:#003060; '>size_t</span> j <span style='color:#308080; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#406080; '>;</span> j <span style='color:#308080; '><</span> a<span style='color:#308080; '>.</span>size<span style='color:#406080; '>;</span> <span style='color:#308080; '>+</span><span style='color:#308080; '>+</span>j<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span> <span style='color:#308080; '>(</span>idx <span style='color:#308080; '>!</span><span style='color:#308080; '>=</span> j<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>AddForce<span style='color:#308080; '>(</span>a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>j<span style='color:#308080; '>]</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | |||

| + | <span style='color:#200080; font-weight:bold; '>void</span> __global__ SimTick<span style='color:#308080; '>(</span>BodyArray a<span style='color:#308080; '>,</span> <span style='color:#200080; font-weight:bold; '>float</span> dt<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>int</span> idx <span style='color:#308080; '>=</span> blockIdx<span style='color:#308080; '>.</span>x <span style='color:#308080; '>*</span> blockDim<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span> threadIdx<span style='color:#308080; '>.</span>x<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span> <span style='color:#308080; '>(</span>idx <span style='color:#308080; '><</span> a<span style='color:#308080; '>.</span>size<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Tick<span style='color:#308080; '>(</span>dt<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | </div> | ||

| + | |||

=== Assignment 3 === | === Assignment 3 === | ||

| + | |||

| + | ==== Problem Overview ==== | ||

| + | An N-body simulation is a simulation of a dynamical system of particles, usually under the influence of physical forces, such as gravity. In cosmology, they are used to study processes of non-linear structure formation such as the process of forming galaxy filaments and galaxy halos from dark matter in physical cosmology. Direct N-body simulations are used to study the dynamical evolution of star clusters. | ||

| + | |||

| + | To be able to successfully have an N-body simulation, a program must go through each body, and add the forces of each other body that is affecting it to update where its position would be in the simulation. Due to this, the algorithm that is used to do these calculations has a time complexity of O(n^2). Having the time increase exponentially as n increases linearly makes the simulation rather slow, and thus a perfect candidate for parallelization. | ||

| + | |||

| + | ==== Baseline ==== | ||

| + | The following profiles were made under the following compiler and computer settings: | ||

| + | |||

| + | <pre>nvcc main.cpp timer.cpp sim\simbody.cu sim\simulation.cu -DWIN32 -O3</pre> | ||

| + | |||

| + | * i5 2500K @ 4.5Ghz | ||

| + | * Nvidia GTX 560Ti | ||

| + | * Raw computations, no graphics drawn to the screen for unbiased results. | ||

| + | * Random position, velocity and mass for each body. | ||

| + | * Brute force algorithm for calculating the forces (O(n^2)). | ||

| + | |||

| + | ==== Initial Profiling ==== | ||

| + | |||

| + | Initially, the serial version of this program took about 13 minutes to calculate 512 samples in a 5000-body simulation. Even with the use of Steaming SIMD Extensions, the program took about 7 minutes to do the same test. | ||

| + | |||

| + | ==== Parallelization ==== | ||

| + | |||

| + | ===== Basic Parallelization ===== | ||

| + | |||

| + | * Turned old serial code where the program bottlenecked to into two separate kernels | ||

| + | |||

| + | ===== Optimized Parallelization ===== | ||

| + | |||

| + | * Changed the launch configuration for the kernels so there were no wasted threads (based on devices compute capabilities) | ||

| + | * Prefetched values that don’t change throughout the loops | ||

| + | * Did computations in the kernel to reduce function overhead | ||

| + | * Used constant memory for the gravitation constant | ||

| + | |||

| + | ==== Profiles ==== | ||

| + | |||

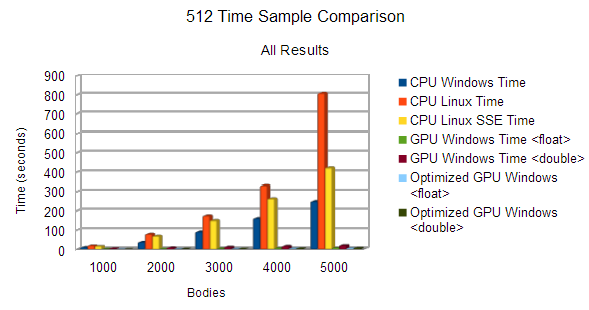

| + | ===== Profile #1 ===== | ||

| + | |||

| + | [[File:cosmosis_assn3_p1_1.png|border]] | ||

| + | |||

| + | |||

| + | [[File:cosmosis_assn3_p1_2.png|border]] | ||

| + | |||

| + | To be able to see the difference between the pre and post optimized code, this graph does not include the serial cpu timings. | ||

| + | |||

| + | ===== Profile #2 ===== | ||

| + | Our second profile again consists of running simulations for 240 seconds to determine how many samples we achieve per second, and how many total samples we end up with after four minutes. | ||

| + | |||

| + | [[File:cosmosis_assn3_p2_1.png|border]] | ||

| + | |||

| + | Optimized GPU after four minutes. | ||

| + | |||

| + | |||

| + | [[File:cosmosis_assn3_p2_2.png|border]] | ||

| + | |||

| + | Naive GPU Samples after four minutes. | ||

| + | |||

| + | |||

| + | Comparing our results from the previous GPU implementation, we managed to achieve a total of 188072 samples compared to 88707. Roughly a 112.015 % increase in the number of samples completed in four minutes. Compared with our CPU code, the optimized GPU code is 1421.741% faster. | ||

| + | |||

| + | ==== Test Suite ==== | ||

| + | |||

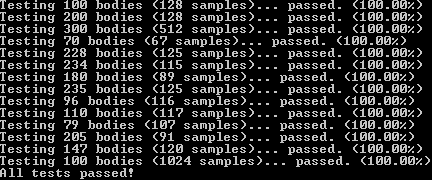

| + | [[File:cosmosis_assn3_test.png|border]] | ||

| + | |||

| + | During the initial stages of our optimizations, we noticed that incorrect data started showing up after some changes. In order to ensure that even after our optimizations the data was still correct we had to develop a comprehensive test suite. The test suite goes through multiple tests and compares host values (assumed 100% correct) to the device values. These values are compared using their final position after a number of samples. The test suite allows for 1.0 difference in values to compensate for floating-point errors. | ||

| + | |||

| + | ==== Conclusions ==== | ||

| + | |||

| + | Through the use of CUDA, we managed to achieve a total of 4229.33% speedup in time from serial CPU to the final optimized GPU. We used many basic techniques to achieve a speedup of 35.4% from the pre-optimized code, to the post-optimized code. There were several different parallelization techniques that we did not manage to get to work with our program that could have sped it up even further. One such thing was shared memory. | ||

| + | |||

| + | Our kernels accessed the same bodies for the calculations so we tried to implement shared memory so that threads in a current block can access them faster. It worked when n bodies was less than 1755 for graphics cards with a compute capability of 2.x. This is due to the fact that a body took up 28 bytes in memory, hence why 1755 bodies would not work because it took up 49,140 bytes (greater than the max shared memory a 2.x graphics card can hold: 48K). There was a roundabout way of feeding the kernel chunks of bodies at a time that only worked on some occasions, so we ended up scrapping it. | ||

| + | |||

| + | We initially intended on using the fast-math library provided by CUDA. At first our results were marginally faster than our previous code. Though after some optimizations we discovered that our code actually performed better than the fast-math library. With fast-math, it took 0.451889 seconds to process 1000 bodies for 512 samples, conversely without fast-math we got 0.409581 seconds, which is a considerable improvement. | ||

| + | |||

| + | ==== Optimized Code ==== | ||

| + | |||

| + | <div style='color:#000020;background:#f6f8ff;'> | ||

| + | <span style='color:#200080; font-weight:bold; '>void</span> __global__ SimCalc<span style='color:#308080; '>(</span>BodyArray a<span style='color:#308080; '>)</span> | ||

| + | <span style='color:#406080; '>{</span> | ||

| + | int_fast32_t idx <span style='color:#308080; '>=</span> blockIdx<span style='color:#308080; '>.</span>x <span style='color:#308080; '>*</span> blockDim<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span> threadIdx<span style='color:#308080; '>.</span>x<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span> <span style='color:#308080; '>(</span>idx <span style='color:#308080; '><</span> a<span style='color:#308080; '>.</span>size<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>const</span> _T G <span style='color:#308080; '>=</span> <span style='color:#008000; '>6.67384</span><span style='color:#006600; '>f</span> <span style='color:#308080; '>*</span> <span style='color:#003060; '>pow</span><span style='color:#308080; '>(</span><span style='color:#008000; '>10.0</span><span style='color:#006600; '>f</span><span style='color:#308080; '>,</span> <span style='color:#308080; '>-</span><span style='color:#008000; '>11.0</span><span style='color:#006600; '>f</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#595979; '>//precompute positions at index</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>const</span> _T px <span style='color:#308080; '>=</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Position<span style='color:#308080; '>.</span>x<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>const</span> _T py <span style='color:#308080; '>=</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Position<span style='color:#308080; '>.</span>y<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#595979; '>//mass at the index</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>const</span> _T M_idx <span style='color:#308080; '>=</span> G<span style='color:#308080; '>*</span>a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Mass<span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Force <span style='color:#308080; '>=</span> vec2_t<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>for</span> <span style='color:#308080; '>(</span>int_fast32_t j<span style='color:#308080; '>(</span><span style='color:#008c00; '>0</span><span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> j <span style='color:#308080; '>!</span><span style='color:#308080; '>=</span> a<span style='color:#308080; '>.</span>size<span style='color:#406080; '>;</span> <span style='color:#308080; '>+</span><span style='color:#308080; '>+</span>j<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span> <span style='color:#308080; '>(</span>idx <span style='color:#308080; '>!</span><span style='color:#308080; '>=</span> j<span style='color:#308080; '>)</span> <span style='color:#406080; '>{</span> | ||

| + | _T dx <span style='color:#308080; '>=</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>j<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Position<span style='color:#308080; '>.</span>x <span style='color:#308080; '>-</span> px<span style='color:#406080; '>;</span> | ||

| + | _T dy <span style='color:#308080; '>=</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>j<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Position<span style='color:#308080; '>.</span>y <span style='color:#308080; '>-</span> py<span style='color:#406080; '>;</span> | ||

| + | _T r <span style='color:#308080; '>=</span> <span style='color:#003060; '>sqrt</span><span style='color:#308080; '>(</span>dx<span style='color:#308080; '>*</span>dx <span style='color:#308080; '>+</span> dy<span style='color:#308080; '>*</span>dy<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | _T F <span style='color:#308080; '>=</span> <span style='color:#308080; '>(</span>M_idx<span style='color:#308080; '>*</span>a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>j<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Mass<span style='color:#308080; '>)</span><span style='color:#308080; '>/</span><span style='color:#308080; '>(</span>r<span style='color:#308080; '>*</span>r<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Force<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span><span style='color:#308080; '>=</span> F <span style='color:#308080; '>*</span> <span style='color:#308080; '>(</span>dx <span style='color:#308080; '>/</span> r<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Force<span style='color:#308080; '>.</span>y <span style='color:#308080; '>+</span><span style='color:#308080; '>=</span> F <span style='color:#308080; '>*</span> <span style='color:#308080; '>(</span>dy <span style='color:#308080; '>/</span> r<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | |||

| + | <span style='color:#200080; font-weight:bold; '>void</span> __global__ SimTick<span style='color:#308080; '>(</span>BodyArray a<span style='color:#308080; '>,</span> _T dt<span style='color:#308080; '>)</span> | ||

| + | <span style='color:#406080; '>{</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>int</span> idx <span style='color:#308080; '>=</span> blockIdx<span style='color:#308080; '>.</span>x <span style='color:#308080; '>*</span> blockDim<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span> threadIdx<span style='color:#308080; '>.</span>x<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#200080; font-weight:bold; '>if</span> <span style='color:#308080; '>(</span>idx <span style='color:#308080; '><</span> a<span style='color:#308080; '>.</span>size<span style='color:#308080; '>)</span> | ||

| + | <span style='color:#406080; '>{</span> | ||

| + | _T mass <span style='color:#308080; '>=</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Mass<span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Velocity<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span><span style='color:#308080; '>=</span> dt <span style='color:#308080; '>*</span> <span style='color:#308080; '>(</span>a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Force<span style='color:#308080; '>.</span>x <span style='color:#308080; '>/</span> mass<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Velocity<span style='color:#308080; '>.</span>y <span style='color:#308080; '>+</span><span style='color:#308080; '>=</span> dt <span style='color:#308080; '>*</span> <span style='color:#308080; '>(</span>a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Force<span style='color:#308080; '>.</span>y <span style='color:#308080; '>/</span> mass<span style='color:#308080; '>)</span><span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Position<span style='color:#308080; '>.</span>x <span style='color:#308080; '>+</span><span style='color:#308080; '>=</span> dt <span style='color:#308080; '>*</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Velocity<span style='color:#308080; '>.</span>x<span style='color:#406080; '>;</span> | ||

| + | a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Position<span style='color:#308080; '>.</span>y <span style='color:#308080; '>+</span><span style='color:#308080; '>=</span> dt <span style='color:#308080; '>*</span> a<span style='color:#308080; '>.</span>array<span style='color:#308080; '>[</span>idx<span style='color:#308080; '>]</span><span style='color:#308080; '>.</span>Velocity<span style='color:#308080; '>.</span>y<span style='color:#406080; '>;</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | <span style='color:#406080; '>}</span> | ||

| + | </div> | ||

| + | |||

| + | ==== Launch Control ==== | ||

| + | |||

| + | We used the following calculations to determine the the number of threads and blocks to launch with: | ||

| + | |||

| + | <div style='color:#000020;background:#f6f8ff;'> | ||

| + | numThreads_ <span style='color:#308080; '>=</span> prop<span style='color:#308080; '>.</span>maxThreadsPerMultiProcessor <span style='color:#308080; '>/</span> maxBlocks<span style='color:#406080; '>;</span> | ||

| + | numBlocks_ <span style='color:#308080; '>=</span> <span style='color:#308080; '>(</span>bodies_<span style='color:#308080; '>.</span>size<span style='color:#308080; '>(</span><span style='color:#308080; '>)</span> <span style='color:#308080; '>+</span> numThreads_ <span style='color:#308080; '>-</span> <span style='color:#008c00; '>1</span><span style='color:#308080; '>)</span> <span style='color:#308080; '>/</span> numThreads_<span style='color:#406080; '>;</span> | ||

| + | numThreads_ <span style='color:#308080; '>=</span> <span style='color:#308080; '>(</span>numThreads_ <span style='color:#308080; '>+</span> <span style='color:#008c00; '>1</span><span style='color:#308080; '>)</span> <span style='color:#308080; '>&</span> <span style='color:#308080; '>~</span><span style='color:#008c00; '>1</span><span style='color:#406080; '>;</span> | ||

| + | </div> | ||

| + | |||

| + | ==== Dynamic Shared Memory (not implemented) ==== | ||

| + | |||

| + | This is the roundabout way we thought of, of how to send in chunks to the kernel so that the kernel can handle shared memory size of no greater than the max shared memory size of the GPU: | ||

| + | <div style='color:#000000;background:#ffffff;'> | ||

| + | CHUNKSIZE <span style='color:#808030; '>=</span> <span style='color:#008c00; '>512</span><span style='color:#800080; '>;</span> | ||

| + | shared_ <span style='color:#808030; '>=</span> CHUNKSIZE <span style='color:#808030; '>*</span> <span style='color:#800000; font-weight:bold; '>sizeof</span><span style='color:#808030; '>(</span>SimBody<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>while</span> <span style='color:#808030; '>(</span>chunks <span style='color:#808030; '>></span> <span style='color:#008c00; '>0</span><span style='color:#808030; '>)</span> | ||

| + | <span style='color:#800080; '>{</span> | ||

| + | BodyArray ba <span style='color:#808030; '>=</span> <span style='color:#800080; '>{</span> <span style='color:#808030; '>&</span>arr<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>index<span style='color:#808030; '>]</span><span style='color:#808030; '>,</span> CHUNKSIZE <span style='color:#800080; '>}</span><span style='color:#800080; '>;</span> | ||

| + | SimCalc <span style='color:#808030; '><</span><span style='color:#808030; '><</span><span style='color:#808030; '><</span> numBlocks_<span style='color:#808030; '>,</span> numThreads_<span style='color:#808030; '>,</span> shared_ <span style='color:#808030; '>></span><span style='color:#808030; '>></span><span style='color:#808030; '>></span><span style='color:#808030; '>(</span>ba<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | cudaThreadSynchronize<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | SimTick <span style='color:#808030; '><</span><span style='color:#808030; '><</span><span style='color:#808030; '><</span> numBlocks_<span style='color:#808030; '>,</span> numThreads_<span style='color:#808030; '>,</span> shared_ <span style='color:#808030; '>></span><span style='color:#808030; '>></span><span style='color:#808030; '>></span><span style='color:#808030; '>(</span>ba<span style='color:#808030; '>,</span> timeStep<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | cudaThreadSynchronize<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | index <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> CHUNKSIZE<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#808030; '>-</span><span style='color:#808030; '>-</span>chunks<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800080; '>}</span> | ||

| + | chunks <span style='color:#808030; '>=</span> arr<span style='color:#808030; '>.</span>size <span style='color:#808030; '>/</span> CHUNKSIZE <span style='color:#808030; '>+</span> <span style='color:#008c00; '>1</span><span style='color:#800080; '>;</span> | ||

| + | index <span style='color:#808030; '>=</span> <span style='color:#008c00; '>0</span><span style='color:#800080; '>;</span> | ||

| + | </div> | ||

| + | It handles calculations in chunks so that the kernel can do calculations on body sizes of more than 1175 for devices with compute capabilities of 3.x. | ||

| + | |||

| + | Here is what the shared memory kernels would look like (not implemented because not correct): | ||

| + | <div style='color:#000000;background:#ffffff;'> | ||

| + | <span style='color:#800000; font-weight:bold; '>void</span> __global__ SimCalc<span style='color:#808030; '>(</span>BodyArray a<span style='color:#808030; '>)</span> | ||

| + | <span style='color:#800080; '>{</span> | ||

| + | int_fast32_t idx <span style='color:#808030; '>=</span> blockIdx<span style='color:#808030; '>.</span>x <span style='color:#808030; '>*</span> blockDim<span style='color:#808030; '>.</span>x <span style='color:#808030; '>+</span> threadIdx<span style='color:#808030; '>.</span>x<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>int</span> tid <span style='color:#808030; '>=</span> threadIdx<span style='color:#808030; '>.</span>x<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>extern</span> __shared__ SimBody sa<span style='color:#808030; '>[</span><span style='color:#808030; '>]</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>if</span> <span style='color:#808030; '>(</span>idx <span style='color:#808030; '>></span><span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span>size<span style='color:#808030; '>)</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>return</span><span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span> <span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>idx<span style='color:#808030; '>]</span><span style='color:#800080; '>;</span> | ||

| + | __syncthreads<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>const</span> _T G <span style='color:#808030; '>=</span> <span style='color:#008000; '>6.67384</span><span style='color:#006600; '>f</span> <span style='color:#808030; '>*</span> <span style='color:#603000; '>pow</span><span style='color:#808030; '>(</span><span style='color:#008000; '>10.0</span><span style='color:#006600; '>f</span><span style='color:#808030; '>,</span> <span style='color:#808030; '>-</span><span style='color:#008000; '>11.0</span><span style='color:#006600; '>f</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#696969; '>//precompute positions at index</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>const</span> _T px <span style='color:#808030; '>=</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Position<span style='color:#808030; '>.</span>x<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>const</span> _T py <span style='color:#808030; '>=</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Position<span style='color:#808030; '>.</span>y<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#696969; '>//mass at the index</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>const</span> _T M_idx <span style='color:#808030; '>=</span> G<span style='color:#808030; '>*</span>sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Mass<span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Force <span style='color:#808030; '>=</span> vec2_t<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>for</span> <span style='color:#808030; '>(</span>int_fast32_t j<span style='color:#808030; '>(</span><span style='color:#008c00; '>0</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> j <span style='color:#808030; '>!</span><span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span>size<span style='color:#800080; '>;</span> <span style='color:#808030; '>+</span><span style='color:#808030; '>+</span>j<span style='color:#808030; '>)</span> <span style='color:#800080; '>{</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>if</span> <span style='color:#808030; '>(</span>idx <span style='color:#808030; '>!</span><span style='color:#808030; '>=</span> j<span style='color:#808030; '>)</span> <span style='color:#800080; '>{</span> | ||

| + | _T dx <span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>j<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Position<span style='color:#808030; '>.</span>x <span style='color:#808030; '>-</span> px<span style='color:#800080; '>;</span> | ||

| + | _T dy <span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>j<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Position<span style='color:#808030; '>.</span>y <span style='color:#808030; '>-</span> py<span style='color:#800080; '>;</span> | ||

| + | _T r <span style='color:#808030; '>=</span> <span style='color:#603000; '>sqrt</span><span style='color:#808030; '>(</span>dx<span style='color:#808030; '>*</span>dx <span style='color:#808030; '>+</span> dy<span style='color:#808030; '>*</span>dy<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | _T F <span style='color:#808030; '>=</span> <span style='color:#808030; '>(</span>M_idx<span style='color:#808030; '>*</span>a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>j<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Mass<span style='color:#808030; '>)</span><span style='color:#808030; '>/</span><span style='color:#808030; '>(</span>r<span style='color:#808030; '>*</span>r<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Force<span style='color:#808030; '>.</span>x <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> F <span style='color:#808030; '>*</span> <span style='color:#808030; '>(</span>dx <span style='color:#808030; '>/</span> r<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Force<span style='color:#808030; '>.</span>y <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> F <span style='color:#808030; '>*</span> <span style='color:#808030; '>(</span>dy <span style='color:#808030; '>/</span> r<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800080; '>}</span> | ||

| + | __syncthreads<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800080; '>}</span> | ||

| + | a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>idx<span style='color:#808030; '>]</span> <span style='color:#808030; '>=</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800080; '>}</span> | ||

| + | |||

| + | <span style='color:#800000; font-weight:bold; '>void</span> __global__ SimTick<span style='color:#808030; '>(</span>BodyArray a<span style='color:#808030; '>,</span> _T dt<span style='color:#808030; '>)</span> | ||

| + | <span style='color:#800080; '>{</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>int</span> idx <span style='color:#808030; '>=</span> blockIdx<span style='color:#808030; '>.</span>x <span style='color:#808030; '>*</span> blockDim<span style='color:#808030; '>.</span>x <span style='color:#808030; '>+</span> threadIdx<span style='color:#808030; '>.</span>x<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>int</span> tid <span style='color:#808030; '>=</span> threadIdx<span style='color:#808030; '>.</span>x<span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>extern</span> __shared__ SimBody sa<span style='color:#808030; '>[</span><span style='color:#808030; '>]</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>if</span> <span style='color:#808030; '>(</span>idx <span style='color:#808030; '>></span><span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span>size<span style='color:#808030; '>)</span> | ||

| + | <span style='color:#800000; font-weight:bold; '>return</span><span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span> <span style='color:#808030; '>=</span> a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>idx<span style='color:#808030; '>]</span><span style='color:#800080; '>;</span> | ||

| + | __syncthreads<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | _T mass <span style='color:#808030; '>=</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Mass<span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Velocity<span style='color:#808030; '>.</span>x <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> dt <span style='color:#808030; '>*</span> <span style='color:#808030; '>(</span>sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Force<span style='color:#808030; '>.</span>x <span style='color:#808030; '>/</span> mass<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Velocity<span style='color:#808030; '>.</span>y <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> dt <span style='color:#808030; '>*</span> <span style='color:#808030; '>(</span>sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Force<span style='color:#808030; '>.</span>y <span style='color:#808030; '>/</span> mass<span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Position<span style='color:#808030; '>.</span>x <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> dt <span style='color:#808030; '>*</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Velocity<span style='color:#808030; '>.</span>x<span style='color:#800080; '>;</span> | ||

| + | sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Position<span style='color:#808030; '>.</span>y <span style='color:#808030; '>+</span><span style='color:#808030; '>=</span> dt <span style='color:#808030; '>*</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#808030; '>.</span>Velocity<span style='color:#808030; '>.</span>y<span style='color:#800080; '>;</span> | ||

| + | __syncthreads<span style='color:#808030; '>(</span><span style='color:#808030; '>)</span><span style='color:#800080; '>;</span> | ||

| + | a<span style='color:#808030; '>.</span><span style='color:#603000; '>array</span><span style='color:#808030; '>[</span>idx<span style='color:#808030; '>]</span> <span style='color:#808030; '>=</span> sa<span style='color:#808030; '>[</span>tid<span style='color:#808030; '>]</span><span style='color:#800080; '>;</span> | ||

| + | <span style='color:#800080; '>}</span> | ||

| + | </div> | ||

Latest revision as of 14:10, 13 April 2013

GPU610/DPS915 | Student List | Group and Project Index | Student Resources | Glossary

Contents

- 1 Cosmosis

- 1.1 Team Members

- 1.2 Links

- 1.3 Progress

- 1.3.1 Assignment 1

- 1.3.2 Assignment 2

- 1.3.3 Assignment 3

Cosmosis

Team Members

- Clinton Bale, Developer

- Alex Craig, Developer

- Jesse Santos, Developer

- Neil Guzman, Developer

Links

- Repo - code.google.com/p/gpu-nbody

- SFML - www.sfml-dev.org/

- SSE - en.wikipedia.org/wiki/Streaming_SIMD_Extensions

- N-Body - cs.princeton.edu/courses/archive/fall07/cos126/assignments/nbody.html

- Grids - resultsovercoffee.com/2011/02/cuda-blocks-and-grids.html

Progress

Assignment 1

For our assignment 1, we are looking into finding and N-body simulator. All of us in the group have agreed to find 1 each, and after profiling them, we will choose the most inefficient one to parallelize.

Alex's Profiling Findings

I have found a Java applet that uses a brute force method for an N-Body simulator. It also has an improved algorithm to speed up the simulation and allow for a lot more bodies to be added. I will profile and show some sample run results for both, and see which one may be more fitting to try and parallelize.

Example Profiles of Brute Force Algorithm

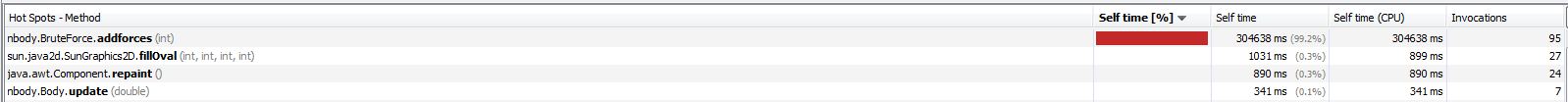

All of these profiles were ran for about 4 to 5 minutes each. It seems that when the body count is low (1000) the program spends most of its time in the addforces() function, which is a user defined function within one of the source files. However, when the body count gets higher, it seems that the program slows down so much from having to draw all of the bodies, it spends most of its time in the Java defined fillOval() function, rather than the addforces() function. I'm not entirely sure if we would be able to parrallelize that function, since it is in a library. It may be possible to simply define a function that does the same thing and put it in the program.

Example Profiles of Barnes Hut Algorithm

All of these profiles were ran for about 5 minutes each. The percentage of time the program spends in all of the functions is pretty consistent. Again, the Java defined fillOval() function is taking the longest amount of time. I'll have to look into adding that function into the main class. Although the updateForce() and insert() functions combined take over 25% of the execution time, so something can be done about that.

If someone knows how to add spoiler tags, it would be much appreciated if you could add them to my 2 groups of pictures.

Clinton's Profiling Findings

I decided to code my own N-Body simulator using the instructions and data found at cs.princeton.edu. I have created both a Windows and Linux version of the simulation, the Windows version supports drawing graphics to the screen while the Linux version does not. The implementation is coded in C++ and uses the "brute force" algorithm to calculate the bodies. While this implementation is "perfect", the run-time for it is O(n^2). I have also tried to implement a basic form of SSE (Streaming SIMD Extensions) which should increase the speed of the simulation. I will provide profiling for both SSE and non-SSE versions.

The profiling seen has been run with the following properties:

- Windows: i5 2500K @ 4.5Ghz

- Linux: Seneca Matrix

- Both drawing no graphics to the screen for unbiased results.

- Random position, velocity and mass for each body.

- Brute force algorithm for calculating the forces (O(n^2)).

Profile #1: 1000 Bodies CPU Usage

During this profile, the simulations are run for 240 seconds to determine which functions have the most CPU load. Inline functions are also disabled for this test so that we can properly determine where the bottlenecks of the code are.

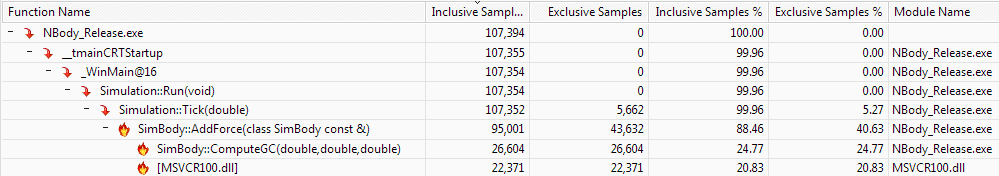

Windows:

As expected, the main source of CPU load is coming from the function that does all the work computing the forces of the planets. This function takes 88.46% of the entire application's worth in computing power for four minutes. The hotspots for the AddForces function can be seen here:

This picture shows that the majority of the computing involved comes from the square root function and computing the gravitational constant. Both of which require heavy floating point math to complete.

Linux:

Linux shows very similar results for 1000 elements, most, if not all the cpu usuage has gone to the AddForces function. With just over 3.7 billion calls to the AddForces function, we can see the slowdown of the O(n2) run-time immediately.

Profile #2: 1000 Bodies Timing (SSE and non-SSE)

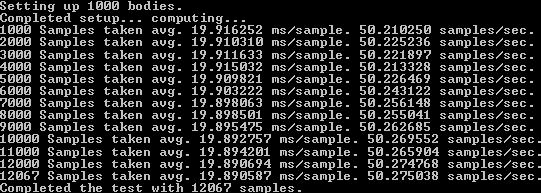

For this test, the simulations are run for 240 seconds to determine the amount of time it takes to calculate one “sample” which is one whole brute-force pass of all the bodies.

Windows (non-SSE):

This screenshot is a picture of the simulation running in the console. I added some information to show exactly how much information is being processed per second. Above you can see that on average it takes about 19.89 m/s to process one sample (full brute-force calculation). A nice speed which gives us about 50 samples per second. During the entire four minute long test, my Windows machine was able to execute 12067 samples.

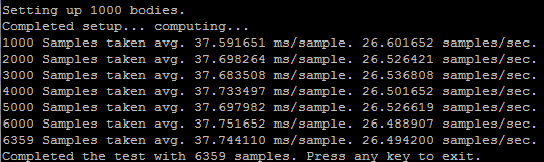

Linux (non-SSE):

On Seneca's Matrix server, the results are surprisingly much slower, about half the speed of my Windows machine using full optimizations. You can see that in the time it took for my machine to run 12067 samples, Matrix only completed 6359.

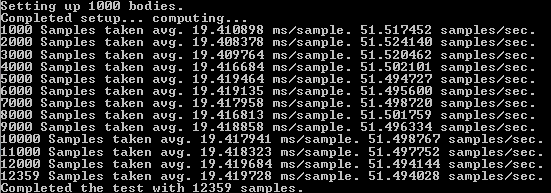

Windows (SSE):

I rewrote some of the functions for calculating the forces on the bodies to use SSE code. This is the first time I have ever written SSE code and may not be properly optimized for what it's doing. The performance increases of my implementation is negligible, but I'm sure that If I had more knowledge in the SSE architecture the performance difference would be much more noticeable.

After the rewrite of my calculation functions, I only gained about a 2.5% increase in speed, which is definitely not worth it.

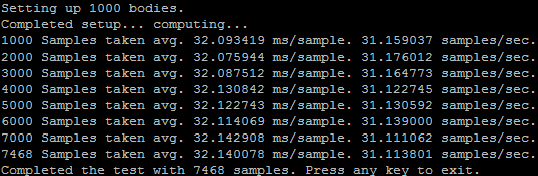

Linux (SSE):

To enable SSE on Linux, you can use the built in compiler parameters that g++ has to automatically generate SSE instructions for you:

-march=native -mfpmath=sse

Enabling this gave me a small performance boost of about 5 samples per second. Increasing my total sample count over four minutes to 7468 from 6359, that's a 15% increase in speed from just adding two compiler parameters, not bad.

Profile #3: 512 Samples 1000-5000 Bodies Comparison

For this final profile, I sampled the time difference between Linux and Windows. I include The Linux SSE and non-SSE versions, but only the standard Windows implementation due to the fact that the speed increase is next to nothing with my SSE version. The following test was timing the amount of seconds it took to compute 512 brute-force samples of the N-Body simulation, lower the better.

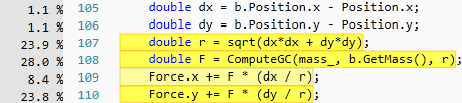

Parallelizable?

For my 2D N-Body simulation, you can spot the section of code where parallelization would give massive speedups. Since processors do things in a serial order, the following double-for loop is the cause for most of the delay in the application:

If I was to parallelize this code using CUDA, I would put the ResetForce and AddForce function calls into their own thread on the GPU. Therefore, instead of computing all the forces sequentially, they all get computed at once.

Neil's Profiling Findings

The program I found is an n-body simulation using a brute force algorithm. It essentially does an n-body simulation of O(n^2) efficiency and displays the visual output onto the screen. For testing purposes-as well as for compatibility issues-I disabled the visual output and redirected the function call, vga_setmode, to text mode (0)).

Testing Environment:

- Operating System: Ubuntu 12.10, 32-bit

- Compiler: GNU C++, version 4:4.7.2-1ubuntu2

- Processor: Intel(c) Core(tm) i5 CPU M 480 @ 2.67GHz x 4

- Graphics Card: Intel(c) HD Graphics 2000

- Memory: 5.7GB

How to Setup (on Linux machines with admin priveleges only):

- Download source file: | n-body-simulation

- Install required libraries/utils:

sudo apt-get install g++ sudo apt-get install libsvgal-dev

- Compile and run:

g++ galaxy_0.1.cpp -lvga -pg sudo ./a.out

Analysis:

The program contains 1 main function that takes up 90% of the execution time: Calculate_Gravity(). The reason being that the function has 3 for loops. 1 for loop to reinitialize the bodies' locations. The other 2 for loops are nested and is what does the main calculations of the simulation.

for (i=0; i<=NumP-1; i++) { xa[i]=0; ya[i]=0; za[i]=0; }

for (i=0; i<=NumP-1; i++)

{

for (j=i+1; j<=NumP-1; j++)

{

// calculating statements

Another hot spot in the code was the function planet::Interact(...) because it had to do a lot of calculations for each body

for (i=0;i<= NumP-1;i++)

if (P[i].exist) P[i].Interact(xa[i],ya[i],za[i],swx,swy,swz);

and then update the visual of each position.

if (ins)

{

vga_setcolor(colr);

vga_drawpixel (xpos,ypos);

if (prj) Projection(7,sc);

}

Right now, the functions are running once at a time, making the program run slower. If both Calculate_Gravity() and planet::Interact(...)'s for loops were parallelized, there would be a significant speedup.

Using Amdahl's Law:

S1344 = 1 / ( 1 - 0.9982 + 0.9982 / 1344) = 393.28

A ~393 times speedup would make a function that took 24.16 seconds to execute, only 0.06 seconds.

Difficulties Met:

The main difficulty was trying to find and install the library that would let the code compile. The library that the program uses, svgalibs, is an old library meant for displaying images on linux machines. This means that the program itself would try and access the /dev/tty of the linux system-this is also why you needed admin privileges to run the program.

Another difficulty was the initial reading of the code and trying to understand it. After a couple more reading, it becomes easier to understand and it looks like it could be improved much more by changing the graphics used and the structure of the code.

If we end up choosing this, the difficulty might light in the fact that we have to update the technologies used (graphics library), restructure the code, and possibly change the algorithm to Barnes-hut.

Summary:

- Algorithm used: Brute force O(n^2), is way too ineffecient for high numbers of n

- Can be improved upto O(n log n) through use of Barnes-hut algorithm

- Main function to parallelize: Calculate_Gravity(), due to these for loops:

for (i=0; i<=NumP-1; i++) { xa[i]=0; ya[i]=0; za[i]=0; }

for (i=0; i<=NumP-1; i++)

{

for (j=i+1; j<=NumP-1; j++)

{

// calculating statements

- Potential speedup, based on Amdahl's Law and with 1344 cores (GTX670): ~393 times

- This means going from 24.16 seconds of execution time to 0.06 seconds

Resources:

Flat Profiles for 100,500,1000,5000,10000 bodies

Jesse's Profiling Findings

I found an iterated approximation of an N-Body project on GitHub at: https://github.com/jrupac/N-body-Simulation. The simulation uses the brute force algorithm with a run-time of O(n^2). It uses SDL to draw squares which represent each body. I have removed all SDL so it only does the calculations. It also has collision detection with other bodies. It still collides with the window sides even though I have removed the window. I also included a high resolution timer by Song Ho Ahn. The timer class can be found at: http://www.cnblogs.com/JohnShao/archive/2011/10/14/2211085.html

Testing Environment:

- Windows: i5 3570K @ 5Ghz

- Raw computations, no graphical visualization

- The velocity of the point is modified. Not a proper force.

- Brute force algoritim for calculation the forces O(N^2)

Profile: 512 Samples of 500-1500 Bodies

I ran a series of N-Body simulations and sampled each run 512 times. The results are exactly as you would expect for an O(n^2) algorithm; a quadratic increase in time.

Analysis

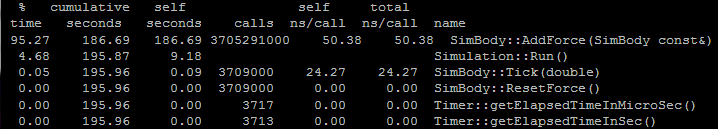

Using Visual Studio 10 profiler, it is clear that the update function is an expensive call path.

Although the program is an iterated approximation of an N-Body simulation, it is slower than a more proper N-Body simulation. The calculations are incorrect and the majority of them are unnecessary. The update function uses a double for loop to calculate the forces for each particle amongst one another. This is of O(n^2) runtime.

Summary