GPU621/Intel DAAL

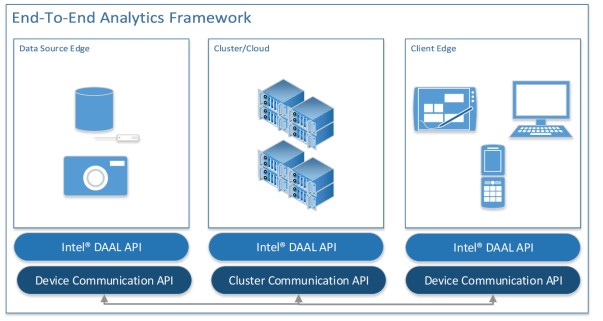

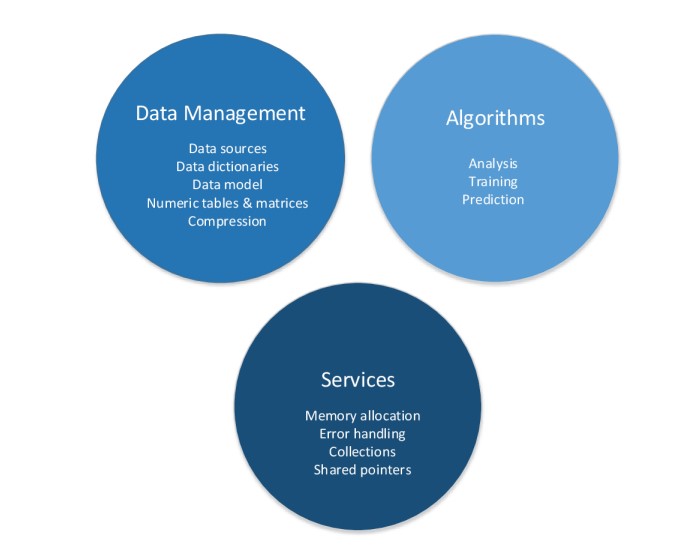

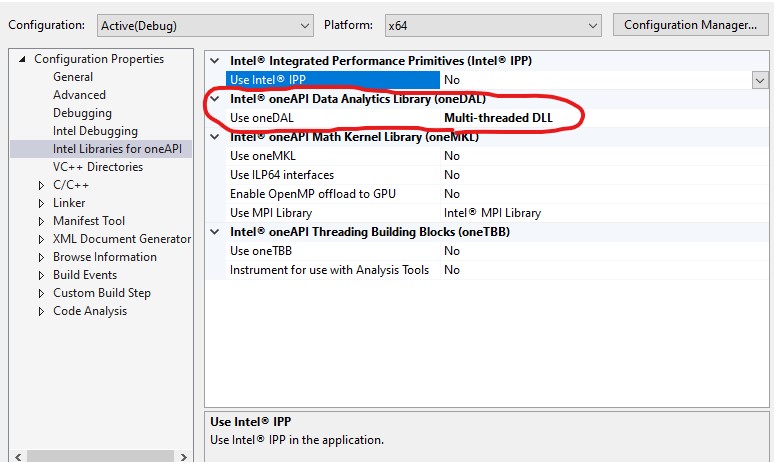

Latest revision as of 12:28, 7 December 2022

Intel DAAL

Intel Data Analytics Acceleration Library (DAAL, now distributed as oneDAL in the Intel oneAPI toolkits) is a library optimized for analytics on large data sources. It covers the full range of tasks that arise when working with large data: preprocessing, transformation, analysis, modeling, validation, and decision making. This makes it flexible enough to be used in many end-to-end analytics frameworks. In practice, this means we can use this one library to read data from files, store it, organize it in a structured way, and perform complex operations on it.

Having a complete framework is a powerful advantage, since we can be confident that all parts of the system work together. This appears to be one of the main appeals of the library: we do not have to worry that the way we load a data set (for example, reading a large CSV file) will limit our ability to process it algorithmically.

The framework is composed of three major components: data management, algorithms, and services.

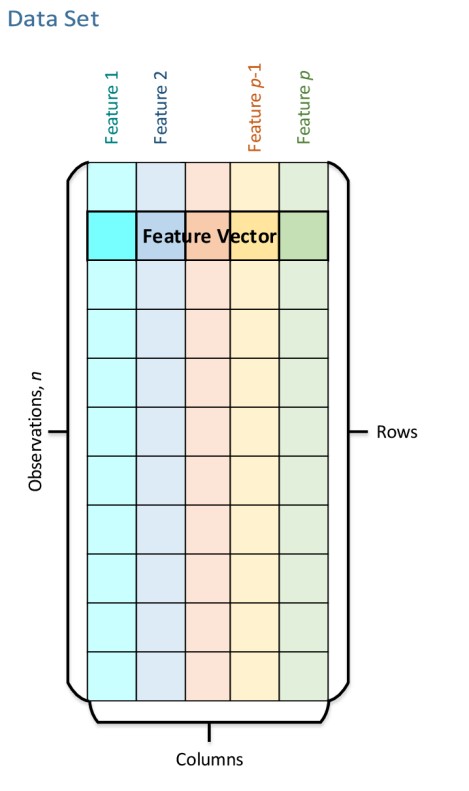

The data management part of the system is critical to the overall structure. Data must be formatted so that the algorithmic functions can operate on it quickly and efficiently, and this component also handles compression and decompression of very large data sets. It is the part of the system that reads long CSV files and places the data into models where the algorithms can access it. It also stores the data in such a way that the algorithms can still work with it even when some values are missing. The following image is an example of how data management structures data by placing it in a "data set". In the data set, table rows represent observations and columns represent features.
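To make the row/column layout concrete, here is a minimal C++ sketch, assuming a plain row-major `std::vector<double>` as storage. It is not the DAAL API (in DAAL this job is done by its data source and numeric table classes); the names `DataSet`, `parseCsv`, `columnMean` and `kMissing` are hypothetical. Empty CSV fields are stored as NaN, which is one simple way to let later computations recognize and skip missing values.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical row-major "data set": each row is an observation, each column a feature.
struct DataSet {
    std::size_t nRows = 0;
    std::size_t nColumns = 0;
    std::vector<double> values;  // values[row * nColumns + col]

    double at(std::size_t row, std::size_t col) const {
        assert(row < nRows && col < nColumns);
        return values[row * nColumns + col];
    }
};

// Marker for a missing value.
const double kMissing = std::numeric_limits<double>::quiet_NaN();

// Parse CSV text (numbers only, no header) into a DataSet.
// Empty fields, including a trailing empty field, are stored as NaN.
DataSet parseCsv(const std::string& text) {
    DataSet ds;
    std::istringstream lines(text);
    std::string line;
    while (std::getline(lines, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate CRLF
        if (line.empty()) continue;

        std::vector<double> row;
        std::istringstream fields(line);
        std::string field;
        while (std::getline(fields, field, ',')) {
            row.push_back(field.empty() ? kMissing : std::stod(field));
        }
        if (line.back() == ',') row.push_back(kMissing);  // getline drops a trailing empty field

        if (ds.nRows == 0) ds.nColumns = row.size();  // first row fixes the feature count
        if (row.size() > ds.nColumns) throw std::runtime_error("row has too many fields");
        row.resize(ds.nColumns, kMissing);  // short rows are padded with missing values
        ds.values.insert(ds.values.end(), row.begin(), row.end());
        ++ds.nRows;
    }
    return ds;
}

// Mean of one feature, skipping missing (NaN) entries; NaN if nothing is present.
double columnMean(const DataSet& ds, std::size_t col) {
    double sum = 0.0;
    std::size_t count = 0;
    for (std::size_t r = 0; r < ds.nRows; ++r) {
        double v = ds.at(r, col);
        if (!std::isnan(v)) {
            sum += v;
            ++count;
        }
    }
    return count > 0 ? sum / count : kMissing;
}
```

For example, parsing "1,2,3\n4,,6\n7,8,\n" gives a 3 x 3 table whose two empty fields are NaN, and `columnMean` on the second feature averages only the two values present, 2 and 8, giving 5.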

The algorithmic portion of the library supports three methods of computing: batch processing, online processing, and distributed processing, which are discussed later. To optimize performance, Intel DAAL builds on routines from the Intel Math Kernel Library (MKL) and the Intel Integrated Performance Primitives (IPP).

The services component rounds out the system, providing functionality used by the other components, such as memory allocation and error handling.

Example service functions include one that safely reads a whole file into a byte buffer:

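```cpp
#include <fstream>
#include <string>

#include "daal.h"  // defines daal::byte

// fileOpenError(), fileReadError() and checkAllocation() are error-reporting
// helpers from the oneDAL examples' service utilities.
size_t readTextFile(const std::string& datasetFileName, daal::byte** data)
{
    // Open in binary mode positioned at the end, so tellg() gives the file size
    std::ifstream file(datasetFileName.c_str(), std::ios::binary | std::ios::ate);
    if (!file.is_open())
    {
        fileOpenError(datasetFileName.c_str());
    }

    std::streampos end = file.tellg();
    file.seekg(0, std::ios::beg);
    size_t fileSize = static_cast<size_t>(end);

    // Allocate a buffer for the whole file and check the allocation
    (*data) = new daal::byte[fileSize];
    checkAllocation(*data);

    if (!file.read((char*)(*data), fileSize))
    {
        delete[] *data;  // free the buffer itself, not the pointer-to-pointer
        *data = nullptr;
        fileReadError();
    }

    return fileSize;
}
```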

How to enable

1) Download and install the Intel oneAPI Base Toolkit.

2) In Visual Studio, open Project Properties -> Intel Libraries for oneAPI -> Use oneDAL

Computation Modes

Computation modes refer to how the functions in the library interact with the data prepared by the data management component. There are three types of interaction: batch, online, and distributed. Batch and online modes are efficient ways of handling large data sets, while distributed processing falls under what we know as parallel processing across multiple compute nodes. One reason the computation is fast is simply that the data being accessed has already been efficiently organized by the data management component. Additionally, many functions in the library support two or even all three computation modes. This gives the developer a lot of freedom in deciding how to handle the data: for a modest data set, batch processing may be the better choice, whereas for a very large data set, distributed processing may make more sense.

Batching:

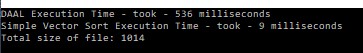

Many common functions in the library appear to use simple batch processing. In batch processing the algorithm works with the entire block of data at once, in a single call, which makes it the closest equivalent to ordinary serial code, although the library can still use multiple threads on a single machine. As a result, it remains well optimized even in this mode. For example, the sort function:

We can see that, compared with a quick sort on a vector, the DAAL version is much slower on a small data collection, but as the data set grows it outperforms the quick sort by a widening margin. This suggests that calling the function carries a small fixed overhead, so it is best reserved for larger data sets.

[Batch Sort Code Link](https://github.com/oneapi-src/oneDAL/blob/master/examples/daal/cpp/source/sorting/sorting_dense_batch.cpp)
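The batch pattern itself (the whole data block handed to one call) can be sketched in plain C++ as below. This is a standard-library stand-in, not DAAL's sorting API: `batchSortColumns` and `timeMs` are hypothetical names, the table is assumed to be row-major, and `timeMs` is just one way to time two implementations at increasing data sizes to look for the crossover point described above.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Batch-mode sketch: the entire row-major table (nRows x nColumns) is passed
// in a single call, and each feature column is sorted in ascending order.
void batchSortColumns(std::vector<double>& table, std::size_t nRows, std::size_t nColumns) {
    assert(table.size() == nRows * nColumns);
    std::vector<double> column(nRows);
    for (std::size_t c = 0; c < nColumns; ++c) {
        for (std::size_t r = 0; r < nRows; ++r) column[r] = table[r * nColumns + c];
        std::sort(column.begin(), column.end());
        for (std::size_t r = 0; r < nRows; ++r) table[r * nColumns + c] = column[r];
    }
}

// Times any callable and returns the elapsed wall-clock time in milliseconds.
template <typename F>
double timeMs(F&& f) {
    auto start = std::chrono::steady_clock::now();
    f();
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

For instance, a 3 x 2 table stored as {3, 9, 1, 7, 2, 8} becomes {1, 7, 2, 8, 3, 9} after `batchSortColumns`, because each column is sorted independently. Wrapping each candidate sort in `timeMs` and repeating for growing table sizes is a simple way to check where the fixed call overhead stops dominating.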

Online:

DAAL also supports online processing. In the online processing method, chunks of data are fed into the algorithm sequentially, so not all of the data is accessed at once. This is very beneficial when working with large data sets. As you can see in the example code, the number of rows in each block read from the data source is user-defined. The batch sort we just looked at has no such setting; it simply takes all of the data.

[SVD Code Example](https://github.com/oneapi-src/oneDAL/blob/master/examples/daal/cpp/source/svd/svd_dense_online.cpp)
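The following C++ sketch illustrates the online idea without the DAAL API. Data arrives in blocks of a user-defined number of rows (`nRowsInBlock` here), and only small running partial results are kept between blocks. The class and function names are hypothetical; the method names `compute` and `finalizeCompute` are chosen only to echo the per-block and final calls that DAAL's online examples appear to use. Welford's update is used so the running variance stays numerically stable over long streams.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical online estimator of mean and variance for one feature.
// Between blocks it stores only three numbers, never the data itself.
class OnlineMoments {
public:
    // Process one block of rows, updating the running partial results (Welford's method).
    void compute(const std::vector<double>& block) {
        for (double v : block) {
            ++n_;
            double delta = v - mean_;
            mean_ += delta / n_;
            m2_ += delta * (v - mean_);
        }
    }

    // Produce the final mean and population variance from the partial results.
    void finalizeCompute(double& mean, double& variance) const {
        assert(n_ > 0);
        mean = mean_;
        variance = m2_ / n_;
    }

private:
    std::size_t n_ = 0;
    double mean_ = 0.0;
    double m2_ = 0.0;  // sum of squared deviations from the running mean
};

// Feed an in-memory column to the algorithm nRowsInBlock rows at a time,
// standing in for reading successive blocks from a file.
void streamInBlocks(const std::vector<double>& column, std::size_t nRowsInBlock,
                    OnlineMoments& algorithm) {
    assert(nRowsInBlock > 0);
    for (std::size_t start = 0; start < column.size(); start += nRowsInBlock) {
        std::size_t end = std::min(start + nRowsInBlock, column.size());
        std::vector<double> block(column.begin() + start, column.begin() + end);
        algorithm.compute(block);
    }
}
```

The result does not depend on the block size: for the data {2, 4, 4, 4, 5, 5, 7, 9}, a block size of 1, 3, or the full column length yields the same mean (5) and variance (4), up to rounding.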

Distributed:

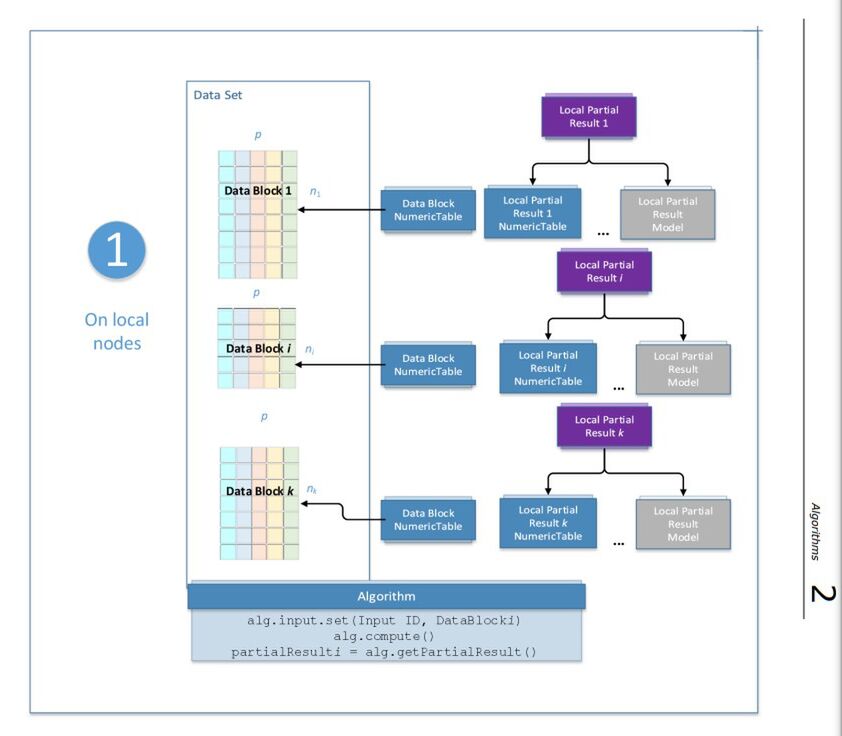

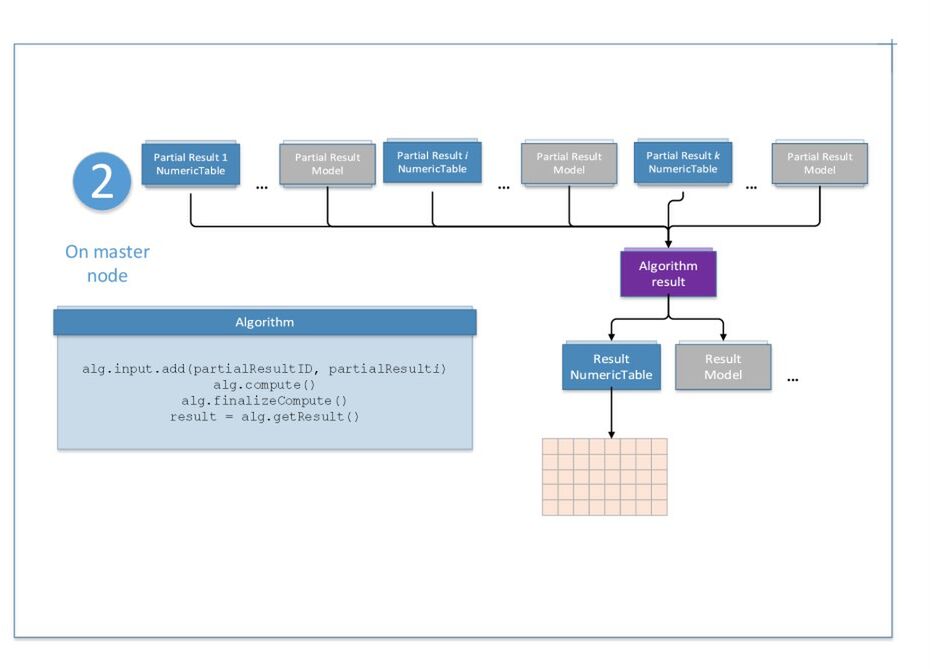

The final method of processing in the library is distributed processing. This is exactly what it sounds like: the library sends different chunks of data to different compute nodes, each node computes a partial result, and the partial results are finally combined in one place. These functions are best suited to larger data sets and more complex operations.

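Below is a list of example algorithms that are optimized for large data sets and use distributed processing:

[K-means Code Example](https://github.com/oneapi-src/oneDAL/blob/master/examples/daal/cpp/source/kmeans/kmeans_dense_distr.cpp)

[Moments of Low Order Example](https://github.com/oneapi-src/oneDAL/blob/master/examples/daal/cpp/source/moments/low_order_moms_dense_distr.cpp)

[Principal Component Analysis](https://github.com/oneapi-src/oneDAL/blob/master/examples/daal/cpp/source/pca/pca_cor_dense_distr.cpp)

[Singular Value Decomposition](https://github.com/oneapi-src/oneDAL/blob/master/examples/daal/cpp/source/svd/svd_dense_distr.cpp)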

Locally, our code operates on different chunks of the data.

On the master node, the results from all chunks are eventually combined.
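The local/master split can be sketched in plain C++ as follows, again without the DAAL API. Each chunk is summarized independently by `step1Local`, and `step2Master` merges the partial results using the standard pairwise formula for combining means and sums of squared deviations (the parallel variance formula of Chan et al.). The function names deliberately echo DAAL's `step1Local` and `step2Master` distributed steps, but the code, including `Partial` and `splitIntoChunks`, is a hypothetical sketch: the "nodes" run one after another in a loop here, whereas a real deployment would run them on separate compute nodes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Partial result produced by one local node for its chunk of data.
struct Partial {
    std::size_t n = 0;
    double mean = 0.0;
    double m2 = 0.0;  // sum of squared deviations from the chunk mean
};

// Split the data round-robin into nNodes roughly equal chunks, one per simulated node.
std::vector<std::vector<double>> splitIntoChunks(const std::vector<double>& data, std::size_t nNodes) {
    assert(nNodes > 0);
    std::vector<std::vector<double>> chunks(nNodes);
    for (std::size_t i = 0; i < data.size(); ++i) chunks[i % nNodes].push_back(data[i]);
    return chunks;
}

// Step 1 (local): each node summarizes only its own chunk.
Partial step1Local(const std::vector<double>& chunk) {
    Partial p;
    for (double v : chunk) {
        ++p.n;
        double delta = v - p.mean;
        p.mean += delta / p.n;
        p.m2 += delta * (v - p.mean);
    }
    return p;
}

// Step 2 (master): merge all partial results into one global result.
Partial step2Master(const std::vector<Partial>& partials) {
    Partial total;
    for (const Partial& p : partials) {
        if (p.n == 0) continue;  // an empty chunk contributes nothing
        std::size_t n = total.n + p.n;
        double delta = p.mean - total.mean;
        total.mean += delta * p.n / n;
        total.m2 += p.m2 + delta * delta * total.n * p.n / n;
        total.n = n;
    }
    return total;
}
```

Splitting {2, 4, 4, 4, 5, 5, 7, 9} into three chunks this way and merging the partial results gives the same mean (5) and variance (m2 / n = 4) as processing the data in one batch, which is the property that makes the final merge step valid.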