GPU621/GPU Targeters

Latest revision as of 13:01, 18 December 2020


OpenMP Device Offloading

OpenMP 4.0/4.5 introduced support for heterogeneous systems such as accelerators and GPUs. The purpose of this overview is to demonstrate OpenMP's device constructs used for offloading data and code from a host device (multicore CPU) to a target device environment (GPU/accelerator). We will demonstrate how to manage the device's data environment, parallelism, and work-sharing, review how data is mapped from the host data environment to the device data environment, and attempt to use different compilers that support OpenMP offloading, such as LLVM/Clang or GCC.

Group Members

1. Elena Sakhnovitch

2. Nathan Olah

3. Yunseon Lee

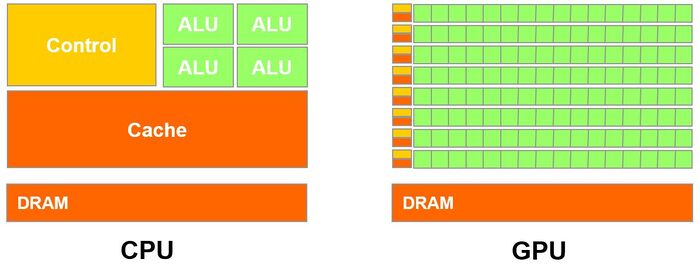

Difference of CPU and GPU for parallel applications

GPU (Graphics Processing Unit)

A GPU is designed with thousands of processor cores running simultaneously, which enables massive parallelism: each core focuses on efficiently performing repetitive, highly parallel calculations. The GPU is a specialized type of microprocessor originally designed for fast image rendering. However, modern graphics processors are powerful enough to accelerate other calculations that involve massive amounts of data. GPUs can perform parallel operations on multiple sets of data and, through parallelism, can complete more work in the same time than a CPU. Even with these abilities, a GPU can never fully replace the CPU, because its cores are limited in processing power and have a limited instruction set.

CPU (Central Processing Unit)

A CPU can work on a variety of different calculations. It usually has far fewer than 100 cores (typically 8-24) and can also do parallel computing using its instruction pipelines and its cores. Each core is strong and has significant processing power. For this reason, a CPU core can execute a large instruction set, but not too many instructions at a time. Compared to GPUs, CPUs are usually smarter and have large, wide instructions that manage every input and output of a computer.

What is the difference?

A CPU can work on a variety of different calculations, while a GPU is best at focusing all of its computing abilities on a specific task. The CPU consists of a few cores (up to 24) optimized for sequential serial processing. It is designed to maximize the performance of a single task within a job, yet it can handle a wide variety of tasks. A GPU, on the other hand, uses thousands of processor cores running simultaneously, which enables massive parallelism where each core focuses on efficiently performing repetitive, highly parallel computing tasks.

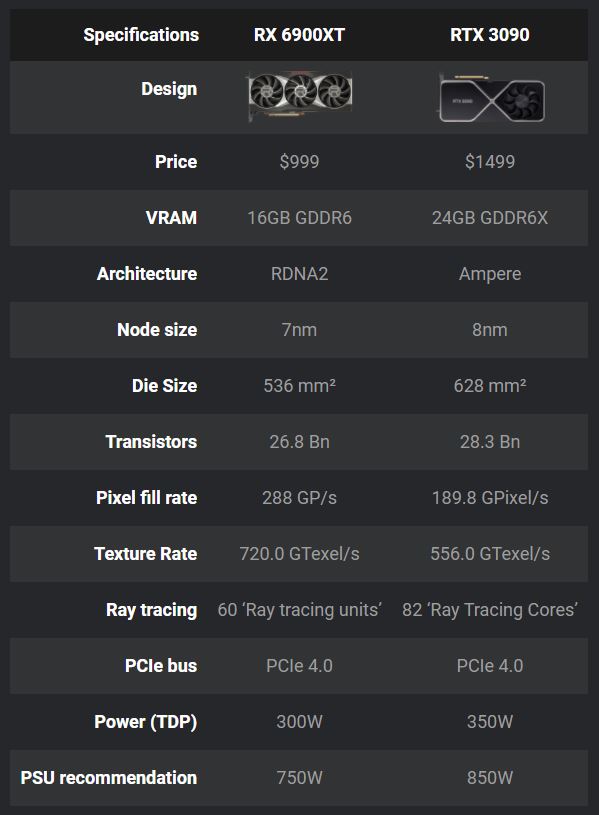

Latest Flagship GPU specs

AMD RX 6900 XT vs RTX 3090: Specifications:

Means of parallelisation on GPUs

CUDA

CUDA is a parallel computing platform and programming model developed by Nvidia for general computing on its own GPUs (graphics processing units). CUDA enables developers to speed up compute-intensive applications by harnessing the power of GPUs for the parallelizable part of the computation.

CUDA and NVIDIA GPUs have been improved together in past years. The combination of CUDA and Nvidia GPUs dominates several application areas, including deep learning, and is a foundation for some of the fastest computers in the world.

With CUDA version 9.2 and multiple P100 server GPUs, you can realize up to 50x performance improvements over CPUs.

HIP

What is the Heterogeneous-Computing Interface for Portability (HIP)? It’s a C++ dialect designed to ease the conversion of CUDA applications to portable C++ code. It provides a C-style API and a C++ kernel language. The C++ interface can use templates and classes across the host/kernel boundary.

The HIPify tool automates much of the conversion work by performing a source-to-source transformation from CUDA to HIP. HIP code can run on AMD hardware (through the HCC compiler) or Nvidia hardware (through the NVCC compiler) with no performance loss compared with the original CUDA code.

[More information: https://www.olcf.ornl.gov/wp-content/uploads/2019/09/AMD_GPU_HIP_training_20190906.pdf]

OpenCL (Open Computing Language)

What is OpenCL? It’s a framework for developing programs that can execute across a wide variety of heterogeneous platforms. AMD, Intel and Nvidia GPUs support version 1.2 of the specification, as do x86 CPUs and other devices (including FPGAs and DSPs). OpenCL provides a C run-time API and a C99-based kernel language.

When to Use OpenCL: Use OpenCL when you have existing code in that language and when you need portability to multiple platforms and devices. It runs on Windows, Linux and Mac OS, as well as a wide variety of hardware platforms (described above).

OpenMP (Open Multi-Processing)

OpenMP is a parallel programming model based on compiler directives, which allows application developers to incrementally add parallelism to their application code.

The OpenMP API specification provides an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran on most platforms. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

Benefits of OpenMP. Why choose it over the GPU kernel model?

- Supports multi-core, vectorization, and GPU
- Allows for "teams of threads"
- Portable between various platforms
- Heterogeneous memory allocation and custom data mappers

[More information (compare OpenMP syntax with CUDA, HIP and other): https://github.com/ROCm-Developer-Tools/aomp/blob/master/docs/openmp_terms.md]

Programming GPUs with OpenMP

Target Region

- The target region is the offloading construct in OpenMP.

int main() {

// This code executes on the host (CPU)

#pragma omp target

{

// This code executes on the device

}

}

- An OpenMP program will begin executing on the host (CPU).

- When a target region is encountered the code that is within the target region will begin to execute on a device (GPU).

If no other construct is specified (for instance #pragma omp parallel, which opens a parallel region), the code within the target region executes sequentially by default. The target region does not express parallelism; it only expresses where the contained code is going to be executed.

There is an implied synchronization between the host and the device at the end of a target region. At the end of a target region the host thread waits for the target region to finish execution and continues executing the next statements.
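As a minimal sketch of the behaviour described above, the following function offloads a sequential loop and relies on the implied synchronization at the end of the target region. The name `factorial_on_device` is a hypothetical helper chosen for illustration. If the compiler is not invoked with offloading support, the pragma is ignored and the loop simply runs on the host.

```c
/* Computes n! inside a target region. No parallel construct is present,
   so the loop runs sequentially on the device. */
long factorial_on_device(int n) {
    long result = 1;

    /* Scalars are firstprivate in a target region by default, so result
       is mapped explicitly in order to copy its final value back. */
    #pragma omp target map(tofrom: result)
    {
        for (int i = 2; i <= n; i++)
            result *= i;
    }

    /* The host thread only reaches this line after the target region
       has finished executing. */
    return result;
}
```

Calling `factorial_on_device(5)` from host code yields 120 whether or not a device is available, because the region falls back to the host when nothing can be offloaded.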

Mapping host and device data

- In order to access data inside the target region it must be mapped to the device.

- The host environment and device environment have separate memory.

- The host cannot access data that has been mapped to the device until the target region (device) has completed its execution.

The map clause controls how a variable is transferred between the host and the device for a target region.

#pragma omp target map(map-type : list)

- list specifies the data variables to be mapped from the host data environment to the target's device environment.

- map-type is one of the types to, from, tofrom, or alloc.

to - copies the data to the device on entry to the target region.

from - copies the data back to the host on exit from the target region.

tofrom - copies the data to the device on entry and back to the host on exit.

alloc - allocates an uninitialized copy on the device (without copying from the host environment).

// Offloading to the target device, but still without parallelism.

#pragma omp target map(to:A,B), map(tofrom:sum)

{

for (int i = 0; i < N; i++)

sum += A[i] + B[i];

}
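The map types can also be combined in a single target region. The sketch below is an illustration only: `scaled_squares`, `tmp` and the other names are hypothetical and do not come from the original page. It uses `to` for read-only input, `alloc` for a scratch buffer that never needs to be transferred, and `from` for the result.

```c
#include <stdlib.h>

/* Writes scale * in[i] * in[i] into out[i] for the first n elements.
   Returns 0 on success, -1 if the scratch buffer cannot be allocated. */
int scaled_squares(const int *in, int *out, int n, int scale) {
    int *tmp = malloc((size_t)n * sizeof(int)); /* scratch space only */
    if (tmp == NULL)
        return -1;

    /* in:  copied to the device on entry, never copied back
       tmp: allocated on the device, never copied in either direction
       out: not copied in, copied back to the host on exit */
    #pragma omp target map(to: in[0:n]) map(alloc: tmp[0:n]) map(from: out[0:n])
    {
        for (int i = 0; i < n; i++)
            tmp[i] = in[i] * in[i];
        for (int i = 0; i < n; i++)
            out[i] = tmp[i] * scale;
    }

    free(tmp);
    return 0;
}
```

Choosing the narrowest map type for each variable avoids transfers the program does not need, which matters because host-to-device copies are often the most expensive part of offloading.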

Dynamically allocated data

If we have dynamically allocated data in the host region that we'd like to map to the target region, we need to specify in the map clause the number of elements to copy over. Otherwise, all the compiler has is a pointer to some region in memory; it needs the size of the allocated memory that must be mapped to the target device.

int* a = (int*)malloc(sizeof(int) * N);
#pragma omp target map(to: a[0:N]) // [start:length]
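The `[start:length]` notation does not have to begin at element 0. The following sketch, with the hypothetical helper name `sum_upper_half`, maps only the upper half of a heap-allocated array; it is an illustration under that assumption, not code from the original page.

```c
#include <stdlib.h>

/* Fills a heap array with 0..n-1 and sums only its upper half on the
   device. Returns -1 if the allocation fails. */
long sum_upper_half(int n) {
    int *a = malloc((size_t)n * sizeof(int));
    if (a == NULL)
        return -1;
    for (int i = 0; i < n; i++)
        a[i] = i;

    int start = n / 2;       /* first element to map */
    int count = n - start;   /* number of elements to map */
    long total = 0;

    /* Only a[start] .. a[start + count - 1] are copied to the device;
       inside the region they keep their original indices. */
    #pragma omp target map(to: a[start:count]) map(tofrom: total)
    {
        for (int i = start; i < start + count; i++)
            total += a[i];
    }

    free(a);
    return total;
}
```

For `n = 10` the mapped section holds the values 5 to 9, so the function returns 35.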

Parallelism on the GPU

GPUs contain many streaming multiprocessors (SMs), each of which can run multiple threads.

OpenMP still allows us to use the traditional OpenMP constructs inside the target region to create and use threads on a device. However, a parallel region executing inside a target region will only execute on a single streaming multiprocessor (SM). Parallelization will therefore work, but it leaves most of the cores on the GPU idle.

Threads within one SM can synchronize with each other, but no synchronization is possible between SMs, since GPUs are not able to support a full threading model outside of a single streaming multiprocessor (SM).

// This executes on only one streaming multiprocessor (SM).

// Threads are still created, but the iterations are not distributed across other SMs.

#pragma omp target map(to:A,B), map(tofrom:sum)

#pragma omp parallel for reduction(+:sum)

for (int i = 0; i < N; i++) {

sum += A[i] + B[i];

}

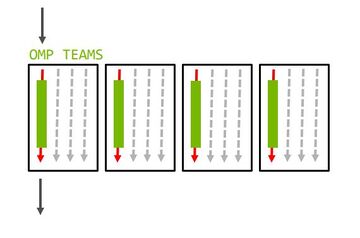

Teams construct

In order to provide parallelization across the GPU architecture, there is an additional construct known as the teams construct, which creates multiple master threads on the device. Each master thread can spawn a team of its own threads within a parallel region. However, threads cannot synchronize with threads outside of their own team.

int main() {

#pragma omp target // Offload to device

#pragma omp teams // Create teams of master threads

#pragma omp parallel // Create parallel region for each team

{

// Code to execute on GPU

}

}
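To see how many teams the runtime actually creates, each team's master thread can contribute 1 to a reduction. This is a rough sketch; `count_teams` is a hypothetical helper name, and the number of teams you get is implementation-defined: `num_teams` only sets an upper bound.

```c
/* Returns the number of teams that executed the region. The request is
   an upper bound, so the result lies between 1 and requested. */
int count_teams(int requested) {
    int teams = 0;

    /* Without a nested parallel region, only the master thread of each
       team executes the block, so each team adds exactly 1. */
    #pragma omp target teams num_teams(requested) \
        map(tofrom: teams) reduction(+: teams)
    {
        teams += 1;
    }

    return teams;
}
```

When the code is compiled without OpenMP support, or the region falls back to a host that runs a single team, the function returns 1.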

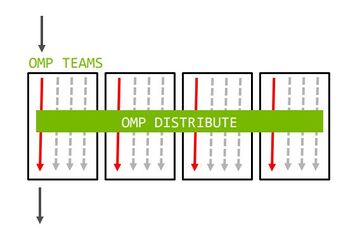

Distribute construct

The distribute construct allows us to distribute iterations. This means that if we offload a parallel loop to the device, we can distribute the iterations of the loop across all of the created teams, and across the threads within the teams.

It works similarly to the for construct, but distribute assigns the iterations to different teams (streaming multiprocessors).

// Distributes iterations to SMs, and across threads within each SM.

#pragma omp target teams distribute parallel for \
map(to: A,B), map(tofrom:sum) reduction(+:sum)

for (int i = 0; i < N; i++) {

sum += A[i] + B[i];

}
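For reference, here is a self-contained version of the loop above that can be compiled and checked. The array contents and the function name `sum_pairs` are assumptions made for the example; the page does not say how `A`, `B` and `N` are defined.

```c
#define N 1000

/* Adds up A[i] + B[i] for all i, with the iterations distributed across
   teams and across the threads inside each team. */
long sum_pairs(void) {
    static int A[N], B[N];
    for (int i = 0; i < N; i++) {
        A[i] = i;
        B[i] = 2 * i;
    }

    long sum = 0;

    /* The reduction gives every thread a private partial sum and
       combines them when the loop ends. */
    #pragma omp target teams distribute parallel for \
        map(to: A, B) map(tofrom: sum) reduction(+: sum)
    for (int i = 0; i < N; i++) {
        sum += A[i] + B[i];
    }

    return sum;
}
```

With these inputs every iteration contributes 3 * i, so the result is 1498500 regardless of how many teams and threads take part.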

Declare Target

Calling functions within the scope of a target region.

- The declare target construct will compile a version of a function that can be called on the device.

- In order to call a function inside a target region, the function must first be declared for the target.

#pragma omp declare target

int combine(int a, int b);

#pragma omp end declare target

#pragma omp target teams distribute parallel for \
map(to: A, B), map(tofrom:sum), reduction(+:sum)

for (int i = 0; i < N; i++) {

sum += combine(A[i], B[i]);

}
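The snippet above only declares `combine`. A complete sketch needs a definition that is also compiled for the device; the version below assumes that `combine` multiplies its arguments, which turns the loop into a dot product. That body, the array contents and the name `dot_product` are illustrative choices, not taken from the original page.

```c
#define N 256

/* Everything between the two pragmas is compiled for the host and for
   the device, so combine() may be called inside a target region. */
#pragma omp declare target
static int combine(int a, int b) {
    return a * b;
}
#pragma omp end declare target

/* Dot product of A = {1, 2, ..., N} and B = {2, 2, ..., 2}. */
long dot_product(void) {
    static int A[N], B[N];
    for (int i = 0; i < N; i++) {
        A[i] = i + 1;
        B[i] = 2;
    }

    long sum = 0;

    #pragma omp target teams distribute parallel for \
        map(to: A, B) map(tofrom: sum) reduction(+: sum)
    for (int i = 0; i < N; i++) {
        sum += combine(A[i], B[i]);
    }

    return sum;
}
```

The result is 2 * (1 + 2 + ... + 256) = 65792.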

Instructions for NVIDIA

How to set up the compiler and target offloading for Linux with a target NVIDIA GPU

Using LLVM/Clang with OpenMP offloading to NVIDIA GPUs on Linux. Clang 7.0 has introduced support for offloading to NVIDIA GPUs.

Since Clang's OpenMP implementation for NVIDIA GPUs does not currently support multiple GPU architectures in a single binary, you must know the target GPU when compiling an OpenMP program.

Before building the compiler, note that Clang/LLVM requires some software.

- To build an application, a compiler needs to be installed. Ensure that you have GCC version 4.8 or later, or Clang with any version greater than 3.1, installed.

- You will need standard Linux commands such as make, tar, and xz. Most of the time these tools are built into your Linux distribution.

- LLVM requires CMake; make sure you have at least version 3.4.3.

- For the runtime libraries, the system needs both libelf and its development headers.

- Finally, you will need the CUDA toolkit by NVIDIA; version 9.2 is recommended.

Download and Extract

You will need the LLVM Core libraries, Clang and OpenMP. Enter these commands into the terminal to download the required tarballs.

$ wget https://releases.llvm.org/7.0.0/llvm-7.0.0.src.tar.xz
$ wget https://releases.llvm.org/7.0.0/cfe-7.0.0.src.tar.xz
$ wget https://releases.llvm.org/7.0.0/openmp-7.0.0.src.tar.xz

Next step is to unpack the downloaded tarballs, using the following commands.

$ tar xf llvm-7.0.0.src.tar.xz
$ tar xf cfe-7.0.0.src.tar.xz
$ tar xf openmp-7.0.0.src.tar.xz

This will leave you with 3 directories called llvm-7.0.0.src, cfe-7.0.0.src, and openmp-7.0.0.src. Afterwards move these components into their directories so that they can be built together.

Enter the following commands.

$ mv cfe-7.0.0.src llvm-7.0.0.src/tools/clang
$ mv openmp-7.0.0.src llvm-7.0.0.src/projects/openmp
$ sudo usermod -a -G video $USER

Building the compiler

Let's begin by configuring the build of the compiler. We will use CMake to build this project in a separate directory. Enter the following commands.

$ mkdir build
$ cd build

Next use CMake to generate the Makefiles which will be used for compilation.

$ cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=$(pwd)/../install \
  -DCLANG_OPENMP_NVPTX_DEFAULT_ARCH=sm_60 \
  -DLIBOMPTARGET_NVPTX_COMPUTE_CAPABILITIES=35,60,70 ../llvm-7.0.0.src

After execution of the above statement the following should display towards the end of the output.

-- Found LIBOMPTARGET_DEP_LIBELF: /usr/lib64/libelf.so
-- Found PkgConfig: /usr/bin/pkg-config (found version "0.27.1")
-- Found LIBOMPTARGET_DEP_LIBFFI: /usr/lib64/libffi.so
-- Found LIBOMPTARGET_DEP_CUDA_DRIVER: <<<REDACTED>>>/libcuda.so
-- LIBOMPTARGET: Building offloading runtime library libomptarget.
-- LIBOMPTARGET: Not building aarch64 offloading plugin: machine not found in the system.
-- LIBOMPTARGET: Building CUDA offloading plugin.
-- LIBOMPTARGET: Not building PPC64 offloading plugin: machine not found in the system.
-- LIBOMPTARGET: Not building PPC64le offloading plugin: machine not found in the system.
-- LIBOMPTARGET: Building x86_64 offloading plugin.
-- LIBOMPTARGET: Building CUDA offloading device RTL.

Next, enter the following command to build the compiler:

$ make -j8

Afterwards, the built libraries and binaries have to be installed:

$ make -j8 install

Rebuild OpenMP Libraries

Now we need to rebuild the OpenMP runtime libraries with Clang.

First create a new build directory:

$ cd ..
$ mkdir build-openmp
$ cd build-openmp

Then configure the project with CMake using the Clang compiler built in the previous step:

$ cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX=$(pwd)/../install \
  -DCMAKE_C_COMPILER=$(pwd)/../install/bin/clang \
  -DCMAKE_CXX_COMPILER=$(pwd)/../install/bin/clang++ \
  -DLIBOMPTARGET_NVPTX_COMPUTE_CAPABILITIES=35,60,70 \
  ../llvm-7.0.0.src/projects/openmp

Then build and install the OpenMP runtime libraries:

$ make -j8
$ make -j8 install

Using the compiler for offloading

The preceding steps should leave you with a fully working Clang compiler with OpenMP support for offloading.

In order to use it you need to export some environment variables:

$ cd ..
$ export PATH=$(pwd)/install/bin:$PATH
$ export LD_LIBRARY_PATH=$(pwd)/install/lib:$LD_LIBRARY_PATH

Afterwards you will be able to compile an OpenMP application and offload it to a target device by running the Clang compiler with some additional flags that enable offloading.

$ clang -fopenmp -fopenmp-targets=nvptx64 -O2 foo.c
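A quick way to check whether a target region really ran on the GPU is the OpenMP runtime routine `omp_is_initial_device()`, which returns nonzero when called on the host. The sketch below wraps it in a hypothetical helper, `ran_on_device`; the `_OPENMP` guard is an addition so that the file still compiles when OpenMP is switched off.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Returns 1 if a target region executed on an accelerator and 0 if it
   ran on the host (no device found, or OpenMP disabled). */
int ran_on_device(void) {
    int on_host = 1;

#ifdef _OPENMP
    /* on_host is written inside the region and copied back on exit. */
    #pragma omp target map(from: on_host)
    {
        on_host = omp_is_initial_device();
    }
#endif

    return !on_host;
}
```

If this returns 0 for a binary built with the flags above, the region fell back to the host, which usually points to a driver, library path, or compute-capability mismatch rather than to a problem in the program itself.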

Instructions for AMD

How to set up compiler and target offloading for Linux on AMD GPU:

Note: the user should be a member of the 'video' group; if this doesn't help, you may also add the user to the 'render' group.

AOMP (https://github.com/ROCm-Developer-Tools/aomp) is an open source Clang/LLVM based compiler with added support for the OpenMP® API on Radeon™ GPUs.

To install the AOMP compiler on Ubuntu: https://github.com/ROCm-Developer-Tools/aomp/blob/master/docs/UBUNTUINSTALL.md

AOMP will install to /usr/lib/aomp. The AOMP environment variable will automatically be set to the install location. This may require a new terminal to be launched to see the change.

On Ubuntu 18.04 LTS (bionic beaver), run these commands:

wget https://github.com/ROCm-Developer-Tools/aomp/releases/download/rel_11.11-2/aomp_Ubuntu1804_11.11-2_amd64.deb
sudo dpkg -i aomp_Ubuntu1804_11.11-2_amd64.deb

Prerequisites

AMD KFD Driver

These commands are for supported Debian-based systems and target only the rock_dkms core component. More information can be found HERE.

echo 'SUBSYSTEM=="kfd", KERNEL=="kfd", TAG+="uaccess", GROUP="video"' | sudo tee /etc/udev/rules.d/70-kfd.rules
wget -qO - http://repo.radeon.com/rocm/apt/debian/rocm.gpg.key | sudo apt-key add -
echo 'deb [arch=amd64] http://repo.radeon.com/rocm/apt/debian/ xenial main' | sudo tee /etc/apt/sources.list.d/rocm.list
sudo apt update
sudo apt install rock-dkms
sudo reboot
sudo usermod -a -G video $USER

ALTERNATIVELY

You may also decide to install full ROCm (Radeon Open Compute) driver package, before installing AOMP package:

https://rocmdocs.amd.com/en/latest/Installation_Guide/Installation-Guide.html

More AOMP documentation:

https://rocmdocs.amd.com/en/latest/Programming_Guides/aomp.html

Hello world compilation example:

// File helloWorld.c

#include <omp.h>

#include <stdio.h>

int main()

{

#pragma omp parallel

{

printf("Hello world!");

}

}

Make sure to export your new AOMP to PATH

export AOMP="/usr/lib/aomp"
export PATH=$AOMP/bin:$PATH
clang -fopenmp helloWorld.c -o helloWorld
./helloWorld

Hello world on GPU example

// File helloWorld.c

#include <omp.h>

#include <stdio.h>

int main(void)

{

#pragma omp target

#pragma omp parallel

printf("Hello world from GPU! THREAD %d\n", omp_get_thread_num());

}

export AOMP="/usr/lib/aomp"
export PATH=$AOMP/bin:$PATH
export LIBOMPTARGET_KERNEL_TRACE=1
clang -O2 -fopenmp -fopenmp-targets=amdgcn-amd-amdhsa -Xopenmp-target=amdgcn-amd-amdhsa -march=gfx803 helloWorld.c -o helloWorld
./helloWorld

To find the name of your device to pass to -march (gfx803 in the example above), you may run the 'rocminfo' tool:

$ /opt/rocm/bin/rocminfo

If you run into further problems with compiling and running, try starting with the examples: https://github.com/ROCm-Developer-Tools/aomp/tree/master/examples/openmp

Sources

https://hpc-wiki.info/hpc/Building_LLVM/Clang_with_OpenMP_Offloading_to_NVIDIA_GPUs

http://www.nvidia.com/en-us/geforce/graphics-cards/30-series/

https://www.nvidia.com/content/dam/en-zz/Solutions/design-visualization/technologies/turing-architecture/NVIDIA-Turing-Architecture-Whitepaper.pdf AMD RX-580 GPU architecture

https://www.pcmag.com/encyclopedia/term/core-i7 AMD RX-580 GPU architecture

https://premiumbuilds.com/comparisons/rx-6900-xt-vs-rtx-3090/ -> compare Flagship GPU's 2020

http://www.nvidia.com/en-us/geforce/graphics-cards/30-series/ nvidia

https://www.pcmag.com/encyclopedia/term/core-i7 CPU picture

https://rocmdocs.amd.com/en/latest/Programming_Guides/Programming-Guides.html?highlight=hip <-- hip, openCL