GPU621/History of Parallel Computing


Latest revision as of 19:17, 1 December 2020


History of Parallel Computing and Multi-core Systems

We will be looking into the history and evolution of parallel computing by focusing on three distinct timelines:

1) The earliest developments of multi-core systems and how they gave rise to the realization of parallel programming

2) How chip makers marketed this new frontier in computing, specifically to enterprise businesses, their primary target audience

3) How quickly it gained traction, and when the two semiconductor giants Intel and AMD decided to introduce multi-core processors to home users, thus making parallel computing more widely available

From each of these timelines, we will inspect certain key events and how they affected the events that followed. This will help us understand how parallel computing came to fruition, its role and impact in many industries today, and what the future may hold.

Group Members

Omri Golan

Patrick Keating

Yuka Sadaoka

Progress

Update 1 (11/12/2020):

-> Basic definition of parallel computing

-> Research on the limitations of single-core systems and the subsequent advent of multi-core with respect to parallel computing capabilities

-> Earliest development and usage of parallel computing

-> History and development of supercomputers and their parallel computing nature (incl. modern supercomputers -> world's fastest supercomputer)

Update 2 (11/27/2020):

-> Added new content (Demise of Single-Core and Rise of Multi-Core Systems)

Update 3 (11/29/2020):

-> Added new content (Domination of Two Semiconductor Giants Intel and AMD In Multi-core Processor Development)

Update 4 (11/30/2020):

-> Earliest Applications of Parallel Computing

-> Added graphics to Parallel Programming vs. Concurrent Programming

-> Parallel Computing in Supercomputers and HPC

-> Added References section

Preface

Parallel Programming vs. Concurrent Programming

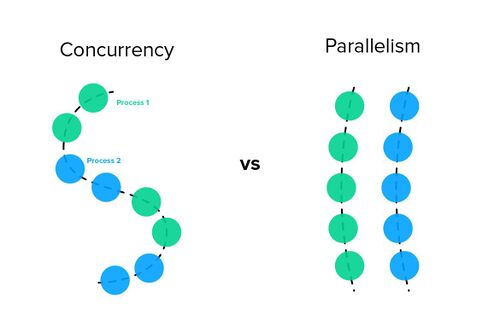

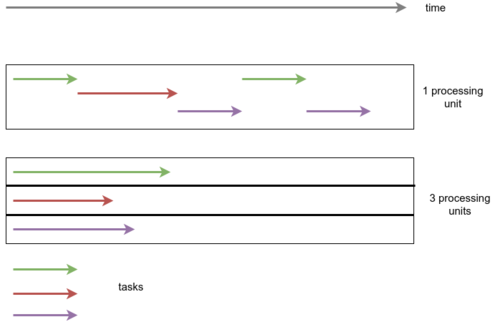

Parallel computing is the idea that a large problem can be split into smaller, independent tasks that run simultaneously on more than one processor. This concept is different from concurrent programming. Concurrent programming is the composition of multiple processes that may begin and end at different times, all managed by the host system’s task scheduler, which frequently switches between them. On a single processor, this gives the illusion of multitasking: multiple tasks are in progress at the same time, even though only one is executing at any given instant. Concurrent computing can occur on both single-core and multi-core processors, whereas parallel computing relies on distributing the workload across multiple physical processors. Thus, parallel computing is hardware-dependent, because it needs more than one processing unit to run tasks simultaneously.
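The following is a minimal Python sketch of the distinction, built on stated assumptions. `run_concurrently` is a hypothetical round-robin "scheduler" that interleaves generator-based tasks on a single thread. Several tasks are in progress at once, but only one step runs at any instant. `run_in_parallel` splits a sum into independent chunks and hands them to a `ThreadPoolExecutor`. All function names are illustrative. In CPython, the global interpreter lock prevents pure-Python threads from executing simultaneously, so the second function shows the decomposition rather than a real speedup. To actually run the chunks on separate cores, you would swap in `ProcessPoolExecutor` or use native threads in a language such as C++.

```python
from concurrent.futures import ThreadPoolExecutor


def task(name, steps):
    """A task that hands control back to the scheduler after every step."""
    for i in range(steps):
        yield f"{name}{i}"


def run_concurrently(tasks):
    """Round-robin 'scheduler' on a single thread: tasks are interleaved, so
    several are in progress at once, but only one step executes at a time."""
    trace = []
    queue = list(tasks)
    while queue:
        current = queue.pop(0)
        try:
            trace.append(next(current))
            queue.append(current)  # not finished: back of the line
        except StopIteration:
            pass  # finished: drop it from the queue
    return trace


def run_in_parallel(data, workers):
    """Split the problem into independent chunks and give each to a worker."""
    size = max(1, (len(data) + workers - 1) // workers)  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(sum, chunks)  # chunks never share data
        return sum(partial_sums)


print(run_concurrently([task("A", 3), task("B", 2)]))  # ['A0', 'B0', 'A1', 'B1', 'A2']
print(run_in_parallel(list(range(1, 101)), workers=4))  # 5050
```

The interleaved trace shows the concurrency pattern. Neither task waits for the other to finish, yet the two never execute at the same instant.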

Demise of Single-Core and Rise of Multi-Core Systems

Transition from Single to Multi-Core

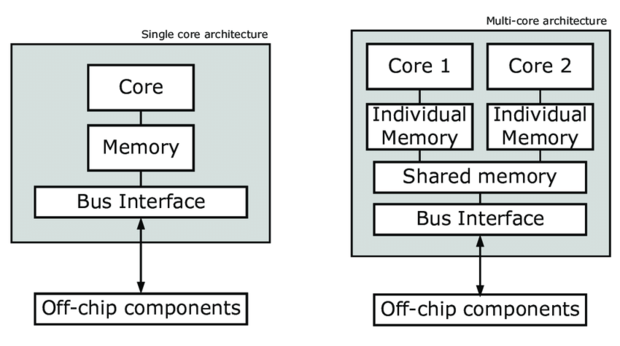

The transition from single-core to multi-core systems came from the need to address the limitations of manufacturing technologies for single-core systems. Single-core systems suffered from several limiting factors, including:

- individual transistor gate size

- physical limits in the design of integrated circuits, which produced significant heat that had to be dissipated

- synchronization issues with data coherency.

Instruction-Level Parallelism (ILP) is a common measure of how many instructions of a given program a processor can execute simultaneously. Single-core processors used several ILP techniques to improve performance, such as superscalar pipelining, speculative execution, and out-of-order execution. A superscalar processor can issue instructions to multiple pipelines within a single clock cycle. However, this technique, along with the other two, did not benefit many applications, because the number of independent instructions that can run simultaneously varies from program to program. These limits on ILP were largely dictated by the gap between processor speed and system memory access latency. The processor lost many cycles stalling while it waited for fetches from system memory to complete.
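To make the idea of ILP concrete, here is a simplified model. It is not a description of any real processor. The model assumes unlimited execution units and perfect register renaming, so an instruction waits only for its inputs (read-after-write dependencies). `ideal_ipc` is a hypothetical helper that returns instructions per cycle for a short instruction list. The 100-cycle latency used for the memory load is an assumed, illustrative figure.

```python
def ideal_ipc(program):
    """Instructions per cycle on an idealized out-of-order core.

    program: list of (dest, sources, latency) tuples in program order.
    Assumes unlimited execution units and perfect register renaming, so
    only true read-after-write dependencies delay an instruction.
    """
    ready = {}      # register -> cycle at which its value is available
    last_cycle = 0  # cycle at which the final result is produced
    for dest, sources, latency in program:
        # Start once every input is ready (registers never written count as ready).
        start = max((ready.get(src, 0) for src in sources), default=0)
        ready[dest] = start + latency
        last_cycle = max(last_cycle, ready[dest])
    return len(program) / last_cycle


# Four instructions that do not depend on each other.
independent = [("a", ["x"], 1), ("b", ["y"], 1), ("c", ["z"], 1), ("d", ["w"], 1)]
# A dependency chain: each instruction needs the previous result.
chain = [("a", ["x"], 1), ("b", ["a"], 1), ("c", ["b"], 1), ("d", ["c"], 1)]
# The same chain, but it starts with a load from memory (latency assumed).
load_chain = [("a", ["addr"], 100), ("b", ["a"], 1), ("c", ["b"], 1), ("d", ["c"], 1)]

print(ideal_ipc(independent))           # 4.0
print(ideal_ipc(chain))                 # 1.0
print(round(ideal_ipc(load_chain), 3))  # 0.039
```

All four independent instructions complete in a single cycle, while the dependency chain completes only one instruction per cycle. A single slow memory access at the head of the chain pulls throughput far below one instruction per cycle. That is the memory stall described above.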

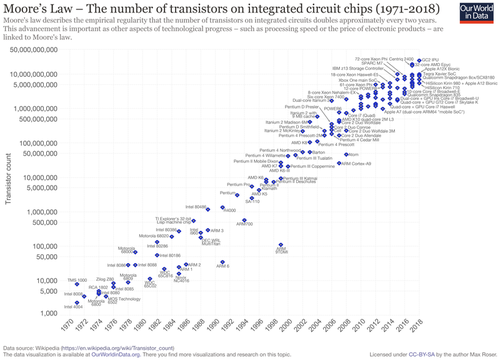

As manufacturing processes evolved in accordance with Moore’s Law, transistors shrank, allowing the number of transistors packed onto a single processor die (the physical silicon chip itself) to double roughly every two years. This growing transistor budget allowed more cores to fit on a die than before. As a result, the emphasis shifted toward thread-level parallelism, which many applications were better suited for and benefited from. Adding multiple cores to a processor also increased the system's overall parallel computing capabilities.
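The doubling described by Moore's Law is simple exponential arithmetic: N(t) = N0 * 2^((t - t0) / 2) for a two-year doubling period. The sketch below uses a hypothetical 1,000-transistor starting die and a hypothetical 8,000-transistor budget per core. These numbers are not historical data. They only show how a growing transistor count translates into room for more cores.

```python
def projected_transistors(base_count, base_year, year, doubling_years=2.0):
    """Idealized Moore's Law projection: the count doubles every `doubling_years`."""
    return base_count * 2 ** ((year - base_year) / doubling_years)


def cores_that_fit(transistor_budget, transistors_per_core):
    """How many whole cores of a fixed (hypothetical) size fit in the budget."""
    return int(transistor_budget // transistors_per_core)


# Hypothetical starting point: a 1,000-transistor die in year 0.
for year in (0, 2, 4, 10, 20):
    budget = projected_transistors(1_000, 0, year)
    print(year, round(budget), cores_that_fit(budget, 8_000))
# 0 1000 0
# 2 2000 0
# 4 4000 0
# 10 32000 4
# 20 1024000 128
```

Because the budget doubles every period while the assumed core size stays fixed, the number of cores that fit also doubles once the budget exceeds a single core.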

Developments in the first Multi-Core Processors

The death of single-core processors came at the time of the Pentium 4, when, as mentioned above, excessive heat and power consumption became an issue. At this point, multi-core processors such as the Pentium D were introduced. However, the Pentium D is not considered a “true” multi-core processor by today's definition, because its design placed two separate single-core dies side by side in the same processor package.

The world's first true multi-core processor was IBM's POWER4, released in 2001. It incorporated 2 physical cores on a single CPU die and implemented IBM's 64-bit PowerPC instruction set architecture (ISA). It was used in IBM's workstations, servers, and supercomputers of the time, namely the RS/6000 and AS/400 systems.

Usage of Parallel Computing and HPC

Earliest Applications of Parallel Computing

The idea and application of parallel computing predate the development of multi-core processors. In the 1960s and 1970s, parallel computing was heavily used in industries that relied on large R&D investments, such as aircraft design and defense, as well as for modelling scientific and engineering problems. Parallel computing gave a significant edge over regular single-threaded programming by directing more computing power at compute-intensive applications. This let complex scientific and engineering simulations take full advantage of the scaled processing power of parallel systems. These systems allowed such problems to be modelled accurately and enabled critical interactions between dynamic events to be tracked and recorded. The code for these simulations employed parallel programming techniques that fall under the MIMD (Multiple Instruction, Multiple Data) category of Flynn’s taxonomy, such as SPMD (Single Program, Multiple Data) and MPMD (Multiple Program, Multiple Data).
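Here is a minimal sketch of the SPMD idea in Python, using MPI-style names. Every worker ("rank") runs the same `spmd_program`, and the rank number alone decides which block of the data it processes. The `rank`/`size` naming is borrowed from MPI convention. The functions themselves are hypothetical, and threads stand in for the separate processors that a real SPMD code would use.

```python
from concurrent.futures import ThreadPoolExecutor


def block_range(rank, size, n):
    """Indices [start, stop) owned by `rank` when n items are split over `size`
    ranks. The first n % size ranks get one extra item, so loads differ by at most one."""
    base, extra = divmod(n, size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def spmd_program(rank, size, data):
    """Single Program: every rank runs this same function.
    Multiple Data: each rank only touches its own block of `data`."""
    start, stop = block_range(rank, size, len(data))
    return sum(x * x for x in data[start:stop])


def run_spmd(data, size):
    """Launch `size` copies of the program, then reduce their partial results."""
    with ThreadPoolExecutor(max_workers=size) as pool:
        partials = list(pool.map(lambda r: spmd_program(r, size, data), range(size)))
    return sum(partials)


print([block_range(r, 4, 10) for r in range(4)])  # [(0, 3), (3, 6), (6, 8), (8, 10)]
print(run_spmd(list(range(10)), size=4))          # 285
```

Under MPMD, by contrast, different ranks would run different programs. For example, one rank might read input while the others compute.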

Today, almost every industry has found at least one practical application for parallel computing. Its evolving capabilities are most visible in one dominant field: Artificial Intelligence, which makes heavy use of GPUs to train deep learning neural networks.

Parallelizing an existing serial program, or writing a new program with parallelism built in from the start, used to be a very tedious, iterative, and error-prone process. Identifying which parts of a program could be parallelized, and then implementing them, was complex and time-consuming. Over time, various tools have been developed to ease and shorten this manual process, such as parallelizing compilers, preprocessor directives (like OpenMP’s), and compiler flags (such as those passed on the command line). Manually analyzing a program for parallelization opportunities is still an important step in determining how much of a performance boost can be achieved and whether it is worth the time to implement.
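Amdahl's law is one common way to estimate "how much of a performance boost can be achieved" before investing in parallelization. It bounds the speedup S on p workers when only a fraction f of the runtime can be parallelized: S(p) = 1 / ((1 - f) + f / p). The function name below is hypothetical, but the formula is the standard one.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Amdahl's law: upper bound on speedup when only part of a program is parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction  # this part never gets faster
    return 1.0 / (serial + parallel_fraction / workers)


for p in (2, 4, 8, 1_000_000):
    print(p, round(amdahl_speedup(0.9, p), 2))
# 2 1.82
# 4 3.08
# 8 4.71
# 1000000 10.0
```

Suppose 90% of the runtime is parallelizable. Then 8 workers give at most about a 4.71x speedup, and no number of workers can exceed 10x, because the serial 10% never shrinks. This is why the analysis step still matters: a program with a large serial portion may not be worth the effort.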

Parallel Computing in Supercomputers and HPC

Parallel computing used to be largely confined to High Performance Computing (HPC): system architectures designed to handle high-speed, high-density calculations. When thinking of HPC, supercomputers are generally the machines that come to mind. Parallel programming became a significant sub-field of computer science by the late 1960s. Most compute-intensive processing happened on supercomputers that employed multiple physical CPUs on nodes (housed in racks) in a hybrid-memory model.
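The hybrid-memory model can be sketched in two levels. Between nodes, memory is distributed: each node gets a private copy of its slice, standing in for a message over the interconnect. Within a node, memory is shared: the threads on that node read the same list. This is a simplified illustration with hypothetical function names. For brevity, the nodes are simulated one after another. On a real machine they would run at the same time and communicate through a library such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, parts):
    """Split a list into `parts` contiguous, nearly equal slices."""
    base, extra = divmod(len(items), parts)
    out, start = [], 0
    for i in range(parts):
        stop = start + base + (1 if i < extra else 0)
        out.append(items[start:stop])
        start = stop
    return out


def node_work(local_data, cores):
    """Shared memory inside a node: all cores (threads) read the same local list."""
    with ThreadPoolExecutor(max_workers=cores) as pool:
        return sum(pool.map(sum, split(local_data, cores)))


def hybrid_sum(data, nodes, cores_per_node):
    """Distributed memory between nodes: each node receives its own copy of one
    slice, works on it locally, and returns a single partial result."""
    partials = [node_work(list(chunk), cores_per_node) for chunk in split(data, nodes)]
    return sum(partials)  # final combine step across nodes


print(hybrid_sum(list(range(1, 101)), nodes=3, cores_per_node=4))  # 5050
```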

The first supercomputer was designed and developed by Seymour Cray, an electrical engineer widely regarded as the father of supercomputing. He initially worked at Control Data Corporation, where he worked on the CDC 6600. That machine is generally considered the first supercomputer, and it was the fastest computer in the world from 1964 to 1969. He left in 1972 to form Cray Research, and in 1975 he announced his own supercomputer, the Cray-1. It was the most powerful supercomputer of its time and one of the most successful in history, and it remained in use until the late 1980s.

Fast forward to the 2000s, which saw a huge boom in the number of processors working in parallel, with counts reaching into the tens of thousands. A prime example of the evolution of parallel computing, High Performance Computing, and multi-core systems is Japan's Fugaku, the fastest supercomputer as of 2020. It boasts an impressive 7.3 million cores, all of them ARM-based, making it the first ARM-based system to rank as the world's fastest supercomputer. It uses a hybrid-memory model and a new network architecture that more tightly interconnects all of its nodes. The success of the new system marks a radical departure from traditional supercomputer designs toward ARM-powered systems. Its designers also wanted it to show that HPC still has much room for improvement and innovation.

How multicore products were marketed

Multicore systems offered much more processing power than single-core processors, so many companies leapt at the opportunity to gain more power for their servers. To capitalize on this, IBM developed the first dual-core processor on the market, the POWER4, which became available in 2001. It was highly successful and gave IBM a very strong foothold in the industry when sold as part of its eServer pSeries server, the IBM Regatta. IBM continued to iterate on the POWER series of processors, and in 2010 it expanded the number of cores from 2 to 8 with the release of the POWER7.

While IBM dominated the market for server CPUs, there was still a gap in the market for multicore desktop computers. In May 2005, AMD released its first dual-core desktop CPU, the Athlon 64 X2. Prices ranged from $500 for the cheapest model in the line to $1,000 for the most powerful, so it did not quite match IBM’s “twice the performance for half the cost”. However, it was still another major innovation from AMD and a top competitor for the most powerful CPU on the market.

Domination of Two Semiconductor Giants Intel and AMD In Multi-core Processor Development

Early Product Launches

After the initial introduction of multicore processors, on April 18, 2005, Intel announced that computer manufacturers Alienware, Dell, and Velocity Micro had started selling desktop PCs and workstations based on Intel's first dual-core processor platform. These dual-core systems were aimed at computer hobbyists and entertainment enthusiasts.

The following month, in May 2005, AMD released the Athlon 64 X2, its first dual-core desktop processor series. It also released the Turion processor line, designed for the low-power mobile processor segment. AMD intended the Turion to compete against Intel’s mobile processors, initially the Pentium M and later the Intel Core and Intel Core 2 processors.

Intel's Core series, released in January 2006, was also the first Intel processor line used as the main CPU in an Apple Macintosh computer. The Core Duo was the CPU for the first-generation MacBook Pro, while the Core Solo appeared in Apple's Mac Mini line. The Core Duo marked the beginning of Apple's shift to Intel processors across the entire Mac line. Core's successor was the mobile version of the Intel Core 2 line, whose processors used cores based on the Intel Core microarchitecture and were released on July 27, 2006. Core 2 processors were released for both desktops and notebooks, so the mobile Core 2 marked the reunification of Intel's desktop and mobile product lines. The Core 2 architecture reached a wide range of devices, but Intel needed to produce something less expensive for the ultra-low-budget and portable markets. This led to the creation of the Intel Atom between 2008 and 2009, which used a 26 mm² die, less than one-fourth the size of the first Core 2 dies.

Meanwhile, AMD launched dual-, triple-, and quad-core versions of the Phenom to target the budget desktop processor market. AMD considered the quad-core Phenoms to be the first "true" quad-core design because they were monolithic multi-core designs, with all cores on the same silicon die. Intel's Core 2 Quad series, by contrast, used a multi-chip module (MCM) design. The Phenom processors used the Socket AM2+ platform.

On August 8, 2008, Intel announced the Nehalem microprocessor under the new Core i7 brand of high-end microprocessors, intended to replace the Core 2 Duo. This brand targeted the business and high-end consumer markets for both desktop and laptop computers. Later that year, Intel planned to add more chips to its Celeron E1000 dual-core lineup. This would create a comprehensive family of affordable dual-core chips aimed at cost-effective desktops. The launch of the low-cost dual-core Celeron E1000-series processors was expected to force Intel's rival AMD into one of two responses. AMD could cut the prices of its entry-level single-core Athlon LE and Sempron chips, or it could introduce value dual-core processors of its own and reconsider the pricing of its single-core offerings.

With the processor market in a highly competitive state, Intel kept pushing its advantage. It reworked the Core architecture to create Nehalem, which added numerous enhancements, and released the Core i3, i5, and i7 processors. Core i7 was officially launched on November 17, 2008 as a family of three quad-core desktop processor models, and further models started appearing throughout 2009. Intel intended the Core i3 as the new low end of its performance processor line, following the retirement of the Core 2 brand.

AMD vs Intel in the 2010s

From the 2006 release of the Core microarchitecture onward, Intel continued to grow its market share for about ten years through the tick-tock improvement cycle of its Core series of microprocessors. As a result, AMD was almost completely absent from the high-end CPU market for many years. However, AMD officially announced a new series of processors named "Ryzen" at its New Horizon summit on December 13, 2016. It introduced the Ryzen 1000 series in February 2017, featuring up to 8 cores and 16 threads, and these processors launched on March 2, 2017. Ryzen marked AMD's return to the high-end CPU market, offering a product stack able to compete with Intel at every level. With more processing cores, Ryzen processors offer greater multi-threaded performance than Intel's Core processors at the same price point. Since the release of Ryzen, AMD's CPU market share has increased, while Intel's appears to have stagnated.

Multi-core Processor Today

Today, multicore processor makers including AMD and Intel continue to improve their processors to satisfy high consumer demand. As of November 2020, Intel and AMD have both announced new versions of their core product lines. Intel announced 11th Gen Intel Core processors with Intel Iris Xe graphics. AMD announced the Ryzen 5000 Series desktop processor lineup, powered by the new “Zen 3” architecture.

In 2020, the global multi-core processor market has become significantly more competitive. Today’s market is segmented into dual-core, quad-core, hexa-core, and octa-core processors. Ongoing advances in high-performance computing, graphics, and visualization technologies are anticipated to boost the growth of the multi-core processor market.
