Difference between revisions of "DPS921/Group 8"

(→Location of the problem - Local cache) |

(→Location of the problem - Local cache) |

||

| (22 intermediate revisions by 2 users not shown) | |||

| Line 2: | Line 2: | ||

Our Project: Analyzing False Sharing - Case Studies | Our Project: Analyzing False Sharing - Case Studies | ||

| − | https://wiki.cdot.senecacollege.ca/wiki/DPS921/Group_8 | + | == https://wiki.cdot.senecacollege.ca/wiki/DPS921/Group_8 == |

== Group Members == | == Group Members == | ||

| Line 16: | Line 16: | ||

= Location of the problem - Local cache = | = Location of the problem - Local cache = | ||

| − | False sharing is comment issue in symmetric multiprocessor (SMP) system. In SMP, each multi-processor has their own shared-memory architectures. There is a potential for those architectures being in a same cache line managed by the caching mechanism, as they are small enough | + | ===What is Cache=== |

| + | A cache is a small, fast array of memory placed between the processor core and main memory that stores portions of recently referenced main memory. The processor uses cache memory instead of main memory whenever possible to increase system performance. | ||

| + | |||

| + | False sharing is comment issue in symmetric multiprocessor (SMP) system. In SMP, each multi-processor has their own shared-memory architectures. There is a potential for those architectures being in a same cache line managed by the caching mechanism, as they are small enough. | ||

[[File:Smp.PNG]] | [[File:Smp.PNG]] | ||

| + | |||

| + | ===Caching Coherency Protocol=== | ||

| + | The caching coherency protocol would not allow other party to access the data. Except those data shares a cache block with data. Those data are protected by a hardware write lock that only one core can hold at a time. When two processors are both altered data, the protocol would force the first participant to update the whole unit despite a lack of logical necessity. Each update of an individual element of a cache line marks the line as invalid. Other processors accessing a different element in the same line see the line marked as invalid. They are forced to fetch a more recent copy of the line from memory or elsewhere, even though the element accessed has not been modified. This is because cache coherency is maintained on a cache-line basis, and not for individual elements. As a result, there will be an increase in interconnect traffic and overhead. | ||

| + | |||

| + | |||

| + | [[File:Painter_1.PNG]] | ||

| + | |||

| + | [[File:Painter_2.PNG]] | ||

| + | |||

| + | [[File:Painter_3.PNG]] | ||

===How MESI protocol works:=== | ===How MESI protocol works:=== | ||

| − | The cache line would be signed ‘Exclusive’ as its first load. As the processor find the cache line is loaded by other processors. The access of cache line is changed to ‘Share’. If the processor stored a cache line marked as ‘Share’, the cache line is marked as ‘Modified’ and all other processors are sent an ‘Invalid’ cache line message. | + | To ensure data consistency across multiple caches, multiprocessor-capable Intel® processors follow the MESI protocol. |

| + | |||

| + | The cache line would be signed ‘Exclusive’ as its first load. As the processor find the cache line is loaded by other processors. The access of cache line is changed to ‘Share’. If the processor stored a cache line marked as ‘Share’, the cache line is marked as ‘Modified’ and all other processors are sent an ‘Invalid’ cache line message. If the processor sees the same cache line which is now marked ‘Modified’ being accessed by another processor, the processor stores the cache line back to memory and marks its cache line as ‘Shared’. | ||

[[File: Untitled.png]] | [[File: Untitled.png]] | ||

= Signs of false sharing = | = Signs of false sharing = | ||

| − | False sharing is more than two threads updating at lease two independence element in the same cache line. False sharing occurs when processors in a shared-memory parallel system refer to data objects within a same cache line. So that the | + | False sharing is more than two threads updating at lease two independence element in the same cache line. False sharing occurs when processors in a shared-memory parallel system refer to data objects within a same cache line. So that false sharing has two unique characteristics. One is the data processes occur on one same cache line, the another is at least two threads participate the execution. |

let’s take an example. | let’s take an example. | ||

| Line 38: | Line 53: | ||

We will now explore two typical ways to deal with false sharing in an OMP environment. | We will now explore two typical ways to deal with false sharing in an OMP environment. | ||

| − | == Padding == | + | ____________________________________________________________________________________________________________________________________________________________ |

| + | |||

| + | <nowiki> | ||

| + | |||

| + | #include <iostream> | ||

| + | #include <iomanip> | ||

| + | #include <cstdlib> | ||

| + | #include <chrono> | ||

| + | #include <algorithm> | ||

| + | #include <omp.h> | ||

| + | #include "pch.h" | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | struct MyStruct | ||

| + | { | ||

| + | int value; | ||

| + | }; | ||

| + | |||

| + | int main(int argc, char** argv) | ||

| + | { | ||

| + | MyStruct testArr[4]; | ||

| + | |||

| + | int value[4] = {}; | ||

| + | |||

| + | omp_set_num_threads(4); | ||

| + | double start_time = omp_get_wtime(); | ||

| + | |||

| + | #pragma omp parallel for | ||

| + | for (int i = 0; i < 4; i++) { | ||

| + | |||

| + | testArr[i].value = 0; | ||

| + | |||

| + | for (int j = 0; j < 10000; j++) { | ||

| + | testArr[i].value = testArr[i].value + 1; | ||

| + | } | ||

| + | |||

| + | } | ||

| + | |||

| + | std::cout << "testArr value 1: " << testArr[0].value << std::endl; | ||

| + | std::cout << "testArr value 2: " << testArr[1].value << std::endl; | ||

| + | std::cout << "testArr value 3: " << testArr[2].value << std::endl; | ||

| + | std::cout << "testArr value 4: " << testArr[3].value << std::endl; | ||

| + | |||

| + | |||

| + | double time = omp_get_wtime() - start_time; | ||

| + | std::cout << "Execution Time: " << time << std::endl; | ||

| + | |||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | </nowiki> | ||

| + | |||

| + | [[File:serial.png]] | ||

| + | |||

| + | _________________________________________________________________________________________________________________________________________ | ||

| + | |||

| + | == '''Padding''' == | ||

One way to eliminating false sharing is to add in padding to the data. The idea of padding in general is for memory alignment, by utilizing padding we can eliminate cache line invalidation interfering with read and write of elements. | One way to eliminating false sharing is to add in padding to the data. The idea of padding in general is for memory alignment, by utilizing padding we can eliminate cache line invalidation interfering with read and write of elements. | ||

| Line 58: | Line 129: | ||

#include <omp.h> | #include <omp.h> | ||

#include "pch.h" | #include "pch.h" | ||

| − | |||

using namespace std::chrono; | using namespace std::chrono; | ||

struct MyStruct | struct MyStruct | ||

| − | { | + | { |

| − | + | int value; | |

| − | + | int padding[15]; | |

| − | }; | + | }; |

| − | int main(int argc, char** argv) | + | int main(int argc, char** argv) |

| − | { | + | { |

MyStruct testArr[4]; | MyStruct testArr[4]; | ||

| − | int | + | int value[4] = {}; |

| − | omp_set_num_threads( | + | |

| + | omp_set_num_threads(4); | ||

double start_time = omp_get_wtime(); | double start_time = omp_get_wtime(); | ||

| + | |||

| + | #pragma omp parallel for | ||

| + | for (int i = 0; i < 4; i++) { | ||

| + | |||

| + | testArr[i].value = 0; | ||

| − | |||

| − | |||

for (int j = 0; j < 10000; j++) { | for (int j = 0; j < 10000; j++) { | ||

| − | + | //#pragma omp critical | |

| − | + | testArr[i].value = testArr[i].value + 1; | |

} | } | ||

} | } | ||

| + | |||

| + | std::cout << "testArr value 1: " << testArr[0].value << std::endl; | ||

| + | std::cout << "testArr value 2: " << testArr[1].value << std::endl; | ||

| + | std::cout << "testArr value 3: " << testArr[2].value << std::endl; | ||

| + | std::cout << "testArr value 4: " << testArr[3].value << std::endl; | ||

| + | |||

double time = omp_get_wtime() - start_time; | double time = omp_get_wtime() - start_time; | ||

| Line 89: | Line 169: | ||

return 0; | return 0; | ||

} | } | ||

| + | |||

</nowiki> | </nowiki> | ||

____________________________________________________________________________________________________________________________________________________________ | ____________________________________________________________________________________________________________________________________________________________ | ||

| − | [[File: | + | [[File:padding.png]] |

| + | |||

| + | == '''Critical construct''' == | ||

| + | The other way to eliminating false sharing is to implement a mutual exclusion construct. This the better method than using padding as there is no wasting of memory and data access is not hindered due to cache line invalidation. Programming a mutual exclusion implementation is done by using the critical construct in an op environment. The critical construct restricts statements to a single thread to process at a time, making variables local to a single thread ensures that multiple threads do not write data to the same cache line. | ||

| + | |||

| + | ______________________________________________________________________________________________________________________________________________________________ | ||

| + | |||

| + | <nowiki> | ||

| + | #include <iostream> | ||

| + | #include <iomanip> | ||

| + | #include <cstdlib> | ||

| + | #include <chrono> | ||

| + | #include <algorithm> | ||

| + | #include <omp.h> | ||

| + | #include "pch.h" | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | struct MyStruct | ||

| + | { | ||

| + | int value; | ||

| + | }; | ||

| + | |||

| + | int main(int argc, char** argv) | ||

| + | { | ||

| + | MyStruct testArr[4]; | ||

| + | |||

| + | int value[4] = {}; | ||

| + | |||

| + | omp_set_num_threads(4); | ||

| + | double start_time = omp_get_wtime(); | ||

| + | |||

| + | #pragma omp parallel for | ||

| + | for (int i = 0; i < 4; i++) { | ||

| + | |||

| + | testArr[i].value = 0; | ||

| + | |||

| + | for (int j = 0; j < 10000; j++) { | ||

| + | #pragma omp critical | ||

| + | testArr[i].value = testArr[i].value + 1; | ||

| + | } | ||

| + | } | ||

| − | [[ | + | std::cout << "testArr value 1: " << testArr[0].value << std::endl; |

| + | std::cout << "testArr value 2: " << testArr[1].value << std::endl; | ||

| + | std::cout << "testArr value 3: " << testArr[2].value << std::endl; | ||

| + | std::cout << "testArr value 4: " << testArr[3].value << std::endl; | ||

| − | + | double time = omp_get_wtime() - start_time; | |

| + | std::cout << "Execution Time: " << time << std::endl; | ||

| + | return 0; | ||

| + | } | ||

| + | } | ||

| − | + | </nowiki> | |

| + | ______________________________________________________________________________________________________________________________________________________________ | ||

| − | + | ||

| − | + | [[File:critical.png]] | |

=Conclusion = | =Conclusion = | ||

False sharing is a lurking problem that hinders the scalabilty of a program and it can be easily missed. It is very important to keep and eye out for the problem and recognize it quickly in parallel programming where performance is key. The two methods we explored, padding and thread local variable, are both reliable solution to false sharing but having a local variable to a thread is definitely better than padding as padding is more resource wasteful making it counter intuitive in parallel programming. | False sharing is a lurking problem that hinders the scalabilty of a program and it can be easily missed. It is very important to keep and eye out for the problem and recognize it quickly in parallel programming where performance is key. The two methods we explored, padding and thread local variable, are both reliable solution to false sharing but having a local variable to a thread is definitely better than padding as padding is more resource wasteful making it counter intuitive in parallel programming. | ||

Latest revision as of 10:32, 28 November 2018

Contents

Group 8

Our Project: Analyzing False Sharing - Case Studies

https://wiki.cdot.senecacollege.ca/wiki/DPS921/Group_8

Group Members

False Sharing in Parallel Programming

Introduction

One of the most important component used when utilizing multicore programming is the cache. In most modern systems, the cache line which is used to for read and write of data, is a shared resource. Due to this, there is potential for conflict in other word there is a chance for false sharing.False sharing occurs when two or more thread access different elements of the same cache line. Both threads tries to update the cache line resulting in the invalidation of the cache line. We will now explore the problem of false sharing and the methods to solving the problem.

Location of the problem - Local cache

What is Cache

A cache is a small, fast array of memory placed between the processor core and main memory that stores portions of recently referenced main memory. The processor uses cache memory instead of main memory whenever possible to increase system performance.

False sharing is comment issue in symmetric multiprocessor (SMP) system. In SMP, each multi-processor has their own shared-memory architectures. There is a potential for those architectures being in a same cache line managed by the caching mechanism, as they are small enough.

Caching Coherency Protocol

The caching coherency protocol would not allow other party to access the data. Except those data shares a cache block with data. Those data are protected by a hardware write lock that only one core can hold at a time. When two processors are both altered data, the protocol would force the first participant to update the whole unit despite a lack of logical necessity. Each update of an individual element of a cache line marks the line as invalid. Other processors accessing a different element in the same line see the line marked as invalid. They are forced to fetch a more recent copy of the line from memory or elsewhere, even though the element accessed has not been modified. This is because cache coherency is maintained on a cache-line basis, and not for individual elements. As a result, there will be an increase in interconnect traffic and overhead.

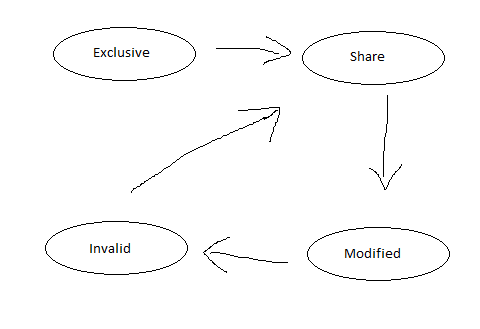

How MESI protocol works:

To ensure data consistency across multiple caches, multiprocessor-capable Intel® processors follow the MESI protocol.

The cache line would be signed ‘Exclusive’ as its first load. As the processor find the cache line is loaded by other processors. The access of cache line is changed to ‘Share’. If the processor stored a cache line marked as ‘Share’, the cache line is marked as ‘Modified’ and all other processors are sent an ‘Invalid’ cache line message. If the processor sees the same cache line which is now marked ‘Modified’ being accessed by another processor, the processor stores the cache line back to memory and marks its cache line as ‘Shared’.

Signs of false sharing

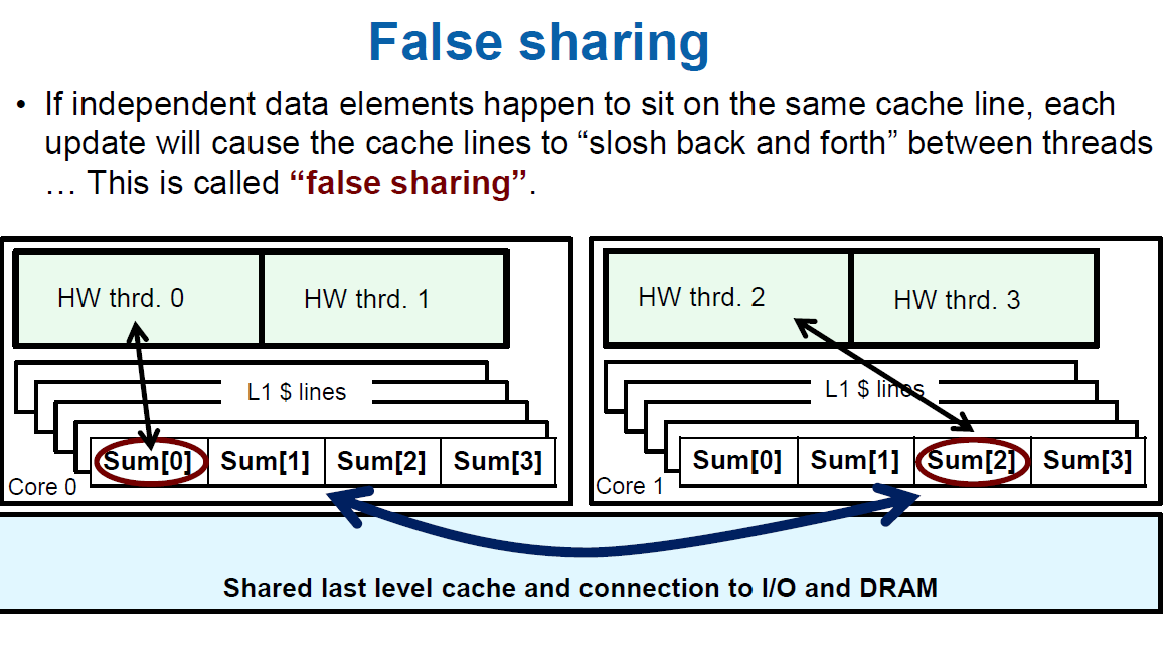

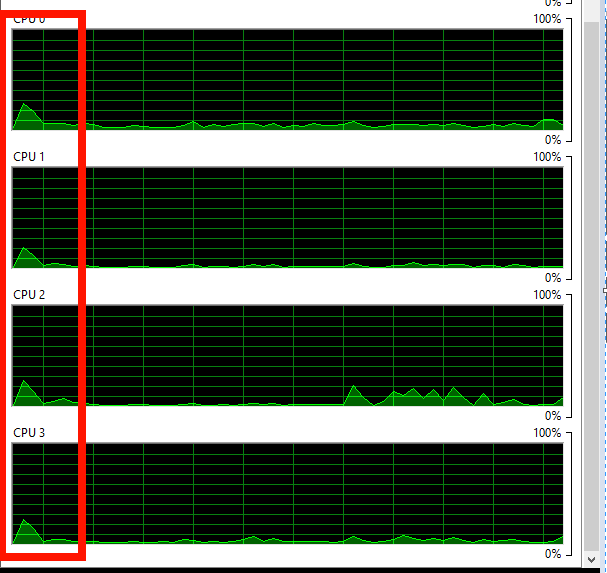

False sharing is more than two threads updating at lease two independence element in the same cache line. False sharing occurs when processors in a shared-memory parallel system refer to data objects within a same cache line. So that false sharing has two unique characteristics. One is the data processes occur on one same cache line, the another is at least two threads participate the execution. let’s take an example.

Thread 0 and thread 2 are executed and stored data in same cache line, which are sum[0] and sum[2]. HW thread 0 alter variable sum[0], Thread 2 is going to change value in Sum[2]. As the thread 0 finish the process. The changed must reflect on the cache line before thread 2 execute.

Solutions

We will now explore two typical ways to deal with false sharing in an OMP environment.

____________________________________________________________________________________________________________________________________________________________

#include <iostream>

#include <iomanip>

#include <cstdlib>

#include <chrono>

#include <algorithm>

#include <omp.h>

#include "pch.h"

using namespace std::chrono;

struct MyStruct

{

int value;

};

int main(int argc, char** argv)

{

MyStruct testArr[4];

int value[4] = {};

omp_set_num_threads(4);

double start_time = omp_get_wtime();

#pragma omp parallel for

for (int i = 0; i < 4; i++) {

testArr[i].value = 0;

for (int j = 0; j < 10000; j++) {

testArr[i].value = testArr[i].value + 1;

}

}

std::cout << "testArr value 1: " << testArr[0].value << std::endl;

std::cout << "testArr value 2: " << testArr[1].value << std::endl;

std::cout << "testArr value 3: " << testArr[2].value << std::endl;

std::cout << "testArr value 4: " << testArr[3].value << std::endl;

double time = omp_get_wtime() - start_time;

std::cout << "Execution Time: " << time << std::endl;

return 0;

}

_________________________________________________________________________________________________________________________________________

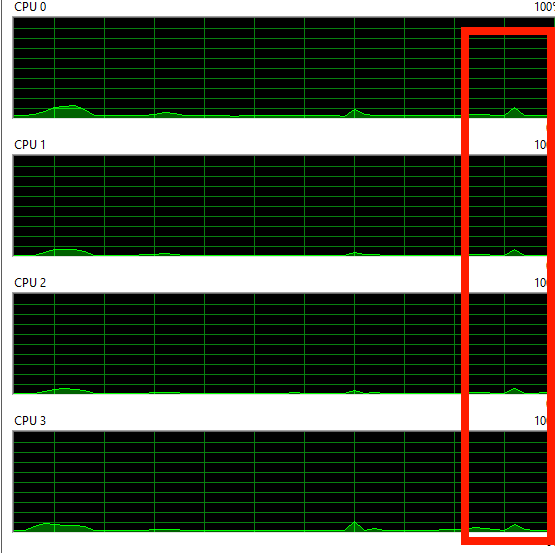

Padding

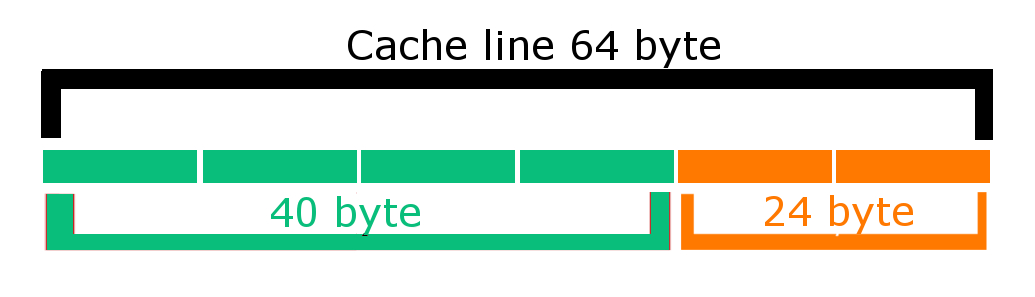

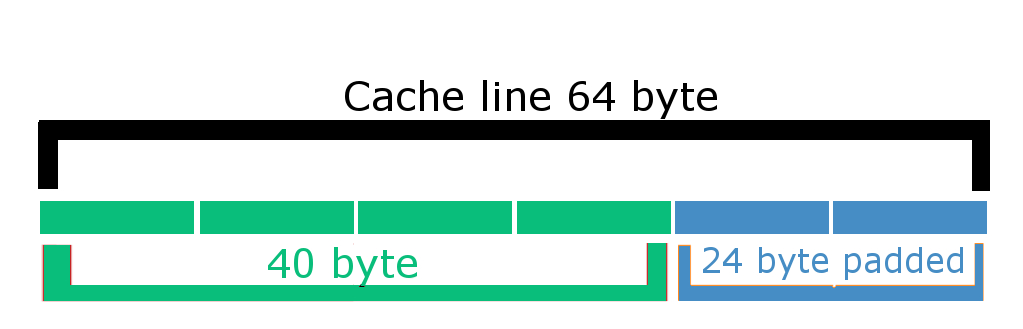

One way to eliminating false sharing is to add in padding to the data. The idea of padding in general is for memory alignment, by utilizing padding we can eliminate cache line invalidation interfering with read and write of elements.

How padding works: Let's say we have an int element num[i] = 10; in memory this would be stored as 40 bytes ( 10 * 4 byte) and a single standard cache line is 64 byte which means 24 byte needs to be padded otherwise another element will occupy that region which will result in 2 or more thread accessing same cache line causing false sharing.

____________________________________________________________________________________________________________________________________________________________

#include <iostream>

#include <iomanip>

#include <cstdlib>

#include <chrono>

#include <algorithm>

#include <omp.h>

#include "pch.h"

using namespace std::chrono;

struct MyStruct

{

int value;

int padding[15];

};

int main(int argc, char** argv)

{

MyStruct testArr[4];

int value[4] = {};

omp_set_num_threads(4);

double start_time = omp_get_wtime();

#pragma omp parallel for

for (int i = 0; i < 4; i++) {

testArr[i].value = 0;

for (int j = 0; j < 10000; j++) {

//#pragma omp critical

testArr[i].value = testArr[i].value + 1;

}

}

std::cout << "testArr value 1: " << testArr[0].value << std::endl;

std::cout << "testArr value 2: " << testArr[1].value << std::endl;

std::cout << "testArr value 3: " << testArr[2].value << std::endl;

std::cout << "testArr value 4: " << testArr[3].value << std::endl;

double time = omp_get_wtime() - start_time;

std::cout << "Execution Time: " << time << std::endl;

return 0;

}

____________________________________________________________________________________________________________________________________________________________

Critical construct

The other way to eliminating false sharing is to implement a mutual exclusion construct. This the better method than using padding as there is no wasting of memory and data access is not hindered due to cache line invalidation. Programming a mutual exclusion implementation is done by using the critical construct in an op environment. The critical construct restricts statements to a single thread to process at a time, making variables local to a single thread ensures that multiple threads do not write data to the same cache line.

______________________________________________________________________________________________________________________________________________________________

#include <iostream>

#include <iomanip>

#include <cstdlib>

#include <chrono>

#include <algorithm>

#include <omp.h>

#include "pch.h"

using namespace std::chrono;

struct MyStruct

{

int value;

};

int main(int argc, char** argv)

{

MyStruct testArr[4];

int value[4] = {};

omp_set_num_threads(4);

double start_time = omp_get_wtime();

#pragma omp parallel for

for (int i = 0; i < 4; i++) {

testArr[i].value = 0;

for (int j = 0; j < 10000; j++) {

#pragma omp critical

testArr[i].value = testArr[i].value + 1;

}

}

std::cout << "testArr value 1: " << testArr[0].value << std::endl;

std::cout << "testArr value 2: " << testArr[1].value << std::endl;

std::cout << "testArr value 3: " << testArr[2].value << std::endl;

std::cout << "testArr value 4: " << testArr[3].value << std::endl;

double time = omp_get_wtime() - start_time;

std::cout << "Execution Time: " << time << std::endl;

return 0;

}

}

______________________________________________________________________________________________________________________________________________________________

Conclusion

False sharing is a lurking problem that hinders the scalabilty of a program and it can be easily missed. It is very important to keep and eye out for the problem and recognize it quickly in parallel programming where performance is key. The two methods we explored, padding and thread local variable, are both reliable solution to false sharing but having a local variable to a thread is definitely better than padding as padding is more resource wasteful making it counter intuitive in parallel programming.