Difference between revisions of "GPU621/Intel Advisor"


# [mailto:jespiritu@myseneca.ca?subject=GPU621 Jeffrey Espiritu]
# [mailto:tahmed36@myseneca.ca?subject=GPU621 Thaharim Ahmed]
# [mailto:tnolte@myseneca.ca?subject=GPU621 Thomas Nolte]
# [mailto:jespiritu@myseneca.ca;tahmed36@myseneca.ca?subject=GPU621/DPS921 eMail All]

== Introduction == | == Introduction == | ||

| − | [https://software.intel.com/en-us/advisor Intel Advisor] is software tool that you can use to help you add multithreading to your | + | [https://software.intel.com/en-us/advisor Intel Advisor] is software tool that you can use to help you add multithreading to your application or parts of your application without disrupting your normal software development. Not only can you use it add multithreading to your application, it can be used to determine whether the performance improvements that come with multithreading are worth adding when you consider the costs associated with multithreading such as maintainability, more difficult to debug, and the effort with refactoring or reorganizing your code to resolve data dependencies. |

It is also a tool that can help you add vectorization to your program or to improve the efficiency of code that is already vectorized. | It is also a tool that can help you add vectorization to your program or to improve the efficiency of code that is already vectorized. | ||

Intel Advisor is bundled with [https://software.intel.com/en-us/parallel-studio-xe Intel Parallel Studio]. | Intel Advisor is bundled with [https://software.intel.com/en-us/parallel-studio-xe Intel Parallel Studio]. | ||

| + | |||

| + | Intel Advisor is separated into two workflows Vectorization Advisor and Threading Advisor. | ||

| + | |||

| + | = Vectorization Advisor = | ||

| + | |||

| + | The Vectorization Advisor is a tool for optimizing your code through vectorization. This tool will help identify loops that are high-impact and under-optimized, It also reports on what blocking loops from being vectorized and details on where it is safe to ignore the compiler's warnings and force vectorization. Finally it offers in-line code specific recommendations on how to fix these issues. | ||

| + | |||

| + | == Roofline Analysis == | ||

| + | |||

| + | Roofline charts provide a visual analysis of the performance ceiling imposed on your program given the hard-ware of your computer. This provides an entry point for optimization highlighting loops that are having the most impact on performance and loops with the most room for improvement. | ||

| + | |||

| + | The key use of roofline analysis is to profile an application and display if it is optimized for the hard-ware it's running on. | ||

| + | |||

| + | Roofline analysis allows us to tackle 2 key points: | ||

| + | |||

| + | * What are the bottlenecks limiting performance? | ||

| + | * what loops are inhibiting performance the most? | ||

| + | |||

| + | |||

| + | [[File:Roofline-Chart-Example.png]] | ||

| + | |||

| + | == Survey Report == | ||

| + | |||

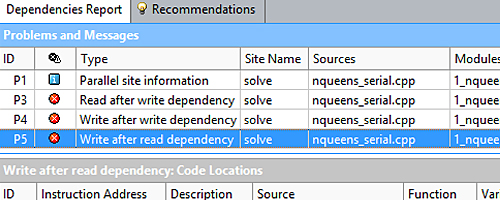

| + | Provides code-specific recommendations for fixing vectorization issues. This allows the programmer to solve these issues providing three key points of information: | ||

| + | |||

| + | * Where in the code would vectorization be the most impactful. | ||

| + | * How you can further improve vectorized loops. | ||

| + | * Which loops are not vectorized and information on how they can be. | ||

| + | |||

| + | |||

| + | [[File:Survey-Report-Example.png]] | ||

| + | |||

| + | |||

| + | === Trip Count and FLOPS Analysis === | ||

| + | |||

| + | Complementing the survey reports trip count and FLOPS analysis provides in-line messages that allow you to make better decisions on how to improve individual loops. These messages include: | ||

| + | |||

| + | * Number of time the loop iterates. | ||

| + | * Data about FLOPS (Floating point Operations Per Second). | ||

| + | |||

| + | |||

| + | [[File:In-Line-Analysis-Example.png]] | ||

| + | |||

| + | |||

| + | After Identifying what loops benefit the most from vectorization you can simple select them individually to run more detailed report on them. | ||

| + | |||

| + | |||

| + | == Data Dependencies Report == | ||

| + | |||

| + | Compilers may fail to vectorize loops due to potential data dependencies. This feature collects all the error messages from the compiler and creates a report for the programmer. The report allow the programmer to discern for themselves if these data dependencies actually exist and whether or not to force the compiler to ignore the error and vectorize the loop anyways. If the data dependencies really do exist the report provides information on the type of dependency and how to resolve the issue. | ||

| + | |||

| + | |||

| + | [[File:Data-Dependency-Example.png]] | ||

| + | |||

| + | = Threading Advisor = | ||

| + | |||

| + | The Threading Advisor tool is used to model, tune, and test the performance of various multi threading designs such as OpenMP, Threading Building Blocks (TBB), and Microsoft Task Parallel Library (TPL) without the hindering the development of the project. The tool accomplishes this by helping you with prototyping thread options, testing scalability of the project for larger systems, and optimizing faster. It will also help identify issues before implementing parallelization like eliminating data-sharing issues during design. The tool is primarily used for adding threading to the C, C++, C#, and Fotran languages. | ||

| + | |||

| + | == Annotations == | ||

| + | |||

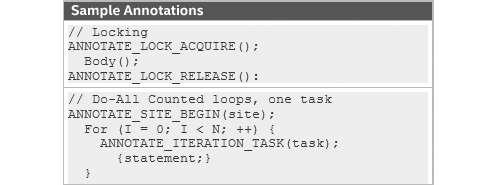

| + | Annotations can be inserted into your code to help design the potential parallelization for analysis. This way of designing multi threading prevents early error in the code's design to build up and cause slower performance then expected. This does not impact the design of your current code as the compiler ignores the annotations (they're only there to help model your design). This provides you with the ability to keep your code serial and prevents the bugs that can come from multiple threading while in your design phase. | ||

| + | |||

| + | |||

| + | [[File:Annotation-Example.jpg]] | ||

| + | |||

| + | == Scalability Analysis == | ||

| + | |||

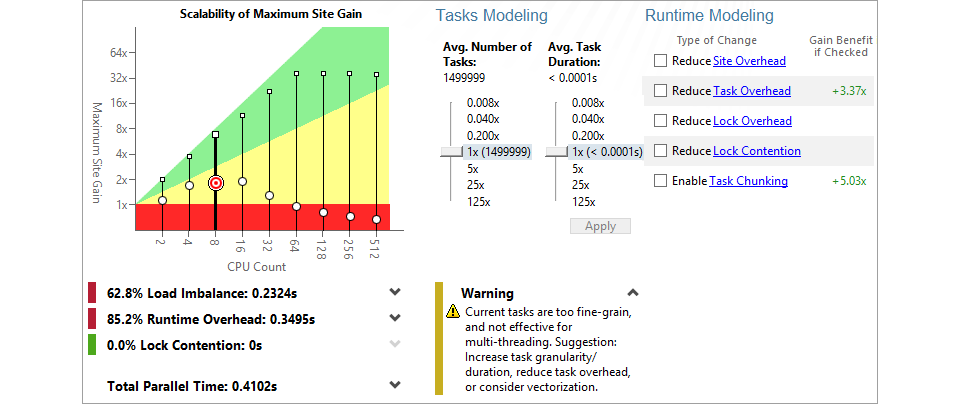

| + | Enables the evaluation of the performance and scalability of the various threading designs. The evaluation of the number of CPU's versus the Grain-size provides an easy to follow results on the impact of the common bottle necks found in all multi threading code when attempting to scale up a project without the need to test it on multiple high end machines yourself. | ||

| + | |||

| + | |||

| + | [[File:Scalabilty-Analysis-Example.png]] | ||

| + | |||

| + | == Dependencies Report == | ||

| + | |||

| + | The threading advisor's dependencies report works similar to the vectorization's. It will provide information on the data dependency errors a programmer encounters when parallelizing code including data-sharing, deadlocks, and races. The report also displays code snippets it finds is related to the dependency errors you can then follow these code snippets to their exact location and begin handling the errors on a case by case basis. | ||

| + | |||

| + | = Work Flow = | ||

| + | |||

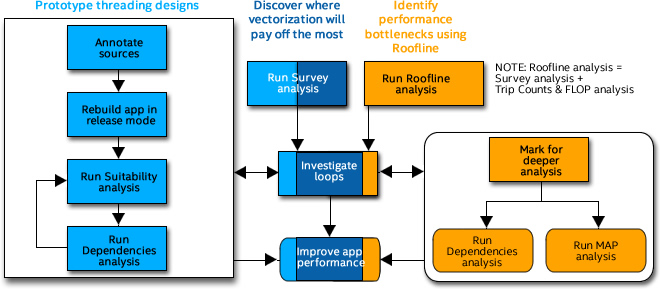

| + | With these two tool we can start to come up with a work flow for optimizing our code. | ||

| + | |||

| + | |||

| + | [[File:Work-Flow-Example.png]] | ||

= Vectorization = | = Vectorization = | ||

| Line 46: | Line 129: | ||

</pre> | </pre> | ||

| − | == | + | == SIMD Extensions == |

| − | [https://en.wikipedia.org/wiki/Streaming_SIMD_Extensions SSE] stands for Streaming SIMD Extensions which refers to the addition of a set of SIMD instructions as well as new XMM registers. | + | [https://en.wikipedia.org/wiki/Streaming_SIMD_Extensions SSE] stands for '''Streaming SIMD Extensions''' which refers to the addition of a set of SIMD instructions as well as new XMM registers. [https://en.wikipedia.org/wiki/SIMD SIMD] stands for '''Single Instruction Multiple Data''' and refers to instructions that can perform a single operation on multiple elements in parallel. |

List of SIMD extensions: | List of SIMD extensions: | ||

| Line 60: | Line 143: | ||

* AVX2 | * AVX2 | ||

| − | (For Unix/Linux) To display what | + | (For Unix/Linux) To display what instruction set and extensions set your processor supports, you can use the following commands: |

<pre> | <pre> | ||

| Line 89: | Line 172: | ||

__m128i prod = _mm_unpacklo_epi64(prod01, prod23); // (ab3,ab2,ab1,ab0) | __m128i prod = _mm_unpacklo_epi64(prod01, prod23); // (ab3,ab2,ab1,ab0) | ||

</source> | </source> | ||

| + | |||

| + | Code sample was taken from this StackOverflow thread: [https://stackoverflow.com/questions/17264399/fastest-way-to-multiply-two-vectors-of-32bit-integers-in-c-with-sse Fastest way to multiply two vectors of 32bit integers in C++, with SSE] | ||

Here is a link to an interactive guide to Intel Intrinsics: [https://software.intel.com/sites/landingpage/IntrinsicsGuide/#techs=SSE,SSE2,SSE3,SSSE3,SSE4_1,SSE4_2 Intel Intrinsics SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2] | Here is a link to an interactive guide to Intel Intrinsics: [https://software.intel.com/sites/landingpage/IntrinsicsGuide/#techs=SSE,SSE2,SSE3,SSSE3,SSE4_1,SSE4_2 Intel Intrinsics SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2] | ||

| Line 94: | Line 179: | ||

== Vectorization Examples == | == Vectorization Examples == | ||

| − | + | [[File:Vectorization-example-serial.png]] | |

| − | [[File: | ||

=== Serial Version === | === Serial Version === | ||

| Line 108: | Line 192: | ||

=== SIMD Version === | === SIMD Version === | ||

| + | |||

| + | [[File:Vectorization-example-simd.png]] | ||

<source lang="cpp"> | <source lang="cpp"> | ||

| Line 124: | Line 210: | ||

= Intel Advisor Tutorial Example = | = Intel Advisor Tutorial Example = | ||

| + | |||

| + | Here is a great tutorial on how to use Intel Advisor to vectorize your code. [https://software.intel.com/en-us/advisor-tutorial-vectorization-windows-cplusplus Intel® Advisor Tutorial: Add Efficient SIMD Parallelism to C++ Code Using the Vectorization Advisor] | ||

You can find the sample code in the directory of your Intel Parallel Studio installation. Just unzip the file and you can open the solution in Visual Studio or build on the command line. | You can find the sample code in the directory of your Intel Parallel Studio installation. Just unzip the file and you can open the solution in Visual Studio or build on the command line. | ||

| − | + | For default installations, the sample code would be located here: <code>C:\Program Files (x86)\IntelSWTools\Advisor 2019\samples\en\C++\vec_samples.zip</code> | |

| − | |||

| − | |||

== Loop Unrolling == | == Loop Unrolling == | ||

| Line 174: | Line 260: | ||

If you compile the vec_samples project with the macro, the <code>matvec</code> function declaration will include the <code>restrict</code> keyword. The <code>restrict</code> keyword will tell the compiler that pointers <code>a</code> and <code>b</code> do not overlap and that the compiler is free optimize the code blocks that uses the pointers. | If you compile the vec_samples project with the macro, the <code>matvec</code> function declaration will include the <code>restrict</code> keyword. The <code>restrict</code> keyword will tell the compiler that pointers <code>a</code> and <code>b</code> do not overlap and that the compiler is free optimize the code blocks that uses the pointers. | ||

| − | |||

| − | |||

| − | |||

==== multiply.c ==== | ==== multiply.c ==== | ||

| Line 208: | Line 291: | ||

The following image illustrates the loop-carried dependency when two pointers overlap. | The following image illustrates the loop-carried dependency when two pointers overlap. | ||

| − | [ | + | [[File:Pointer-alias.png]] |

| − | [[File: | + | |

| + | === Magnitude of a Vector === | ||

| + | |||

| + | To demonstrate a more familiar example of a loop-carried dependency that would block the auto-vectorization of a loop, I'm going to include a code snippet that calculates the magnitude of a vector. | ||

| + | |||

| + | To calculate the magnitude of a vector: <code>length = sqrt(x^2 + y^2 + z^)</code> | ||

| + | |||

| + | <source lang="cpp"> | ||

| + | for (int i = 0; i < n; i++) | ||

| + | sum += x[i] * x[i]; | ||

| + | |||

| + | length = sqrt(sum); | ||

| + | </source> | ||

| + | |||

| + | As you can see, there is a loop-carried dependency with the variable <code>sum</code>. The diagram below illustrates why the loop cannot be vectorized (nor can it be threaded). The dashed rectangle represents a single iteration in the loop, and the arrows represents dependencies between nodes. If an arrow crosses the iteration rectangle, then those iterations cannot be executed in parallel. | ||

| + | |||

| + | [[File:Magnitude-node-dependency-graph.png]] | ||

| + | |||

| + | To resolve the loop-carried dependency, use <code>simd</code> and the <code>reduction</code> clause to tell the compiler to auto-vectorize the loop and to reduce the array of elements to a single value. Each SIMD lane will compute its own sum and then combine the results into a single sum at the end. | ||

| + | |||

| + | <source lang="cpp"> | ||

| + | #pragma omp simd reduction(+:sum) | ||

| + | for (int i = 0; i < n; i++) | ||

| + | sum += x[i] * x[i]; | ||

| + | |||

| + | length = sqrt(sum); | ||

| + | </source> | ||

== Memory Alignment == | == Memory Alignment == | ||

| Line 215: | Line 324: | ||

Intel Advisor can detect if there are any memory alignment issues that may produce inefficient vectorization code. | Intel Advisor can detect if there are any memory alignment issues that may produce inefficient vectorization code. | ||

| − | A loop can be vectorized if there are no data dependencies across loop iterations | + | A loop can be vectorized if there are no data dependencies across loop iterations. |

| − | [ | + | === Peeled and Remainder Loops === |

| + | |||

| + | However, if the data is not aligned, the vectorizer may have to use a '''peeled''' loop to address the misalignment. So instead of vectorizing the entire loop, an extra loop needs to be inserted to perform operations on the front-end of the array that not aligned with memory. | ||

| + | |||

| + | [[File:Memory-alignment-peeled.png]] | ||

| + | |||

| + | A remainder loop is the result of having a number of elements in the array that is not evenly divisible by the vector length (the total number of elements of a certain data type that can be loaded into a vector register). | ||

| + | |||

| + | [[File:Memory-alignment-remainder.png]] | ||

| + | |||

| + | === Padding === | ||

| + | |||

| + | Even if the array elements are aligned with memory, say at 16 byte boundaries, you might still encounter a "remainder" loop that deals with back-end of the array that cannot be included in the vectorized code. The vectorizer will have to insert an extra loop at the end of the vectorized loop to perform operations on the back-end of the array. | ||

| + | |||

| + | To address this issue, add some padding. | ||

| + | |||

| + | For example, if you have a <code>4 x 19</code> array of floats, and your system has access to 128-bit vector registers, then you should add 1 column to make the array <code>4 x 20</code> so that the number of columns is evenly divisible by the number of floats that can be loaded into a 128-bit vector register, which is 4 floats. | ||

| + | |||

| + | [[File:Memory-alignment-padding.png]] | ||

=== Aligned vs Unaligned Instructions === | === Aligned vs Unaligned Instructions === | ||

| Line 255: | Line 382: | ||

|} | |} | ||

| − | === | + | The functions are taken from Intel's interactive guide to Intel Intrinsics: [https://software.intel.com/sites/landingpage/IntrinsicsGuide/#techs=SSE,SSE2,SSE3,SSSE3,SSE4_1,SSE4_2 Intel Intrinsics SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2] |

| + | |||

| + | === Aligning Data === | ||

To align data elements to an <code>x</code> amount of bytes in memory, use the <code>align</code> macro. | To align data elements to an <code>x</code> amount of bytes in memory, use the <code>align</code> macro. | ||

| − | Code snippet that is used to align the data elements in the | + | Code snippet that is used to align the data elements in the <code>vec_samples</code> project. |

<source lang="cpp"> | <source lang="cpp"> | ||

// Tell the compiler to align the a, b, and x arrays | // Tell the compiler to align the a, b, and x arrays | ||

| Line 276: | Line 405: | ||

#endif // _WIN32 | #endif // _WIN32 | ||

</source> | </source> | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

Latest revision as of 09:57, 28 November 2018


Intel Parallel Studio Advisor

Group Members

Introduction

[Intel Advisor](https://software.intel.com/en-us/advisor) is a software tool that helps you add multithreading to your application, or to parts of it, without disrupting your normal software development. Besides helping you add multithreading, it can help you determine whether the performance gains are worth the costs that come with multithreading: reduced maintainability, harder debugging, and the effort of refactoring or reorganizing your code to resolve data dependencies.

It is also a tool that can help you add vectorization to your program or to improve the efficiency of code that is already vectorized.

Intel Advisor is bundled with [Intel Parallel Studio](https://software.intel.com/en-us/parallel-studio-xe).

Intel Advisor is separated into two workflows: Vectorization Advisor and Threading Advisor.

Vectorization Advisor

The Vectorization Advisor is a tool for optimizing your code through vectorization. It helps identify loops that are high-impact and under-optimized. It also reports what is blocking loops from being vectorized and where it is safe to ignore the compiler's warnings and force vectorization. Finally, it offers in-line, code-specific recommendations on how to fix these issues.

Roofline Analysis

Roofline charts provide a visual analysis of the performance ceiling that your computer's hardware imposes on your program. This gives you an entry point for optimization by highlighting the loops that have the most impact on performance and the loops with the most room for improvement.

The key use of roofline analysis is to profile an application and show whether it is optimized for the hardware it is running on.

Roofline analysis allows us to tackle two key questions:

- What are the bottlenecks limiting performance?

- Which loops are inhibiting performance the most?

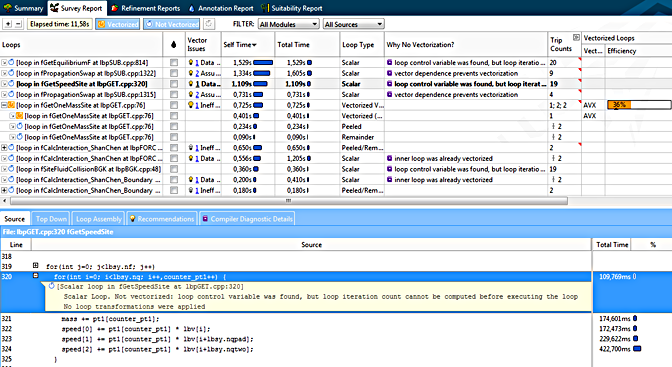
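The ceiling that a roofline chart draws can be written as a one-line formula: attainable throughput is the smaller of the peak compute rate and the memory bandwidth multiplied by the loop's arithmetic intensity (floating-point operations per byte moved). The sketch below is only an illustration of that model; the function names and every number passed to them are hypothetical and are not values reported by Intel Advisor.

```cpp
#include <algorithm>
#include <cassert>

// Roofline model: performance is capped either by peak compute or by
// memory bandwidth times arithmetic intensity (FLOPs per byte moved).
// All figures passed in are hypothetical, not measurements.
double attainable_gflops(double peak_gflops, double bandwidth_gb_per_s,
                         double flops_per_byte) {
    assert(peak_gflops > 0 && bandwidth_gb_per_s > 0 && flops_per_byte > 0);
    return std::min(peak_gflops, bandwidth_gb_per_s * flops_per_byte);
}

// A loop is memory-bound when the bandwidth roof is the lower of the two.
bool is_memory_bound(double peak_gflops, double bandwidth_gb_per_s,
                     double flops_per_byte) {
    return bandwidth_gb_per_s * flops_per_byte < peak_gflops;
}
```

For a made-up machine with a 100 GFLOPS peak and 20 GB/s of bandwidth, a loop doing 2 FLOPs per byte sits under the bandwidth roof at 40 GFLOPS, while a loop doing 10 FLOPs per byte is limited by the compute roof.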

Survey Report

The Survey Report provides code-specific recommendations for fixing vectorization issues. It helps the programmer solve these issues by providing three key pieces of information:

- Where in the code vectorization would have the most impact.

- How you can further improve loops that are already vectorized.

- Which loops are not vectorized, and how they could be.

Trip Count and FLOPS Analysis

Complementing the Survey Report, the Trip Count and FLOPS analysis provides in-line messages that help you make better decisions on how to improve individual loops. These messages include:

- The number of times the loop iterates.

- Data about FLOPS (floating-point operations per second).

After identifying which loops benefit the most from vectorization, you can simply select them individually to run a more detailed report on them.

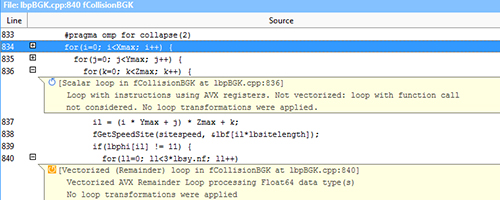

Data Dependencies Report

Compilers may fail to vectorize loops due to potential data dependencies. This feature collects the relevant messages from the compiler and presents them to the programmer in a report. The report allows programmers to determine for themselves whether these data dependencies actually exist and whether to force the compiler to ignore the error and vectorize the loop anyway. If the data dependencies really do exist, the report provides information on the type of dependency and how to resolve the issue.

Threading Advisor

The Threading Advisor is used to model, tune, and test the performance of various multithreading designs, such as OpenMP, Threading Building Blocks (TBB), and Microsoft Task Parallel Library (TPL), without hindering the development of the project. It does this by helping you prototype threading options, test how the project scales on larger systems, and optimize faster. It also helps you identify issues, such as data-sharing problems, during design, before you implement parallelization. The tool is primarily used for adding threading to C, C++, C#, and Fortran code.

Annotations

Annotations can be inserted into your code to model potential parallelization for analysis. Designing multithreading this way keeps early design errors from building up and causing slower performance than expected. The annotations do not affect the design of your current code, because the compiler ignores them (they are only there to help model your design). This lets you keep your code serial and avoid the bugs that can come from multithreading while you are still in the design phase.
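As a rough illustration of what annotated code can look like, the sketch below marks a loop as a candidate parallel site using the `ANNOTATE_SITE_BEGIN`, `ANNOTATE_ITERATION_TASK`, and `ANNOTATE_SITE_END` macros from Intel Advisor's `advisor-annotate.h` header. The function `sum_of_squares` and the names `sum_site` and `sum_task` are hypothetical. So that the sketch also builds on a machine without Advisor installed, it falls back to empty macros when the header is not found; that fallback is an assumption made for this example, not part of the tool.

```cpp
#include <cassert>
#include <cstddef>

// Use the real annotation header when it is on the include path.
#if defined(__has_include)
#  if __has_include("advisor-annotate.h")
#    include "advisor-annotate.h"
#  endif
#endif

// Fallback for this sketch only: empty macros, so the code stays serial.
#ifndef ANNOTATE_SITE_BEGIN
#  define ANNOTATE_SITE_BEGIN(site)
#  define ANNOTATE_SITE_END()
#  define ANNOTATE_ITERATION_TASK(task)
#endif

// Hypothetical function: the loop is marked as a parallel site and each
// iteration as a task, but the program still runs serially.
long long sum_of_squares(const int* data, std::size_t n) {
    assert(data != nullptr || n == 0);
    long long total = 0;
    ANNOTATE_SITE_BEGIN(sum_site);
    for (std::size_t i = 0; i < n; ++i) {
        ANNOTATE_ITERATION_TASK(sum_task);
        total += static_cast<long long>(data[i]) * data[i];
    }
    ANNOTATE_SITE_END();
    return total;
}
```

The result is the same with or without the annotations. Note that `total` is shared across iterations, which is the kind of data-sharing issue a dependencies analysis would be expected to point out before any real threading is added.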

Scalability Analysis

Scalability Analysis lets you evaluate the performance and scalability of the various threading designs. Comparing the number of CPUs against the grain size gives easy-to-follow results on the impact of the bottlenecks commonly found in multithreaded code when scaling up a project, without the need to test it on multiple high-end machines yourself.

Dependencies Report

The Threading Advisor's Dependencies Report works similarly to the Vectorization Advisor's. It provides information on the data dependency errors a programmer encounters when parallelizing code, including data-sharing issues, deadlocks, and races. The report also displays the code snippets it finds to be related to the dependency errors. You can follow these snippets to their exact location and begin handling the errors on a case-by-case basis.

Work Flow

With these two tools, we can start to come up with a workflow for optimizing our code.

Vectorization

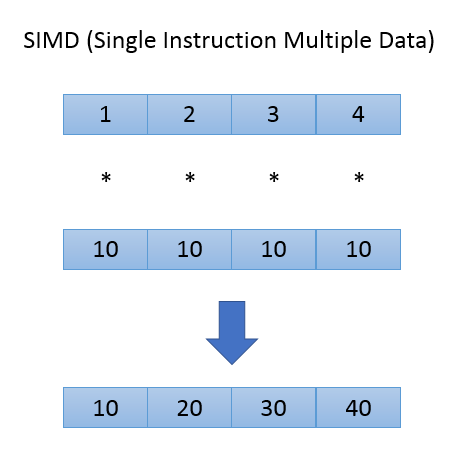

Vectorization is the process of utilizing vector registers to perform a single instruction on multiple values all at the same time.

A CPU register is a very, very tiny block of memory that sits inside the CPU itself. On a 64-bit CPU, a single general-purpose register can store 8 bytes of data.

A vector register is an expanded version of a CPU register. A 128-bit vector register can store 16 bytes of data. A 256-bit vector register can store 32 bytes of data.

The vector register can then be divided into lanes, where each lane stores a single value of a certain data type.

A 128-bit vector register can be divided in the following ways:

- 16 lanes: 16x 8-bit characters

- 8 lanes: 8x 16-bit integers

- 4 lanes: 4x 32-bit integers / floats

- 2 lanes: 2x 64-bit integers

- 2 lanes: 2x 64-bit doubles

```
a | b | c | d | e | f | g | h
1 | 2 | 3 | 4 | 5 | 6 | 7 | 8
10 | 20 | 30 | 40
1.5 | 2.5 | 3.5 | 4.5
1000 | 2000
3.14159 | 3.14159
```
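The lane counts listed above follow from dividing the register width by the element width. A minimal sketch of that arithmetic, using a hypothetical helper named `lanes` and assuming the usual sizes for `float` (4 bytes) and `double` (8 bytes):

```cpp
#include <cstddef>
#include <cstdint>

// Number of lanes a vector register of `register_bits` offers for type T.
template <typename T>
constexpr std::size_t lanes(std::size_t register_bits) {
    return register_bits / (8 * sizeof(T));
}

// The 128-bit cases from the list above.
static_assert(lanes<std::int8_t>(128) == 16, "16 x 8-bit");
static_assert(lanes<std::int16_t>(128) == 8, "8 x 16-bit");
static_assert(lanes<std::int32_t>(128) == 4, "4 x 32-bit");
static_assert(lanes<std::int64_t>(128) == 2, "2 x 64-bit");
```

The same helper gives 8 lanes for `float` in a 256-bit register, which is why wider registers can process more elements per instruction.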

SIMD Extensions

[SSE](https://en.wikipedia.org/wiki/Streaming_SIMD_Extensions) stands for Streaming SIMD Extensions, which refers to the addition of a set of SIMD instructions as well as the new XMM registers. [SIMD](https://en.wikipedia.org/wiki/SIMD) stands for Single Instruction Multiple Data and refers to instructions that can perform a single operation on multiple elements in parallel.

List of SIMD extensions:

- SSE

- SSE2

- SSE3

- SSSE3

- SSE4.1

- SSE4.2

- AVX

- AVX2

(For Unix/Linux) To display which instruction sets and extensions your processor supports, you can use the following commands:

```
$ uname -a
$ lscpu
$ cat /proc/cpuinfo
```

SSE Examples

With SSE4.1 or later, to multiply two 128-bit vectors of signed 32-bit integers, you can use the following Intel intrinsic function:

```cpp
__m128i _mm_mullo_epi32(__m128i a, __m128i b)
```

Prior to SSE4.1, the same thing can be done with the following sequence of function calls.

```cpp
// Vec4i operator * (Vec4i const & a, Vec4i const & b) {
// #ifdef
__m128i a13 = _mm_shuffle_epi32(a, 0xF5);            // (-,a3,-,a1)
__m128i b13 = _mm_shuffle_epi32(b, 0xF5);            // (-,b3,-,b1)
__m128i prod02 = _mm_mul_epu32(a, b);                // (-,a2*b2,-,a0*b0)
__m128i prod13 = _mm_mul_epu32(a13, b13);            // (-,a3*b3,-,a1*b1)
__m128i prod01 = _mm_unpacklo_epi32(prod02, prod13); // (-,-,a1*b1,a0*b0)
__m128i prod23 = _mm_unpackhi_epi32(prod02, prod13); // (-,-,a3*b3,a2*b2)
__m128i prod = _mm_unpacklo_epi64(prod01, prod23);   // (ab3,ab2,ab1,ab0)
```

Code sample was taken from this StackOverflow thread: [Fastest way to multiply two vectors of 32bit integers in C++, with SSE](https://stackoverflow.com/questions/17264399/fastest-way-to-multiply-two-vectors-of-32bit-integers-in-c-with-sse)

Here is a link to an interactive guide to Intel Intrinsics: [Intel Intrinsics SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2](https://software.intel.com/sites/landingpage/IntrinsicsGuide/#techs=SSE,SSE2,SSE3,SSSE3,SSE4_1,SSE4_2)
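To make the pre-SSE4.1 sequence easier to try out, the sketch below wraps it in a function and applies it to plain arrays. The names `mullo_epi32_sse2` and `multiply_arrays` are hypothetical, and the code assumes an x86 target where SSE2 is available (it is part of the x86-64 baseline). Unaligned loads and stores are used so the caller does not need to align the arrays.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <emmintrin.h>  // SSE2 intrinsics

// Low 32 bits of each of the four 32-bit products, using only SSE2.
inline __m128i mullo_epi32_sse2(__m128i a, __m128i b) {
    __m128i a13 = _mm_shuffle_epi32(a, 0xF5);             // move lanes 1 and 3 into even slots
    __m128i b13 = _mm_shuffle_epi32(b, 0xF5);
    __m128i prod02 = _mm_mul_epu32(a, b);                 // 64-bit products of lanes 0 and 2
    __m128i prod13 = _mm_mul_epu32(a13, b13);             // 64-bit products of lanes 1 and 3
    __m128i prod01 = _mm_unpacklo_epi32(prod02, prod13);  // low halves of products 0 and 1
    __m128i prod23 = _mm_unpackhi_epi32(prod02, prod13);  // low halves of products 2 and 3
    return _mm_unpacklo_epi64(prod01, prod23);            // products 0, 1, 2, 3 in lane order
}

// Element-wise multiply of two int arrays; n must be a multiple of 4.
void multiply_arrays(const std::int32_t* a, const std::int32_t* b,
                     std::int32_t* out, std::size_t n) {
    assert(n % 4 == 0);
    for (std::size_t i = 0; i < n; i += 4) {
        __m128i va = _mm_loadu_si128(reinterpret_cast<const __m128i*>(a + i));
        __m128i vb = _mm_loadu_si128(reinterpret_cast<const __m128i*>(b + i));
        _mm_storeu_si128(reinterpret_cast<__m128i*>(out + i),
                         mullo_epi32_sse2(va, vb));
    }
}
```

Although `_mm_mul_epu32` is an unsigned multiply, the low 32 bits of the product are the same for signed and unsigned operands, so negative inputs are handled correctly as long as the result fits in 32 bits.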

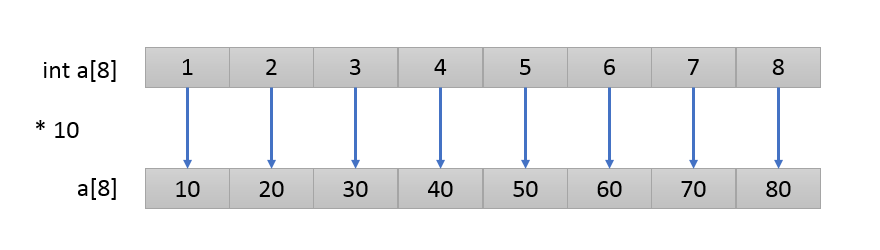

Vectorization Examples

Serial Version

```cpp
int a[8] = { 1, 2, 3, 4, 5, 6, 7, 8 };
for (int i = 0; i < 8; i++) {
    a[i] *= 10;
}
```

SIMD Version

```cpp
#include <smmintrin.h> // SSE4.1, required for _mm_mullo_epi32

int a[8] = { 1, 2, 3, 4, 5, 6, 7, 8 };
int ten[4] = { 10, 10, 10, 10 };
__m128i va, v10;
v10 = _mm_loadu_si128((__m128i*)ten);
for (int i = 0; i < 8; i += 4) {
    va = _mm_loadu_si128((__m128i*)&a[i]);  // 1 2 3 4
    va = _mm_mullo_epi32(va, v10);          // 10 20 30 40
    _mm_storeu_si128((__m128i*)&a[i], va);  // [10, 20, 30, 40]
}
```

Intel Advisor Tutorial Example

Here is a great tutorial on how to use Intel Advisor to vectorize your code: [Intel® Advisor Tutorial: Add Efficient SIMD Parallelism to C++ Code Using the Vectorization Advisor](https://software.intel.com/en-us/advisor-tutorial-vectorization-windows-cplusplus)

You can find the sample code in the directory of your Intel Parallel Studio installation. Just unzip the file and you can open the solution in Visual Studio or build on the command line.

For default installations, the sample code would be located here: C:\Program Files (x86)\IntelSWTools\Advisor 2019\samples\en\C++\vec_samples.zip

Loop Unrolling

The compiler can "unroll" a loop so that the body of the loop is duplicated a number of times, and as a result, reduce the number of conditional checks and counter increments per loop.

Warning: Do not write your code like this. The compiler will do it for you, unless you tell it not to.

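```cpp
// Rolled loop; #pragma nounroll tells the compiler not to unroll it.
#pragma nounroll
for (int i = 0; i < 50; i++) {
    foo(i);
}
```

```cpp
// The same loop unrolled by a factor of 5.
for (int i = 0; i < 50; i += 5) {
    foo(i);
    foo(i+1);
    foo(i+2);
    foo(i+3);
    foo(i+4);
}

// foo(0)
// foo(1)
// foo(2)
// foo(3)
// foo(4)
// ...
// foo(45)
// foo(46)
// foo(47)
// foo(48)
// foo(49)
```

Data Dependencies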

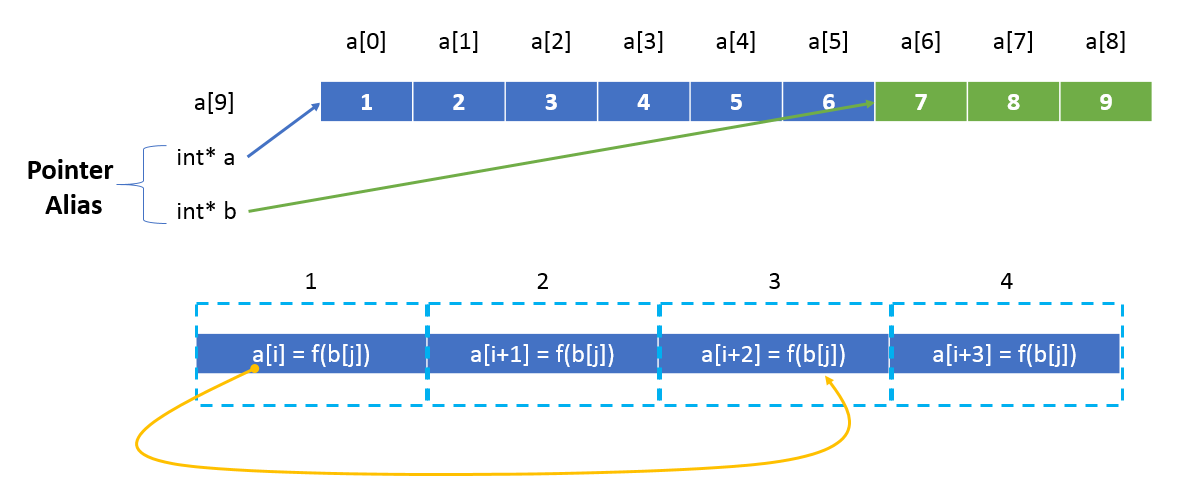

Pointer Alias

A pointer alias means that two pointers point to the same location in memory or the two pointers overlap in memory.

If you compile the vec_samples project with the NOALIAS macro defined, the matvec function declaration will include the restrict keyword. The restrict keyword tells the compiler that pointers a and b do not overlap and that the compiler is free to optimize the code blocks that use those pointers.

multiply.c

```cpp
#ifdef NOALIAS
void matvec(int size1, int size2, FTYPE a[][size2], FTYPE b[restrict], FTYPE x[], FTYPE wr[])
#else
void matvec(int size1, int size2, FTYPE a[][size2], FTYPE b[], FTYPE x[], FTYPE wr[])
#endif
```

To learn more about the restrict keyword and how the compiler can optimize code if it knows that two pointers do not overlap, you can visit this StackOverflow thread: What does the restrict keyword mean in C++?
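Standard C++ has no `restrict` keyword, but GCC, Clang, MSVC, and the Intel compiler accept `__restrict` as an extension with the same intent. The sketch below is a hypothetical function, not code from vec_samples, that promises the compiler its two arrays never overlap.

```cpp
#include <cassert>
#include <cstddef>

// `__restrict` is a compiler extension, not standard C++. It is a promise
// made by the caller: dst and src must not overlap. Breaking the promise
// is undefined behaviour.
void scale_into(float* __restrict dst, const float* __restrict src,
                float factor, std::size_t n) {
    assert(dst != src);  // cheap sanity check; it cannot catch partial overlap
    for (std::size_t i = 0; i < n; ++i) {
        dst[i] = src[i] * factor;
    }
}
```

With the promise in place, the compiler does not have to assume that a store to `dst[i]` could change a later `src[j]`, so it is free to load several source elements at once.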

Loop-Carried Dependency

Pointers that overlap one another may introduce a loop-carried dependency when those pointers point to an array of data. Because the vectorizer cannot rule this out, it assumes the pointers may overlap and, as a result, does not auto-vectorize the code.

In the code example below, a is a function of b. If pointers a and b overlap, then modifying a may also modify b, which creates the possibility of a loop-carried dependency. The compiler therefore cannot safely vectorize the loop.

```cpp
void func(int* a, int* b) {
    ...
    for (i = 0; i < size1; i++) {
        for (j = 0; j < size2; j++) {
            a[i] = foo(b[j]);
        }
    }
}
```

The following image illustrates the loop-carried dependency when two pointers overlap.

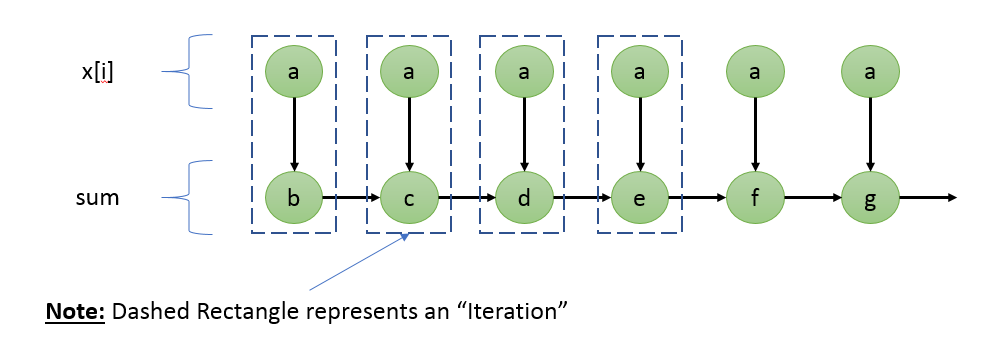
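The effect of overlapping pointers can be seen with a much smaller loop than the one above. In this sketch, `add_one` is a hypothetical function in which each `dst[i]` appears to depend only on `src[i]`. That is true only while the two arrays are separate.

```cpp
#include <cassert>
#include <cstddef>

// Looks independent per iteration: dst[i] = src[i] + 1.
// If dst == src + 1, iteration i reads what iteration i-1 just wrote.
void add_one(int* dst, const int* src, std::size_t n) {
    assert(dst != nullptr && src != nullptr);
    for (std::size_t i = 0; i < n; ++i) {
        dst[i] = src[i] + 1;
    }
}
```

Calling `add_one(buf + 1, buf, 4)` on a zero-filled `int buf[5]` leaves `0 1 2 3 4` in the buffer, because every iteration consumes the previous iteration's result. A version that naively loaded four source elements before storing any of them would produce `0 1 1 1 1` instead, which is why the compiler has to be conservative when it cannot prove the pointers are distinct.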

Magnitude of a Vector

To demonstrate a more familiar example of a loop-carried dependency that would block the auto-vectorization of a loop, I'm going to include a code snippet that calculates the magnitude of a vector.

To calculate the magnitude of a vector: length = sqrt(x^2 + y^2 + z^2)

```cpp
for (int i = 0; i < n; i++)
    sum += x[i] * x[i];

length = sqrt(sum);
```

As you can see, there is a loop-carried dependency on the variable sum: every iteration reads the value written by the previous one. The diagram below illustrates why the loop, as written, cannot be vectorized (nor can it be threaded). The dashed rectangle represents a single iteration of the loop, and the arrows represent dependencies between nodes. If an arrow crosses the iteration rectangle, then those iterations cannot be executed in parallel.

To resolve the loop-carried dependency, use the OpenMP simd directive with the reduction clause to tell the compiler to vectorize the loop and to reduce the array of elements to a single value. Each SIMD lane computes its own partial sum, and the partial sums are combined into a single sum at the end.
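What the reduction does can be sketched by hand in plain C++: keep one accumulator per lane, let each accumulator see every fourth element, and add the accumulators together once the loop is done. The function name `magnitude_by_lanes` and the lane count of 4 are assumptions made for illustration; a real compiler picks the lane count from the target's vector width.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Hand-written version of a 4-lane sum reduction.
double magnitude_by_lanes(const double* x, std::size_t n) {
    assert(x != nullptr || n == 0);
    constexpr std::size_t kLanes = 4;  // hypothetical lane count
    double partial[kLanes] = {0.0, 0.0, 0.0, 0.0};

    // Main body: each lane accumulates its own partial sum, so there is
    // no dependency between lanes inside an iteration.
    std::size_t i = 0;
    for (; i + kLanes <= n; i += kLanes) {
        for (std::size_t lane = 0; lane < kLanes; ++lane) {
            partial[lane] += x[i + lane] * x[i + lane];
        }
    }

    // Combine the per-lane sums into one value.
    double sum = 0.0;
    for (std::size_t lane = 0; lane < kLanes; ++lane) {
        sum += partial[lane];
    }

    // Leftover elements when n is not a multiple of the lane count.
    for (; i < n; ++i) {
        sum += x[i] * x[i];
    }
    return std::sqrt(sum);
}
```

Because the additions happen in a different order than in the serial loop, floating-point results can differ in the last bits. The reduction clause is the programmer's way of telling the compiler that this reordering is acceptable.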

#pragma omp simd reduction(+:sum)

for (int i = 0; i < n; i++)

sum += x[i] * x[i];

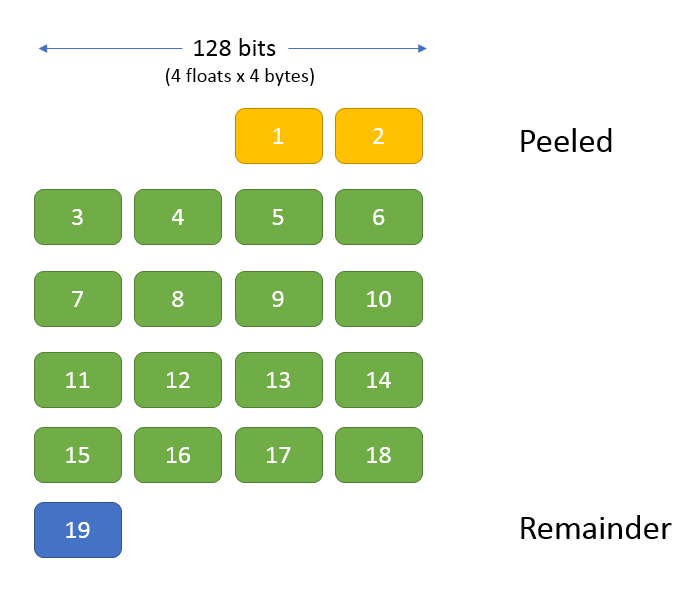

length = sqrt(sum);Memory Alignment

Intel Advisor can detect if there are any memory alignment issues that may produce inefficient vectorization code.

A loop can be vectorized if there are no data dependencies across loop iterations.

Peeled and Remainder Loops

However, if the data is not aligned, the vectorizer may have to use a peeled loop to address the misalignment. Instead of vectorizing the entire loop, it inserts an extra loop that performs the operations on the front end of the array, up to the first element that is aligned in memory.

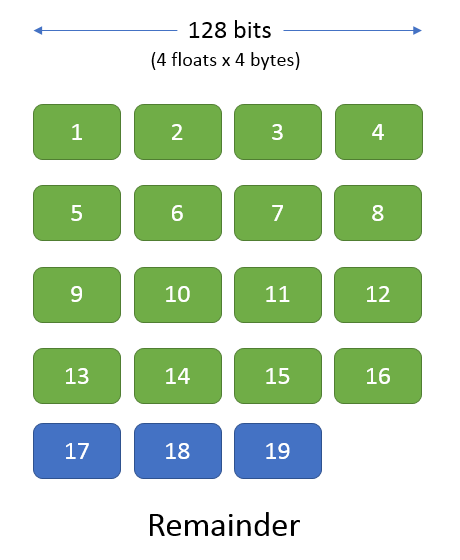

A remainder loop is the result of having a number of elements in the array that is not evenly divisible by the vector length (the total number of elements of a certain data type that can be loaded into a vector register).

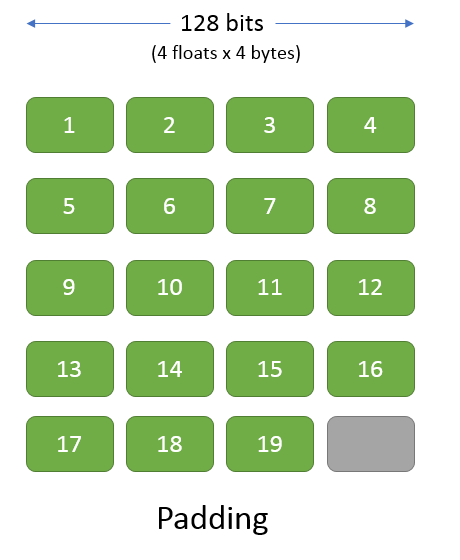
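The way a loop is divided into a peeled part, a vectorized body, and a remainder can be worked out with a little arithmetic. The sketch below is a simplified model, not the vectorizer's actual algorithm. The struct `LoopSplit` and the function `split_loop` are hypothetical names, and the array's start address is assumed to be a multiple of the element size.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

// How many iterations land in each part of the loop.
struct LoopSplit {
    std::size_t peel;       // scalar iterations before the first aligned element
    std::size_t body;       // iterations covered by full vector operations
    std::size_t remainder;  // scalar iterations left over at the end
};

LoopSplit split_loop(std::uintptr_t address, std::size_t count,
                     std::size_t element_bytes, std::size_t vector_bytes) {
    assert(element_bytes > 0 && vector_bytes % element_bytes == 0);
    assert(address % element_bytes == 0);
    const std::size_t lanes = vector_bytes / element_bytes;
    const std::size_t misalignment = address % vector_bytes;
    const std::size_t peel_wanted =
        misalignment == 0 ? 0 : (vector_bytes - misalignment) / element_bytes;

    LoopSplit s;
    s.peel = std::min(count, peel_wanted);
    const std::size_t rest = count - s.peel;
    s.body = rest / lanes * lanes;   // largest multiple of the lane count
    s.remainder = rest - s.body;
    return s;
}
```

For 19 floats starting 8 bytes past a 16-byte boundary, this gives 2 peeled iterations, 16 vectorized iterations, and 1 remainder iteration. If the same array starts on a 16-byte boundary, the peel disappears and the split becomes 0, 16, and 3.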

Padding

Even if the array elements are aligned in memory, say on 16-byte boundaries, you might still encounter a "remainder" loop that deals with the back end of the array that cannot be included in the vectorized code. The vectorizer has to insert an extra loop after the vectorized loop to perform the operations on those trailing elements.

To address this issue, add some padding.

For example, if you have a 4 x 19 array of floats, and your system has access to 128-bit vector registers, then you should add 1 column to make the array 4 x 20 so that the number of columns is evenly divisible by the number of floats that can be loaded into a 128-bit vector register, which is 4 floats.
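The padded width in this example is the column count rounded up to the next multiple of the lane count. A minimal sketch of that calculation, with hypothetical names (`padded_count`, `kRows`, `kCols`, `kLanes`, `matrix`) and a lane count of 4 floats per 128-bit register:

```cpp
#include <cstddef>

// Round `count` up to the next multiple of `lanes`.
constexpr std::size_t padded_count(std::size_t count, std::size_t lanes) {
    return (count + lanes - 1) / lanes * lanes;
}

constexpr std::size_t kRows = 4;
constexpr std::size_t kCols = 19;
constexpr std::size_t kLanes = 4;  // floats per 128-bit register
constexpr std::size_t kPaddedCols = padded_count(kCols, kLanes);
static_assert(kPaddedCols == 20, "19 columns pad up to 20");

// Each row carries one unused element so that a row is a whole number
// of vector registers wide.
float matrix[kRows][kPaddedCols] = {};
```

The cost is a small amount of wasted memory (here 4 extra floats in total); the loop over each row can then run entirely in full-width vector operations, provided the code only relies on the first 19 values of each row.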

Aligned vs Unaligned Instructions

There are two versions of SIMD instructions for loading into and storing from vector registers: aligned and unaligned.

The following table contains a list of Intel Intrinsics functions for both aligned and unaligned load and store instructions.

| Aligned | Unaligned | Description |

|---|---|---|

| __m128d _mm_load_pd (double const* mem_addr) | __m128d _mm_loadu_pd (double const* mem_addr) | Load 128-bits (composed of 2 packed double-precision (64-bit) floating-point elements) from memory into dst. |

| __m128 _mm_load_ps (float const* mem_addr) | __m128 _mm_loadu_ps (float const* mem_addr) | Load 128-bits (composed of 4 packed single-precision (32-bit) floating-point elements) from memory into dst. |

| __m128i _mm_load_si128 (__m128i const* mem_addr) | __m128i _mm_loadu_si128 (__m128i const* mem_addr) | Load 128-bits of integer data from memory into dst. |

| void _mm_store_pd (double* mem_addr, __m128d a) | void _mm_storeu_pd (double* mem_addr, __m128d a) | Store 128-bits (composed of 2 packed double-precision (64-bit) floating-point elements) from a into memory. |

| void _mm_store_ps (float* mem_addr, __m128 a) | void _mm_storeu_ps (float* mem_addr, __m128 a) | Store 128-bits (composed of 4 packed single-precision (32-bit) floating-point elements) from a into memory. |

| void _mm_store_si128 (__m128i* mem_addr, __m128i a) | void _mm_storeu_si128 (__m128i* mem_addr, __m128i a) | Store 128-bits of integer data from a into memory. |

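The functions are taken from Intel's interactive guide to Intel Intrinsics: [Intel Intrinsics SSE, SSE2, SSE3, SSSE3, SSE4.1, SSE4.2](https://software.intel.com/sites/landingpage/IntrinsicsGuide/#techs=SSE,SSE2,SSE3,SSSE3,SSE4_1,SSE4_2)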
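A short sketch can show the two kinds of load side by side. The function `add_neighbours` below is hypothetical and assumes an x86 target with SSE. It loads four floats from an address that must be 16-byte aligned, loads four more from the address one float later (which cannot be 16-byte aligned), and adds them.

```cpp
#include <cassert>
#include <cstdint>
#include <xmmintrin.h>  // SSE intrinsics

// out[i] = in[i] + in[i + 1] for i = 0..3; reads in[0..4].
void add_neighbours(const float* aligned_in, float* out) {
    // _mm_load_ps requires a 16-byte aligned address.
    assert(reinterpret_cast<std::uintptr_t>(aligned_in) % 16 == 0);
    __m128 lo = _mm_load_ps(aligned_in);       // aligned load
    __m128 hi = _mm_loadu_ps(aligned_in + 1);  // unaligned load, any address
    _mm_storeu_ps(out, _mm_add_ps(lo, hi));    // unaligned store
}
```

Passing an address that is not 16-byte aligned to `_mm_load_ps` can fault at run time, which is why the unaligned variants exist for data whose alignment is not known.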

Aligning Data

To align data elements on an x-byte boundary in memory, use the compiler's align attribute.

The following snippet is used to align the data elements in the vec_samples project.

```cpp
// Tell the compiler to align the a, b, x, and wr arrays on
// ALIGN_BOUNDARY-byte boundaries. This allows the vectorizer to use
// aligned instructions and produce faster code.
#ifdef _WIN32
_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE a[ROW][COLWIDTH];
_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE b[ROW];
_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE x[COLWIDTH];
_declspec(align(ALIGN_BOUNDARY, OFFSET)) FTYPE wr[COLWIDTH];
#else
FTYPE a[ROW][COLWIDTH] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));
FTYPE b[ROW] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));
FTYPE x[COLWIDTH] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));
FTYPE wr[COLWIDTH] __attribute__((align(ALIGN_BOUNDARY, OFFSET)));
#endif // _WIN32
```
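The snippet above uses compiler-specific attributes. In standard C++11 and later, the `alignas` specifier expresses the same request without an `#ifdef`. The sketch below is an alternative illustration, not the vec_samples code; `kAlignBoundary`, `samples`, and `is_aligned` are hypothetical names, and the OFFSET argument of the original has no counterpart here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kAlignBoundary = 16;  // hypothetical, mirrors ALIGN_BOUNDARY

// True when p sits on a multiple of `boundary` bytes.
bool is_aligned(const void* p, std::size_t boundary) {
    assert(boundary != 0);
    return reinterpret_cast<std::uintptr_t>(p) % boundary == 0;
}

// The array starts on a 16-byte boundary on every conforming compiler.
alignas(kAlignBoundary) float samples[8];
```

Only the start of the array is guaranteed to be aligned: with 4-byte floats, `&samples[4]` also falls on a 16-byte boundary, while `&samples[1]` does not.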