Difference between revisions of "Solo Act"

(→Assignment 1) |

(→Solo Act) |

||

| (49 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

= Solo Act = | = Solo Act = | ||

| − | + | Student Number: 069 920 148 | |

| − | |||

| − | |||

== Progress == | == Progress == | ||

=== Assignment 1 === | === Assignment 1 === | ||

| + | INTRODUCTION: | ||

| + | |||

| + | - Project selection (bsp trees, dungeon generator), profiling | ||

| + | |||

| + | - Parallelize (decoupling, kernel) | ||

| + | |||

| + | - Optimize (get root, comparison) | ||

| + | |||

| + | |||

Profile | Profile | ||

| Line 18: | Line 25: | ||

The project was written for windows, so I decided to initially profile with the built in Visual Studio profiler. | The project was written for windows, so I decided to initially profile with the built in Visual Studio profiler. | ||

| + | [[File:NjsimasProfileresults.png|500px]] | ||

The above image shows the function inclusive time percentage. The results are 22% and 15% respectively. The only entries higher are Main and library components used for printing. This demonstrates that the majority of source code processing is occurring in these two functions. Both of them would thus are hotspots and may benefit from parallelization. | The above image shows the function inclusive time percentage. The results are 22% and 15% respectively. The only entries higher are Main and library components used for printing. This demonstrates that the majority of source code processing is occurring in these two functions. Both of them would thus are hotspots and may benefit from parallelization. | ||

| Line 24: | Line 32: | ||

Parallelize | Parallelize | ||

| + | One of the immediate problems I realized with this project was that the target functions were far too large. Parallelization would require possibly three or more kernels, which was beyond the scope of this assignment. Instead I decided to focus on the bsp tree portion of the code, and decouple this portion from actual dungeon generating logic. | ||

| + | |||

| + | |||

| + | [[File:NjsimasRecursive.png|1000px]] | ||

| + | |||

| + | |||

| + | The decoupled source-code can be seen above, along with the timing logic in it's respective main function. | ||

| + | |||

| + | |||

| + | [[File:newmytree.png|500px ]] | ||

| + | |||

| + | |||

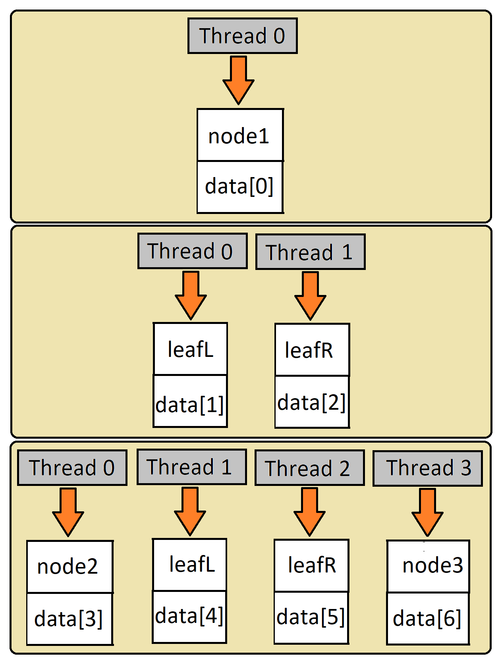

| + | To parrellize a bsp tree required some analysis which can be seen above. The tree itself must be stored in memory according to some design. The way I decided to organize the leafs and nodes of the tree, in a linear context, can be seen below. The above image shows how the tree design corresponds to the linear arrangement in memory. | ||

| + | |||

| + | |||

| + | [[File:Warpss.png|500px]] | ||

| + | The image above reflects the design I chose with respect to the warps and thread behavior. Each warp processes a single 'round' of the tree, utilizing as many threads equivalent to the number of leaves in that 'round'. | ||

| − | |||

| − | [[File: | + | [[File:NjsimasRegularKernel.png|1000px]] |

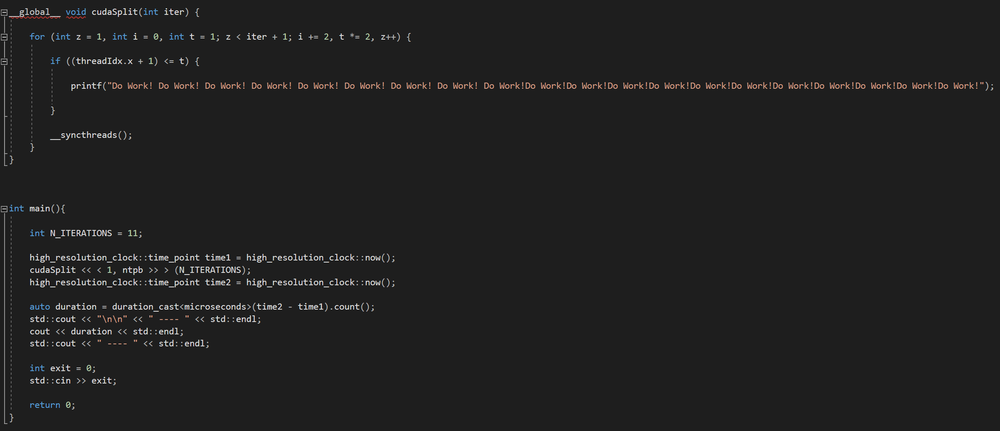

| + | The above image shows the kernel with the benchmarking logic in the main function. T | ||

| − | |||

| − | |||

| − | [[File: | + | [[File:Outputgood.png|500px]] |

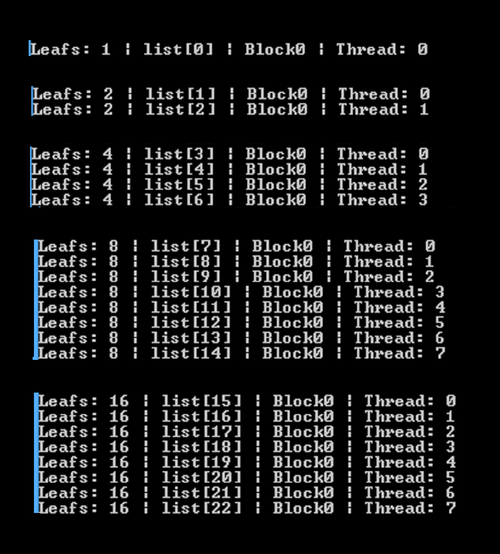

| − | + | This next image shows an output example for the first five elements. | |

| − | [[File: | + | |

| + | [[File:NjsimasGraphKernelRecursive.png|500px]] | ||

| + | |||

| + | |||

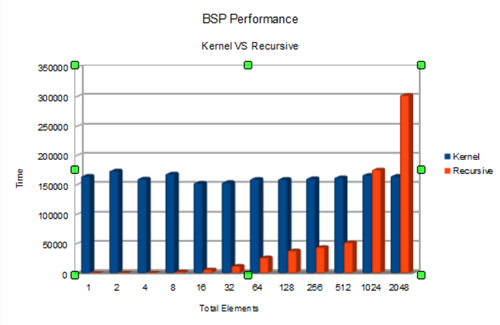

| + | Finally, the graphs above show a comparison in performance between the first, decouple function and the previous kernel. As you can see, the original, recursive function performs faster up until the 600th element where they are equal. The parallel kernel subsequently outperforms the recursive function. | ||

=== Assignment 3 === | === Assignment 3 === | ||

| − | + | ||

| + | From a theoretical perspective, the algorithm should benefit from shared memory access, provided there are operations being performed on the data. | ||

| + | |||

| + | To demonstrate this, I modified the kernel to perform a division on each node of the tree, storing the result in the two leaf indices. | ||

| + | |||

| + | To do this, I would need to be able to access the root node of a given leaf in a parallel way. The determinant factor would have to be the thread index. Achieving this was a bit of a math problem. | ||

| + | |||

| + | The fact is, this problem could have been solved very simply using if statements. This would have, however, increase thread divergence and therefore slowed down the kernel. In this way, the fact that I solved this problem mathematically could be viewed as an optimization in itself, by reducing thread divergence. | ||

| + | |||

| + | |||

| + | [[File:njsimasroot.png|500px ]] | ||

| + | |||

| + | |||

| + | [[File:njsimastables.png|500px ]] | ||

| + | |||

| + | |||

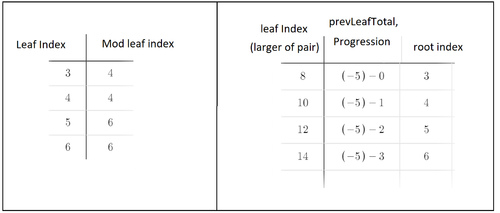

| + | The first step was using modulus to set the odd value in the leaf pair equal to the greater value: | ||

| + | |||

| + | |||

| + | modIndex =((ti + q) + (ti + 1) % 2) | ||

| + | |||

| + | |||

| + | I needed the number of nodes in the previous round, plus one, to later subtract from the current array index, basically 'walking backwards': | ||

| + | |||

| + | |||

| + | prevLeafTotal = ((t / 2) + 1); | ||

| + | |||

| + | |||

| + | The next step was making the thread index progression correspond to the root progression. | ||

| + | |||

| + | For example, in round four, the thread index progression is 1, 3, 5, 7, for the greater of the leaf pair. This needed to be 0, 1, 2, 3. | ||

| + | |||

| + | To do this, I add one, divide by two, and subtract one, and then add the modulus, as in step one, to account for the lesser of the leaf pair. | ||

| + | |||

| + | |||

| + | Progression = (((ti + 1) / 2) – 1) + ((ti + q) + (ti + 1) % 2) | ||

| + | |||

| + | |||

| + | Finally, I add this progression to the previous leaf total, and subtract the result from the new array index from stage 1: | ||

| + | |||

| + | |||

| + | root = modIndex – (leafTotal + progression) | ||

| + | |||

| + | |||

| + | Since this was all to be done by a single thread, in a single step, I condensed the formula into one line. | ||

| + | |||

| + | |||

| + | ((ti + q) + ((ti + 1) % 2)) - (((((ti + 1) / 2) - 1) + ((ti + 1) % 2)) + (t / 2) + 1); | ||

| + | |||

| + | |||

| + | |||

| + | [[File:njsimasGlobalKernel.png|1024px ]] | ||

| + | |||

| + | |||

| + | [[File:njsimasSharedMain.png|500px ]] | ||

| + | |||

| + | |||

| + | |||

| + | The improved kernel can be seen above. | ||

| + | |||

| + | |||

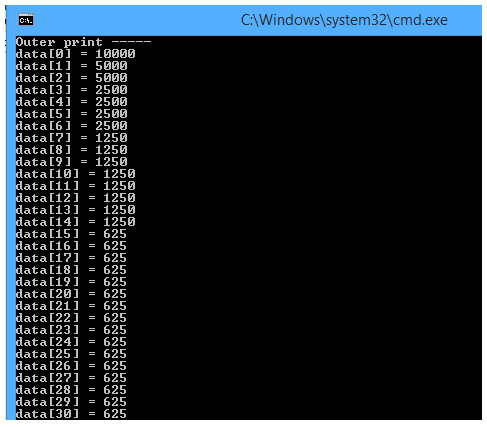

| + | [[File:njsimasfinaloutput.png|500px ]] | ||

| + | |||

| + | |||

| + | An example of the output can be seen above. | ||

| + | |||

| + | |||

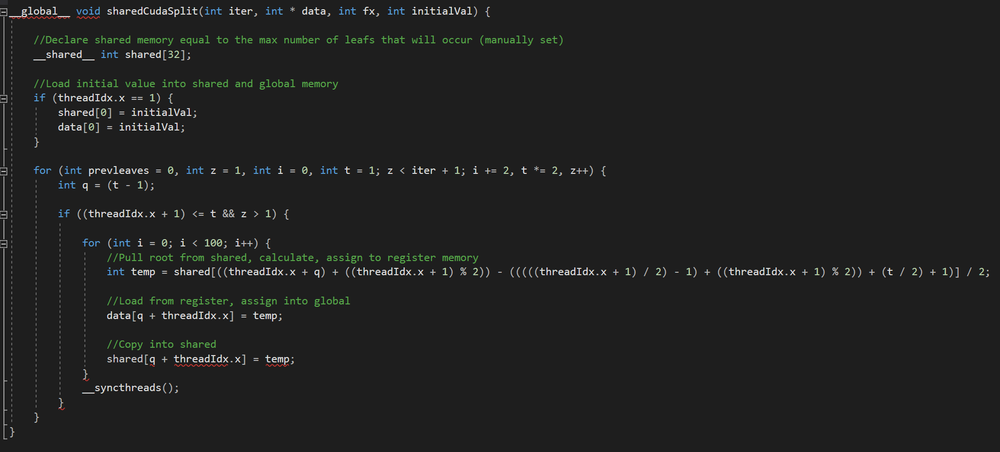

| + | [[File:njsimasSharedKernel.png|1000px ]] | ||

| + | |||

| + | |||

| + | |||

| + | I then made version of the kernel that used shared memory, seen above. | ||

| + | |||

| + | |||

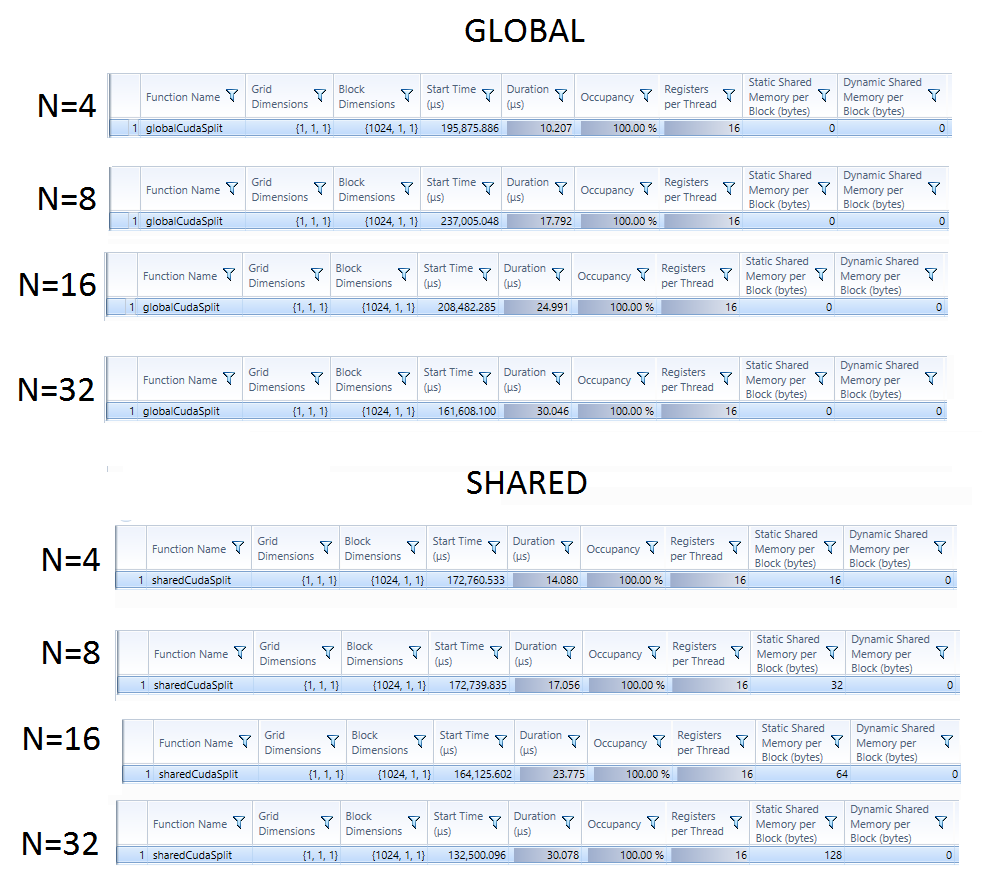

| + | [[File:njsimasGpuProfile.png|1000px ]] | ||

| + | |||

| + | |||

| + | |||

| + | [[File:njsimasGraphGlobalShared.png|800px ]] | ||

| + | |||

| + | |||

| + | |||

| + | The performance comparison can be seen in the graph above. The graph shows no significant improvement. | ||

| + | |||

| + | Although the shared memory likely performs faster when it is read, implementing shared memory required adding one instruction. By comparison, the global kernel 1) reads from global memory and then 2) writes to global memory. The shared kernel 1) reads from shared memory 2) writes to global memory and then 3) writes to shared memory. This extra instruction reduces the benefit from using shared memory. | ||

| + | |||

| + | One other likely cause for such similar results is the effect of coalescence on memory access. Each leaf node in a round is stored concurrently within the global memory. This means that each thread is accessing concurrent memory, and the hardware is likely merging these global read requests which reduces the detriment of global access. | ||

| + | |||

| + | The extra instruction reduces the benefit gain from shared memory, and the coalesced access speeds up the global memory. Despite both of these factors, the results are close to the same. This demonstrates just how much shared memory is faster, but also shows that the use of shared memory is not very effective in this situation. | ||

| + | |||

| + | I only tested this up to 32 elements to be sure I wouldn't run out of shared memory. For larger data sets, multiple blocks would have to be partitioned by memory, per block, and threads per block. These values will constrain the maximum leaf number. | ||

Latest revision as of 10:01, 25 April 2018

Solo Act

Student Number: 069 920 148

Progress

Assignment 1

INTRODUCTION:

- Project selection (bsp trees, dungeon generator), profiling

- Parallelize (decoupling, kernel)

- Optimize (get root, comparison)

Profile

For Assignment 1, I selected an open-source dungeon generator project from GitHub.

https://github.com/DivineChili/BSP-Dungeon-Generator/

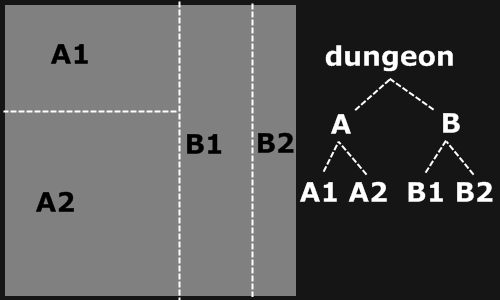

As you can see from the above images, the purpose of this program is to generate a map image for game content. The program achieves this by repeatedly splitting a 2D space using a binary space partitioning (BSP) algorithm.

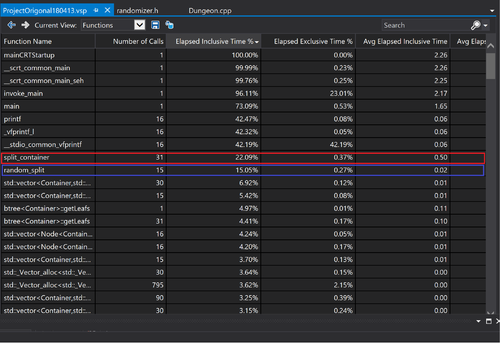

The project was written for Windows, so I decided to profile it initially with the built-in Visual Studio profiler.

The above image shows the inclusive time percentage per function. The results for the two functions of interest are 22% and 15%, respectively. The only entries higher are Main and the library components used for printing. This indicates that most of the time spent in the project's own code is spent in these two functions. Both are therefore hotspots and may benefit from parallelization.

Assignment 2

Parallelize

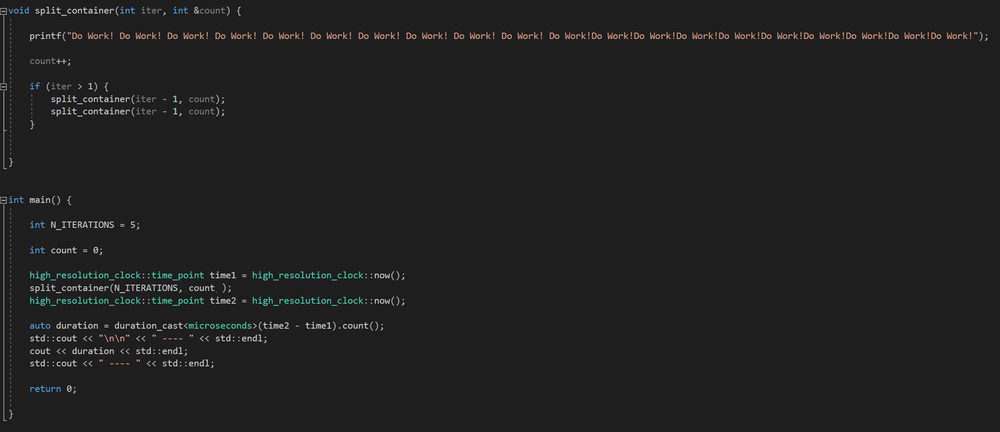

One of the immediate problems I noticed with this project was that the target functions were far too large. Parallelizing them would possibly require three or more kernels, which was beyond the scope of this assignment. Instead, I decided to focus on the BSP tree portion of the code and decouple it from the actual dungeon-generation logic.

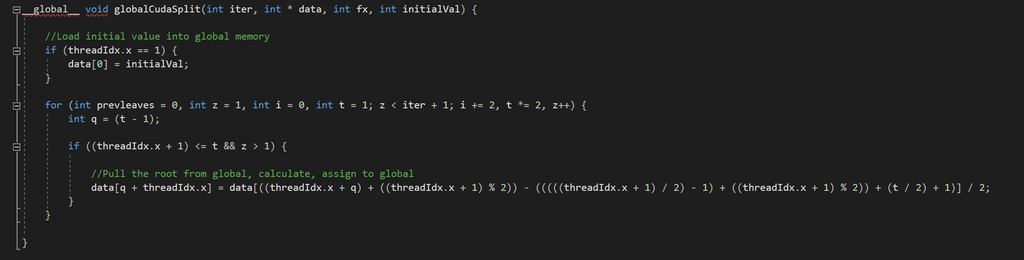

The decoupled source code can be seen above, along with the timing logic in its respective main function.
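As a rough illustration of what a decoupled recursive routine with timing logic might look like, here is a minimal sketch. The names `splitRecursive` and `timeBuildMicros`, and the choice to halve a float value at each split, are assumptions for illustration only and are not taken from the original source code.

```cpp
#include <chrono>
#include <vector>

// Hypothetical stand-in for the decoupled recursive BSP routine: each call
// halves the current value and recurses into two children until maxDepth.
void splitRecursive(std::vector<float>& leaves, float value, int depth, int maxDepth) {
    if (depth == maxDepth) {
        leaves.push_back(value);  // reached a leaf: record its value
        return;
    }
    splitRecursive(leaves, value / 2.0f, depth + 1, maxDepth);
    splitRecursive(leaves, value / 2.0f, depth + 1, maxDepth);
}

// Times one full recursive build and returns the elapsed microseconds.
long long timeBuildMicros(int maxDepth, std::vector<float>& leaves) {
    const auto start = std::chrono::steady_clock::now();
    splitRecursive(leaves, 1.0f, 0, maxDepth);
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::microseconds>(end - start).count();
}
```

A steady clock is used here because it is monotonic, which makes it a reasonable default for measuring short intervals.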

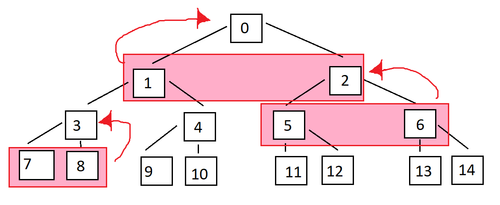

Parallelizing a BSP tree required some analysis, which can be seen above. The tree itself must be stored in memory according to some design. The way I decided to organize the leaves and nodes of the tree, in a linear context, can be seen below. The above image shows how the tree design corresponds to the linear arrangement in memory.

The image above reflects the design I chose with respect to warp and thread behavior. Each warp processes a single 'round' of the tree, using as many threads as there are leaves in that 'round'.
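The round-based design can be sketched on the host as follows. This assumes a level-order layout in which round 1 is the root, round n holds 2^(n-1) leaves, and each round is stored directly after the previous one. The exact layout used in the kernel is only shown in the images, so the helper names `roundSize`, `roundOffset`, and `fillByRound` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of leaves in a round (rounds are 1-based; round 1 is the root).
std::size_t roundSize(unsigned round) {
    assert(round >= 1);
    return std::size_t{1} << (round - 1);
}

// Array index of the first leaf of a round, assuming level-order storage.
std::size_t roundOffset(unsigned round) {
    return roundSize(round) - 1;
}

// Fills a tree of `rounds` rounds, one round at a time. Each inner-loop
// iteration stands in for the work one thread `ti` would do in that round.
std::vector<int> fillByRound(unsigned rounds) {
    std::vector<int> tree(roundOffset(rounds + 1), 0);  // 2^rounds - 1 nodes
    for (unsigned r = 1; r <= rounds; ++r) {
        for (std::size_t ti = 0; ti < roundSize(r); ++ti) {
            tree[roundOffset(r) + ti] = static_cast<int>(r);  // tag node with its round
        }
    }
    return tree;
}
```

For three rounds this yields seven nodes tagged 1, 2, 2, 3, 3, 3, 3, which shows how each round occupies one contiguous run of the array.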

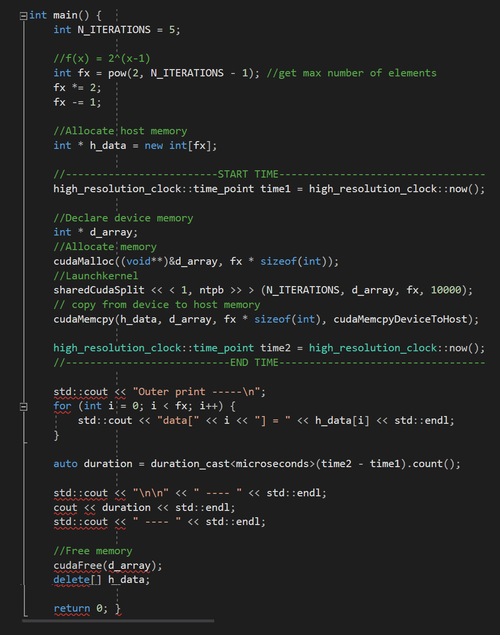

The above image shows the kernel with the benchmarking logic in the main function.

This next image shows an output example for the first five elements.

Finally, the graphs above show a performance comparison between the first, decoupled function and the kernel. As you can see, the original recursive function is faster up to the 600th element, where the two are equal. Beyond that point, the parallel kernel outperforms the recursive function.

Assignment 3

From a theoretical perspective, the algorithm should benefit from shared memory access, provided there are operations being performed on the data.

To demonstrate this, I modified the kernel to perform a division on each node of the tree, storing the result in the two leaf indices.

To do this, I needed to be able to access the root node of a given leaf in a parallel way. The determining factor would have to be the thread index. Achieving this was a bit of a math problem.

The fact is, this problem could have been solved very simply using if statements. This would, however, have increased thread divergence and therefore slowed down the kernel. In this way, solving the problem mathematically could be viewed as an optimization in itself, because it reduces thread divergence.

The first step was using modulus to move the lesser (even) index in each leaf pair up to the greater (odd) one:

modIndex = (ti + q) + ((ti + 1) % 2)

I needed the number of nodes in the previous round, plus one, to later subtract from the current array index, basically 'walking backwards':

prevLeafTotal = ((t / 2) + 1);

The next step was making the thread index progression correspond to the root progression.

For example, in round four, the thread index progression is 1, 3, 5, 7, for the greater of the leaf pair. This needed to be 0, 1, 2, 3.

To do this, I add one, divide by two, and subtract one, and then add the modulus, as in step one, to account for the lesser of the leaf pair.

progression = (((ti + 1) / 2) - 1) + ((ti + 1) % 2)

Finally, I add this progression to the previous leaf total, and subtract the result from the new array index from step one:

root = modIndex - (prevLeafTotal + progression)

Since this was all to be done by a single thread, in a single step, I condensed the formula into one line.

((ti + q) + ((ti + 1) % 2)) - (((((ti + 1) / 2) - 1) + ((ti + 1) % 2)) + (t / 2) + 1);
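To check the condensed formula, the sketch below wraps it in a function and compares it with one possible branching formulation. It assumes `ti` is the thread index within the round, `t` is the number of leaves in the round, and `q` is the array offset of the round's first leaf. The test value q = t - 1 corresponds to a level-order layout, which is an assumption rather than something the text states. The function names are hypothetical.

```cpp
#include <cassert>

// Branch-free root index, transcribed from the one-line formula in the text.
// ti: thread index in the round, t: leaves in the round,
// q: array offset of the round's first leaf (assumed meaning).
int rootIndex(int ti, int t, int q) {
    assert(ti >= 0 && ti < t);
    return ((ti + q) + ((ti + 1) % 2))
         - (((((ti + 1) / 2) - 1) + ((ti + 1) % 2)) + (t / 2) + 1);
}

// One possible if-based equivalent, shown only for comparison.
int rootIndexBranching(int ti, int t, int q) {
    int index = ti + q;
    if (ti % 2 == 0) {
        index += 1;  // lesser leaf of the pair: step up to the greater one
    }
    return index - (ti / 2) - (t / 2) - 1;
}
```

With t = 8 and q = 7 (round four under the level-order assumption), thread indices 0 through 7 map to roots 3, 3, 4, 4, 5, 5, 6, 6, so both leaves of a pair resolve to the same root.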

The improved kernel can be seen above.

An example of the output can be seen above.

I then made a version of the kernel that used shared memory, seen above.

The performance comparison can be seen in the graph above. The graph shows no significant improvement.

Although shared memory is likely faster to read, implementing it required adding one instruction. By comparison, the global kernel 1) reads from global memory and then 2) writes to global memory. The shared kernel 1) reads from shared memory, 2) writes to global memory, and then 3) writes to shared memory. This extra instruction reduces the benefit of using shared memory.

Another likely cause of such similar results is the effect of coalescing on memory access. The leaf nodes of a round are stored contiguously in global memory. This means that neighboring threads access adjacent memory locations, and the hardware is likely merging these global read requests, which reduces the cost of global access.

The extra instruction reduces the benefit gained from shared memory, and the coalesced access speeds up global memory. Despite both of these factors, the results are close to the same. This demonstrates just how much faster shared memory is, but it also shows that using shared memory is not very effective in this situation.

I only tested this up to 32 elements to be sure I wouldn't run out of shared memory. For larger data sets, the work would have to be partitioned across multiple blocks according to the shared memory available per block and the threads available per block. These limits constrain the maximum number of leaves.
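The two per-block limits can be turned into a rough bound on tree depth. The sketch below assumes the whole tree is held in one block's shared memory in level order, with one thread per leaf of the deepest round. The function name `maxRoundsPerBlock` is hypothetical. The example figures of 48 KB of shared memory and 1024 threads per block are common limits but vary by device; in CUDA the actual values can be read from the `sharedMemPerBlock` and `maxThreadsPerBlock` fields of `cudaDeviceProp`.

```cpp
#include <cassert>
#include <cstddef>

// Largest number of complete rounds a single block could hold, given its
// shared memory size, the size of one node, and its thread limit.
// Assumes round n (1-based) has 2^(n-1) leaves and n rounds need 2^n - 1 nodes.
unsigned maxRoundsPerBlock(std::size_t sharedBytes, std::size_t elemBytes,
                           std::size_t maxThreads) {
    assert(elemBytes > 0);
    const std::size_t capacity = sharedBytes / elemBytes;  // nodes that fit
    unsigned rounds = 0;
    while (true) {
        const std::size_t leaves = std::size_t{1} << rounds;             // leaves in the next round
        const std::size_t nodes = (std::size_t{1} << (rounds + 1)) - 1;  // total nodes if it is added
        if (leaves > maxThreads || nodes > capacity) {
            break;  // the next round would exceed one of the limits
        }
        ++rounds;
    }
    return rounds;
}
```

With 4-byte nodes, 48 KB of shared memory, and 1024 threads, the thread limit is reached first, at 11 rounds (1024 leaves in the last round, 2047 nodes in total).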