Difference between revisions of "Team MMD"

Mradmanovic (talk | contribs) (Created page with "== Team Members (MMD) == # [mailto:dhchang@myseneca.ca?subject=DPS921 Daniel Chang] # [mailto:mlnguyen4@myseneca.ca?subject=DPS921 Mattew Nguyen] # [mailto:mradmanovic@seneca...") |

Mradmanovic (talk | contribs) (→References) |

||

| (3 intermediate revisions by the same user not shown) | |||

| Line 5: | Line 5: | ||

# [mailto:mradmanovic@senecacollege.ca?subject=DPS921 Marko Radmanovic ] | # [mailto:mradmanovic@senecacollege.ca?subject=DPS921 Marko Radmanovic ] | ||

# [mailto:dhchang@myseneca.ca;mlnguyen4@myseneca.ca;mradmanovic@senecacollege.ca?subject=DPS921 eMail All] | # [mailto:dhchang@myseneca.ca;mlnguyen4@myseneca.ca;mradmanovic@senecacollege.ca?subject=DPS921 eMail All] | ||

| + | |||

| + | == Intel Math Kernel Library (MKL) Summary == | ||

| + | |||

| + | The MKL is a library of optimized math routines for science, engineering and financial applications. The library consists of many standard math library along with Intel’s math libraries implementation. Currently it is compatible with Windows, Linux and OS X operating systems. Currently Intel offers native support for C/C++, and Fortran. It is possible to use the MKL with Python but that will depend on the library that you will be required to use. | ||

| + | |||

| + | == Setting up MKL == | ||

| + | |||

| + | Firstly you will need to download the MKL from the following link https://software.intel.com/en-us/mkl. | ||

| + | <br> | ||

| + | Next you will need to install and allow for the intergration of the tools with your version of visual studio. | ||

| + | <br> | ||

| + | Now when you open a new project there will still be two more steps you will need to add to use MKL. The first is adding the mkl.h header file and the second is going under the project properties and select the use MKL option to yes. | ||

| + | |||

| + | == MKL testing == | ||

| + | |||

| + | For our project we wanted to test the performance of MKL verse other Intel parallel solutions such as Cilk Plus and Thread building blocks (TBB). | ||

| + | <br> | ||

| + | We also tested the serial implementation for completions sake | ||

| + | Serial version : | ||

| + | // serial_matmul_naive returns the product a * b using basic serial logic | ||

| + | void serial_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | for (int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | <br> | ||

| + | Serial version with reversed inner loops : | ||

| + | // serial_matmul_reversed returns the product a * b using serial logic loops reversed | ||

| + | void serial_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | for (int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | <br> | ||

| + | TBB version : | ||

| + | // Thread builing blocks matrix multipication | ||

| + | class Body { | ||

| + | double* a; | ||

| + | double* b; | ||

| + | double* c; | ||

| + | int nRows; | ||

| + | int nCols; | ||

| + | public: | ||

| + | Body(double* in1, double* in2, double* out, int nR, int nC) { | ||

| + | a = in1; | ||

| + | b = in2; | ||

| + | c = out; | ||

| + | nRows = nR; | ||

| + | nCols = nC; | ||

| + | } | ||

| + | |||

| + | void operator()(const tbb::blocked_range<int>& r) const { | ||

| + | for (int i = r.begin(); i < r.end() && i < nRows; i++) { | ||

| + | for (int j = 0; j < nCols; j++) { | ||

| + | c[i * nRows + j] = 0.0; | ||

| + | for (int k = 0; k < nRows; k++) | ||

| + | c[i * nRows + j] += a[i * nRows + k] * b[k * nRows + j]; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | }; | ||

| + | <br> | ||

| + | Cilk plus naive version : | ||

| + | // cilkplus_matmul_naive returns the product a * b using cilk_for | ||

| + | void cilkplus_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | cilk_for(int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | <br> | ||

| + | Cilk plus with vectorization : | ||

| + | // cilkplus_matmul_vectorized returns the product a * b using cilk_for and pragma simd (vectorization) | ||

| + | void cilkplus_matmul_vectorized(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | cilk_for(int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | #pragma simd | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | <br> | ||

| + | MKL version (compressed) : | ||

| + | double *A, *B, *C; | ||

| + | double alpha = 1.0, beta = 0.0; | ||

| + | ... | ||

| + | A = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64); | ||

| + | B = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64); | ||

| + | C = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64); | ||

| + | ... | ||

| + | if (A == NULL || B == NULL || C == NULL) { | ||

| + | std::cout << "\n ERROR: Can't allocate memory for matrices. Aborting... \n\n"; | ||

| + | mkl_free(A); | ||

| + | mkl_free(B); | ||

| + | mkl_free(C); | ||

| + | return 3; | ||

| + | } | ||

| + | ... | ||

| + | cblas_dgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans, | ||

| + | numRows, numCols, 1, alpha, A, numRows, B, numCols, beta, C, numRows); | ||

| + | ... | ||

| + | //// deallocate mkl | ||

| + | mkl_free(A); | ||

| + | mkl_free(B); | ||

| + | mkl_free(C); | ||

| + | <br> | ||

| + | |||

| + | == Full code & Results == | ||

| + | Here is the full program source code that was used during our tests. | ||

| + | <pre> | ||

| + | //DPS921 - Project | ||

| + | |||

| + | #include <iostream> | ||

| + | #include <cstdlib> | ||

| + | #include <chrono> | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | #include <cilk/cilk.h> | ||

| + | #include <tbb/tbb.h> | ||

| + | #include "mkl.h" | ||

| + | |||

| + | // report system time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<milliseconds>(span); | ||

| + | std::cout << msg << " - took - " << | ||

| + | ms.count() << " milliseconds" << std::endl; | ||

| + | } | ||

| + | |||

| + | // Print matricies | ||

| + | void printMatricies(const double* a, const double* b, double* mat, int numRows, int numCols) { | ||

| + | if (numRows <= 5 && numCols <= 5) | ||

| + | { | ||

| + | for (int i = 0; i < numRows; ++i) { | ||

| + | for (int j = 0; j < numCols; ++j) { | ||

| + | std::cout << a[i * numRows + j] << " "; | ||

| + | } | ||

| + | std::cout << std::endl; | ||

| + | } | ||

| + | std::cout << std::endl; | ||

| + | for (int i = 0; i < numRows; ++i) { | ||

| + | for (int j = 0; j < numCols; ++j) { | ||

| + | std::cout << b[i * numRows + j] << " "; | ||

| + | } | ||

| + | std::cout << std::endl; | ||

| + | } | ||

| + | std::cout << std::endl; | ||

| + | for (int i = 0; i < numRows; ++i) { | ||

| + | for (int j = 0; j < numCols; ++j) { | ||

| + | std::cout << mat[i * numRows + j] << " "; | ||

| + | } | ||

| + | std::cout << std::endl; | ||

| + | } | ||

| + | std::cout << std::endl; | ||

| + | } | ||

| + | } | ||

| + | |||

| + | // serial_matmul_naive returns the product a * b using basic serial logic | ||

| + | void serial_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | for (int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | // serial_matmul_reversed returns the product a * b using serial logic loops reversed | ||

| + | // TODO: actually test this one | ||

| + | void serial_matmul_reversed(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | for (int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | c[i * numRows + j] = 0.0; | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | c[i * numRows + j] += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | // Thread builing blocks matrix multipication | ||

| + | class Body { | ||

| + | double* a; | ||

| + | double* b; | ||

| + | double* c; | ||

| + | int nRows; | ||

| + | int nCols; | ||

| + | public: | ||

| + | Body(double* in1, double* in2, double* out, int nR, int nC) { | ||

| + | a = in1; | ||

| + | b = in2; | ||

| + | c = out; | ||

| + | nRows = nR; | ||

| + | nCols = nC; | ||

| + | } | ||

| + | |||

| + | void operator()(const tbb::blocked_range<int>& r) const { | ||

| + | for (int i = r.begin(); i < r.end() && i < nRows; i++) { | ||

| + | for (int j = 0; j < nCols; j++) { | ||

| + | c[i * nRows + j] = 0.0; | ||

| + | for (int k = 0; k < nRows; k++) | ||

| + | c[i * nRows + j] += a[i * nRows + k] * b[k * nRows + j]; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | }; | ||

| + | |||

| + | // cilkplus_matmul_naive returns the product a * b using cilk_for | ||

| + | void cilkplus_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | cilk_for(int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | // cilkplus_matmul_vectorized returns the product a * b using cilk_for and pragma simd (vectorization) | ||

| + | void cilkplus_matmul_vectorized(const double* a, const double* b, double* c, int numRows, int numCols) { | ||

| + | cilk_for(int i = 0; i < numRows; i++) { | ||

| + | for (int j = 0; j < numCols; j++) { | ||

| + | double sum = 0.0; | ||

| + | #pragma simd | ||

| + | for (int k = 0; k < numRows; k++) | ||

| + | sum += a[i * numRows + k] * b[k * numRows + j]; | ||

| + | c[i * numRows + j] = sum; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

| + | int main(int argc, char** argv) { | ||

| + | if (argc != 4) { | ||

| + | std::cerr << "*** Incorrect number of arguments ***\n"; | ||

| + | std::cerr << "Usage: " << argv[0] << " Method #rows #columns\n"; | ||

| + | return 1; | ||

| + | } | ||

| + | |||

| + | int numRows = std::atoi(argv[2]); | ||

| + | int numCols = std::atoi(argv[3]); | ||

| + | |||

| + | double *a, *b, *mat; | ||

| + | try | ||

| + | { | ||

| + | a = new double[numRows * numCols]; | ||

| + | b = new double[numRows * numCols]; | ||

| + | mat = new double[numRows * numCols]; | ||

| + | } | ||

| + | catch (std::exception& e) | ||

| + | { | ||

| + | std::cerr << "*** Failed to Allocate Memory for " | ||

| + | << numRows << " by " << numCols << "matrices" << std::endl; | ||

| + | return 2; | ||

| + | } | ||

| + | |||

| + | |||

| + | // initialize a and b | ||

| + | for (int i = 0; i < numRows * numCols; ++i) | ||

| + | { | ||

| + | a[i] = 0.0; | ||

| + | b[i] = 0.0; | ||

| + | } | ||

| + | for (int i = 0; i < numRows; ++i) | ||

| + | { | ||

| + | a[i * numRows + i] = 1.0; | ||

| + | b[i * numRows + i] = 1.0; | ||

| + | } | ||

| + | |||

| + | steady_clock::time_point ts, te; | ||

| + | |||

| + | // Serial | ||

| + | ts = steady_clock::now(); | ||

| + | serial_matmul_naive(a, b, mat, numRows, numCols); | ||

| + | printMatricies(a, b, mat, numRows, numCols); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("\n - Serial matrix naive multiplication ", te - ts); | ||

| + | std::cout << std::endl; | ||

| + | |||

| + | ts = steady_clock::now(); | ||

| + | serial_matmul_reversed(a, b, mat, numRows, numCols); | ||

| + | printMatricies(a, b, mat, numRows, numCols); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("\n - Serial matrix reversed multiplication ", te - ts); | ||

| + | std::cout << std::endl; | ||

| + | |||

| + | // TBB | ||

| + | ts = steady_clock::now(); | ||

| + | Body const body(a, b, mat, numRows, numCols); | ||

| + | size_t grainsize = 100000; | ||

| + | tbb::blocked_range<int> range(0, numRows, grainsize); | ||

| + | tbb::parallel_for(range, body); | ||

| + | printMatricies(a, b, mat, numRows, numCols); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("\n - TBB matrix multiplication ", te - ts); | ||

| + | std::cout << std::endl; | ||

| + | |||

| + | // Cilkplus | ||

| + | ts = steady_clock::now(); | ||

| + | cilkplus_matmul_naive(a, b, mat, numRows, numCols); | ||

| + | printMatricies(a, b, mat, numRows, numCols); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("\n - Cilkplus matrix multiplication naive ", te - ts); | ||

| + | std::cout << std::endl; | ||

| + | |||

| + | ts = steady_clock::now(); | ||

| + | cilkplus_matmul_vectorized(a, b, mat, numRows, numCols); | ||

| + | printMatricies(a, b, mat, numRows, numCols); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("\n - Cilkplus matrix multiplication vectorization ", te - ts); | ||

| + | std::cout << std::endl; | ||

| + | |||

| + | // Math kernel Library | ||

| + | // math kernel // | ||

| + | double *A, *B, *C; | ||

| + | double alpha = 1.0, beta = 0.0; | ||

| + | |||

| + | A = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64); | ||

| + | B = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64); | ||

| + | C = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64); | ||

| + | |||

| + | // initialize a and b | ||

| + | for (int i = 0; i < numRows * numCols; ++i) | ||

| + | { | ||

| + | A[i] = 0.0; | ||

| + | B[i] = 0.0; | ||

| + | } | ||

| + | for (int i = 0; i < numRows; ++i) | ||

| + | { | ||

| + | A[i * numRows + i] = 1.0; | ||

| + | B[i * numRows + i] = 1.0; | ||

| + | } | ||

| + | |||

| + | |||

| + | if (A == NULL || B == NULL || C == NULL) { | ||

| + | std::cout << "\n ERROR: Can't allocate memory for matrices. Aborting... \n\n"; | ||

| + | mkl_free(A); | ||

| + | mkl_free(B); | ||

| + | mkl_free(C); | ||

| + | return 3; | ||

| + | } | ||

| + | ts = steady_clock::now(); | ||

| + | cblas_dgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans, | ||

| + | numRows, numCols, 1, alpha, A, numRows, B, numCols, beta, C, numRows); | ||

| + | printMatricies(A, B, C, numRows, numCols); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("\n - MKL matrix multiplication ", te - ts); | ||

| + | std::cout << std::endl; | ||

| + | |||

| + | |||

| + | //// deallocate mkl | ||

| + | mkl_free(A); | ||

| + | mkl_free(B); | ||

| + | mkl_free(C); | ||

| + | // math kernel // | ||

| + | |||

| + | |||

| + | // deallocate | ||

| + | delete[] a; | ||

| + | delete[] b; | ||

| + | delete[] mat; | ||

| + | |||

| + | std::cout << std::endl; | ||

| + | system("pause"); | ||

| + | } | ||

| + | |||

| + | </pre> | ||

| + | |||

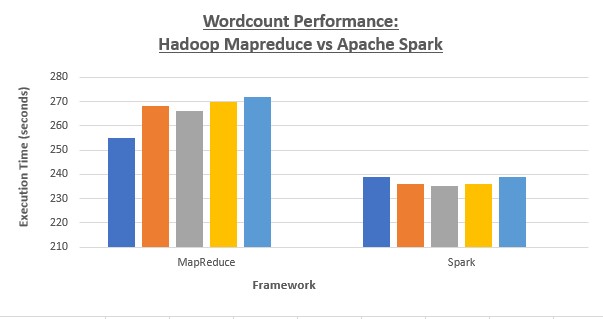

| + | And our results | ||

| + | |||

| + | [[File:results.jpg]] | ||

| + | |||

| + | == References == | ||

| + | |||

| + | https://software.intel.com/sites/default/files/managed/41/4d/Kitty_Indeed.pdf | ||

| + | <br> | ||

| + | https://software.intel.com/en-us/mkl-developer-reference-c-cblas-gemm | ||

| + | <br> | ||

| + | https://software.intel.com/en-us/mkl | ||

| + | <br> | ||

| + | https://software.intel.com/en-us/articles/manufacturing-package-fault-detection-using-deep-learning | ||

Latest revision as of 00:59, 21 December 2017

Contents

Team Members (MMD)

Intel Math Kernel Library (MKL) Summary

The MKL is a library of optimized math routines for science, engineering and financial applications. The library consists of many standard math library along with Intel’s math libraries implementation. Currently it is compatible with Windows, Linux and OS X operating systems. Currently Intel offers native support for C/C++, and Fortran. It is possible to use the MKL with Python but that will depend on the library that you will be required to use.

Setting up MKL

Firstly you will need to download the MKL from the following link https://software.intel.com/en-us/mkl.

Next you will need to install and allow for the intergration of the tools with your version of visual studio.

Now when you open a new project there will still be two more steps you will need to add to use MKL. The first is adding the mkl.h header file and the second is going under the project properties and select the use MKL option to yes.

MKL testing

For our project we wanted to test the performance of MKL verse other Intel parallel solutions such as Cilk Plus and Thread building blocks (TBB).

We also tested the serial implementation for completions sake

Serial version :

// serial_matmul_naive returns the product a * b using basic serial logic

void serial_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) {

for (int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

Serial version with reversed inner loops :

// serial_matmul_reversed returns the product a * b using serial logic loops reversed

void serial_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) {

for (int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

TBB version :

// Thread builing blocks matrix multipication

class Body {

double* a;

double* b;

double* c;

int nRows;

int nCols;

public:

Body(double* in1, double* in2, double* out, int nR, int nC) {

a = in1;

b = in2;

c = out;

nRows = nR;

nCols = nC;

}

void operator()(const tbb::blocked_range<int>& r) const {

for (int i = r.begin(); i < r.end() && i < nRows; i++) {

for (int j = 0; j < nCols; j++) {

c[i * nRows + j] = 0.0;

for (int k = 0; k < nRows; k++)

c[i * nRows + j] += a[i * nRows + k] * b[k * nRows + j];

}

}

}

};

Cilk plus naive version :

// cilkplus_matmul_naive returns the product a * b using cilk_for

void cilkplus_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) {

cilk_for(int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

Cilk plus with vectorization :

// cilkplus_matmul_vectorized returns the product a * b using cilk_for and pragma simd (vectorization)

void cilkplus_matmul_vectorized(const double* a, const double* b, double* c, int numRows, int numCols) {

cilk_for(int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

#pragma simd

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

MKL version (compressed) :

double *A, *B, *C;

double alpha = 1.0, beta = 0.0;

...

A = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64);

B = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64);

C = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64);

...

if (A == NULL || B == NULL || C == NULL) {

std::cout << "\n ERROR: Can't allocate memory for matrices. Aborting... \n\n";

mkl_free(A);

mkl_free(B);

mkl_free(C);

return 3;

}

...

cblas_dgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans,

numRows, numCols, 1, alpha, A, numRows, B, numCols, beta, C, numRows);

...

//// deallocate mkl

mkl_free(A);

mkl_free(B);

mkl_free(C);

Full code & Results

Here is the full program source code that was used during our tests.

//DPS921 - Project

#include <iostream>

#include <cstdlib>

#include <chrono>

using namespace std::chrono;

#include <cilk/cilk.h>

#include <tbb/tbb.h>

#include "mkl.h"

// report system time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<milliseconds>(span);

std::cout << msg << " - took - " <<

ms.count() << " milliseconds" << std::endl;

}

// Print matricies

void printMatricies(const double* a, const double* b, double* mat, int numRows, int numCols) {

if (numRows <= 5 && numCols <= 5)

{

for (int i = 0; i < numRows; ++i) {

for (int j = 0; j < numCols; ++j) {

std::cout << a[i * numRows + j] << " ";

}

std::cout << std::endl;

}

std::cout << std::endl;

for (int i = 0; i < numRows; ++i) {

for (int j = 0; j < numCols; ++j) {

std::cout << b[i * numRows + j] << " ";

}

std::cout << std::endl;

}

std::cout << std::endl;

for (int i = 0; i < numRows; ++i) {

for (int j = 0; j < numCols; ++j) {

std::cout << mat[i * numRows + j] << " ";

}

std::cout << std::endl;

}

std::cout << std::endl;

}

}

// serial_matmul_naive returns the product a * b using basic serial logic

void serial_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) {

for (int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

// serial_matmul_reversed returns the product a * b using serial logic loops reversed

// TODO: actually test this one

void serial_matmul_reversed(const double* a, const double* b, double* c, int numRows, int numCols) {

for (int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

c[i * numRows + j] = 0.0;

for (int k = 0; k < numRows; k++)

c[i * numRows + j] += a[i * numRows + k] * b[k * numRows + j];

}

}

}

// Thread builing blocks matrix multipication

class Body {

double* a;

double* b;

double* c;

int nRows;

int nCols;

public:

Body(double* in1, double* in2, double* out, int nR, int nC) {

a = in1;

b = in2;

c = out;

nRows = nR;

nCols = nC;

}

void operator()(const tbb::blocked_range<int>& r) const {

for (int i = r.begin(); i < r.end() && i < nRows; i++) {

for (int j = 0; j < nCols; j++) {

c[i * nRows + j] = 0.0;

for (int k = 0; k < nRows; k++)

c[i * nRows + j] += a[i * nRows + k] * b[k * nRows + j];

}

}

}

};

// cilkplus_matmul_naive returns the product a * b using cilk_for

void cilkplus_matmul_naive(const double* a, const double* b, double* c, int numRows, int numCols) {

cilk_for(int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

// cilkplus_matmul_vectorized returns the product a * b using cilk_for and pragma simd (vectorization)

void cilkplus_matmul_vectorized(const double* a, const double* b, double* c, int numRows, int numCols) {

cilk_for(int i = 0; i < numRows; i++) {

for (int j = 0; j < numCols; j++) {

double sum = 0.0;

#pragma simd

for (int k = 0; k < numRows; k++)

sum += a[i * numRows + k] * b[k * numRows + j];

c[i * numRows + j] = sum;

}

}

}

int main(int argc, char** argv) {

if (argc != 4) {

std::cerr << "*** Incorrect number of arguments ***\n";

std::cerr << "Usage: " << argv[0] << " Method #rows #columns\n";

return 1;

}

int numRows = std::atoi(argv[2]);

int numCols = std::atoi(argv[3]);

double *a, *b, *mat;

try

{

a = new double[numRows * numCols];

b = new double[numRows * numCols];

mat = new double[numRows * numCols];

}

catch (std::exception& e)

{

std::cerr << "*** Failed to Allocate Memory for "

<< numRows << " by " << numCols << "matrices" << std::endl;

return 2;

}

// initialize a and b

for (int i = 0; i < numRows * numCols; ++i)

{

a[i] = 0.0;

b[i] = 0.0;

}

for (int i = 0; i < numRows; ++i)

{

a[i * numRows + i] = 1.0;

b[i * numRows + i] = 1.0;

}

steady_clock::time_point ts, te;

// Serial

ts = steady_clock::now();

serial_matmul_naive(a, b, mat, numRows, numCols);

printMatricies(a, b, mat, numRows, numCols);

te = steady_clock::now();

reportTime("\n - Serial matrix naive multiplication ", te - ts);

std::cout << std::endl;

ts = steady_clock::now();

serial_matmul_reversed(a, b, mat, numRows, numCols);

printMatricies(a, b, mat, numRows, numCols);

te = steady_clock::now();

reportTime("\n - Serial matrix reversed multiplication ", te - ts);

std::cout << std::endl;

// TBB

ts = steady_clock::now();

Body const body(a, b, mat, numRows, numCols);

size_t grainsize = 100000;

tbb::blocked_range<int> range(0, numRows, grainsize);

tbb::parallel_for(range, body);

printMatricies(a, b, mat, numRows, numCols);

te = steady_clock::now();

reportTime("\n - TBB matrix multiplication ", te - ts);

std::cout << std::endl;

// Cilkplus

ts = steady_clock::now();

cilkplus_matmul_naive(a, b, mat, numRows, numCols);

printMatricies(a, b, mat, numRows, numCols);

te = steady_clock::now();

reportTime("\n - Cilkplus matrix multiplication naive ", te - ts);

std::cout << std::endl;

ts = steady_clock::now();

cilkplus_matmul_vectorized(a, b, mat, numRows, numCols);

printMatricies(a, b, mat, numRows, numCols);

te = steady_clock::now();

reportTime("\n - Cilkplus matrix multiplication vectorization ", te - ts);

std::cout << std::endl;

// Math kernel Library

// math kernel //

double *A, *B, *C;

double alpha = 1.0, beta = 0.0;

A = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64);

B = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64);

C = (double *)mkl_malloc(numRows * numCols * sizeof(double), 64);

// initialize a and b

for (int i = 0; i < numRows * numCols; ++i)

{

A[i] = 0.0;

B[i] = 0.0;

}

for (int i = 0; i < numRows; ++i)

{

A[i * numRows + i] = 1.0;

B[i * numRows + i] = 1.0;

}

if (A == NULL || B == NULL || C == NULL) {

std::cout << "\n ERROR: Can't allocate memory for matrices. Aborting... \n\n";

mkl_free(A);

mkl_free(B);

mkl_free(C);

return 3;

}

ts = steady_clock::now();

cblas_dgemm(CblasRowMajor, CblasNoTrans, CblasNoTrans,

numRows, numCols, 1, alpha, A, numRows, B, numCols, beta, C, numRows);

printMatricies(A, B, C, numRows, numCols);

te = steady_clock::now();

reportTime("\n - MKL matrix multiplication ", te - ts);

std::cout << std::endl;

//// deallocate mkl

mkl_free(A);

mkl_free(B);

mkl_free(C);

// math kernel //

// deallocate

delete[] a;

delete[] b;

delete[] mat;

std::cout << std::endl;

system("pause");

}

And our results

References

https://software.intel.com/sites/default/files/managed/41/4d/Kitty_Indeed.pdf

https://software.intel.com/en-us/mkl-developer-reference-c-cblas-gemm

https://software.intel.com/en-us/mkl

https://software.intel.com/en-us/articles/manufacturing-package-fault-detection-using-deep-learning