Difference between revisions of "Installation Wizards"

Kramsamujh (talk | contribs) (→Sudoku Brute Force Solver) |

(→Assignment 3) |

||

| (6 intermediate revisions by one other user not shown) | |||

| Line 101: | Line 101: | ||

It is clear that the verifyValue function takes up majority of the execution time being called 356,39,645 times and taking 5.38 seconds of the total execution time. | It is clear that the verifyValue function takes up majority of the execution time being called 356,39,645 times and taking 5.38 seconds of the total execution time. | ||

| + | |||

| + | ==Project Selection== | ||

| + | We have decided to pursue the image processing project as it has more potential for a significant speedup after using the parallel processing. We will focus our time on speeding up the functions used to negate, reflect and enlarge the image. | ||

=== Assignment 2 === | === Assignment 2 === | ||

| + | == Parallel Image Processor == | ||

| + | |||

| + | For the second part of this assignment we proceeded to convert the C implementation of the image processor to a CUDA implementation that would use parallel programming for a performance increase. The first hurdle we encountered when beginning this process was that the image used in our image processor was stored as a 2D array which would create problems when we try to use it in a CUDA implementation. Matt took the responsibility of re writing the code to use a 1D array that stored the pixels in column major order. | ||

| + | |||

| + | Once we had the 1D array to store the image, we were able to start writing kernels for the compute-intensive functions of our processor. We created kernels for reflectImage(), enlargeImage() and negateImage() and began to test for any sort of performance increase. | ||

| + | |||

| + | The optimized version of the source code with the kernels we created can be found [https://pastebin.com/R0xEfN9W here]. | ||

| + | |||

| + | For reference of what the image processor we are using actually does, we have attached the sample input and output image we have been using. This is the results for running the image processor without specifying an enlarge scale which will perform a negation and reflection vertically. | ||

| + | |||

| + | [[File:input.jpg]] [[File:outputPGM.jpg]] | ||

| + | |||

| + | Due to device limitations we were only able to profile our program up to an enlarged scale of 8 but our results still showed a performance increase as the enlarge scale got to 4. | ||

| + | |||

| + | [[File:GpuA2Spreadsheet.png]] | ||

| + | |||

| + | As seen from the results above, our parallel implementation of the image processor shows some significant performance increase as the enlarge scale gets larger. We also noticed that the c++ implementation of the processor ran faster when there was no enlarge and when the enlarge was only 2. We believe that this could be due to the costly operation of cudaMemcpy() since the operations we are doing to the pixels are not that intensive, the time it takes for the cudaMEmcpy() could easily start to exceed the time of the transformations. | ||

| + | |||

| + | To continue our optimizations, we think that we could get more of a performance increase by minimizing the amount of data copied to and from the GPU. We are going to look into storing the image on the GPU until all transformations have been done and then copying the data back from the GPU. | ||

| + | |||

=== Assignment 3 === | === Assignment 3 === | ||

| + | |||

| + | For our optimizations to our parallel code we stuck to our plan and focused on reducing the amount of cudaMemcpy() used throughout each of the functions. | ||

| + | |||

| + | void Image::negateImage(Image& oldImage) | ||

| + | /*negates image*/ | ||

| + | { | ||

| + | Image tempImage(N, M, Q); | ||

| + | |||

| + | int* d_temp = nullptr; | ||

| + | int* d_img = nullptr; | ||

| + | int size = N * M; | ||

| + | int nblocks = size / ntpb; | ||

| + | cudaMalloc((void**)&d_temp, size * sizeof(int)); | ||

| + | cudaMalloc((void**)&d_img, size * sizeof(int)); | ||

| + | cudaMemcpy(d_temp, tempImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | cudaMemcpy(d_img, pixelVal, size * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | |||

| + | negate << <nblocks, ntpb >> >(d_temp, d_img, size); | ||

| + | |||

| + | cudaError_t err = cudaGetLastError(); | ||

| + | if (err != cudaSuccess) | ||

| + | printf("Error: %s\n", cudaGetErrorString(err)); | ||

| + | |||

| + | cudaDeviceSynchronize(); | ||

| + | cudaMemcpy(tempImage.pixelVal, d_temp, size * sizeof(int), cudaMemcpyDeviceToHost); | ||

| + | cudaFree(d_temp); | ||

| + | cudaFree(d_img); | ||

| + | |||

| + | oldImage = tempImage; | ||

| + | } | ||

| + | |||

| + | void Image::reflectImage(bool flag, Image& oldImage) | ||

| + | /*Reflects the Image based on users input*/ | ||

| + | { | ||

| + | int rows = oldImage.N; | ||

| + | int cols = oldImage.M; | ||

| + | Image tempImage(oldImage); | ||

| + | |||

| + | int* d_temp = nullptr; | ||

| + | int* d_img = nullptr; | ||

| + | int size = rows * cols; | ||

| + | int nblocks = size / ntpb; | ||

| + | cudaMalloc((void**)&d_temp, size * sizeof(int)); | ||

| + | cudaMalloc((void**)&d_img, size * sizeof(int)); | ||

| + | cudaMemcpy(d_temp, tempImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | cudaMemcpy(d_img, oldImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | if (flag) { | ||

| + | horizontalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols); | ||

| + | } | ||

| + | else { | ||

| + | verticalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols); | ||

| + | } | ||

| + | cudaDeviceSynchronize(); | ||

| + | cudaMemcpy(tempImage.pixelVal, d_temp, size * sizeof(int), cudaMemcpyDeviceToHost); | ||

| + | cudaFree(d_temp); | ||

| + | cudaFree(d_img); | ||

| + | |||

| + | oldImage = tempImage; | ||

| + | } | ||

| + | |||

| + | void Image::enlargeImage(int value, Image& oldImage) | ||

| + | /*enlarges Image and stores it in tempImage, resizes oldImage and stores the | ||

| + | larger image in oldImage*/ | ||

| + | { | ||

| + | int rows, cols, gray; | ||

| + | int pixel; | ||

| + | int enlargeRow, enlargeCol; | ||

| + | |||

| + | rows = oldImage.N * value; | ||

| + | cols = oldImage.M * value; | ||

| + | gray = oldImage.Q; | ||

| + | |||

| + | Image tempImage(rows, cols, gray); | ||

| + | |||

| + | |||

| + | int r = oldImage.N; | ||

| + | int c = oldImage.M; | ||

| + | |||

| + | int* d_temp = nullptr; | ||

| + | int* d_img = nullptr; | ||

| + | int size = rows * cols; | ||

| + | int nblocks = size / ntpb; | ||

| + | |||

| + | cudaMalloc((void**)&d_temp, size * sizeof(int)); | ||

| + | cudaMalloc((void**)&d_img, size * sizeof(int)); | ||

| + | |||

| + | cudaMemcpy(d_temp, tempImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | cudaMemcpy(d_img, oldImage.pixelVal, (r * c) * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | |||

| + | enlarge << <nblocks, ntpb >> >(d_temp, d_img, size, value, c, cols); | ||

| + | |||

| + | cudaDeviceSynchronize(); | ||

| + | |||

| + | //set the image's data | ||

| + | cudaMemcpy(tempImage.pixelVal, d_temp, size * sizeof(int), cudaMemcpyDeviceToHost); | ||

| + | |||

| + | //free device mem | ||

| + | cudaFree(d_temp); | ||

| + | cudaFree(d_img); | ||

| + | |||

| + | oldImage = tempImage; | ||

| + | } | ||

| + | |||

| + | As seen above, for all of the functions we converted to parallel, there is a lot of copying to and from the device. The use of a temp image makes this task even more intensive as we are basically copying the image to and from the device twice. In order to remove these cudaMemcpy() calls from each of the functions, we decided to simply create the image we are going to process directly onto the device allowing our parallel functions to operate on the pixels of the image without having to copy both the image and and a temporary image to and from the device. With this solution we were able to effectively reduce our 3 cudaMemcpy() calls per function from the parallel code into 0 cudaMemcpy() calls per function in our optimized code. | ||

| + | |||

| + | To implement this solution, we moved the intensive cudaMemcpy() calls to just be called when the image is created as seen below. | ||

| + | Image::Image(int numRows, int numCols, int grayLevels) | ||

| + | /* Creates an Image of numRows x numCols and creates the arrays for it*/ | ||

| + | { | ||

| + | N = numRows; | ||

| + | M = numCols; | ||

| + | Q = grayLevels; | ||

| + | cudaMalloc((void**)&pixelVal, N * M * sizeof(int)); | ||

| + | cudaMemset(pixelVal, 0, N * M * sizeof(int)); | ||

| + | } | ||

| + | |||

| + | Image::Image(const Image& oldImage) | ||

| + | /*copies oldImage into new Image object*/ | ||

| + | { | ||

| + | N = oldImage.N; | ||

| + | M = oldImage.M; | ||

| + | Q = oldImage.Q; | ||

| + | int sz = M * N * sizeof(int); | ||

| + | cudaMalloc((void**)&pixelVal, sz); | ||

| + | cudaMemcpy(pixelVal, oldImage.pixelVal, sz, cudaMemcpyDeviceToDevice); | ||

| + | } | ||

| + | |||

| + | void Image::operator=(const Image& oldImage) | ||

| + | /*copies oldImage into whatever you = it to*/ | ||

| + | { | ||

| + | N = oldImage.N; | ||

| + | M = oldImage.M; | ||

| + | Q = oldImage.Q; | ||

| + | |||

| + | if (pixelVal) { | ||

| + | cudaFree(pixelVal); | ||

| + | } | ||

| + | |||

| + | int sz = M * N * sizeof(int); | ||

| + | cudaMalloc((void**)&pixelVal, sz); | ||

| + | cudaMemcpy(pixelVal, oldImage.pixelVal, sz, cudaMemcpyDeviceToDevice); | ||

| + | } | ||

| + | |||

| + | Since we decided to store the image directly onto the device, we also had to add some cudaMemcpy() calls to the functions that handled retrieving and delivering the image to the host. This process was originally handled in the getPixelVal() and setPixelVal() functions below. | ||

| + | |||

| + | int Image::getPixelVal(int row, int col) | ||

| + | /*returns the gray value of a specific pixel*/ | ||

| + | { | ||

| + | return pixelVal[row * M + col]; | ||

| + | } | ||

| + | |||

| + | void Image::setPixelVal(int row, int col, int value) | ||

| + | /*sets the gray value of a specific pixel*/ | ||

| + | { | ||

| + | pixelVal[row * M + col] = value; | ||

| + | } | ||

| + | |||

| + | But now we need to transfer the data to and from the device which is where the image is stored. We created 2 new functions to handle this called getPixels() and setPixels(). | ||

| + | |||

| + | void Image::getPixels(int row, int col, int sz, int* out) { | ||

| + | cudaMemcpy(out, pixelVal + (row * M + col), sz * sizeof(int), cudaMemcpyDeviceToHost); | ||

| + | } | ||

| + | |||

| + | void Image::setPixels(int row, int col, int sz, int* in) { | ||

| + | cudaMemcpy(pixelVal + (row * M + col), in, sz * sizeof(int), cudaMemcpyHostToDevice); | ||

| + | } | ||

| + | |||

| + | After making these changes we were able to greatly simplify our parallel functions as shown below. | ||

| + | |||

| + | void Image::negateImage(Image& oldImage) | ||

| + | /*negates image*/ | ||

| + | { | ||

| + | Image tempImage(N, M, Q); | ||

| + | |||

| + | int* d_temp = tempImage.pixelVal; | ||

| + | int* d_img = pixelVal; | ||

| + | int size = N * M; | ||

| + | int nblocks = size / ntpb; | ||

| + | |||

| + | negate << <nblocks, ntpb >> >(d_temp, d_img, size); | ||

| + | |||

| + | cudaError_t err = cudaGetLastError(); | ||

| + | if (err != cudaSuccess) | ||

| + | printf("Error: %s\n", cudaGetErrorString(err)); | ||

| + | |||

| + | cudaDeviceSynchronize(); | ||

| + | |||

| + | oldImage = tempImage; | ||

| + | } | ||

| + | |||

| + | void Image::reflectImage(bool flag, Image& oldImage) | ||

| + | /*Reflects the Image based on users input*/ | ||

| + | { | ||

| + | int rows = oldImage.N; | ||

| + | int cols = oldImage.M; | ||

| + | Image tempImage(oldImage); | ||

| + | |||

| + | int* d_temp = tempImage.pixelVal; | ||

| + | int* d_img = oldImage.pixelVal; | ||

| + | int size = rows * cols; | ||

| + | int nblocks = size / ntpb; | ||

| + | |||

| + | if (flag) { | ||

| + | horizontalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols); | ||

| + | } | ||

| + | else { | ||

| + | verticalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols); | ||

| + | } | ||

| + | cudaDeviceSynchronize(); | ||

| + | |||

| + | oldImage = tempImage; | ||

| + | } | ||

| + | |||

| + | void Image::enlargeImage(int value, Image& oldImage) | ||

| + | /*enlarges Image and stores it in tempImage, resizes oldImage and stores the | ||

| + | larger image in oldImage*/ | ||

| + | { | ||

| + | int rows, cols, gray; | ||

| + | int pixel; | ||

| + | int enlargeRow, enlargeCol; | ||

| + | |||

| + | rows = oldImage.N * value; | ||

| + | cols = oldImage.M * value; | ||

| + | gray = oldImage.Q; | ||

| + | |||

| + | Image tempImage(rows, cols, gray); | ||

| + | |||

| + | |||

| + | int r = oldImage.N; | ||

| + | int c = oldImage.M; | ||

| + | |||

| + | int* d_temp = tempImage.pixelVal; | ||

| + | int* d_img = oldImage.pixelVal; | ||

| + | int size = rows * cols; | ||

| + | int nblocks = size / ntpb; | ||

| + | |||

| + | enlarge << <nblocks, ntpb >> >(d_temp, d_img, size, value, c, cols); | ||

| + | |||

| + | cudaDeviceSynchronize(); | ||

| + | |||

| + | oldImage = tempImage; | ||

| + | } | ||

| + | |||

| + | |||

| + | Finally we profiled everything again to ensure that our optimizations would show a performance increase. We noticed a large decrease in the execution time after the optimizations and were satisfied with the outcome. | ||

| + | [[File:gpu optimized.png]] | ||

| + | |||

| + | The source code for our final assignment can be viewed on [https://github.com/mdbell/GPUAssignment GitHub] | ||

Latest revision as of 13:10, 12 April 2017

GPU610/DPS915 | Student List | Group and Project Index | Student Resources | Glossary

Contents

Installation Wizards

Team Members

Progress

Assignment 1

Image Processing

Much like previous students who have taken this course, we decided to look over image processing (leveraging Christopher Ginac's PGM parser http://www.dreamincode.net/forums/topic/76816-image-processing-tutorial/ ).

For our profiling we scale an image up 10 times to better profile larger data sets, as our source image was only ~800kb in size. We then negate the image, and then reflect it.

The time taken to perform all 3 operations:

real 0m22.057s user 0m4.584s sys 0m14.221s

The results of our tests:

Flat profile: Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls s/call s/call name 42.92 6.70 6.70 3 2.23 2.23 Image::operator=(Image const&) 29.15 11.25 4.55 2 2.27 2.27 Image::Image(int, int, int) 15.31 13.64 2.39 1 2.39 2.39 Image::Image(Image const&) 4.04 14.27 0.63 writeImage(char*, Image&) 2.56 14.67 0.40 Image::negateImage(Image&) 2.37 15.04 0.37 86832000 0.00 0.00 Image::getPixelVal(int, int) 2.18 15.38 0.34 Image::reflectImage(bool, Image&) 1.47 15.61 0.23 Image::enlargeImage(int, Image&) 0.00 15.61 0.00 868320 0.00 0.00 Image::setPixelVal(int, int, int) 0.00 15.61 0.00 3 0.00 0.00 Image::~Image() 0.00 15.61 0.00 1 0.00 0.00 _GLOBAL__sub_I__ZN5ImageC2Ev 0.00 15.61 0.00 1 0.00 0.00 _GLOBAL__sub_I_main 0.00 15.61 0.00 1 0.00 0.00 Image::getImageInfo(int&, int&, int&)

As we can see, negating the image as well as reflecting and enlarging it are all costly operations, and we believe that we can optimize it to take far less time.

Sudoku Brute Force Solver

For this first assignment I decided to look into a brute force sudoku solver to see if it could benefit from parallel programming. The sudoku solver that was investigated was provided by Bryan Smith (https://github.com/bryanesmith/Sudoku-solver).

This solver goes through the given puzzle guessing numbers for any space that is not already filled and then checking to verify it doesn't break any of the sudoku rules such as horizontal, vertical and in box collisions.

As is, this solver is quick to solve a 9x9 sudoku puzzle. The time taken to solve a sudoku puzzle is under a second as seen in our time results:

real 0m0.097s user 0m0.072s sys 0m0.016s

However if this we were to scale this solver up to solve nxn sized puzzles we would be able to see a use for optimizing this code.

Results from profiling:

Flat profile: Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls ms/call ms/call name 60.00 0.03 0.03 468316 0.00 0.00 SudokuPuzzle::verifyValue(int, int) 20.00 0.04 0.01 468316 0.00 0.00 SudokuPuzzle::printTracerTryingValue(int, int) 20.00 0.05 0.01 1 10.00 50.00 SudokuPuzzle::solve(int, int) 0.00 0.05 0.00 445799 0.00 0.00 SudokuPuzzle::setBoardValue(int, int, int) 0.00 0.05 0.00 2 0.00 0.00 SudokuPuzzle::print() 0.00 0.05 0.00 1 0.00 0.00 _GLOBAL__sub_I__ZN12SudokuPuzzleC2Ev 0.00 0.05 0.00 1 0.00 0.00 _GLOBAL__sub_I_main 0.00 0.05 0.00 1 0.00 0.00 __static_initialization_and_destruction_0(int, int) 0.00 0.05 0.00 1 0.00 0.00 __static_initialization_and_destruction_0(int, int) 0.00 0.05 0.00 1 0.00 50.00 SudokuPuzzle::solve() 0.00 0.05 0.00 1 0.00 0.00 SudokuPuzzle::SudokuPuzzle()

The profiling of this solver shows that the verifyValue function is taking up most of the execution time at 60% which is expected as this function checks all for all of the collisions for the guessed values. This would be the function we would want to optimize however this may not be the easiest of tasks as it seems it has some data dependencies.

After modifying the code to handle nxn size sudoku puzzles we can get a better look at the execution time of the algorithm.

These are the time results for solving a 16x16 puzzle:

real 0m7.631s user 0m7.592s sys 0m0.012s

And Profiling results:

Flat profile: Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls s/call s/call name 90.35 5.38 5.38 35639645 0.00 0.00 SudokuPuzzle::verifyValue(int, int) 5.79 5.73 0.34 1 0.34 5.95 SudokuPuzzle::solve(int, int) 2.35 5.87 0.14 34877040 0.00 0.00 SudokuPuzzle::setBoardValue(int, int, int) 1.34 5.95 0.08 35639645 0.00 0.00 SudokuPuzzle::printTracerTryingValue(int, int) 0.17 5.96 0.01 1 0.01 5.96 SudokuPuzzle::solve() 0.00 5.96 0.00 2 0.00 0.00 SudokuPuzzle::print() 0.00 5.96 0.00 1 0.00 0.00 _GLOBAL__sub_I__ZN12SudokuPuzzleC2Ev 0.00 5.96 0.00 1 0.00 0.00 _GLOBAL__sub_I_main 0.00 5.96 0.00 1 0.00 0.00 __static_initialization_and_destruction_0(int, int) 0.00 5.96 0.00 1 0.00 0.00 __static_initialization_and_destruction_0(int, int) 0.00 5.96 0.00 1 0.00 0.00 SudokuPuzzle::CreateBoard() 0.00 5.96 0.00 1 0.00 0.00 SudokuPuzzle::SudokuPuzzle(int) 0.00 5.96 0.00 1 0.00 0.00 SudokuPuzzle::~SudokuPuzzle()

It is clear that the verifyValue function takes up majority of the execution time being called 356,39,645 times and taking 5.38 seconds of the total execution time.

Project Selection

We have decided to pursue the image processing project as it has more potential for a significant speedup after using the parallel processing. We will focus our time on speeding up the functions used to negate, reflect and enlarge the image.

Assignment 2

Parallel Image Processor

For the second part of this assignment we proceeded to convert the C implementation of the image processor to a CUDA implementation that would use parallel programming for a performance increase. The first hurdle we encountered when beginning this process was that the image used in our image processor was stored as a 2D array which would create problems when we try to use it in a CUDA implementation. Matt took the responsibility of re writing the code to use a 1D array that stored the pixels in column major order.

Once we had the 1D array to store the image, we were able to start writing kernels for the compute-intensive functions of our processor. We created kernels for reflectImage(), enlargeImage() and negateImage() and began to test for any sort of performance increase.

The optimized version of the source code with the kernels we created can be found here.

For reference of what the image processor we are using actually does, we have attached the sample input and output image we have been using. This is the results for running the image processor without specifying an enlarge scale which will perform a negation and reflection vertically.

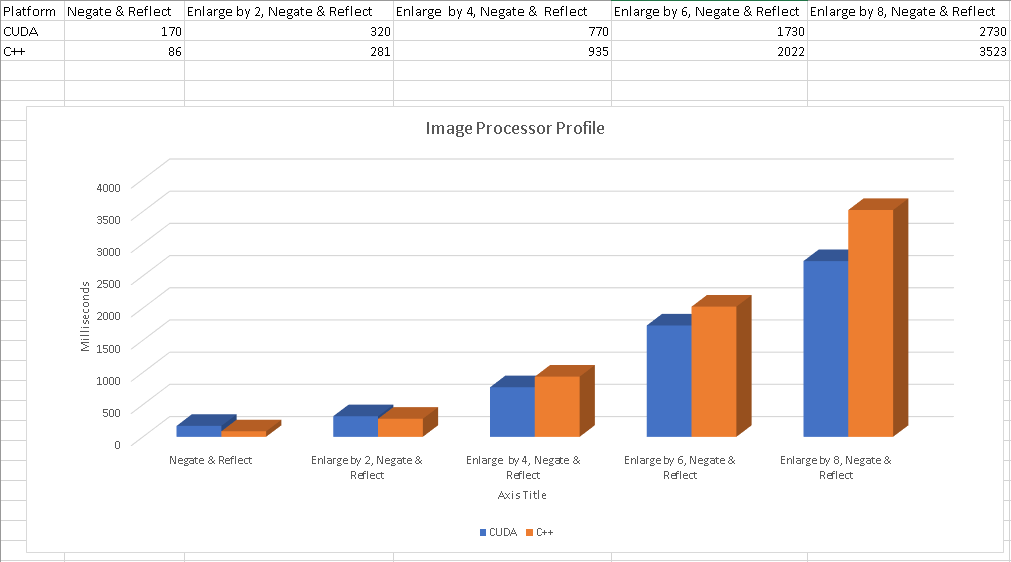

Due to device limitations we were only able to profile our program up to an enlarged scale of 8 but our results still showed a performance increase as the enlarge scale got to 4.

As seen from the results above, our parallel implementation of the image processor shows some significant performance increase as the enlarge scale gets larger. We also noticed that the c++ implementation of the processor ran faster when there was no enlarge and when the enlarge was only 2. We believe that this could be due to the costly operation of cudaMemcpy() since the operations we are doing to the pixels are not that intensive, the time it takes for the cudaMEmcpy() could easily start to exceed the time of the transformations.

To continue our optimizations, we think that we could get more of a performance increase by minimizing the amount of data copied to and from the GPU. We are going to look into storing the image on the GPU until all transformations have been done and then copying the data back from the GPU.

Assignment 3

For our optimizations to our parallel code we stuck to our plan and focused on reducing the amount of cudaMemcpy() used throughout each of the functions.

void Image::negateImage(Image& oldImage)

/*negates image*/

{

Image tempImage(N, M, Q);

int* d_temp = nullptr;

int* d_img = nullptr;

int size = N * M;

int nblocks = size / ntpb;

cudaMalloc((void**)&d_temp, size * sizeof(int));

cudaMalloc((void**)&d_img, size * sizeof(int));

cudaMemcpy(d_temp, tempImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice);

cudaMemcpy(d_img, pixelVal, size * sizeof(int), cudaMemcpyHostToDevice);

negate << <nblocks, ntpb >> >(d_temp, d_img, size);

cudaError_t err = cudaGetLastError();

if (err != cudaSuccess)

printf("Error: %s\n", cudaGetErrorString(err));

cudaDeviceSynchronize();

cudaMemcpy(tempImage.pixelVal, d_temp, size * sizeof(int), cudaMemcpyDeviceToHost);

cudaFree(d_temp);

cudaFree(d_img);

oldImage = tempImage;

}

void Image::reflectImage(bool flag, Image& oldImage)

/*Reflects the Image based on users input*/

{

int rows = oldImage.N;

int cols = oldImage.M;

Image tempImage(oldImage);

int* d_temp = nullptr;

int* d_img = nullptr;

int size = rows * cols;

int nblocks = size / ntpb;

cudaMalloc((void**)&d_temp, size * sizeof(int));

cudaMalloc((void**)&d_img, size * sizeof(int));

cudaMemcpy(d_temp, tempImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice);

cudaMemcpy(d_img, oldImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice);

if (flag) {

horizontalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols);

}

else {

verticalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols);

}

cudaDeviceSynchronize();

cudaMemcpy(tempImage.pixelVal, d_temp, size * sizeof(int), cudaMemcpyDeviceToHost);

cudaFree(d_temp);

cudaFree(d_img);

oldImage = tempImage;

}

void Image::enlargeImage(int value, Image& oldImage)

/*enlarges Image and stores it in tempImage, resizes oldImage and stores the

larger image in oldImage*/

{

int rows, cols, gray;

int pixel;

int enlargeRow, enlargeCol;

rows = oldImage.N * value;

cols = oldImage.M * value;

gray = oldImage.Q;

Image tempImage(rows, cols, gray);

int r = oldImage.N;

int c = oldImage.M;

int* d_temp = nullptr;

int* d_img = nullptr;

int size = rows * cols;

int nblocks = size / ntpb;

cudaMalloc((void**)&d_temp, size * sizeof(int));

cudaMalloc((void**)&d_img, size * sizeof(int));

cudaMemcpy(d_temp, tempImage.pixelVal, size * sizeof(int), cudaMemcpyHostToDevice);

cudaMemcpy(d_img, oldImage.pixelVal, (r * c) * sizeof(int), cudaMemcpyHostToDevice);

enlarge << <nblocks, ntpb >> >(d_temp, d_img, size, value, c, cols);

cudaDeviceSynchronize();

//set the image's data

cudaMemcpy(tempImage.pixelVal, d_temp, size * sizeof(int), cudaMemcpyDeviceToHost);

//free device mem

cudaFree(d_temp);

cudaFree(d_img);

oldImage = tempImage;

}

As seen above, for all of the functions we converted to parallel, there is a lot of copying to and from the device. The use of a temp image makes this task even more intensive as we are basically copying the image to and from the device twice. In order to remove these cudaMemcpy() calls from each of the functions, we decided to simply create the image we are going to process directly onto the device allowing our parallel functions to operate on the pixels of the image without having to copy both the image and and a temporary image to and from the device. With this solution we were able to effectively reduce our 3 cudaMemcpy() calls per function from the parallel code into 0 cudaMemcpy() calls per function in our optimized code.

To implement this solution, we moved the intensive cudaMemcpy() calls to just be called when the image is created as seen below.

Image::Image(int numRows, int numCols, int grayLevels)

/* Creates an Image of numRows x numCols and creates the arrays for it*/

{

N = numRows;

M = numCols;

Q = grayLevels;

cudaMalloc((void**)&pixelVal, N * M * sizeof(int));

cudaMemset(pixelVal, 0, N * M * sizeof(int));

}

Image::Image(const Image& oldImage)

/*copies oldImage into new Image object*/

{

N = oldImage.N;

M = oldImage.M;

Q = oldImage.Q;

int sz = M * N * sizeof(int);

cudaMalloc((void**)&pixelVal, sz);

cudaMemcpy(pixelVal, oldImage.pixelVal, sz, cudaMemcpyDeviceToDevice);

}

void Image::operator=(const Image& oldImage)

/*copies oldImage into whatever you = it to*/

{

N = oldImage.N;

M = oldImage.M;

Q = oldImage.Q;

if (pixelVal) {

cudaFree(pixelVal);

}

int sz = M * N * sizeof(int);

cudaMalloc((void**)&pixelVal, sz);

cudaMemcpy(pixelVal, oldImage.pixelVal, sz, cudaMemcpyDeviceToDevice);

}

Since we decided to store the image directly onto the device, we also had to add some cudaMemcpy() calls to the functions that handled retrieving and delivering the image to the host. This process was originally handled in the getPixelVal() and setPixelVal() functions below.

int Image::getPixelVal(int row, int col)

/*returns the gray value of a specific pixel*/

{

return pixelVal[row * M + col];

}

void Image::setPixelVal(int row, int col, int value)

/*sets the gray value of a specific pixel*/

{

pixelVal[row * M + col] = value;

}

But now we need to transfer the data to and from the device which is where the image is stored. We created 2 new functions to handle this called getPixels() and setPixels().

void Image::getPixels(int row, int col, int sz, int* out) {

cudaMemcpy(out, pixelVal + (row * M + col), sz * sizeof(int), cudaMemcpyDeviceToHost);

}

void Image::setPixels(int row, int col, int sz, int* in) {

cudaMemcpy(pixelVal + (row * M + col), in, sz * sizeof(int), cudaMemcpyHostToDevice);

}

After making these changes we were able to greatly simplify our parallel functions as shown below.

void Image::negateImage(Image& oldImage)

/*negates image*/

{

Image tempImage(N, M, Q);

int* d_temp = tempImage.pixelVal;

int* d_img = pixelVal;

int size = N * M;

int nblocks = size / ntpb;

negate << <nblocks, ntpb >> >(d_temp, d_img, size);

cudaError_t err = cudaGetLastError();

if (err != cudaSuccess)

printf("Error: %s\n", cudaGetErrorString(err));

cudaDeviceSynchronize();

oldImage = tempImage;

}

void Image::reflectImage(bool flag, Image& oldImage)

/*Reflects the Image based on users input*/

{

int rows = oldImage.N;

int cols = oldImage.M;

Image tempImage(oldImage);

int* d_temp = tempImage.pixelVal;

int* d_img = oldImage.pixelVal;

int size = rows * cols;

int nblocks = size / ntpb;

if (flag) {

horizontalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols);

}

else {

verticalReflect << <nblocks, ntpb >> >(d_temp, d_img, size, rows, cols);

}

cudaDeviceSynchronize();

oldImage = tempImage;

}

void Image::enlargeImage(int value, Image& oldImage)

/*enlarges Image and stores it in tempImage, resizes oldImage and stores the

larger image in oldImage*/

{

int rows, cols, gray;

int pixel;

int enlargeRow, enlargeCol;

rows = oldImage.N * value;

cols = oldImage.M * value;

gray = oldImage.Q;

Image tempImage(rows, cols, gray);

int r = oldImage.N;

int c = oldImage.M;

int* d_temp = tempImage.pixelVal;

int* d_img = oldImage.pixelVal;

int size = rows * cols;

int nblocks = size / ntpb;

enlarge << <nblocks, ntpb >> >(d_temp, d_img, size, value, c, cols);

cudaDeviceSynchronize();

oldImage = tempImage;

}

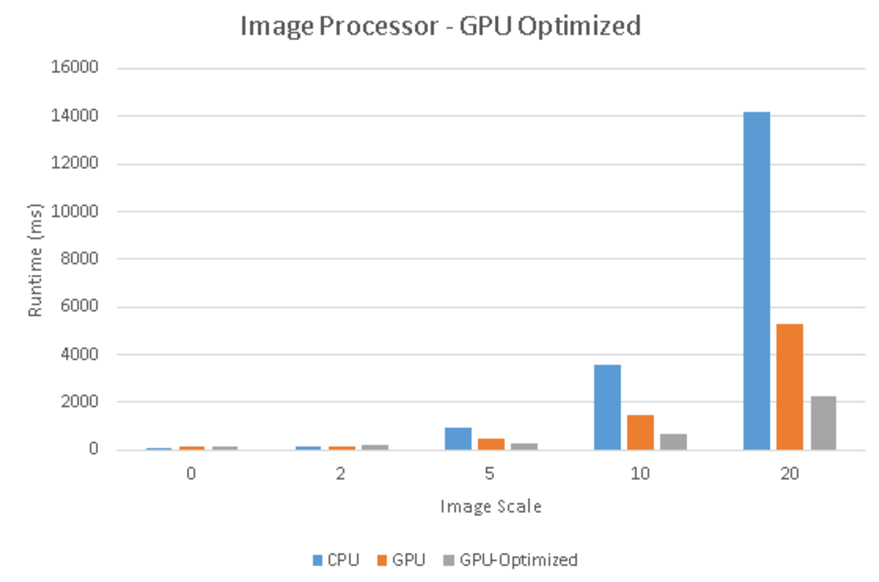

Finally we profiled everything again to ensure that our optimizations would show a performance increase. We noticed a large decrease in the execution time after the optimizations and were satisfied with the outcome.

The source code for our final assignment can be viewed on GitHub