Midnight Tiger


Latest revision as of 09:26, 5 April 2016


Chapel

Team Information

Introduction to Chapel

Chapel (Cascade High-Productivity Language) is an alternative parallel programming language that focuses on the productivity of high-end computing systems.

The concept of "productivity" is more nuanced than we might think, because different people who write parallel programs want different things.

Who is interested in parallel programming, and what do they want?

- Students: I want something similar to the languages I learned in school, such as C++, C, and Java, and I want parallelizing my code to be easy.

- HPC programmers: I want full control, which gives me more room to improve performance.

- Computational scientists: I want to implement my computations easily without needing much knowledge of the hardware architecture.

Chapel: Chapel aims to make parallel computation easy to write, with syntax similar to familiar languages, while still granting users full control.

Advantages of Chapel

From one of Cray's articles (http://www.cray.com/blog/chapel-productive-parallel-programming/):

- General Parallelism: Chapel has the goal of supporting any parallel algorithm you can conceive of on any parallel hardware you want to target. In particular, you should never hit a point where you think “Well, that was fun while it lasted, but now that I want to do x, I’d better go back to MPI.”

- Separation of Parallelism and Locality: Chapel supports distinct concepts for describing parallelism (“These things should run concurrently”) from locality (“This should be placed here; that should be placed over there”). This is in sharp contrast to conventional approaches that either conflate the two concepts or ignore locality altogether.

- Multiresolution Design: Chapel is designed to support programming at higher or lower levels, as required by the programmer. Moreover, higher-level features—like data distributions or parallel loop schedules—may be specified by advanced programmers within the language.

- Productivity Features: In addition to all of its features designed for supercomputers, Chapel also includes a number of sequential language features designed for productive programming. Examples include type inference, iterator functions, object-oriented programming, and a rich set of array types. The result combines productivity features as in Python™, Matlab®, or Java™ software with optimization opportunities as in Fortran or C.
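As a small illustration of two of these points, the sketch below uses type inference and an iterator function (productivity features), and then the `on` clause, which says where code runs independently of the `coforall` that makes it parallel. It is a minimal example written for this page, not taken from the Cray article; the iterator name `evens` is hypothetical, and the sketch assumes a recent Chapel release.

```chapel
// An iterator function: it yields values one at a time to the loop that calls it.
iter evens(n: int) {
  for i in 1..n do
    if i % 2 == 0 then yield i;
}

// Type inference: 'total' is inferred to be an int from its initializer.
var total = 0;
for e in evens(10) do
  total += e;
writeln("Sum of even numbers up to 10 = ", total);

// Parallelism and locality are expressed separately:
// 'coforall' creates one task per locale, and 'on' places each task on that locale.
coforall loc in Locales do
  on loc do
    writeln("Hello from locale ", here.id, " of ", numLocales);
```

Run as an ordinary single-locale program, it prints the sum (30) followed by a single greeting from locale 0.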

Installation Process

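- Download chapel-1.12.0.tar.gz from the Chapel homepage (http://chapel.cray.com/install.html).

- Extract the archive on the command line:

>tar xzf chapel-1.12.0.tar.gz

- Go to the extracted chapel folder (the folder that $CHPL_HOME will point to once the environment is set up):

>cd chapel-1.12.0

- Build the compiler by running gmake, or make if your make is GNU make:

>gmake

or

>make

- Set up the environment variables, such as CHPL_HOME, as described at http://chapel.cray.com/docs/1.12/usingchapel/chplenv.html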

How to compile sample code

- Compile a program:

>chpl -o executable_name file_name.chpl

- Run it:

>./executable_name

- Run it with a new value for a config const variable:

>./executable_name --variable_name=value

- Chapel ships with example programs in the examples folder. Compile one of them to test your installation:

>cd $CHPL_HOME/examples
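A minimal program for trying these commands might look like the following; the file name `hello.chpl` and the config constant `name` are hypothetical. Because `name` is declared as a `config const`, its default value can be replaced at launch time with `--name=value`.

```chapel
// hello.chpl: 'name' is a config const, so it can be set on the command line.
config const name = "world";

writeln("Hello, ", name, "!");
```

After `chpl -o hello hello.chpl`, running `./hello` prints `Hello, world!`, and `./hello --name=Chapel` prints `Hello, Chapel!`.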

How to parallelize your code using Chapel

Task vs Thread

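- Task: A unit of computation that can run in parallel with other tasks.

- Thread: A system-level execution resource (such as an OS thread running on a core) onto which tasks are mapped.

The constructs below create tasks in different ways. With the parallel loops (forall and coforall), the iterations of a loop can execute in parallel.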

forall

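- forall: A data-parallel loop. Instead of creating one task per iteration, Chapel creates a number of tasks suited to the available threads (typically one per core) and divides the iterations among them. It is recommended when the number of iterations is larger than the number of threads. Because the implementation chooses how many tasks to use, a forall loop may run with fewer tasks, or even serially, when not enough threads are available.

- Sample Parallel Code using forall

```chapel
config const n = 10;

// Iterations are divided among tasks, so the lines may print in any order.
forall i in 1..n do
  writeln("Iteration #", i, " is executed.");
```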

- Sample Parallel Code Output using forall
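The output order of the sample above is not predictable. A common alternative, sketched below under the assumption that each iteration only needs to produce its own result, is to store the results in an array and combine them with a reduction after the loop. The array name `squares` is illustrative, and the explicit `with (ref squares)` task intent assumes a recent Chapel release.

```chapel
config const n = 10;

// Each iteration writes only its own element, so iterations never
// touch the same memory location. The explicit 'ref' intent states
// that the loop modifies the outer array.
var squares: [1..n] int;
forall i in 1..n with (ref squares) do
  squares[i] = i * i;

// The forall loop has completed here, so this prints in index order.
writeln(squares);

// A + reduction combines the elements in parallel.
const total = + reduce squares;
writeln("Sum of squares = ", total);
```

With the default `n = 10`, this prints `1 4 9 16 25 36 49 64 81 100` and then `Sum of squares = 385`, in the same order on every run.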

coforall

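- coforall: Creates a separate task for every iteration, and the loop finishes only after all of those tasks have completed. It is a good fit when each iteration performs a large computation and the number of iterations equals the number of logical cores (threads). Unlike forall, parallelism is mandatory here: every iteration is guaranteed to run as its own concurrent task.

- Sample Parallel Code using coforall

```chapel
config const n = here.numCores;  // one task per core

// One task per iteration; the lines may print in any order.
coforall i in 1..n do
  writeln("Task #", i, " of ", n);
```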

- Sample Parallel Code Output using coforall
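The coforall pattern used later in the pi program, a fixed number of tasks that each produce a partial result, can be sketched in isolation as follows. The task count is a hypothetical config constant `numTasks` rather than `here.numCores`, so the sketch behaves the same on any machine; the `with (ref partial)` intent assumes a recent Chapel release.

```chapel
config const n = 1000,
             numTasks = 4;

var partial: [0..#numTasks] int;  // one slot per task

// One task per iteration: task 'tid' sums tid+1, tid+1+numTasks, ...
coforall tid in 0..#numTasks with (ref partial) {
  var sum = 0;                    // private to this task
  for i in (tid+1)..n by numTasks do
    sum += i;
  partial[tid] = sum;             // each task writes a different element
}

// The coforall has waited for all of its tasks before this point.
writeln("Partial sums: ", partial);
writeln("Total = ", + reduce partial);
```

With the defaults, the partial sums are `124750 125000 125250 125500` and the total is 500500, which equals n(n+1)/2.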

begin

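- begin: Each begin statement creates a new task that runs its statement asynchronously while the original task moves on to the next statement. The order in which these tasks run, and therefore the order of the output lines, is not guaranteed.

- Sample Parallel Code using begin

```chapel
// Four independent tasks; their output order may differ between runs.
begin writeln("There is an apple");
begin writeln("There is a banana");
begin writeln("There is an orange");
begin writeln("There is a melon");
```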

- Sample Parallel Code Output using begin
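When later code must wait for tasks created with begin, one option is to wrap them in a sync statement, which blocks until every task started inside it has finished. The sketch below also counts completed tasks with an atomic variable; the name `finished` is illustrative.

```chapel
var finished: atomic int;  // counts the tasks that have completed

// A sync statement waits for every task created inside its block.
sync {
  begin { writeln("There is an apple");  finished.add(1); }
  begin { writeln("There is a banana");  finished.add(1); }
  begin { writeln("There is an orange"); finished.add(1); }
  begin { writeln("There is a melon");   finished.add(1); }
}

// Reached only after all four tasks are done; the fruit lines
// above may have appeared in any order.
writeln("Finished tasks: ", finished.read());
```

The last line always reports 4, regardless of how the four fruit lines were ordered.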

cobegin

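- cobegin: Each statement in the cobegin block runs as its own task, and execution continues past the block only after all of those tasks have finished. In the sample below, the last line is therefore always printed after the three item lines, which can appear in any order.

- Sample Parallel Code using cobegin

```chapel
cobegin {
  writeln("Item 1 is loaded");
  writeln("Item 2 is loaded");
  writeln("Item 3 is loaded");
}
// Runs only after all three tasks in the cobegin block have finished.
writeln("All the items are loaded");
```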

- Sample Parallel Code Output using cobegin
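cobegin is most useful when its statements compute different results that are needed afterwards. In the sketch below, two independent reductions over the same array run as two tasks; the names `total` and `biggest` are illustrative, and the `with (ref total, ref biggest)` task intents, which let the tasks assign to the outer variables, assume a recent Chapel release.

```chapel
config const n = 100;

const A = [i in 1..n] i;  // the values 1, 2, ..., n
var total, biggest: int;

// Each statement in the block runs as its own task. The 'ref' task
// intents allow the tasks to assign to the outer variables.
cobegin with (ref total, ref biggest) {
  total = + reduce A;
  biggest = max reduce A;
}

// Reached only after both tasks have finished.
writeln("Sum = ", total, ", max = ", biggest);
```

With the default `n = 100`, this prints `Sum = 5050, max = 100`.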

For more details, see http://faculty.knox.edu/dbunde/teaching/chapel

Demonstration of Sample Code

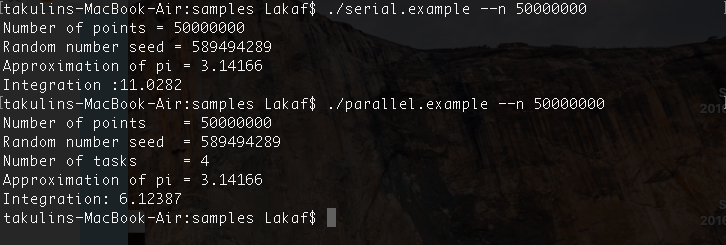

While learning Chapel, I found serial and parallel versions of a program that estimates pi. I tweaked them slightly to fix errors in the code and added a few lines to measure performance. Both were tested on a dual-core computer. The original code is available at http://chapel.cray.com/tutorials/SC10/

Serial Pi Program

```chapel
use Random;
use Time;

config const n = 100000,       // number of random points to try
             seed = 589494289; // seed for random number generator
const ts = getCurrentTime();   // start time, in seconds

writeln("Number of points = ", n);
writeln("Random number seed = ", seed);

var rs = new RandomStream(seed, parSafe=false);
var count = 0;

// Count the random points (x, y) in the unit square that fall
// inside the quarter circle of radius 1.
for i in 1..n do
  if (rs.getNext()**2 + rs.getNext()**2) <= 1.0 then
    count += 1;

const te = getCurrentTime();   // end time, in seconds
writeln("Approximation of pi = ", count * 4.0 / n);
writeln("Elapsed time (s): ", te - ts);
delete rs;
```
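The estimate printed by the serial program (and by the parallel version that follows) comes from an area argument, assuming `getNext()` returns numbers uniformly distributed between 0 and 1. Each point (x, y) is then uniform in the unit square, and the points with x² + y² ≤ 1 form a quarter of the unit disc. Writing N for `n` and C for `count`:

```latex
\Pr\left[x^2 + y^2 \le 1\right]
  = \frac{\text{area of the quarter disc}}{\text{area of the unit square}}
  = \frac{\pi / 4}{1}
  \approx \frac{C}{N}
  \quad\Longrightarrow\quad
  \pi \approx \frac{4C}{N}
```

This is exactly `count * 4.0 / n` in the code. As with any Monte Carlo estimate, the error shrinks only in proportion to 1/√N, so each extra digit of accuracy costs about 100 times more points.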

Task Parallelized Pi Program

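```chapel
use Time;
use Random;

config const n = 100000,            // number of random points to try
             tasks = here.numCores, // number of tasks to create
             seed = 589494289;      // seed for random number generator
const ts = getCurrentTime();        // start time, in seconds

writeln("Number of points = ", n);
writeln("Random number seed = ", seed);
writeln("Number of tasks = ", tasks);

var counts: [0..#tasks] int;        // hit count for each task

coforall tid in 0..#tasks {
  // Every task uses the same seed and skips ahead to its own block of
  // the sequence, so that together the tasks are meant to consume the
  // same random numbers as the serial version. Each point uses two
  // numbers (x and y).
  var rs = new RandomStream(seed, parSafe=false);
  const nPerTask = n/tasks,
        extras = n%tasks;           // the first 'extras' tasks take one extra point
  rs.skipToNth(2*(tid*nPerTask + (if tid < extras then tid else extras)) + 1);

  var count = 0;
  for i in 1..nPerTask + (tid < extras) do
    count += (rs.getNext()**2 + rs.getNext()**2) <= 1.0;  // adds 1 for a hit

  counts[tid] = count;
  delete rs;
}

var count = + reduce counts;        // combine the per-task hit counts
const te = getCurrentTime();        // end time, in seconds
writeln("Approximation of pi = ", count * 4.0 / n);
writeln("Elapsed time (s): ", te - ts);
```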
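The least obvious part of the task-parallel version is how the n points are divided: each task gets n/tasks points, and the first n%tasks tasks get one extra. The standalone sketch below uses only serial code and small hypothetical values of `n` and `tasks`; it prints the block of point indices each task would handle and checks that the blocks cover all n points exactly once. Since each point uses two random numbers, the point with index p (counting from 0) starts at random number 2p+1, which is the argument passed to `skipToNth` above, assuming that call counts from 1 as the program does.

```chapel
config const n = 10,
             tasks = 4;

const nPerTask = n / tasks,   // base number of points per task
      extras = n % tasks;     // leftover points, one each for the first tasks

var covered = 0;              // running total of assigned points
for tid in 0..#tasks {
  // Same arithmetic as the pi program, with 0-based point indices.
  const myCount = nPerTask + (if tid < extras then 1 else 0);
  const myStart = tid*nPerTask + (if tid < extras then tid else extras);
  writeln("task ", tid, ": points ", myStart, "..", myStart + myCount - 1);
  // Each task's block must begin exactly where the previous one ended.
  assert(myStart == covered);
  covered += myCount;
}
writeln("points covered: ", covered);
```

With the defaults, tasks 0 and 1 get points 0..2 and 3..5, tasks 2 and 3 get 6..7 and 8..9, and all 10 points are covered.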

Performance

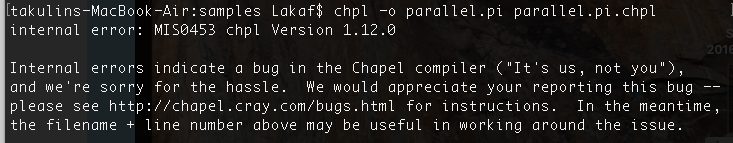

Work in progress

While developing my own program, I ran into the following issue:

Useful Links

Here is a list of links I found useful while learning the basics of Chapel:

https://learnxinyminutes.com/docs/chapel/

https://www.cs.colostate.edu/wiki/Chapel_language

http://www.cray.com/blog/chapel-productive-parallel-programming/

http://www.cray.com/blog/six-ways-to-say-hello-in-chapel-part-1/

http://chapel.cray.com/publications/cug06.pdf

https://www.youtube.com/watch?v=lo3a_b34zX0

http://www.inf.ed.ac.uk/teaching/courses/pa/Notes/lecture02-types.pdf

http://chapel.cray.com/presentations/ChapelForCopenhagen-presented.pdf

http://chapel.cray.com/tutorials/

http://faculty.knox.edu/dbunde/teaching/chapel/

http://chapel.cray.com/tutorials/SC10/MonteCarloPi.pdf

http://chapel.cray.com/tutorials/NOTUR09/NOTUR-5-DATAPAR.pdf