Difference between revisions of "GPU610/Team AGC"

(→xor_me) |

(→Sample Output) |

||

| (50 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | {{GPU610/DPS915 Index | | + | {{GPU610/DPS915 Index | 20143}} |

= Team AGC = | = Team AGC = | ||

== Team Members == | == Team Members == | ||

| − | # [mailto:acooc@myseneca?subject=gpu610 Andy Cooc], Some responsibility | + | <s> |

| − | # [mailto:gcastrolondono@myseneca.ca?subject=gpu610 Gabriel Castro], Some other responsibility | + | # [mailto:acooc@myseneca.ca?subject=gpu610 Andy Cooc], Some responsibility |

| − | # [mailto:cmarkieta@myseneca.ca?subject=gpu610 Christopher Markieta], | + | # [mailto:gcastrolondono@myseneca.ca?subject=gpu610 Gabriel Castro], Some other responsibility</s> |

| − | [mailto:acooc@myseneca,gcastrolondono@myseneca.ca,cmarkieta@myseneca.ca?subject=gpu610 Email All] | + | # [mailto:cmarkieta@myseneca.ca?subject=gpu610 Christopher Markieta], All responsibility |

| + | [mailto:acooc@myseneca.ca,gcastrolondono@myseneca.ca,cmarkieta@myseneca.ca?subject=gpu610 Email All] | ||

== Progress == | == Progress == | ||

| Line 14: | Line 15: | ||

==== Gabriel's Findings ==== | ==== Gabriel's Findings ==== | ||

| − | ... | + | |

| + | |||

| + | |||

| + | == Pillow Library (Python) == | ||

| + | |||

| + | The Pillow library is an image manipulation library for python. | ||

| + | It has many common functions like re-sizing, cropping, rotating, blur, sharpen etc. | ||

| + | It's available on github at https://github.com/python-pillow/Pillow | ||

| + | |||

| + | I used 4 filters for testing | ||

| + | |||

| + | {| | ||

| + | ! filter!! run time | ||

| + | |- | ||

| + | | Blur || 1.305s | ||

| + | |- | ||

| + | | Gaussian Blur || 4.492 | ||

| + | |- | ||

| + | | Auto Contrast || 0.092 | ||

| + | |- | ||

| + | | Grey Scale || 0.046 | ||

| + | |} | ||

| + | |||

| + | See the code [https://gist.github.com/GabrielCastro/585cc5cbfd98b12b4ed7 on github]. | ||

| + | ==== Gaussian Blur ==== | ||

| + | |||

| + | A gaussian blur is a filter that can be applied to an image to make it blurry, in its simplest form it goes through every pixel in an image and averages it with its surrounding pixels. | ||

| + | In the Pillow library it is implemented in a [https://github.com/python-pillow/Pillow/blob/master/libImaging/UnsharpMask.c#L58 C module]. This helps with processing time as C is hundreds of times faster than python. A quick look at the source shows that algorithm contains a nested for loop that's O(x * y) and then one that's O(x * y * r), that means that time grows n^2 based on the size of the image and n^3 based on the size of the blur radius. | ||

| + | |||

| + | === Conclusion === | ||

| + | |||

| + | With many nested operations, this a really good candidate for GPU paralization. | ||

==== Christopher's Findings ==== | ==== Christopher's Findings ==== | ||

| Line 22: | Line 54: | ||

xor_me is an open source, brute force password cracker for doc and xls files. | xor_me is an open source, brute force password cracker for doc and xls files. | ||

| + | xor_me was written by Benoît Sibaud and is copyrighted under the terms of the GNU Lesser General Public License version 3 only, as published by the Free Software Foundation. Here is a link to my fork of this repository: | ||

| + | |||

| + | https://github.com/Markieta/xor_me | ||

| + | Here is the profile of a short run of brute_force: | ||

<pre> | <pre> | ||

| Line 34: | Line 70: | ||

0.00 10.60 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z9lclGetKeyPKhl | 0.00 10.60 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z9lclGetKeyPKhl | ||

</pre> | </pre> | ||

| + | |||

| + | As you can see, lclGetKey is the function where I will spend most of my time trying to parallelize code. | ||

| + | |||

| + | This application is quite CPU intensive, but hasn't been optimized for multiple threads. It is using 100% of 1 thread from the 8 available on my CPU. See the list of processes below for an example. | ||

<pre> | <pre> | ||

| Line 57: | Line 97: | ||

5230 markieta 20 0 24952 1776 1176 R 0.3 0.0 0:00.14 top | 5230 markieta 20 0 24952 1776 1176 R 0.3 0.0 0:00.14 top | ||

</pre> | </pre> | ||

| + | |||

| + | Here I ran the brute_force function again to see how long it would really take. I did not run this command for too long, hence the the termination <code>^C</code> after about 2 minutes. | ||

| + | |||

| + | <pre> | ||

| + | markieta@Y560:~/Documents/xor_me$ time ./brute_force 0x499a 0xcc61 | ||

| + | Key: 499a | ||

| + | Hash: cc61 | ||

| + | Password: '1950' | ||

| + | Password: 'w2#1' | ||

| + | ^CState: 20:22:48:46:7e:48:62:c3c0:00000184023807d008a011c010800000:6c62477d45472100 | ||

| + | |||

| + | real 2m16.888s | ||

| + | user 2m16.082s | ||

| + | sys 0m0.640s | ||

| + | |||

| + | </pre> | ||

| + | |||

| + | I am hoping to see a lot of improvement after my research and implementation with CUDA and OpenCL. | ||

| + | |||

| + | Team AGC has decided to collaborate on this project for assignment 2 onwards. | ||

=== Assignment 2 === | === Assignment 2 === | ||

| + | |||

| + | ==== Christopher's Findings ==== | ||

| + | ===== xor_me ===== | ||

| + | |||

| + | <span style="color:red">This project has been dropped due to the complexity of the code and difficulty with respect to this assignment.</span> | ||

| + | |||

| + | Since the majority of CPU cycles is spent in the most inner for-loop, the goal is to parallelize this code only. It is possible to parallelize all 8 for-loops (Maximum of 8 character password) because they are performing very similar tasks. However, due to the time constraint and purpose of this assignment, I will only focus on this one. See below for the following code to be optimized for our GPU: | ||

| + | |||

| + | |||

| + | <pre> | ||

| + | for (o=32; o < 128; ++o) { | ||

| + | skipInits: | ||

| + | unsigned short x = nHash ^ hash; | ||

| + | lclRotateRight(x, 1); | ||

| + | if (32 <= x && x < 127) { | ||

| + | t[0] = static_cast<unsigned char>(x); | ||

| + | if (nKey == lclGetKey(t, 16)) { | ||

| + | std::cout << "Password: '" << t << "'" << std::endl; | ||

| + | } | ||

| + | } | ||

| + | hash ^= r[1]; | ||

| + | r[1] = t[1] = o; | ||

| + | lclRotateLeft(r[1], 2); | ||

| + | hash ^= r[1]; | ||

| + | if (o == 32) { | ||

| + | r[0] = '\0'; | ||

| + | hash = lclGetHash(t, r, 16); | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | My fork for this application can be found here: https://github.com/Markieta/xor_me | ||

| + | |||

| + | At first it seems impossible to do because of the data dependencies: hash, r[1], t[1], and o. But after doing some research, I have learned that the XOR operation is communicative and associative, meaning that we can simply calculate all of the dependencies in parallel first, then combine the results to achieve the same data. | ||

| + | |||

| + | Here is a non-formal proof to help make my point clear. The ability to achieve the same result vertically and horizontally will be the key to solving this task. | ||

| + | |||

| + | <pre> | ||

| + | Step 1: | ||

| + | |||

| + | 101 ^ 001 ^ 111 = 001 | ||

| + | ^ ^ ^ | ||

| + | 111 ^ 100 ^ 001 = 010 | ||

| + | ^ ^ ^ | ||

| + | 100 ^ 010 ^ 001 = 111 | ||

| + | |||

| + | || || || | ||

| + | |||

| + | 110 111 111 | ||

| + | |||

| + | Step 2: Operate with vertical and horizontal | ||

| + | |||

| + | 001 ^ 010 ^ 111 = 110 | ||

| + | 110 ^ 111 ^ 111 = 110 | ||

| + | </pre> | ||

| + | |||

| + | The first iteration of this loop executes a special condition which we must deal with before anything else: | ||

| + | |||

| + | <pre> | ||

| + | if (o == 32) { | ||

| + | r[0] = '\0'; | ||

| + | hash = lclGetHash(t, r, 16); | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Although r[1] and t[1] are equivalent for some time during the loop, we will keep them on separate arrays for simplicity (lclRotateLeft takes a reference to r at index 1): | ||

| + | |||

| + | <pre> | ||

| + | r[1] = t[1] = o; | ||

| + | lclRotateLeft(r[1], 2); | ||

| + | </pre> | ||

| + | |||

| + | After struggling for a few hours I realized that I should be using Thrust instead: | ||

| + | |||

| + | <pre> | ||

| + | // unsigned short* h_r = new unsigned short[LOOPSIZE * RT_SIZE]; | ||

| + | // unsigned char* h_t = new unsigned char[LOOPSIZE * RT_SIZE]; | ||

| + | // unsigned char* h_x = new unsigned char[LOOPSIZE]; | ||

| + | |||

| + | thrust::host_vector<unsigned short> h_r(LOOPSIZE * RT_SIZE); | ||

| + | thrust::host_vector<unsigned short> h_hash(LOOPSIZE); | ||

| + | thrust::host_vector<unsigned char> h_t(LOOPSIZE * RT_SIZE); | ||

| + | thrust::host_vector<unsigned char> h_x(LOOPSIZE); | ||

| + | |||

| + | // unsigned short* d_r; | ||

| + | // unsigned char* d_t; | ||

| + | // unsigned char* d_x; | ||

| + | |||

| + | // cudaMalloc((void**)&d_r, LOOPSIZE * RT_SIZE); | ||

| + | // cudaMalloc((void**)&d_t, LOOPSIZE * RT_SIZE); | ||

| + | // cudaMalloc((void**)&d_x, LOOPSIZE); | ||

| + | </pre> | ||

| + | |||

| + | The following transformation allows the computation of all XOR operations needed in this phase: | ||

| + | |||

| + | <pre> | ||

| + | thrust::device_vector<unsigned short> d_r = h_r; | ||

| + | thrust::device_vector<unsigned short> d_hash = h_hash; | ||

| + | thrust::device_vector<unsigned char> d_t = h_t; | ||

| + | thrust::device_vector<unsigned char> d_x = h_x; | ||

| + | thrust::device_vector<unsigned short> xor_hash(LOOPSIZE); // XOR results | ||

| + | |||

| + | // For XOR operations with r[1] | ||

| + | // thrust::device_ptr<unsigned short> pHash = &d_hash[0]; | ||

| + | // thrust::device_reference<unsigned short> rHash(pHash); | ||

| + | thrust::transform(d_hash.begin(), d_hash.end(), d_r.begin(), | ||

| + | xor_hash.begin(), thrust::bit_xor<int>()); | ||

| + | </pre> | ||

| + | |||

| + | Now to check each result with x and t[1] if the password has been found, otherwise combine the XOR results by XOR'ing them together for the next iteration to use. | ||

| + | |||

=== Assignment 3 === | === Assignment 3 === | ||

| + | ==== Christopher's Findings ==== | ||

| + | |||

| + | Due to the difficulty of Assignment 2, I have chosen a new project to parallelize. | ||

| + | |||

| + | ===== 1-D Wave Equation ===== | ||

| + | |||

| + | [[Image:wave2.gif]] | ||

| + | |||

| + | |||

| + | The first step was to create a private repository on Bitbucket to avoid any plagiarism issues with this course for next semester students, as well as provide code revision and protection in case my progress is lost or corrupt. | ||

| + | |||

| + | The next step is to convert the following C file into C++ code that will be compatible with CUDA: | ||

| + | |||

| + | [https://computing.llnl.gov/tutorials/mpi/samples/C/mpi_wave.c mpi_wave.c] | ||

| + | |||

| + | And include the following dependency in the directory: | ||

| + | |||

| + | [https://computing.llnl.gov/tutorials/mpi/samples/C/draw_wave.c draw_wave.c] | ||

| + | |||

| + | ====== System Requirements ====== | ||

| + | |||

| + | This project will be built and tested on <s>Windows 7 64-bit</s> Fedora 20 ([http://www.r-tutor.com/gpu-computing/cuda-installation/cuda6.5-fc20 tutorial], remember to [http://www.if-not-true-then-false.com/2011/fedora-16-nvidia-drivers-install-guide-disable-nouveau-driver/#troubleshooting blacklist nouveau in your grub config].) with an Intel Core i5-4670K Haswell CPU (overclocked to 4.9 GHz) and an Nvidia GTX 480 GPU (overclocked to 830/924/1660 MHz) manufactured by Zotac with 1.5 GB of VRAM. | ||

| + | |||

| + | mpi_wave will require the OpenMPI library to compile. | ||

| + | |||

| + | Here is the profiling of the original CPU application, with an increased maximum step to better the test comparison, and calculate the curve at the given step value. | ||

| + | |||

| + | <pre> | ||

| + | % cumulative self self total | ||

| + | time seconds seconds calls s/call s/call name | ||

| + | 100.04 9.62 9.62 1 9.62 9.62 update(int, int) | ||

| + | 0.00 9.62 0.00 2 0.00 0.00 MPI::Is_initialized() | ||

| + | 0.00 9.62 0.00 2 0.00 0.00 MPI::Comm::~Comm() | ||

| + | 0.00 9.62 0.00 2 0.00 0.00 MPI::Comm_Null::~Comm_Null() | ||

| + | 0.00 9.62 0.00 1 0.00 0.00 _GLOBAL__sub_I_RtoL | ||

| + | 0.00 9.62 0.00 1 0.00 0.00 init_master() | ||

| + | 0.00 9.62 0.00 1 0.00 0.00 output_master() | ||

| + | 0.00 9.62 0.00 1 0.00 0.00 __static_initialization_and_destruction_0(int, int) | ||

| + | 0.00 9.62 0.00 1 0.00 0.00 draw_wave(double*) | ||

| + | 0.00 9.62 0.00 1 0.00 0.00 init_line() | ||

| + | </pre> | ||

| + | |||

| + | As you can see, the majority of the CPU time is spent in the update function, which is where I will begin implementing my code. | ||

| + | |||

| + | The 1D Wave Equation is already optimized for multiple CPU threads using the standard MPI library, spreading the sections of the curve to be calculated in parallel with as many available CPU threads at a time. However, a lot of this code is better left as a serial application to be dealt with the CPU, as GPU streams will perform much slower. The CUDA cores will take advantage of the highly parallelizable code in the update function. I am hoping that the separation of CPU cores will not cause complications while they each attempt to use the device to run the kernel and access the GPU's memory, and that it will only optimize it further. | ||

| + | |||

| + | I have included calls to clock() to determine specifically where the most time is being spent in the update function: | ||

| + | |||

| + | <pre> | ||

| + | void update(int left, int right) { | ||

| + | clock_t start0, start1, start2, start3, start4, end1, end2, end3, end4, end0; | ||

| + | start0 = clock(); | ||

| + | double block1 = 0.0, block2 = 0.0, block3 = 0.0, block4 = 0.0; | ||

| + | int i, j; | ||

| + | double dtime, c, dx, tau, sqtau; | ||

| + | MPI_Status status; | ||

| + | |||

| + | dtime = 0.3; | ||

| + | c = 1.0; | ||

| + | dx = 1.0; | ||

| + | tau = (c * dtime / dx); | ||

| + | sqtau = tau * tau; | ||

| + | |||

| + | /* Update values for each point along string */ | ||

| + | for (i = 1; i <= nsteps; i++) { | ||

| + | start1 = clock(); | ||

| + | /* Exchange data with "left-hand" neighbor */ | ||

| + | if (first != 1) { | ||

| + | MPI_Send(&values[1], 1, MPI_DOUBLE, left, RtoL, MPI_COMM_WORLD); | ||

| + | MPI_Recv(&values[0], 1, MPI_DOUBLE, left, LtoR, MPI_COMM_WORLD, | ||

| + | &status); | ||

| + | } | ||

| + | end1 = clock(); | ||

| + | block1 += double(end1 - start1)/CLOCKS_PER_SEC; | ||

| + | start2 = clock(); | ||

| + | /* Exchange data with "right-hand" neighbor */ | ||

| + | if (first + npoints -1 != TPOINTS) { | ||

| + | MPI_Send(&values[npoints], 1, MPI_DOUBLE, right, LtoR, MPI_COMM_WORLD); | ||

| + | MPI_Recv(&values[npoints+1], 1, MPI_DOUBLE, right, RtoL, | ||

| + | MPI_COMM_WORLD, &status); | ||

| + | } | ||

| + | end2 = clock(); | ||

| + | block2 += double(end2 - start2)/CLOCKS_PER_SEC; | ||

| + | start3 = clock(); | ||

| + | /* Update points along line */ | ||

| + | for (j = 1; j <= npoints; j++) { | ||

| + | /* Global endpoints */ | ||

| + | if ((first + j - 1 == 1) || (first + j - 1 == TPOINTS)) | ||

| + | newval[j] = 0.0; | ||

| + | else | ||

| + | /* Use wave equation to update points */ | ||

| + | newval[j] = (2.0 * values[j]) - oldval[j] | ||

| + | + (sqtau * (values[j-1] - (2.0 * values[j]) + values[j+1])); | ||

| + | } | ||

| + | end3 = clock(); | ||

| + | block3 += double(end3 - start3)/CLOCKS_PER_SEC; | ||

| + | start4 = clock(); | ||

| + | for (j = 1; j <= npoints; j++) { | ||

| + | oldval[j] = values[j]; | ||

| + | values[j] = newval[j]; | ||

| + | } | ||

| + | end4 = clock(); | ||

| + | block4 += double(end4 - start4)/CLOCKS_PER_SEC; | ||

| + | } | ||

| + | end0 = clock(); | ||

| + | std::cout << "Block 1: " << block1 << std::endl; | ||

| + | std::cout << "Block 2: " << block2 << std::endl; | ||

| + | std::cout << "Block 3: " << block3 << std::endl; | ||

| + | std::cout << "Block 4: " << block4 << std::endl; | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Since function is called (1-10000000) times depending on the number of steps chosen for the user, I have calculated the sum of 4 different blocks: | ||

| + | |||

| + | |||

| + | <pre> | ||

| + | Block 1: 4.18654 | ||

| + | Block 2: 0.98329 | ||

| + | Block 3: 13.2884 | ||

| + | Block 4: 8.3342 | ||

| + | |||

| + | Block 1: 1.02494 | ||

| + | Block 2: 4.53157 | ||

| + | Block 3: 12.8947 | ||

| + | Block 4: 8.36864 | ||

| + | </pre> | ||

| + | |||

| + | As you can see, most of the time is spent in the 3rd and 4th blocks, which is where I will begin optimization. | ||

| + | |||

| + | Since the number of npoints is 800 in total, divided into separate CPU threads, we will never reach the maximum number of threads per block, 1024. | ||

| + | |||

| + | ====== Sample Output ====== | ||

| + | |||

| + | Steps: 1 | ||

| + | |||

| + | [[Image:wave_output1.jpg]] | ||

| + | |||

| + | Steps: 500 | ||

| + | |||

| + | [[Image:wave_output2.jpg]] | ||

| + | |||

| + | Steps: 1,000 | ||

| + | |||

| + | [[Image:wave_output3.jpg]] | ||

| + | |||

| + | Steps: 10,000 | ||

| + | |||

| + | [[Image:wave_output4.jpg]] | ||

| + | |||

| + | At this point, I am noticing the delay in constantly transferring data between the RAM and Video RAM. Splitting the array into multiple sections requires constant checking of the left and right columns of those arrays. Thus, I will re-factor the entire code to use only 1 CPU thread and remove MPI. | ||

| + | |||

| + | ====== Optimization ====== | ||

| + | |||

| + | After using shared memory and prefetching values to perform operations in the kernel, my GPU no longer crashes on extreme operations involving millions of steps. It also outperforms my CPU running the MPI version of this application in 4 threads running at 4.9 GHz each. | ||

| + | |||

| + | Since my video card has 48 KB of shared memory and I am not using more than 20 KB with all of my arrays, I do not need to worry about coalescing my data, since shared memory is much faster. | ||

| + | |||

| + | Due to operational limits, the kernel is being killed short of completion by the watchdog of the operation system. Thus I have updated the maximum step count to be 1 million, otherwise the kernel would need to be rethought or be run in Tesla Compute Cluster (TCC) mode with a secondary GPU not being used for display, but I just don't have that kind of money right now. | ||

| + | |||

| + | ====== Testing ====== | ||

| + | |||

| + | I have written the following script for testing purposes against the MPI implementation in dual-core and quad-core modes, and the CUDA implementation using 1 block of 800 threads: | ||

| + | |||

| + | <pre> | ||

| + | #!/usr/bin/env bash | ||

| + | |||

| + | # 1D Wave Equation Benchmark | ||

| + | # output_master() must be commented out | ||

| + | # Author: Christopher Markieta | ||

| + | |||

| + | set -e # Exit on error | ||

| + | |||

| + | MYDIR=$(dirname $0) | ||

| + | |||

| + | if [ "$1" == "mpi" ]; then | ||

| + | if [ -z $2 ]; then | ||

| + | echo "Usage: $0 mpi [2-8]" | ||

| + | exit 1 | ||

| + | fi | ||

| + | |||

| + | # Number of threads to launch | ||

| + | run="mpirun -n $2 $MYDIR/wave.o" | ||

| + | elif [ "$1" == "cuda" ]; then | ||

| + | run="$MYDIR/wave.o" | ||

| + | else | ||

| + | echo "Usage: $0 [cuda|mpi] ..." | ||

| + | exit 1 | ||

| + | fi | ||

| + | |||

| + | # 1 million | ||

| + | for steps in 1 10 100 1000 10000 100000 1000000 | ||

| + | do | ||

| + | time echo $steps | $run &> /dev/null | ||

| + | done | ||

| + | </pre> | ||

| + | |||

| + | The final results show that the optimization was a success: | ||

| + | |||

| + | [[Image:cuda_wave.jpg]] | ||

| + | |||

| + | Although this application might not profit from such large number of steps, it could be useful for scientific computation. The kernel can be improved to support infinitely large number of steps, but I am lacking the hardware and for demonstration purposes, this should be enough. | ||

Latest revision as of 03:01, 30 November 2014

GPU610/DPS915 | Student List | Group and Project Index | Student Resources | Glossary

Contents

Team AGC

Team Members

- Andy Cooc, Some responsibility

- Gabriel Castro, Some other responsibility

- Christopher Markieta, All responsibility

Progress

Assignment 1

Andy's Findings

...

Gabriel's Findings

Pillow Library (Python)

The Pillow library is an image manipulation library for python. It has many common functions like re-sizing, cropping, rotating, blur, sharpen etc. It's available on github at https://github.com/python-pillow/Pillow

I used 4 filters for testing

| filter | run time |

|---|---|

| Blur | 1.305s |

| Gaussian Blur | 4.492 |

| Auto Contrast | 0.092 |

| Grey Scale | 0.046 |

See the code on github.

Gaussian Blur

A gaussian blur is a filter that can be applied to an image to make it blurry, in its simplest form it goes through every pixel in an image and averages it with its surrounding pixels. In the Pillow library it is implemented in a C module. This helps with processing time as C is hundreds of times faster than python. A quick look at the source shows that algorithm contains a nested for loop that's O(x * y) and then one that's O(x * y * r), that means that time grows n^2 based on the size of the image and n^3 based on the size of the blur radius.

Conclusion

With many nested operations, this a really good candidate for GPU paralization.

Christopher's Findings

xor_me

xor_me is an open source, brute force password cracker for doc and xls files.

xor_me was written by Benoît Sibaud and is copyrighted under the terms of the GNU Lesser General Public License version 3 only, as published by the Free Software Foundation. Here is a link to my fork of this repository:

https://github.com/Markieta/xor_me

Here is the profile of a short run of brute_force:

Flat profile: Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls Ts/call Ts/call name 99.35 10.58 10.58 lclGetKey(unsigned char const*, long) 0.19 10.60 0.02 dump_exit(int) 0.00 10.60 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z9lclGetKeyPKhl

As you can see, lclGetKey is the function where I will spend most of my time trying to parallelize code.

This application is quite CPU intensive, but hasn't been optimized for multiple threads. It is using 100% of 1 thread from the 8 available on my CPU. See the list of processes below for an example.

top - 23:25:32 up 1:16, 3 users, load average: 0.94, 0.65, 0.60 Tasks: 256 total, 2 running, 253 sleeping, 0 stopped, 1 zombie %Cpu(s): 13.7 us, 0.7 sy, 0.0 ni, 85.5 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st KiB Mem: 3979012 total, 3270196 used, 708816 free, 352592 buffers KiB Swap: 4124668 total, 0 used, 4124668 free. 984048 cached Mem PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND 5229 markieta 20 0 12664 1064 896 R 100.0 0.0 1:06.04 brute_force 1467 root 20 0 538620 208272 166664 S 3.7 5.2 6:55.85 Xorg 2540 markieta 20 0 1084328 169500 53460 S 3.3 4.3 11:57.88 chrome 2075 markieta 9 -11 509016 6840 4500 S 2.7 0.2 3:21.47 pulseaudio 3022 markieta 20 0 1094124 79252 10220 S 2.3 2.0 3:05.77 chrome 2932 markieta 20 0 829212 43176 19848 S 1.7 1.1 0:31.29 /usr/bin/termin 2989 markieta 20 0 1039100 117292 23968 S 1.7 2.9 1:48.53 chrome 2296 markieta 20 0 1553220 170248 65592 S 1.0 4.3 3:16.43 compiz 2789 markieta 20 0 1064160 99040 20116 S 0.7 2.5 1:24.61 chrome 2993 markieta 20 0 1112596 155316 26192 S 0.7 3.9 1:31.43 chrome 3482 root 20 0 0 0 0 S 0.3 0.0 0:07.34 kworker/2:2 4364 root 20 0 0 0 0 S 0.3 0.0 0:00.96 kworker/0:0 5230 markieta 20 0 24952 1776 1176 R 0.3 0.0 0:00.14 top

Here I ran the brute_force function again to see how long it would really take. I did not run this command for too long, hence the the termination ^C after about 2 minutes.

markieta@Y560:~/Documents/xor_me$ time ./brute_force 0x499a 0xcc61 Key: 499a Hash: cc61 Password: '1950' Password: 'w2#1' ^CState: 20:22:48:46:7e:48:62:c3c0:00000184023807d008a011c010800000:6c62477d45472100 real 2m16.888s user 2m16.082s sys 0m0.640s

I am hoping to see a lot of improvement after my research and implementation with CUDA and OpenCL.

Team AGC has decided to collaborate on this project for assignment 2 onwards.

Assignment 2

Christopher's Findings

xor_me

This project has been dropped due to the complexity of the code and difficulty with respect to this assignment.

Since the majority of CPU cycles is spent in the most inner for-loop, the goal is to parallelize this code only. It is possible to parallelize all 8 for-loops (Maximum of 8 character password) because they are performing very similar tasks. However, due to the time constraint and purpose of this assignment, I will only focus on this one. See below for the following code to be optimized for our GPU:

for (o=32; o < 128; ++o) {

skipInits:

unsigned short x = nHash ^ hash;

lclRotateRight(x, 1);

if (32 <= x && x < 127) {

t[0] = static_cast<unsigned char>(x);

if (nKey == lclGetKey(t, 16)) {

std::cout << "Password: '" << t << "'" << std::endl;

}

}

hash ^= r[1];

r[1] = t[1] = o;

lclRotateLeft(r[1], 2);

hash ^= r[1];

if (o == 32) {

r[0] = '\0';

hash = lclGetHash(t, r, 16);

}

}

My fork for this application can be found here: https://github.com/Markieta/xor_me

At first it seems impossible to do because of the data dependencies: hash, r[1], t[1], and o. But after doing some research, I have learned that the XOR operation is communicative and associative, meaning that we can simply calculate all of the dependencies in parallel first, then combine the results to achieve the same data.

Here is a non-formal proof to help make my point clear. The ability to achieve the same result vertically and horizontally will be the key to solving this task.

Step 1: 101 ^ 001 ^ 111 = 001 ^ ^ ^ 111 ^ 100 ^ 001 = 010 ^ ^ ^ 100 ^ 010 ^ 001 = 111 || || || 110 111 111 Step 2: Operate with vertical and horizontal 001 ^ 010 ^ 111 = 110 110 ^ 111 ^ 111 = 110

The first iteration of this loop executes a special condition which we must deal with before anything else:

if (o == 32) {

r[0] = '\0';

hash = lclGetHash(t, r, 16);

}

Although r[1] and t[1] are equivalent for some time during the loop, we will keep them on separate arrays for simplicity (lclRotateLeft takes a reference to r at index 1):

r[1] = t[1] = o; lclRotateLeft(r[1], 2);

After struggling for a few hours I realized that I should be using Thrust instead:

// unsigned short* h_r = new unsigned short[LOOPSIZE * RT_SIZE]; // unsigned char* h_t = new unsigned char[LOOPSIZE * RT_SIZE]; // unsigned char* h_x = new unsigned char[LOOPSIZE]; thrust::host_vector<unsigned short> h_r(LOOPSIZE * RT_SIZE); thrust::host_vector<unsigned short> h_hash(LOOPSIZE); thrust::host_vector<unsigned char> h_t(LOOPSIZE * RT_SIZE); thrust::host_vector<unsigned char> h_x(LOOPSIZE); // unsigned short* d_r; // unsigned char* d_t; // unsigned char* d_x; // cudaMalloc((void**)&d_r, LOOPSIZE * RT_SIZE); // cudaMalloc((void**)&d_t, LOOPSIZE * RT_SIZE); // cudaMalloc((void**)&d_x, LOOPSIZE);

The following transformation allows the computation of all XOR operations needed in this phase:

thrust::device_vector<unsigned short> d_r = h_r;

thrust::device_vector<unsigned short> d_hash = h_hash;

thrust::device_vector<unsigned char> d_t = h_t;

thrust::device_vector<unsigned char> d_x = h_x;

thrust::device_vector<unsigned short> xor_hash(LOOPSIZE); // XOR results

// For XOR operations with r[1]

// thrust::device_ptr<unsigned short> pHash = &d_hash[0];

// thrust::device_reference<unsigned short> rHash(pHash);

thrust::transform(d_hash.begin(), d_hash.end(), d_r.begin(),

xor_hash.begin(), thrust::bit_xor<int>());

Now to check each result with x and t[1] if the password has been found, otherwise combine the XOR results by XOR'ing them together for the next iteration to use.

Assignment 3

Christopher's Findings

Due to the difficulty of Assignment 2, I have chosen a new project to parallelize.

1-D Wave Equation

The first step was to create a private repository on Bitbucket to avoid any plagiarism issues with this course for next semester students, as well as provide code revision and protection in case my progress is lost or corrupt.

The next step is to convert the following C file into C++ code that will be compatible with CUDA:

And include the following dependency in the directory:

System Requirements

This project will be built and tested on Windows 7 64-bit Fedora 20 (tutorial, remember to blacklist nouveau in your grub config.) with an Intel Core i5-4670K Haswell CPU (overclocked to 4.9 GHz) and an Nvidia GTX 480 GPU (overclocked to 830/924/1660 MHz) manufactured by Zotac with 1.5 GB of VRAM.

mpi_wave will require the OpenMPI library to compile.

Here is the profiling of the original CPU application, with an increased maximum step to better the test comparison, and calculate the curve at the given step value.

% cumulative self self total time seconds seconds calls s/call s/call name 100.04 9.62 9.62 1 9.62 9.62 update(int, int) 0.00 9.62 0.00 2 0.00 0.00 MPI::Is_initialized() 0.00 9.62 0.00 2 0.00 0.00 MPI::Comm::~Comm() 0.00 9.62 0.00 2 0.00 0.00 MPI::Comm_Null::~Comm_Null() 0.00 9.62 0.00 1 0.00 0.00 _GLOBAL__sub_I_RtoL 0.00 9.62 0.00 1 0.00 0.00 init_master() 0.00 9.62 0.00 1 0.00 0.00 output_master() 0.00 9.62 0.00 1 0.00 0.00 __static_initialization_and_destruction_0(int, int) 0.00 9.62 0.00 1 0.00 0.00 draw_wave(double*) 0.00 9.62 0.00 1 0.00 0.00 init_line()

As you can see, the majority of the CPU time is spent in the update function, which is where I will begin implementing my code.

The 1D Wave Equation is already optimized for multiple CPU threads using the standard MPI library, spreading the sections of the curve to be calculated in parallel with as many available CPU threads at a time. However, a lot of this code is better left as a serial application to be dealt with the CPU, as GPU streams will perform much slower. The CUDA cores will take advantage of the highly parallelizable code in the update function. I am hoping that the separation of CPU cores will not cause complications while they each attempt to use the device to run the kernel and access the GPU's memory, and that it will only optimize it further.

I have included calls to clock() to determine specifically where the most time is being spent in the update function:

void update(int left, int right) {

clock_t start0, start1, start2, start3, start4, end1, end2, end3, end4, end0;

start0 = clock();

double block1 = 0.0, block2 = 0.0, block3 = 0.0, block4 = 0.0;

int i, j;

double dtime, c, dx, tau, sqtau;

MPI_Status status;

dtime = 0.3;

c = 1.0;

dx = 1.0;

tau = (c * dtime / dx);

sqtau = tau * tau;

/* Update values for each point along string */

for (i = 1; i <= nsteps; i++) {

start1 = clock();

/* Exchange data with "left-hand" neighbor */

if (first != 1) {

MPI_Send(&values[1], 1, MPI_DOUBLE, left, RtoL, MPI_COMM_WORLD);

MPI_Recv(&values[0], 1, MPI_DOUBLE, left, LtoR, MPI_COMM_WORLD,

&status);

}

end1 = clock();

block1 += double(end1 - start1)/CLOCKS_PER_SEC;

start2 = clock();

/* Exchange data with "right-hand" neighbor */

if (first + npoints -1 != TPOINTS) {

MPI_Send(&values[npoints], 1, MPI_DOUBLE, right, LtoR, MPI_COMM_WORLD);

MPI_Recv(&values[npoints+1], 1, MPI_DOUBLE, right, RtoL,

MPI_COMM_WORLD, &status);

}

end2 = clock();

block2 += double(end2 - start2)/CLOCKS_PER_SEC;

start3 = clock();

/* Update points along line */

for (j = 1; j <= npoints; j++) {

/* Global endpoints */

if ((first + j - 1 == 1) || (first + j - 1 == TPOINTS))

newval[j] = 0.0;

else

/* Use wave equation to update points */

newval[j] = (2.0 * values[j]) - oldval[j]

+ (sqtau * (values[j-1] - (2.0 * values[j]) + values[j+1]));

}

end3 = clock();

block3 += double(end3 - start3)/CLOCKS_PER_SEC;

start4 = clock();

for (j = 1; j <= npoints; j++) {

oldval[j] = values[j];

values[j] = newval[j];

}

end4 = clock();

block4 += double(end4 - start4)/CLOCKS_PER_SEC;

}

end0 = clock();

std::cout << "Block 1: " << block1 << std::endl;

std::cout << "Block 2: " << block2 << std::endl;

std::cout << "Block 3: " << block3 << std::endl;

std::cout << "Block 4: " << block4 << std::endl;

}

Since function is called (1-10000000) times depending on the number of steps chosen for the user, I have calculated the sum of 4 different blocks:

Block 1: 4.18654 Block 2: 0.98329 Block 3: 13.2884 Block 4: 8.3342 Block 1: 1.02494 Block 2: 4.53157 Block 3: 12.8947 Block 4: 8.36864

As you can see, most of the time is spent in the 3rd and 4th blocks, which is where I will begin optimization.

Since the number of npoints is 800 in total, divided into separate CPU threads, we will never reach the maximum number of threads per block, 1024.

Sample Output

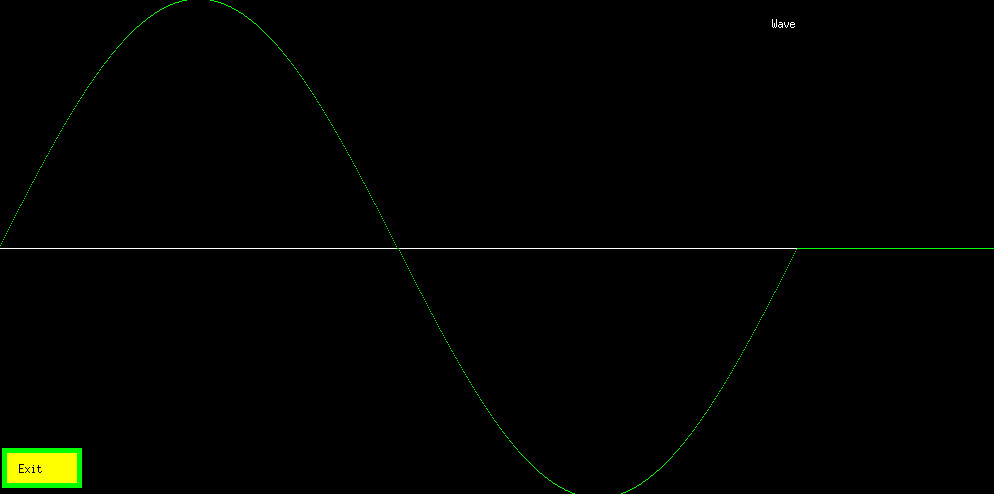

Steps: 1

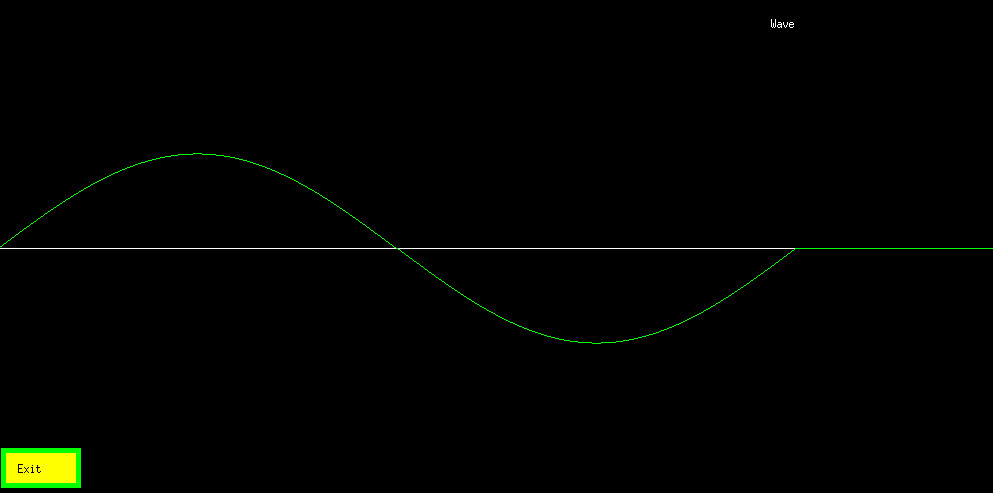

Steps: 500

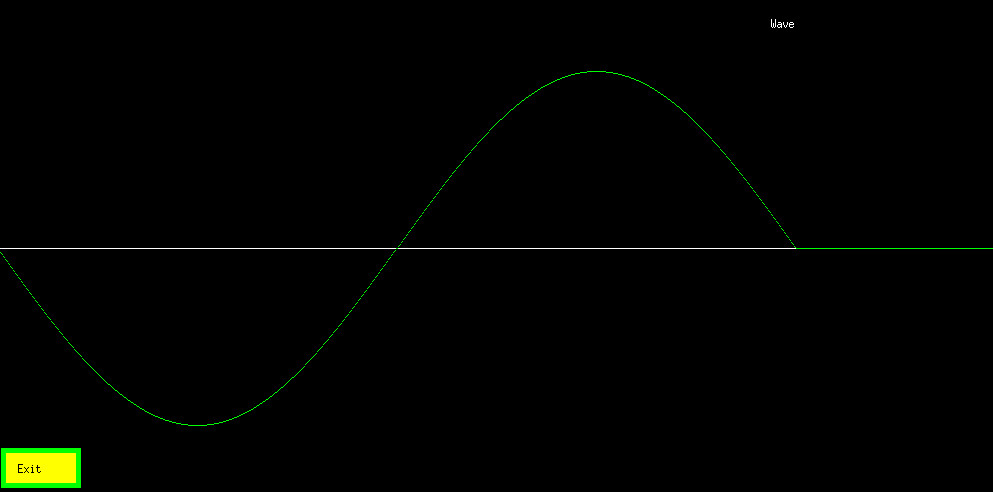

Steps: 1,000

Steps: 10,000

At this point, I am noticing the delay in constantly transferring data between the RAM and Video RAM. Splitting the array into multiple sections requires constant checking of the left and right columns of those arrays. Thus, I will re-factor the entire code to use only 1 CPU thread and remove MPI.

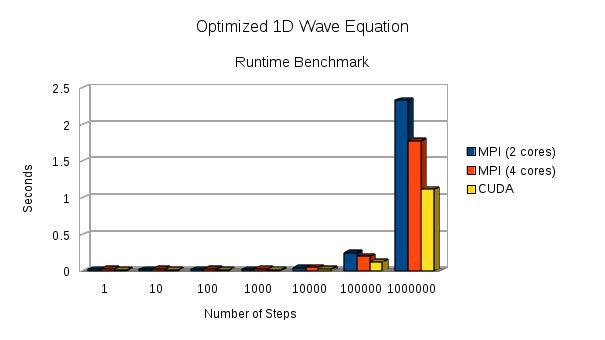

Optimization

After using shared memory and prefetching values to perform operations in the kernel, my GPU no longer crashes on extreme operations involving millions of steps. It also outperforms my CPU running the MPI version of this application in 4 threads running at 4.9 GHz each.

Since my video card has 48 KB of shared memory and I am not using more than 20 KB with all of my arrays, I do not need to worry about coalescing my data, since shared memory is much faster.

Due to operational limits, the kernel is being killed short of completion by the watchdog of the operation system. Thus I have updated the maximum step count to be 1 million, otherwise the kernel would need to be rethought or be run in Tesla Compute Cluster (TCC) mode with a secondary GPU not being used for display, but I just don't have that kind of money right now.

Testing

I have written the following script for testing purposes against the MPI implementation in dual-core and quad-core modes, and the CUDA implementation using 1 block of 800 threads:

#!/usr/bin/env bash

# 1D Wave Equation Benchmark

# output_master() must be commented out

# Author: Christopher Markieta

set -e # Exit on error

MYDIR=$(dirname $0)

if [ "$1" == "mpi" ]; then

if [ -z $2 ]; then

echo "Usage: $0 mpi [2-8]"

exit 1

fi

# Number of threads to launch

run="mpirun -n $2 $MYDIR/wave.o"

elif [ "$1" == "cuda" ]; then

run="$MYDIR/wave.o"

else

echo "Usage: $0 [cuda|mpi] ..."

exit 1

fi

# 1 million

for steps in 1 10 100 1000 10000 100000 1000000

do

time echo $steps | $run &> /dev/null

done

The final results show that the optimization was a success:

Although this application might not profit from such large number of steps, it could be useful for scientific computation. The kernel can be improved to support infinitely large number of steps, but I am lacking the hardware and for demonstration purposes, this should be enough.