Difference between revisions of "AAA Adrina Arsa Andriy"

(→Hot Spot Identification) |

(→Assignment 3) |

||

| (30 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | = | + | = AAA = |

== Team Members == | == Team Members == | ||

# [mailto:aasauvageot@myseneca.ca?subject=gpu610 Adrian Sauvageot], Developer | # [mailto:aasauvageot@myseneca.ca?subject=gpu610 Adrian Sauvageot], Developer | ||

| − | # .. | + | # [mailto:gandriy1@myseneca.ca?subject=dps915 Andriy Guzenko], Developer |

| − | [mailto:aasauvageot@myseneca.ca?subject=dps901-gpu610 Email All] | + | # [mailto:akhalid19@myseneca.ca?subject=dps915 Arsalan Khalid], Developer |

| + | [mailto:aasauvageot@myseneca.ca?subject=dps901-gpu610,mailto:gandriy1@myseneca.ca?subject=dps915,mailto:akhalid19@myseneca.ca?subject=dps915, Email All] | ||

== Progress == | == Progress == | ||

| Line 17: | Line 18: | ||

I decided to look at the application as if someone was to send a large amount of encrypted data in a large string. To encrypt the string, there is data dependency; encrypting "The Quick Brown Fox Jumped Over The Moon" could give an encrypted code of: | I decided to look at the application as if someone was to send a large amount of encrypted data in a large string. To encrypt the string, there is data dependency; encrypting "The Quick Brown Fox Jumped Over The Moon" could give an encrypted code of: | ||

| − | + | <pre>C:ÖÜ+┘~ ╦÷bܼ↨▲+¶.f¾W.▀#§cÆ▼H╣♥¿aOv:Ss╚Ðr│y!%·∟</pre> | |

If you typed the same thing twice, you would get: | If you typed the same thing twice, you would get: | ||

| − | + | <pre>C:ÖÜ+┘~ ╦÷bܼ↨▲+¶.f¾W.▀#§cÆ▼H╣♥éý┌ï0샨ï╩↑R┘^õ¡#£2ÌÕøÈ榪ç}FÉñW{òpÂ╩☺üqÿgG‗iã$(♦G]<}*▲`eJÔÓW╗õí</pre> | |

However, it would be possible take a large string, break it into several pieces, and encrypt each piece separately. | However, it would be possible take a large string, break it into several pieces, and encrypt each piece separately. | ||

| Line 27: | Line 28: | ||

For example, if a string was to be broken up after each ".", each part of the large string would be encoded separately, and sent over the network along with a header that contained the strings order. | For example, if a string was to be broken up after each ".", each part of the large string would be encoded separately, and sent over the network along with a header that contained the strings order. | ||

IE: | IE: | ||

| − | + | <pre> | |

encryptedPacket{ | encryptedPacket{ | ||

| Line 35: | Line 36: | ||

} | } | ||

| − | + | </pre> | |

The strings could be encoded parallely, and then again decoded parallely. | The strings could be encoded parallely, and then again decoded parallely. | ||

This would be able to decrease the time taken to encode the strings if they were large. The data would still be encoded while being sent over the network. | This would be able to decrease the time taken to encode the strings if they were large. The data would still be encoded while being sent over the network. | ||

| − | =====Hot Spot Identification===== | + | =====Hot Spot Identification By Profiling===== |

In order to identify the hot spots of the program, I used Visual Studio. (Unfortunately, for this library I could not use the Seneca Matrix system, as my account did not have sufficient privileges to install the library.) | In order to identify the hot spots of the program, I used Visual Studio. (Unfortunately, for this library I could not use the Seneca Matrix system, as my account did not have sufficient privileges to install the library.) | ||

| − | [[Image: | + | [[Image:Hotpath crypto.png|thumb|800px|center]] |

| + | |||

| + | This program was called with a very large string that was split into pieces by spiting it on the "." | ||

| + | |||

| + | The explode function (which is a function I created) is the function that splits the string into pieces. This function could be paralleled to speed up the process of spiting up the string. Since this takes almost 20% of the total time it would be worth speeding up. | ||

| + | |||

| + | The other function that takes up a lot of resources is the Cryptopp::StringSource::StringSource function. This is part of the function that takes the String and encodes it. In the StringSource function, the strings that are being encrypted, or decrypted are being copied, being encrypted/decrypted, and stored. | ||

| + | |||

| + | Because the data would be split into smaller pieces by the explode function, this could be paralleled. Since this takes almost 55% of the time of the program, it would be worth speeding up. | ||

| + | |||

| + | ====Andriy Guzenko’s Findings==== | ||

| + | =====Program Description===== | ||

| + | I found a jpeg-compressor of a relatively small size written in C++. The core compression algorithm is about 900 lines plus the header file. You can find the code online here: [ https://code.google.com/p/jpeg-compressor/ ] | ||

| + | |||

| + | It is a console application which requires three command line arguments for successful execution. The first is the source ‘jpg’ file with appropriate ‘read’ permissions. The second is the destination file in any format. The third argument is the quality factor which is a number between 0 and 100. The 0 quality factor will produce a better quality compressed image. | ||

| + | |||

| + | The application also contains the decompression module which allows to decompress files back into ‘jpg’ format. | ||

| + | |||

| + | Finally, the application provides a number of options for compression and decompression such as: | ||

| + | |||

| + | ‘-o’ – enables optimized Huffman tables and makes compression slower but produces smaller files | ||

| + | |||

| + | ‘-x’ – exhaustive compression test | ||

| + | |||

| + | =====Profiling===== | ||

| + | Profiling of this application revealed a number of hotspots in both compression and decompression algorithms. | ||

| + | |||

| + | The compression profile for 3.3 Mb ‘Jpg’ image which produced 0.7 Mb compressed file: | ||

| + | |||

| + | <pre> | ||

| + | > a.out img.jpg result 50 | ||

| + | |||

| + | Each sample counts as 0.01 seconds. | ||

| + | |||

| + | % cumulative self self total | ||

| + | |||

| + | time seconds seconds calls s/call s/call name | ||

| + | |||

| + | 12.18 1.66 1.66 image_compare(image_compare_results&, int, int, unsigned char const*, int, unsigned char const*, int, bool) | ||

| + | |||

| + | 8.90 2.87 1.21 YCbCr_to_RGB_row(unsigned char*, unsigned char const*, unsigned char const*, unsigned char const*, int, int) | ||

| + | |||

| + | 8.61 4.04 1.17 jpge::DCT2D(int*) | ||

| + | |||

| + | 8.46 5.18 1.15 jpge::RGB_to_YCC(unsigned char*, unsigned char const*, int) | ||

| + | |||

| + | 8.02 6.28 1.09 idct_block(unsigned char*, int, short*, unsigned char*) | ||

| + | |||

| + | 6.48 7.16 0.88 3456 0.00 0.00 jpgd::jpeg_decoder::expanded_convert() | ||

| + | |||

| + | 5.30 7.88 0.72 4214032 0.00 0.00 jpgd::Col<4>::idct(unsigned char*, int const*) | ||

| + | |||

| + | 4.12 8.44 0.56 373248 0.00 0.00 jpge::jpeg_encoder::load_quantized_coefficients(int) | ||

| + | |||

| + | 4.01 8.98 0.55 get_pixel(int*, unsigned char const*, bool, int) | ||

| + | |||

| + | 3.16 9.41 0.43 resample_row_h_2(unsigned char*, unsigned char*, unsigned char*, int, int) | ||

| + | |||

| + | 2.58 9.76 0.35 2140439 0.00 0.00 jpgd::Row<4>::idct(int*, short const*) | ||

| + | |||

| + | 2.43 10.09 0.33 373248 0.00 0.00 jpge::jpeg_encoder::code_coefficients_pass_two(int) | ||

| + | |||

| + | 2.02 10.37 0.28 decode_block(jpeg*, short*, huffman*, huffman*, int) | ||

| + | </pre> | ||

| + | |||

| + | And produced the output : | ||

| + | |||

| + | <pre> | ||

| + | jpge/jpgd example app | ||

| + | |||

| + | Source file: "img.JPG", image resolution: 4608x3456, actual comps: 3 | ||

| + | |||

| + | Writing JPEG image to file: comp | ||

| + | |||

| + | Compressed file size: 799619, bits/pixel: 0.402 | ||

| + | |||

| + | Compression time: 4609.175ms, Decompression time: 6812.550ms | ||

| + | |||

| + | Error Max: 71.000000, Mean: 5.951586, Mean^2: 20.892606, RMSE: 4.570843, PSNR: 3 | ||

| + | |||

| + | 4.930877 | ||

| + | |||

| + | Success. | ||

| + | </pre> | ||

| + | |||

| + | According to the profiling data such methods as “jpge::DCT2D(int*)” and “jpge::RGB_to_YCC” can be parallelized to improve the application performance which will be particularly useful for compressing large ‘jpg’ files at a better quality factor. | ||

| + | |||

| + | ====Arsalan Khalid Findings==== | ||

| + | =====Program To Parallelize===== | ||

| + | |||

| + | I discovered a C++ hangman application, it needs quite a bit of work but I believe that it is much more interesting than parallelizing the standard image processor. | ||

| + | Most of the processing power is spent on the main, therefore in can be better modularized into functions and contain a more complex and efficient algorithm to handle | ||

| + | more amount of words. The program's output is as so: | ||

| + | |||

| + | Welcome to hangman...Guess a country Name Each letter is represented by a star. | ||

| + | |||

| + | You have to type only one letter in one try You have 5 tries to try and guess the word. | ||

| + | |||

| + | 21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)[[User:Arsalan Khalid|Arsalan Khalid]] | ||

| + | |||

| + | **** Guess a letter: o Whoops! That letter isn't in there!You have 4 guesses left. | ||

| + | |||

| + | **** Guess a letter: p Whoops! That letter isn't in there!You have 3 guesses left. | ||

| + | |||

| + | **** Guess a letter: i You found a letter! Isn't that exciting!You have 3 guesses left. | ||

| + | |||

| + | i*** Guess a letter: n You found a letter! Isn't that exciting!You have 3 guesses left. | ||

| + | |||

| + | i**n Guess a letter: r You found a letter! Isn't that exciting!You have 3 guesses left. | ||

| + | |||

| + | ir*n Guess a letter: a You found a letter! Isn't that exciting!You have 3 guesses left. | ||

| + | |||

| + | iran | ||

| + | |||

| + | Yeah! You got it! | ||

| + | |||

| + | The profiling was as follows: | ||

| + | |||

| + | granularity: each sample hit covers 2 byte(s) no time propagated | ||

| + | |||

| + | index % time self children called name | ||

| + | 0.00 0.00 1/1 __libc_csu_init [15] | ||

| + | [8] 0.0 0.00 0.00 1 _GLOBAL__sub_I_main [8] | ||

| + | |||

| + | Index by function name | ||

| + | |||

| + | [8] _GLOBAL__sub_I_main (hangman2.cpp) | ||

| + | |||

| + | Of course for this program there isn't too much being outputted as well as a complex algorithm behind the main program | ||

| + | and thus this program needs a lot of work. But I believe that's the beauty of it, instead of statically importing words | ||

| + | we can have words imported from a file, and even add features such as multi-player. All these features can be parallized | ||

| + | and thus be very efficient while in run time. | ||

| + | |||

| + | All in all I believe working on a project that you know everything about is essential, working off of an image processor | ||

| + | or large bits of existing code can time for a developer to learn and understand the code structure of the program. | ||

| + | |||

| + | Really looking forward to working on this if we end up doing so! | ||

=== Assignment 2 === | === Assignment 2 === | ||

| + | For our assignment 2 we chose to paralyze the searching for a letter inside the hangman game. | ||

| + | |||

| + | Because hangman usually uses a small word, paralyzing a search through a single word will not show a major increase. So we created a new game called letter search. In letter search, a "word" of length x is created at the beginning of a game. The word will contain all the letters of the alphabet chosen randomly minus 5 letters. One letter in the alphabet will be worth approximately double of the other letters. | ||

| + | |||

| + | The user tries to guess all the letters in the random character array for points. | ||

| + | |||

| + | The word can be anywhere from 1 letter, to 1000000 (The highest we have tested.) | ||

| + | |||

| + | With the parallelization, we noticed some increase in speed. Upon further experimentation, we realized that out memcopy's were what took up all the speed. When looking at the time taken for the search itself when going through the kernel, we noticed an almost 100% increase in speed. | ||

| + | |||

| + | If we are able to speed up the memcopy's we will be able to further increase the speed of the program. | ||

| + | |||

| + | The speed up times of the searches are below (including the Memcpy's). | ||

| + | |||

| + | |||

| + | '''Size 100000:''' | ||

| + | |||

| + | <u>Parallel</u> | ||

| + | |||

| + | Guess a letter: a | ||

| + | Search Time: - took - <u>0.001000 secs</u> | ||

| + | |||

| + | You found 3786 letters! Isn't that exciting! | ||

| + | You have 5 guesses left. | ||

| + | |||

| + | |||

| + | <u>Regular</u> | ||

| + | |||

| + | Guess a letter: a | ||

| + | Search Time: - took - <u>0.002000 secs</u> | ||

| + | |||

| + | You found a letter! Isn't that exciting! | ||

| + | You have 5 guesses left. | ||

| + | |||

| + | |||

| + | '''Size 500000:''' | ||

| + | |||

| + | <u>Parallel</u> | ||

| + | |||

| + | Guess a letter: a | ||

| + | Search Time: - took - <u>0.003000 secs</u> | ||

| + | |||

| + | You found 19198 letters! Isn't that exciting! | ||

| + | You have 4 guesses left. | ||

| + | |||

| + | <u>Regular</u> | ||

| + | |||

| + | Guess a letter: a | ||

| + | Search Time: - took - <u>0.006000 secs</u> | ||

| + | |||

| + | You found a letter! Isn't that exciting! | ||

| + | You have 5 guesses left. | ||

| + | |||

| + | |||

| + | '''Size 1000000:''' | ||

| + | |||

| + | <u>Parallel</u> | ||

| + | |||

| + | Guess a letter: a | ||

| + | Search Time: - took - <u>0.005000 secs</u> | ||

| + | |||

| + | You found 38256 letters! Isn't that exciting! | ||

| + | You have 5 guesses left. | ||

| + | |||

| + | |||

| + | <u>Regular</u> | ||

| + | |||

| + | Guess a letter: a | ||

| + | Search Time: - took - <u>0.012000 secs</u> | ||

| + | |||

| + | You found a letter! Isn't that exciting! | ||

| + | You have 5 guesses left. | ||

| + | |||

| + | |||

| + | The timing of the kernel command was 0.000000 secs in every case. This shows that the kernel search takes almost no time, and the time is taken by the memalcation's and the memcopy's | ||

| + | |||

| + | [[Image:Hotpath Kernel vs reg.jpg|thumb|800px|center]] | ||

| + | |||

=== Assignment 3 === | === Assignment 3 === | ||

| + | For or assignment 3 we did a few things to speed up the program, and we were able to observe an approximate speed up of around 50%. | ||

| + | |||

| + | [[Image:Hang man graph.png|thumb|800px|center]] | ||

| + | |||

| + | To observe this speed up we removed thread divergence from the kernels, and we removed some unnecessary memory copies. | ||

| + | |||

| + | By removing thread divergence, we initially saw a speed up of around 10%. We expected this speed up to be small, since when running our code, the majority of time was spent in the memory copy phase. To speed the process up slightly more, we also used shared memory within the kernel. After the kernels had all the updates applied, we realized a speed up slightly over 10%. | ||

| + | |||

| + | When we took a look at the memory copies we used in the previous version, we realized that there were several copies that did not need to be done. We also realized that some of the copies could be moved around for more efficiency. After moving these copies, we realized a speed up of just over 40%. | ||

| + | |||

| + | Our last version of the program now runs approximately 50% faster than the previous version. | ||

| + | |||

| + | |||

| + | '''To calculate the number of blocks per thread we used the CUDA calculator.''' | ||

| + | [[Image:Nvidia Occupancy Calculator on Code.jpg|thumb|800px|center]] | ||

| + | In the previous version we dynamically found the number of threads per block, we could not dynamically use the information in this version due to the fact that shared memory was used. On the school lab computers the NBPT was 1024. | ||

| + | |||

| + | '''Real World Application''' | ||

| + | To make the application more "real world" friendly, we were able to make test data load form a large dictionary file. This makes it so you can search for real words instead of gibberish. | ||

| + | |||

| + | '''What Would We Do Different?''' | ||

| + | We would have spent more time on our A1s. When we picked out A1 programs we tried to find programs that were cool, and had unique uses. We profiled the programs without taking an in depth look at the code base, and when it came to picking a topic for A2, we were stuck with only one program, since the other two were much too complex. | ||

Latest revision as of 13:26, 4 December 2014

Contents

AAA

Team Members

- Adrian Sauvageot, Developer

- Andriy Guzenko, Developer

- Arsalan Khalid, Developer

Progress

Assignment 1

About

Our group has decided to each look at a different programming problem that could be sped up through paralysing it.

Adrian Sauvageot's Findings

Program To Parallelize

I chose to look into a cryptography library. Upon searching for libraries, I was able to find an open source library called CRYPTO++. This library is able to encrypt lines of text, and then decrypt them, based on a key, which is also a string.

The library allows the programmer to select a string. The string would then be secretly shared across two platforms, where it would be stored. The data to be sent would be encrypted on a computer, sent over the network, and then decrypted on the other computer.

I decided to look at the application as if someone was to send a large amount of encrypted data in a large string. To encrypt the string, there is data dependency; encrypting "The Quick Brown Fox Jumped Over The Moon" could give an encrypted code of:

C:ÖÜ+┘~ ╦÷bܼ↨▲+¶.f¾W.▀#§cÆ▼H╣♥¿aOv:Ss╚Ðr│y!%·∟

If you typed the same thing twice, you would get:

C:ÖÜ+┘~ ╦÷bܼ↨▲+¶.f¾W.▀#§cÆ▼H╣♥éý┌ï0샨ï╩↑R┘^õ¡#£2ÌÕøÈ榪ç}FÉñW{òpÂ╩☺üqÿgG‗iã$(♦G]<}*▲`eJÔÓW╗õí

However, it would be possible take a large string, break it into several pieces, and encrypt each piece separately.

For example, if a string was to be broken up after each ".", each part of the large string would be encoded separately, and sent over the network along with a header that contained the strings order. IE:

encryptedPacket{

int id;

string encodedString;

}

The strings could be encoded parallely, and then again decoded parallely.

This would be able to decrease the time taken to encode the strings if they were large. The data would still be encoded while being sent over the network.

Hot Spot Identification By Profiling

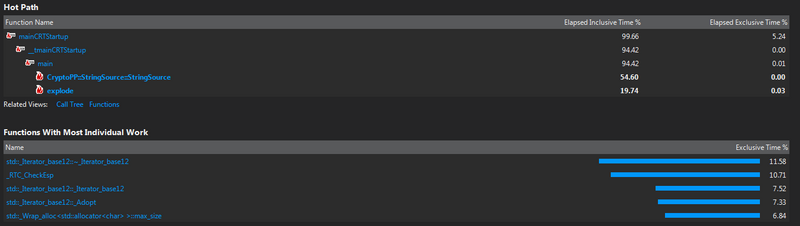

In order to identify the hot spots of the program, I used Visual Studio. (Unfortunately, for this library I could not use the Seneca Matrix system, as my account did not have sufficient privileges to install the library.)

This program was called with a very large string that was split into pieces by spiting it on the "."

The explode function (which is a function I created) is the function that splits the string into pieces. This function could be paralleled to speed up the process of spiting up the string. Since this takes almost 20% of the total time it would be worth speeding up.

The other function that takes up a lot of resources is the Cryptopp::StringSource::StringSource function. This is part of the function that takes the String and encodes it. In the StringSource function, the strings that are being encrypted, or decrypted are being copied, being encrypted/decrypted, and stored.

Because the data would be split into smaller pieces by the explode function, this could be paralleled. Since this takes almost 55% of the time of the program, it would be worth speeding up.

Andriy Guzenko’s Findings

Program Description

I found a jpeg-compressor of a relatively small size written in C++. The core compression algorithm is about 900 lines plus the header file. You can find the code online here: [ https://code.google.com/p/jpeg-compressor/ ]

It is a console application which requires three command line arguments for successful execution. The first is the source ‘jpg’ file with appropriate ‘read’ permissions. The second is the destination file in any format. The third argument is the quality factor which is a number between 0 and 100. The 0 quality factor will produce a better quality compressed image.

The application also contains the decompression module which allows to decompress files back into ‘jpg’ format.

Finally, the application provides a number of options for compression and decompression such as:

‘-o’ – enables optimized Huffman tables and makes compression slower but produces smaller files

‘-x’ – exhaustive compression test

Profiling

Profiling of this application revealed a number of hotspots in both compression and decompression algorithms.

The compression profile for 3.3 Mb ‘Jpg’ image which produced 0.7 Mb compressed file:

> a.out img.jpg result 50 Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls s/call s/call name 12.18 1.66 1.66 image_compare(image_compare_results&, int, int, unsigned char const*, int, unsigned char const*, int, bool) 8.90 2.87 1.21 YCbCr_to_RGB_row(unsigned char*, unsigned char const*, unsigned char const*, unsigned char const*, int, int) 8.61 4.04 1.17 jpge::DCT2D(int*) 8.46 5.18 1.15 jpge::RGB_to_YCC(unsigned char*, unsigned char const*, int) 8.02 6.28 1.09 idct_block(unsigned char*, int, short*, unsigned char*) 6.48 7.16 0.88 3456 0.00 0.00 jpgd::jpeg_decoder::expanded_convert() 5.30 7.88 0.72 4214032 0.00 0.00 jpgd::Col<4>::idct(unsigned char*, int const*) 4.12 8.44 0.56 373248 0.00 0.00 jpge::jpeg_encoder::load_quantized_coefficients(int) 4.01 8.98 0.55 get_pixel(int*, unsigned char const*, bool, int) 3.16 9.41 0.43 resample_row_h_2(unsigned char*, unsigned char*, unsigned char*, int, int) 2.58 9.76 0.35 2140439 0.00 0.00 jpgd::Row<4>::idct(int*, short const*) 2.43 10.09 0.33 373248 0.00 0.00 jpge::jpeg_encoder::code_coefficients_pass_two(int) 2.02 10.37 0.28 decode_block(jpeg*, short*, huffman*, huffman*, int)

And produced the output :

jpge/jpgd example app Source file: "img.JPG", image resolution: 4608x3456, actual comps: 3 Writing JPEG image to file: comp Compressed file size: 799619, bits/pixel: 0.402 Compression time: 4609.175ms, Decompression time: 6812.550ms Error Max: 71.000000, Mean: 5.951586, Mean^2: 20.892606, RMSE: 4.570843, PSNR: 3 4.930877 Success.

According to the profiling data such methods as “jpge::DCT2D(int*)” and “jpge::RGB_to_YCC” can be parallelized to improve the application performance which will be particularly useful for compressing large ‘jpg’ files at a better quality factor.

Arsalan Khalid Findings

Program To Parallelize

I discovered a C++ hangman application, it needs quite a bit of work but I believe that it is much more interesting than parallelizing the standard image processor. Most of the processing power is spent on the main, therefore in can be better modularized into functions and contain a more complex and efficient algorithm to handle more amount of words. The program's output is as so:

Welcome to hangman...Guess a country Name Each letter is represented by a star.

You have to type only one letter in one try You have 5 tries to try and guess the word.

21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)21:48, 3 October 2014 (EDT)Arsalan Khalid

- Guess a letter: o Whoops! That letter isn't in there!You have 4 guesses left.

- Guess a letter: p Whoops! That letter isn't in there!You have 3 guesses left.

- Guess a letter: i You found a letter! Isn't that exciting!You have 3 guesses left.

i*** Guess a letter: n You found a letter! Isn't that exciting!You have 3 guesses left.

i**n Guess a letter: r You found a letter! Isn't that exciting!You have 3 guesses left.

ir*n Guess a letter: a You found a letter! Isn't that exciting!You have 3 guesses left.

iran

Yeah! You got it!

The profiling was as follows:

granularity: each sample hit covers 2 byte(s) no time propagated

index % time self children called name

0.00 0.00 1/1 __libc_csu_init [15]

[8] 0.0 0.00 0.00 1 _GLOBAL__sub_I_main [8]

Index by function name

[8] _GLOBAL__sub_I_main (hangman2.cpp)

Of course for this program there isn't too much being outputted as well as a complex algorithm behind the main program and thus this program needs a lot of work. But I believe that's the beauty of it, instead of statically importing words we can have words imported from a file, and even add features such as multi-player. All these features can be parallized and thus be very efficient while in run time.

All in all I believe working on a project that you know everything about is essential, working off of an image processor or large bits of existing code can time for a developer to learn and understand the code structure of the program.

Really looking forward to working on this if we end up doing so!

Assignment 2

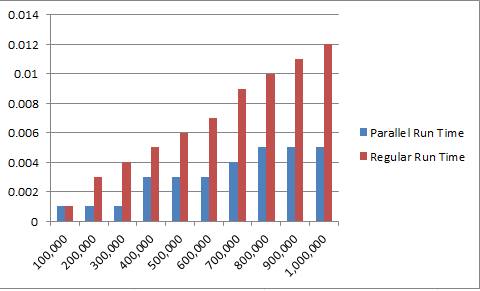

For our assignment 2 we chose to paralyze the searching for a letter inside the hangman game.

Because hangman usually uses a small word, paralyzing a search through a single word will not show a major increase. So we created a new game called letter search. In letter search, a "word" of length x is created at the beginning of a game. The word will contain all the letters of the alphabet chosen randomly minus 5 letters. One letter in the alphabet will be worth approximately double of the other letters.

The user tries to guess all the letters in the random character array for points.

The word can be anywhere from 1 letter, to 1000000 (The highest we have tested.)

With the parallelization, we noticed some increase in speed. Upon further experimentation, we realized that out memcopy's were what took up all the speed. When looking at the time taken for the search itself when going through the kernel, we noticed an almost 100% increase in speed.

If we are able to speed up the memcopy's we will be able to further increase the speed of the program.

The speed up times of the searches are below (including the Memcpy's).

Size 100000:

Parallel

Guess a letter: a Search Time: - took - 0.001000 secs

You found 3786 letters! Isn't that exciting! You have 5 guesses left.

Regular

Guess a letter: a Search Time: - took - 0.002000 secs

You found a letter! Isn't that exciting! You have 5 guesses left.

Size 500000:

Parallel

Guess a letter: a Search Time: - took - 0.003000 secs

You found 19198 letters! Isn't that exciting! You have 4 guesses left.

Regular

Guess a letter: a Search Time: - took - 0.006000 secs

You found a letter! Isn't that exciting! You have 5 guesses left.

Size 1000000:

Parallel

Guess a letter: a Search Time: - took - 0.005000 secs

You found 38256 letters! Isn't that exciting! You have 5 guesses left.

Regular

Guess a letter: a Search Time: - took - 0.012000 secs

You found a letter! Isn't that exciting! You have 5 guesses left.

The timing of the kernel command was 0.000000 secs in every case. This shows that the kernel search takes almost no time, and the time is taken by the memalcation's and the memcopy's

Assignment 3

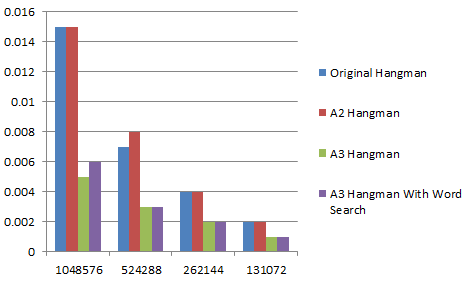

For or assignment 3 we did a few things to speed up the program, and we were able to observe an approximate speed up of around 50%.

To observe this speed up we removed thread divergence from the kernels, and we removed some unnecessary memory copies.

By removing thread divergence, we initially saw a speed up of around 10%. We expected this speed up to be small, since when running our code, the majority of time was spent in the memory copy phase. To speed the process up slightly more, we also used shared memory within the kernel. After the kernels had all the updates applied, we realized a speed up slightly over 10%.

When we took a look at the memory copies we used in the previous version, we realized that there were several copies that did not need to be done. We also realized that some of the copies could be moved around for more efficiency. After moving these copies, we realized a speed up of just over 40%.

Our last version of the program now runs approximately 50% faster than the previous version.

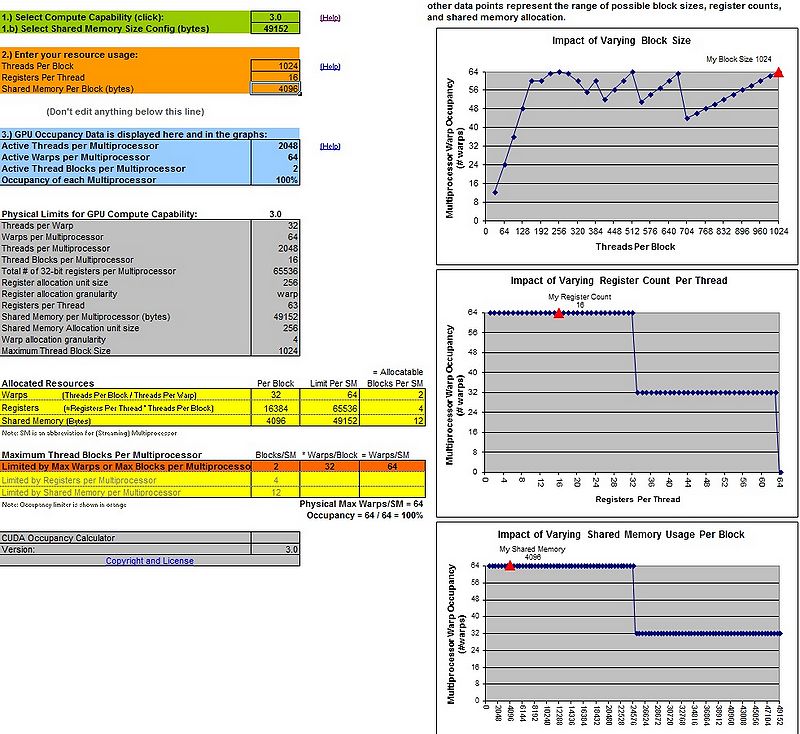

To calculate the number of blocks per thread we used the CUDA calculator.

In the previous version we dynamically found the number of threads per block, we could not dynamically use the information in this version due to the fact that shared memory was used. On the school lab computers the NBPT was 1024.

Real World Application To make the application more "real world" friendly, we were able to make test data load form a large dictionary file. This makes it so you can search for real words instead of gibberish.

What Would We Do Different? We would have spent more time on our A1s. When we picked out A1 programs we tried to find programs that were cool, and had unique uses. We profiled the programs without taking an in depth look at the code base, and when it came to picking a topic for A2, we were stuck with only one program, since the other two were much too complex.