
Latest revision as of 21:09, 18 April 2013


Team CNT

Team Members

Progress

Assignment 1

Natalia's Findings

I found some open-source C++ code that was uploaded to Dreamincode.net. The application is called "Basic Image Processor". At first I was stuck trying to convert a JPG file so that the application could process it, but after some effort I figured out how to compile it. The application lets the user choose what to do with the image, including rotating it, which is what I am thinking of parallelizing. There are other options that could be interesting to parallelize as well. I'll see how it goes.

Since the application was designed to prompt the user for options, I had to modify the code to be able to profile it. I hard-coded the file name of the image and preselected the option "rotate the image".

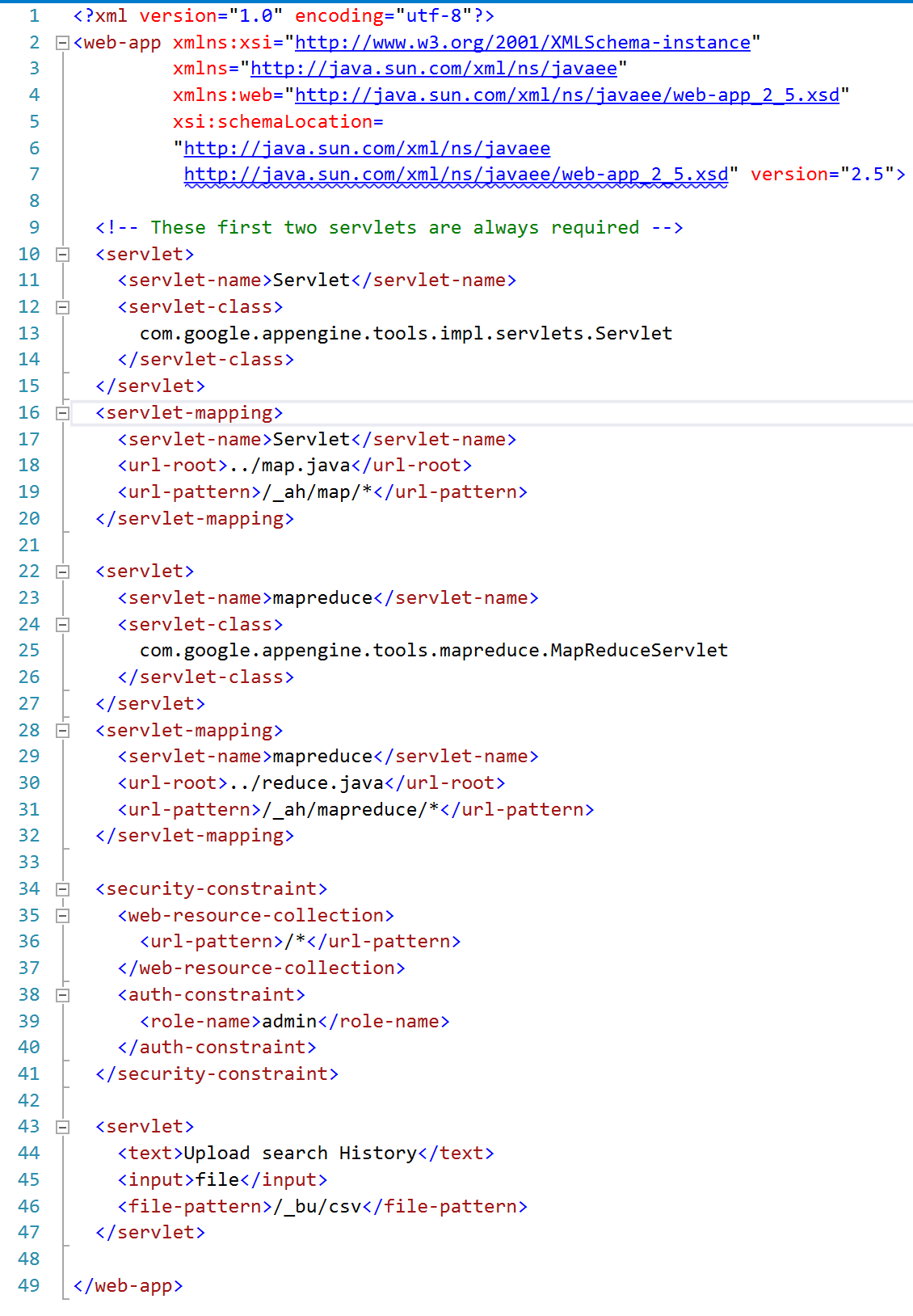

Example of profiling with a PGM image of size 35 MB

As you can see, most of the time is spent rotating the image. Overall it still does not take as long as I expected, so I'll try a bigger picture. Either way, it is interesting to try to reduce even this time.

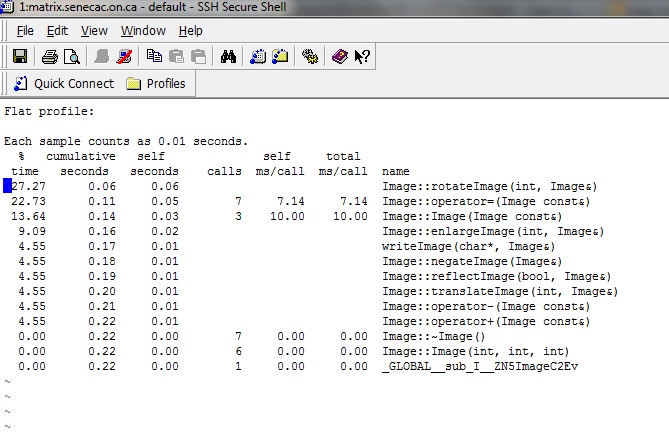

Example of profiling with a PGM image of size 1.8 MB and with different options

I decided to enable all the options when processing the file: rotate, translate, shrink, enlarge, reflect, mean grey. I discovered that the program aborts after a while. After trying different images, I realized that image size matters: the program can't do everything with a big image of around 30 MB, but it works fine with smaller images. The screenshot above is a profile using a 1.8 MB image. The screenshot below this text uses a 4 MB image.

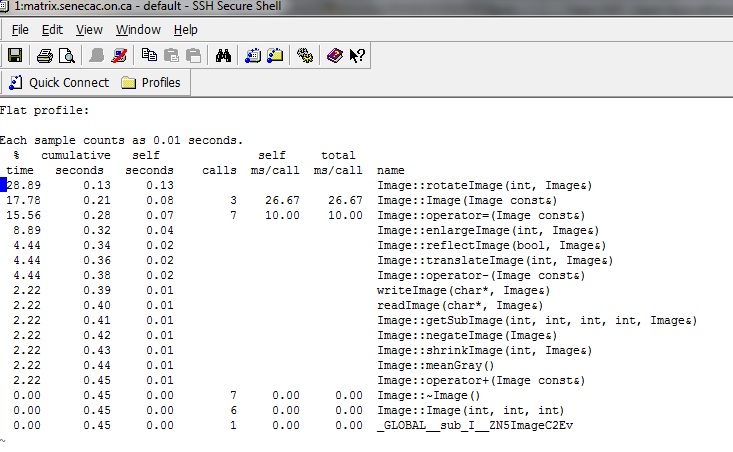

Example of profiling with a PGM image of size 4 MB and with different options

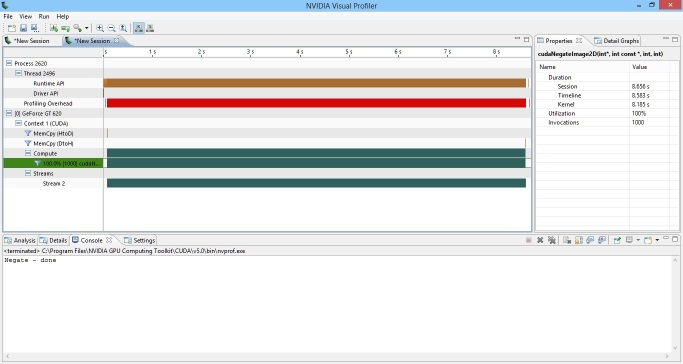

Assignment 2

I am not sure whether we are doing this as team work or individually, but since I did not hear anything from my teammate, I decided to continue with my first assignment. I ran into many difficulties adapting the existing C++ code to move some computations to the GPU. In my first assignment I perform different manipulations on the image, and Image is a class. This is one of the reasons for the delay with my second assignment: apparently I can't pass a class to the kernel, because the kernel accepts only variables of simple types. So I spent all that time trying different approaches to parallelizing my code. I even bought a new computer with a CUDA-compatible GPU card to be able to spend more time on the tasks. Finally, I found that the method image.negate() (not image rotation) would be easy to compute in a kernel. I decided to negate the image 1000 times so that parallelizing the code would make sense. I rewrote some code from my first assignment and redid its profile (1000 negate operations). It took around 13 seconds on Linux. Then I profiled my code with the kernel computations in the CUDA profiler, and it took around 8 seconds. So here is my new profile for assignment 1:

The code for the negate method is here:

void Image::negateImage(Image& oldImage)
/* negates image */
{
    int rows, cols, gray;
    rows = N;
    cols = M;
    gray = Q;

    Image tempImage(N, M, Q);

    // repeat the negate 1000 times so there is enough work to profile
    for (int iter = 0; iter < 1000; iter++) {
        for (int i = 0; i < rows; i++)
        {
            for (int j = 0; j < cols; j++)
                tempImage.pixelVal[i][j] = -(pixelVal[i][j]) + 255;
        }
    }

    oldImage = tempImage;
}

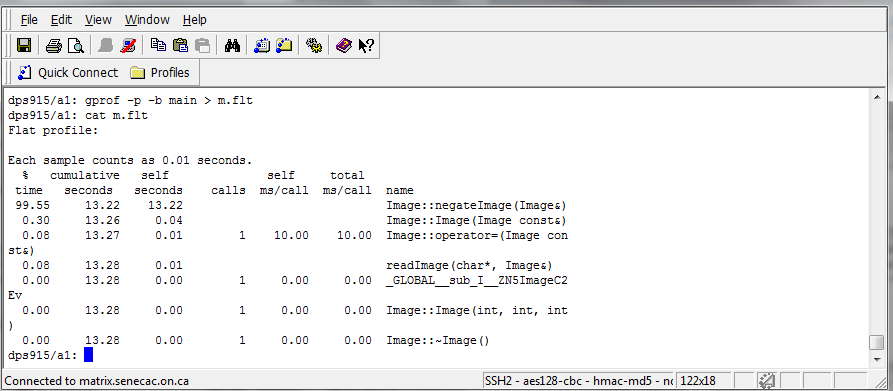
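Since the kernel cannot take the Image class itself, the pixel data has to reach the GPU as a plain array. The following is only a minimal sketch of that step, assuming `pixelVal` is stored as an array of row pointers (inferred from the `pixelVal[i][j]` access in the negate method, not confirmed by the original code). The helper names `flattenPixels` and `unflattenPixels` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: copy a rows x cols image held as row pointers into one
// contiguous row-major buffer, which is the form a kernel argument can point to.
std::vector<int> flattenPixels(int* const* pixelVal, int rows, int cols) {
    assert(rows >= 0 && cols >= 0);
    std::vector<int> flat(static_cast<std::size_t>(rows) * cols);
    for (int i = 0; i < rows; ++i)
        for (int j = 0; j < cols; ++j)
            flat[static_cast<std::size_t>(i) * cols + j] = pixelVal[i][j];
    return flat;
}

// Hypothetical inverse: write a flat row-major buffer back into 2D storage,
// for example after the kernel result has been copied back to the host.
void unflattenPixels(const std::vector<int>& flat, int** pixelVal,
                     int rows, int cols) {
    assert(flat.size() == static_cast<std::size_t>(rows) * cols);
    for (int i = 0; i < rows; ++i)
        for (int j = 0; j < cols; ++j)
            pixelVal[i][j] = flat[static_cast<std::size_t>(i) * cols + j];
}
```

The flat buffer returned by `flattenPixels` could then be copied to device memory with `cudaMemcpy`, and only the raw `int*` pointer plus the two dimensions passed to the kernel.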

And this is a screenshot of my CUDA profile:

The code for the kernel is:

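__global__ void cudaNegateImage2D(int *result, const int *work, int ni, int nj)
{
    // global 2D position of this thread
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    int j = blockIdx.y * blockDim.y + threadIdx.y;

    // skip threads that fall outside the image
    if (i < ni && j < nj) {
        // negate the pixel at flat index i * nj + j
        result[i * nj + j] = -(work[i * nj + j]) + 255;
    }
}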

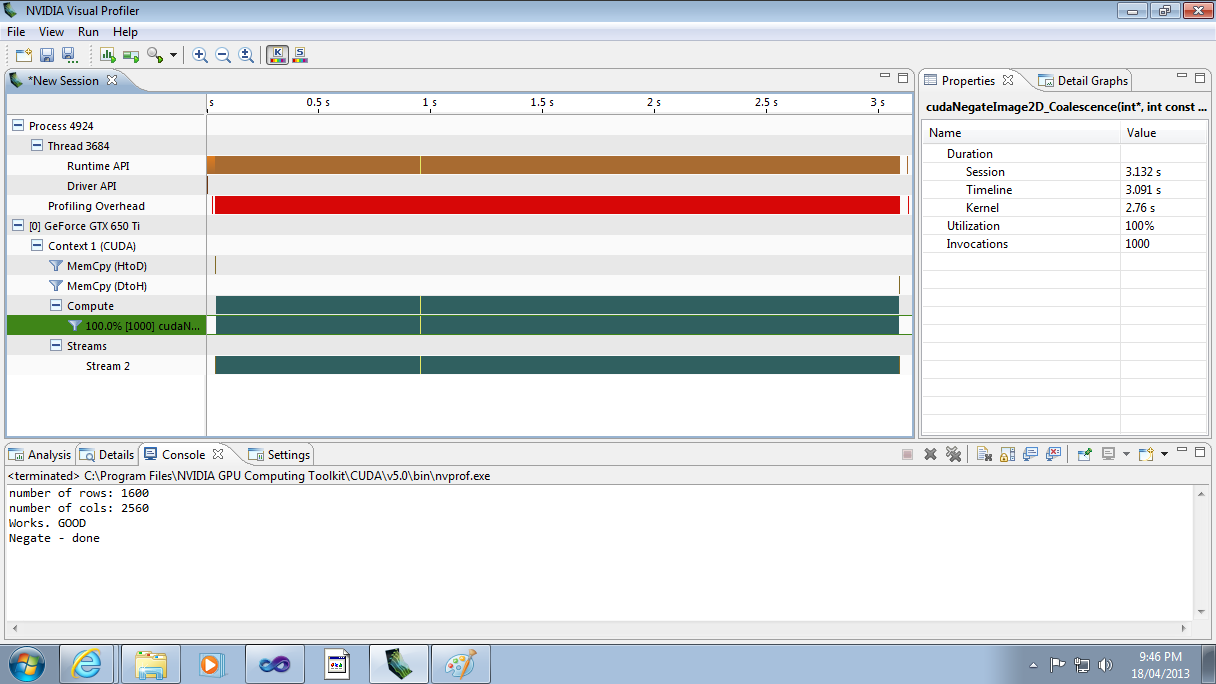
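The launch configuration for this kernel is not shown, so here is a rough sketch of how the 2D thread indices and the `if (i < ni && j < nj)` guard work together. It emulates the launch on the CPU in plain C++. The names `blocksFor` and `emulateNegateLaunch`, and the square block of `ntpb` x `ntpb` threads, are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Blocks needed to cover n elements with ntpb threads per block (rounds up).
int blocksFor(int n, int ntpb) {
    assert(n >= 0 && ntpb > 0);
    return (n + ntpb - 1) / ntpb;
}

// CPU emulation of a 2D launch using the same index arithmetic and guard as
// the kernel above. Optionally reports how many threads the guard skipped.
std::vector<int> emulateNegateLaunch(const std::vector<int>& work, int ni, int nj,
                                     int ntpb, int* skipped = nullptr) {
    assert(work.size() == static_cast<std::size_t>(ni) * nj);
    std::vector<int> result(work.size(), 0);
    int outOfRange = 0;
    const int gridX = blocksFor(ni, ntpb);
    const int gridY = blocksFor(nj, ntpb);
    for (int by = 0; by < gridY; ++by)
        for (int bx = 0; bx < gridX; ++bx)
            for (int ty = 0; ty < ntpb; ++ty)
                for (int tx = 0; tx < ntpb; ++tx) {
                    int i = bx * ntpb + tx;  // blockIdx.x * blockDim.x + threadIdx.x
                    int j = by * ntpb + ty;  // blockIdx.y * blockDim.y + threadIdx.y
                    if (i < ni && j < nj) {
                        std::size_t idx = static_cast<std::size_t>(i) * nj + j;
                        result[idx] = -work[idx] + 255;
                    } else {
                        ++outOfRange;  // thread launched but outside the image
                    }
                }
    if (skipped) *skipped = outOfRange;
    return result;
}
```

Because the grid is rounded up to whole blocks, some launched threads fall outside the image whenever its dimensions are not multiples of the block size. The guard is what keeps those threads from touching memory beyond the buffers.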

Assignment 3

I then realized that I did not use shared memory in assignment 2, so I changed that: in assignment 3 I am using shared memory. As in assignment 2, I negate the image 1000 times. The last lectures of this course helped a lot in learning how to optimize, especially coalesced access. It is amazing! In assignment 2 my negate function became almost twice as fast just because I was using the kernel, and I thought there was nothing more to be done. Now, thanks to the optimization, my negate function is more than twice as fast as in assignment 2. This is my profile for assignment 3:

The code for the kernel is here:

__global__ void cudaNegateImage2D_Coalescence(int *result, const int *work, int ni, int nj)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    int j = blockIdx.y * blockDim.y + threadIdx.y;
    // only threads inside the image may touch global memory
    bool inside = (i < ni && j < nj);

    // per-block staging buffers in shared memory; NTPB is defined elsewhere
    __shared__ int cache_a[NTPB][NTPB];
    __shared__ int cache_b[NTPB][NTPB];

    // neighbouring threadIdx.x values read neighbouring addresses (coalesced)
    if (inside)
        cache_a[threadIdx.y][threadIdx.x] = work[j * ni + i];
    __syncthreads();

    if (inside) {
        cache_b[threadIdx.y][threadIdx.x] = -cache_a[threadIdx.y][threadIdx.x] + 255;
    }
    __syncthreads();

    // coalesced write of the negated pixel
    if (inside)
        result[j * ni + i] = cache_b[threadIdx.y][threadIdx.x];
}
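To illustrate the coalesced access mentioned above, the sketch below compares the flat index used by the Assignment 2 kernel (`i * nj + j`) with the one used here (`j * ni + i`). Threads in a warp have consecutive `threadIdx.x` values, so what matters is how far apart the addresses of two threads with neighbouring `i` are. The function names are hypothetical and the code runs on the CPU only.

```cpp
#include <cassert>

// Flat index as computed in the Assignment 2 kernel.
long indexAssignment2(int i, int j, int ni, int nj) {
    (void)ni;  // unused in this formula
    return static_cast<long>(i) * nj + j;
}

// Flat index as computed in the Assignment 3 kernel.
long indexAssignment3(int i, int j, int ni, int nj) {
    (void)nj;  // unused in this formula
    return static_cast<long>(j) * ni + i;
}

// Distance, in elements, between the addresses touched by two threads whose
// x index differs by one. A stride of 1 means neighbouring threads touch
// neighbouring elements, which is the coalesced pattern.
long neighbourStride(long (*indexOf)(int, int, int, int), int ni, int nj) {
    assert(ni > 1 && nj > 0);
    return indexOf(1, 0, ni, nj) - indexOf(0, 0, ni, nj);
}
```

For a 1024 x 768 image, `neighbourStride(indexAssignment2, 1024, 768)` gives 768 while `neighbourStride(indexAssignment3, 1024, 768)` gives 1. That difference in access pattern likely accounts for a good part of the speedup, possibly more than the shared-memory staging does.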

It is an amazing course with very educational assignments.