Difference between revisions of "GPU621/Analyzing False Sharing"

Ryan Leong (talk | contribs) |

Ryan Leong (talk | contribs) (→Use local variables for each thread) |

||

| (68 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

=Group Members= | =Group Members= | ||

| − | |||

# [mailto:rleong4@myseneca.ca?subject=GPU621 Ryan Leong] | # [mailto:rleong4@myseneca.ca?subject=GPU621 Ryan Leong] | ||

# [mailto:yppadsala@myseneca.ca?subject=GPU621 Yash Padsala] | # [mailto:yppadsala@myseneca.ca?subject=GPU621 Yash Padsala] | ||

| Line 6: | Line 5: | ||

= '''Preface''' = | = '''Preface''' = | ||

| − | + | In multicore concurrent programming, if the mutual exclusion is a "performance killer", then false sharing is a worthy "performance assassin". The difference between "killer" and "assassin" is that a "killer" can be easily detected, and we always have many ways to deal with a "killer" when we fight it, such as fighting, running away, detouring, and begging for mercy, but an "assassin" is completely different. Assassins are good at hiding in the shadows and can give a fatal blow at any time, making people defensive. | |

| − | In multicore concurrent programming, if | ||

| − | + | In our concurrent development, when we encounter situations that affect concurrency performance, we always have many ways to find and improve the concurrency performance of the program. But false sharing leaves no trace in our code that it is in the "dark" and is seriously slowing down concurrency performance, making it hard to find the root cause of the problem, let alone improve concurrency performance. | |

| − | |||

| − | |||

| − | + | = '''What you need to know before understanding false sharing''' = | |

| + | == Cache == | ||

| + | Basically, the cache is a location where data is stored for CPU use. But there are many types of memory for the CPU. Ideally, CPU can not access every data with lightning speed. So, let's talk about it in brief. In our PC and laptops, we have hard drives and SSDs which are slow in speed, but they can store big data. So, when the CPU tries to access this data it will take some time, so this process takes more computational time. | ||

| + | [[File:cache.jpg|right|400px]]<br /> | ||

| + | Moreover, DRMS is quite expensive. However, it is fast but still, DRMS is not enough for CPUs. DRMS works on electricity. Hence if power is cut that time data is not accessible. Furthermore, SRAM is very fast and smaller but expensive. SRAM memory used in cache. So, the first CPU finds data from the cache and if data is not in the cache, then the CPU starts to find it in the main memory and tries until it finds that data. So, this data transfers into the cache. This transfer works in two ways. The first way is that the same data is transferred into the cache for a short period of time and this is known as temporal locality. Another one is a spatial locality and this method also accessed nearby locations if it will be needed. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | == Cache Coherence == | ||

| + | [[File:cacheCoherence.jpg|center|800px]]<br /> | ||

| + | When the CPU accesses the data at that time data goes into the cache and this memory block is known as the cache line. But it is not possible that modify the original data when changes in the cache line. Here cache coherence helps that stored in multiple local caches. Cache coherence connects all cache. | ||

| + | == MESI == | ||

| + | First, we know that the cache line has four different states. Like, exclusive, shared, modified, and invalid. So MESI is an acronym for all four states. To understand it well we throw light on this example. | ||

| + | [[File:MESI1.jpg|400px]]<br /> | ||

| − | = | + | This picture shows that the core A read value a from the main memory. So this core also fetches some nearby values from the main memory and stores these values in the cache line and makes it exclusive because only core A operates this cache line. |

| − | When a | + | |

| + | [[File:MESI2.jpg|400px]]<br /> | ||

| + | |||

| + | Later, core B also reads the value of b from memory. Now, a and b both read the same cache line and close so, both cores are shared. | ||

| + | |||

| + | [[File:MESI3.jpg|400px]]<br /> | ||

| + | |||

| + | Moreover, core A change the value of a so, this change is stored in the buffer and cache line tagged as modified. In the end, it communicates with core B and is known as an invalid cache line. | ||

| + | |||

| + | = '''What is False Sharing?''' = | ||

| + | False Sharing is sharing pattern that happens when multiple applications run and share the same memory. When more than one thread read or updates the same data in logical memory it ends up in the same catch line. Each application’s cache is a copy of a common source. So, when we modify one cache it will cause others to reload from the common source. In other words, when one program makes a change in one cache and it does not change the data which is used by the second program, this also forces another cache to reload so, in this scenario reload cache is a useless system resource and it may create a negative impact on the performance of the program. It is not easy to catch false sharing and stop it. However, there are some ways which help us to overcome false sharing. | ||

| + | |||

| + | The foremost reason why false sharing happens can be found in how operating systems read and write data. When a program reads or writes data from the hard drive or other sources at that time this data loads into a temporary cache. Because it is a very fast way to access it. This knows as a cache line. | ||

| + | |||

| + | = '''False sharing example''' = | ||

| + | #include <thread> | ||

| + | #include <vector> | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | using namespace std; | ||

| + | const int sizeOfNumbers = 10000; // Size of vector numbers | ||

| + | const int sizeOfSum = 2; // Size of vector sum | ||

| + | void sumUp(const vector<int> numbers, vector<long>& sum, int id) { | ||

| + | for (int i = 0; i < numbers.size(); i++) { | ||

| + | if (i % sizeOfSum == id) { | ||

| + | sum[id] += i; | ||

| + | } | ||

| + | } | ||

| + | // The output of this sum | ||

| + | cout << "sum " << id << " = " << sum[id] << endl; | ||

| + | } | ||

| + | int main() { | ||

| + | vector<int> numbers; | ||

| + | for (int i = 0; i < sizeOfNumbers; i++) { //Initialize vector numbers to 0 to 100 | ||

| + | numbers.push_back(i); | ||

| + | } | ||

| + | cout << "-----Thread-----" << endl; | ||

| + | // Thread block | ||

| + | { | ||

| + | vector<long> sum(sizeOfSum, 0); //Set size=sizeOfSum and all to 0 | ||

| + | auto start = chrono::steady_clock::now(); | ||

| + | vector<thread> thread; | ||

| + | for (int i = 0; i < sizeOfSum; i++) { | ||

| + | thread.emplace_back(sumUp, numbers, ref(sum), i); | ||

| + | } | ||

| + | for (int i = 0; i < sizeOfSum; i++) { | ||

| + | thread[i].join(); | ||

| + | } | ||

| + | auto end = chrono::steady_clock::now(); | ||

| + | cout << "Thread time consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl; | ||

| + | } | ||

| + | cout << endl << "-----Serial-----" << endl; | ||

| + | // Serial block | ||

| + | { | ||

| + | vector<long> sum(sizeOfSum, 0); | ||

| + | auto start = chrono::steady_clock::now(); | ||

| + | for (int i = 0; i < sizeOfSum; i++) { | ||

| + | sumUp(numbers, sum, i); | ||

| + | } | ||

| + | auto end = chrono::steady_clock::now(); | ||

| + | cout << "Serial time consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl; | ||

| + | } | ||

| + | } | ||

| + | |||

| + | In this program code, we can see that at the beginning of the main function we declare a vector numbers and initialize it from 0 to 10000. We can also see in the main function that the code is divided into two main blocks. The first block is the Thread block, which is executed concurrently using multiple threads. While the second block is the Serial block, it is executed concurrently using normal serial logic. | ||

| + | |||

| + | The main purpose of the sumUp function is to calculate the sum of odd elements or even elements based on the data in the first vector argument in the argument list. Also, the sum will be recorded in the corresponding position of the second vector argument using the int argument as the index. | ||

| + | |||

| + | Which block of code do you think will take less time? | ||

| + | |||

| + | Theoretically, this code should be executed faster on a multicore machine with a Thread block than a serial block. But the result is. | ||

| + | |||

| + | [[File:exampleOutput1.jpg|400px]]<br /> | ||

| + | |||

| + | Or | ||

| + | |||

| + | [[File:exampleOutput2.jpg|400px]]<br /> | ||

| + | |||

| + | Or this | ||

| + | |||

| + | [[File:exampleOutput3.jpg|400px]]<br /> | ||

| + | |||

| + | To our surprise, the serial block took much less time, no matter how many times I ran it. This turned our existing knowledge upside down, but don't worry, it's because you don't understand False Sharing yet. | ||

| + | |||

| + | = '''Solutions Of False Sharing''' = | ||

| + | == Use local variables for each thread == | ||

| + | |||

| + | As we said above, the smallest unit of CPU operation on the cache is the size of a cache line, which is 64 bytes. As you can see in our program code, the sum is a vector that stores two data with long type. The two long data are in fact located on the same cache line. When two threads read and write sum[0] and sum[1] separately, it looks like they are not using the same variable, but in fact, they are affecting each other.For example, if a thread updates sum[0] in CPU0, this causes the cache line of sum[1] in CPU1 to be invalidated. This causes the program to take longer to run, as it needs to re-read the data. | ||

| + | |||

| + | We try to change the sizeOfSum to 8, which means that changing the size of the vector sum to 8 and the if condition in the sumUp function to i%8==id will give the program more data to process. | ||

| + | |||

| + | [[File:sizeOfSum8.jpg|400px]]<br /> | ||

| + | |||

| + | Nevertheless, the result still does not bring the time between the Serial block and Thread block closer and the Serial block still takes less time. | ||

| + | |||

| + | What would it take to show the advantages of multicore? As you can see in our program, multiple threads are not operating on the same variable, but only a single thread is reading and writing a lot of variables in the same cache line, so you can introduce a local variable. | ||

| + | |||

| + | We implement another function newSumUp in the program code: | ||

| + | |||

| + | void newSumUP(const vector<int> numbers, vector<long>& sum, int id) { | ||

| + | long thisSum = 0; | ||

| + | for (int i = 0; i < numbers.size(); ++i) { | ||

| + | if (i % sizeOfSum == id) { | ||

| + | thisSum += i; | ||

| + | } | ||

| + | } | ||

| + | sum[id] = thisSum; | ||

| + | cout << "sum " << id << " = " << sum[id] << endl; | ||

| + | } | ||

| + | |||

| + | Also, we add the following code to our main function: | ||

| + | |||

| + | cout << endl << "----Local variable block---" << endl; | ||

| + | // Local variable block | ||

| + | { | ||

| + | vector<long> sum(sizeOfSum, 0); | ||

| + | auto start = chrono::steady_clock::now(); | ||

| + | vector<thread> thread; | ||

| + | for (int i = 0; i < sizeOfSum; ++i) { | ||

| + | thread.emplace_back(newSumUP, numbers, ref(sum), i); | ||

| + | } | ||

| + | for (int i = 0; i < sizeOfSum; ++i) { | ||

| + | thread[i].join(); | ||

| + | } | ||

| + | auto end = chrono::steady_clock::now(); | ||

| + | cout << "Local variable block consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl; | ||

| + | } | ||

| + | |||

| + | When we ran the program again, we came to this conclusion: | ||

| + | |||

| + | [[File:newBlockOutput(2).jpg|400px]]<br /> | ||

| + | |||

| + | We can see that the Local variable block is already much faster than the Thread block, and even comparable to the Serial block is almost the same. But overall the Serial block is still the least time-consuming because the Local variable block still needs to incur extra overhead for thread creation and scheduling. | ||

| + | |||

| + | We could try increasing sizeOfNumbers to 1000000 as well, which would allow the program to process more data, thus compensating for the extra overhead of thread creation and scheduling. | ||

| + | |||

| + | [[File:newBlockOutput1000000(new).jpg|400px]]<br /> | ||

| + | |||

| + | Now we can already see the advantage of multi-threading. Even when the vector numbers reach the size of 1000000, the Thread block even runs faster than the Serial block. | ||

| + | |||

| + | So the problem of false sharing sometimes cannot be generalized, and the occurrence of false sharing does not necessarily make performance lower. But no matter how the Local variable block is the optimal solution. | ||

| + | |||

| + | == Byte alignment == | ||

| + | |||

| + | If you think the above example is too limiting, not all cases can be abstracted to such a local variable. So let's let the two variables exist on different cache lines! | ||

| + | |||

| + | #include <thread> | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | using namespace std; | ||

| + | struct Number { | ||

| + | int num1; | ||

| + | int num2; | ||

| + | }; | ||

| + | void increment1(Number& number) { | ||

| + | for (int i = 0; i < 1000000; ++i) { | ||

| + | number.num1++; | ||

| + | } | ||

| + | cout << "number.num1:" << number.num1 << endl; | ||

| + | } | ||

| + | void increment2(Number& number) { | ||

| + | for (int i = 0; i < 1000000; ++i) { | ||

| + | number.num2++; | ||

| + | } | ||

| + | cout << "number.num2:" << number.num2 << endl; | ||

| + | } | ||

| + | int main() { | ||

| + | Number number = { 0, 0 }; | ||

| + | auto start = chrono::steady_clock::now(); | ||

| + | thread thread1(increment1, ref(number)); | ||

| + | thread thread2(increment2, ref(number)); | ||

| + | thread1.join(); | ||

| + | thread2.join(); | ||

| + | auto end = chrono::steady_clock::now(); | ||

| + | cout << "Consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl; | ||

| + | } | ||

| + | |||

| + | In this program code, we can easily see that the Number struct has two member variables (num1 and num2). They are for loop in two different threads to execute the increment operator 1000000 times. This time we use Linux with multiple cores to compile (note that here we are not using any optimization). | ||

| + | |||

| + | g++ -std=c++11 -pthread false_sharing.cpp | ||

| + | |||

| + | This is the result after running: | ||

| + | |||

| + | [[File:LinuxOutput.jpg|400px]]<br /> | ||

| + | |||

| + | [[File:LinuxOutput2.jpg|400px]]<br /> | ||

| + | |||

| + | [[File:LinuxOutput3.jpg|400px]]<br /> | ||

| + | |||

| + | We can see from this result that the runtime is actually unstable and takes a long time. | ||

| + | |||

| + | Although the fields within struct have their own default byte alignment, they are generally only aligned to the word length. In this code example, that is, 8-byte alignment, which does not result in num1 and num2 being in separate cache lines. So we need to use the gcc extension syntax to align the program to 64 bytes. | ||

| + | |||

| + | We can define a macro at the beginning of the program code to use the gcc attribute function. | ||

| + | |||

| + | #define ALIGN __attribute__((aligned(64))) | ||

| + | |||

| + | We also need to redefine the Number struct: | ||

| + | |||

| + | struct Number { | ||

| + | int ALIGN num1; | ||

| + | int ALIGN num2; | ||

| + | }; | ||

| + | |||

| + | This allows a member variable with a macro name added to the Number struct to be 64 bytes.If you don't believe me you can try to test Number with sizeof, the result is 128 bytes. But you can see that sizeof num1 and num2 are still 4 bytes respectively, that's because the padded bytes are not counted in the field. | ||

| + | |||

| + | |||

| + | [[File:testBytes.jpg|400px]]<br /> | ||

| + | |||

| + | ''Here is a reminder: not all machines are 64 bytes. If your CPU is a three-level cache, you should look at the size of the L3 cache line, not the L1! If your CPU does not have L3, then it will be based on L2.'' | ||

| + | |||

| + | When we execute the program code again after the bytes are aligned, we get this result: | ||

| + | |||

| + | [[File:LinuxOutput4.jpg|400px]]<br /> | ||

| + | |||

| + | [[File:LinuxOutput5.jpg|400px]]<br /> | ||

| + | |||

| + | [[File:LinuxOutput6.jpg|400px]]<br /> | ||

| + | |||

| + | We can notice that the time taken for multiple executions is significantly more stable and shorter than before. | ||

| + | |||

| + | Let's take another look at what happens when O2 is turned on. | ||

| + | |||

| + | g++ -std=c++11 -pthread -O2 false_sharing.cpp | ||

| + | |||

| + | Output('''with''' byte alignment): | ||

| + | |||

| + | [[File:with1.jpg|400px]]<br /> | ||

| + | |||

| + | [[File:with2.jpg|400px]]<br /> | ||

| + | |||

| + | Output('''without''' byte alignment): | ||

| + | |||

| + | [[File:without1.jpg|400px]]<br /> | ||

| + | |||

| + | [[File:without2.jpg|400px]]<br /> | ||

| + | |||

| + | After running it, I found that the difference between the runtime with and without byte alignment is very small and there is no instability, the runtime is around 200ms. | ||

| + | |||

| + | But the unexpected thing is that when I execute increment1() and increment2() in serial code only, it only consumes 16ms! | ||

| + | |||

| + | [[File:mySerial.jpg|400px]]<br /> | ||

| + | |||

| + | Have you learned? The so-called false sharing can make performance worse in fact this cannot be generalized. We can see that with O2 on, the CPU and compiler are able to optimize the serial version of the code very well, while the multi-threaded code is not well optimized, which leads to higher performance of the serial version of the code. Even using a byte-aligned cache line size does not help. In the first example, we increased the number of vector numbers, which caused the serial code to slow down significantly. The differences in the two examples are related to the specific execution logic. | ||

| + | |||

| + | So don't treat false sharing as a beast, and don't treat 64-byte alignment as a panacea. Everything has to be actually tested to know. Don't throw out the conclusion under O0 and then take it as a guideline, that makes no sense ...... | ||

| + | |||

| + | = '''Conclusion''' = | ||

| + | In conclusion, the term false sharing refers to the use of shared memory by multiple threads at the same time. A multiprocessor environment decreases performance. Shared data is modified by multiple processors simultaneously. This is a very common occurrence in the loop. In many cases, false sharing can be overlooked, resulting in a program's inability to scale. In parallel programming, where performance is fundamental, keeping an eye out for problems and recognizing them quickly is essential. | ||

| + | |||

| + | Finally, we discussed some solutions to minimize false sharing by making as much private data as possible to reduce how often shared data needs to be updated. Making use of the compiler's optimization features to reduce memory loads and stores. Also, we can improve performance by byte alignment. | ||

| + | |||

| + | = References = | ||

| + | https://www.easytechjunkie.com/what-is-false-sharing.htm | ||

| + | |||

| + | https://levelup.gitconnected.com/false-sharing-the-lesser-known-performance-killer-bbb6c1354f07 | ||

| + | |||

| + | https://wiki.cdot.senecacollege.ca/wiki/GPU621/False_Sharing | ||

| + | |||

| + | https://www.baeldung.com/java-false-sharing-contended#:~:text=In%20the%20MESI%20protocol%2C%20each,the%20acronym%20of%20these%20states. | ||

| + | |||

| + | https://learn.microsoft.com/en-us/archive/msdn-magazine/2008/october/net-matters-false-sharing | ||

| + | |||

| + | http://www.nic.uoregon.edu/~khuck/ts/acumem-report/manual_html/multithreading_problems.html | ||

Latest revision as of 14:14, 4 December 2022

Contents

Group Members

Preface

In multicore concurrent programming, if the mutual exclusion is a "performance killer", then false sharing is a worthy "performance assassin". The difference between "killer" and "assassin" is that a "killer" can be easily detected, and we always have many ways to deal with a "killer" when we fight it, such as fighting, running away, detouring, and begging for mercy, but an "assassin" is completely different. Assassins are good at hiding in the shadows and can give a fatal blow at any time, making people defensive.

In our concurrent development, when we encounter situations that affect concurrency performance, we always have many ways to find and improve the concurrency performance of the program. But false sharing leaves no trace in our code that it is in the "dark" and is seriously slowing down concurrency performance, making it hard to find the root cause of the problem, let alone improve concurrency performance.

What you need to know before understanding false sharing

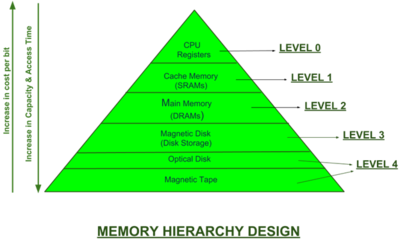

Cache

Basically, the cache is a location where data is stored for CPU use. But there are many types of memory for the CPU. Ideally, CPU can not access every data with lightning speed. So, let's talk about it in brief. In our PC and laptops, we have hard drives and SSDs which are slow in speed, but they can store big data. So, when the CPU tries to access this data it will take some time, so this process takes more computational time.

Moreover, DRMS is quite expensive. However, it is fast but still, DRMS is not enough for CPUs. DRMS works on electricity. Hence if power is cut that time data is not accessible. Furthermore, SRAM is very fast and smaller but expensive. SRAM memory used in cache. So, the first CPU finds data from the cache and if data is not in the cache, then the CPU starts to find it in the main memory and tries until it finds that data. So, this data transfers into the cache. This transfer works in two ways. The first way is that the same data is transferred into the cache for a short period of time and this is known as temporal locality. Another one is a spatial locality and this method also accessed nearby locations if it will be needed.

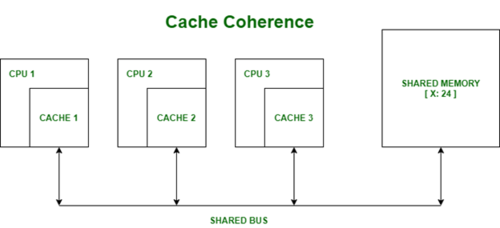

Cache Coherence

When the CPU accesses the data at that time data goes into the cache and this memory block is known as the cache line. But it is not possible that modify the original data when changes in the cache line. Here cache coherence helps that stored in multiple local caches. Cache coherence connects all cache.

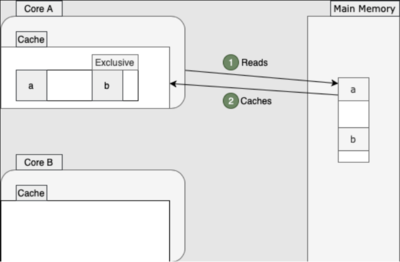

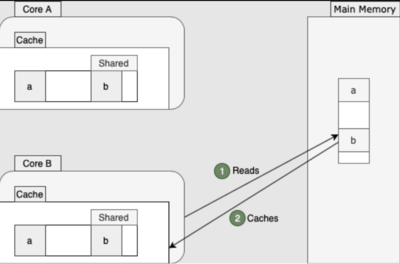

MESI

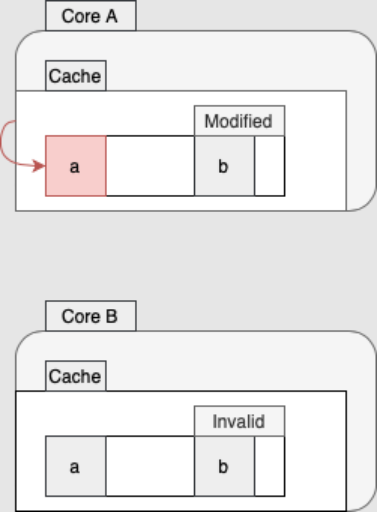

First, we know that the cache line has four different states. Like, exclusive, shared, modified, and invalid. So MESI is an acronym for all four states. To understand it well we throw light on this example.

This picture shows that the core A read value a from the main memory. So this core also fetches some nearby values from the main memory and stores these values in the cache line and makes it exclusive because only core A operates this cache line.

Later, core B also reads the value of b from memory. Now, a and b both read the same cache line and close so, both cores are shared.

Moreover, core A change the value of a so, this change is stored in the buffer and cache line tagged as modified. In the end, it communicates with core B and is known as an invalid cache line.

What is False Sharing?

False Sharing is sharing pattern that happens when multiple applications run and share the same memory. When more than one thread read or updates the same data in logical memory it ends up in the same catch line. Each application’s cache is a copy of a common source. So, when we modify one cache it will cause others to reload from the common source. In other words, when one program makes a change in one cache and it does not change the data which is used by the second program, this also forces another cache to reload so, in this scenario reload cache is a useless system resource and it may create a negative impact on the performance of the program. It is not easy to catch false sharing and stop it. However, there are some ways which help us to overcome false sharing.

The foremost reason why false sharing happens can be found in how operating systems read and write data. When a program reads or writes data from the hard drive or other sources at that time this data loads into a temporary cache. Because it is a very fast way to access it. This knows as a cache line.

False sharing example

#include <thread>

#include <vector>

#include <iostream>

#include <chrono>

using namespace std;

const int sizeOfNumbers = 10000; // Size of vector numbers

const int sizeOfSum = 2; // Size of vector sum

void sumUp(const vector<int> numbers, vector<long>& sum, int id) {

for (int i = 0; i < numbers.size(); i++) {

if (i % sizeOfSum == id) {

sum[id] += i;

}

}

// The output of this sum

cout << "sum " << id << " = " << sum[id] << endl;

}

int main() {

vector<int> numbers;

for (int i = 0; i < sizeOfNumbers; i++) { //Initialize vector numbers to 0 to 100

numbers.push_back(i);

}

cout << "-----Thread-----" << endl;

// Thread block

{

vector<long> sum(sizeOfSum, 0); //Set size=sizeOfSum and all to 0

auto start = chrono::steady_clock::now();

vector<thread> thread;

for (int i = 0; i < sizeOfSum; i++) {

thread.emplace_back(sumUp, numbers, ref(sum), i);

}

for (int i = 0; i < sizeOfSum; i++) {

thread[i].join();

}

auto end = chrono::steady_clock::now();

cout << "Thread time consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl;

}

cout << endl << "-----Serial-----" << endl;

// Serial block

{

vector<long> sum(sizeOfSum, 0);

auto start = chrono::steady_clock::now();

for (int i = 0; i < sizeOfSum; i++) {

sumUp(numbers, sum, i);

}

auto end = chrono::steady_clock::now();

cout << "Serial time consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl;

}

}

In this program code, we can see that at the beginning of the main function we declare a vector numbers and initialize it from 0 to 10000. We can also see in the main function that the code is divided into two main blocks. The first block is the Thread block, which is executed concurrently using multiple threads. While the second block is the Serial block, it is executed concurrently using normal serial logic.

The main purpose of the sumUp function is to calculate the sum of odd elements or even elements based on the data in the first vector argument in the argument list. Also, the sum will be recorded in the corresponding position of the second vector argument using the int argument as the index.

Which block of code do you think will take less time?

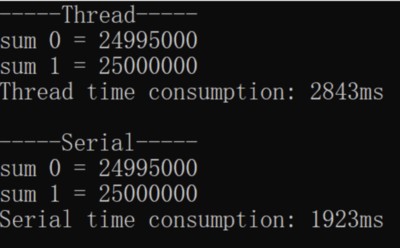

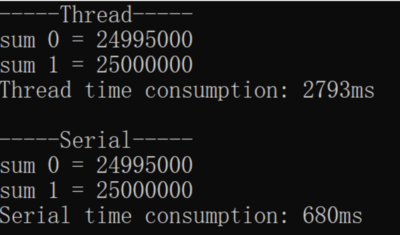

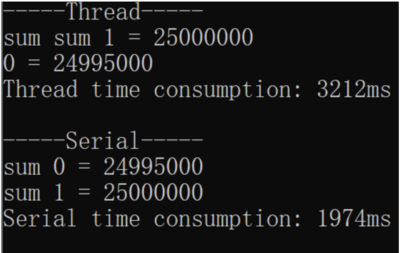

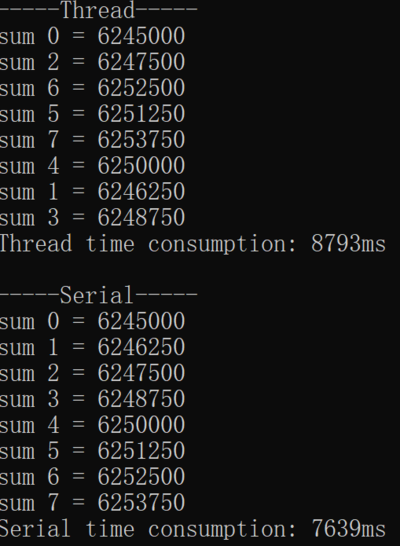

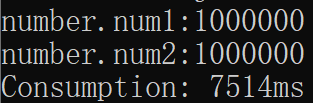

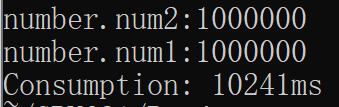

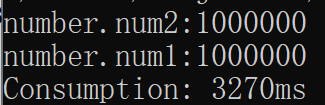

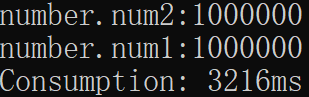

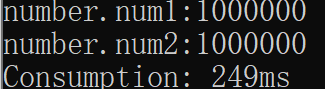

Theoretically, this code should be executed faster on a multicore machine with a Thread block than a serial block. But the result is.

Or

Or this

To our surprise, the serial block took much less time, no matter how many times I ran it. This turned our existing knowledge upside down, but don't worry, it's because you don't understand False Sharing yet.

Solutions Of False Sharing

Use local variables for each thread

As we said above, the smallest unit of CPU operation on the cache is the size of a cache line, which is 64 bytes. As you can see in our program code, the sum is a vector that stores two data with long type. The two long data are in fact located on the same cache line. When two threads read and write sum[0] and sum[1] separately, it looks like they are not using the same variable, but in fact, they are affecting each other.For example, if a thread updates sum[0] in CPU0, this causes the cache line of sum[1] in CPU1 to be invalidated. This causes the program to take longer to run, as it needs to re-read the data.

We try to change the sizeOfSum to 8, which means that changing the size of the vector sum to 8 and the if condition in the sumUp function to i%8==id will give the program more data to process.

Nevertheless, the result still does not bring the time between the Serial block and Thread block closer and the Serial block still takes less time.

What would it take to show the advantages of multicore? As you can see in our program, multiple threads are not operating on the same variable, but only a single thread is reading and writing a lot of variables in the same cache line, so you can introduce a local variable.

We implement another function newSumUp in the program code:

void newSumUP(const vector<int> numbers, vector<long>& sum, int id) {

long thisSum = 0;

for (int i = 0; i < numbers.size(); ++i) {

if (i % sizeOfSum == id) {

thisSum += i;

}

}

sum[id] = thisSum;

cout << "sum " << id << " = " << sum[id] << endl;

}

Also, we add the following code to our main function:

cout << endl << "----Local variable block---" << endl;

// Local variable block

{

vector<long> sum(sizeOfSum, 0);

auto start = chrono::steady_clock::now();

vector<thread> thread;

for (int i = 0; i < sizeOfSum; ++i) {

thread.emplace_back(newSumUP, numbers, ref(sum), i);

}

for (int i = 0; i < sizeOfSum; ++i) {

thread[i].join();

}

auto end = chrono::steady_clock::now();

cout << "Local variable block consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl;

}

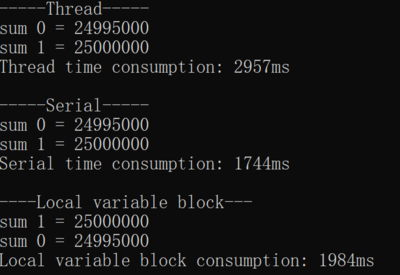

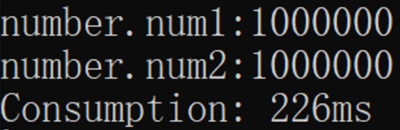

When we ran the program again, we came to this conclusion:

We can see that the Local variable block is already much faster than the Thread block, and even comparable to the Serial block is almost the same. But overall the Serial block is still the least time-consuming because the Local variable block still needs to incur extra overhead for thread creation and scheduling.

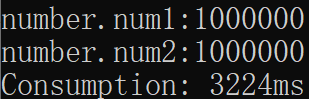

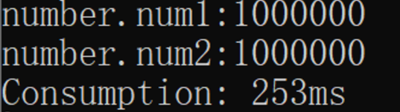

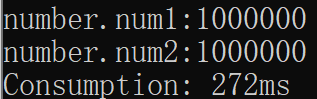

We could try increasing sizeOfNumbers to 1000000 as well, which would allow the program to process more data, thus compensating for the extra overhead of thread creation and scheduling.

Now we can already see the advantage of multi-threading. Even when the vector numbers reach the size of 1000000, the Thread block even runs faster than the Serial block.

So the problem of false sharing sometimes cannot be generalized, and the occurrence of false sharing does not necessarily make performance lower. But no matter how the Local variable block is the optimal solution.

Byte alignment

If you think the above example is too limiting, not all cases can be abstracted to such a local variable. So let's let the two variables exist on different cache lines!

#include <thread>

#include <iostream>

#include <chrono>

using namespace std;

struct Number {

int num1;

int num2;

};

void increment1(Number& number) {

for (int i = 0; i < 1000000; ++i) {

number.num1++;

}

cout << "number.num1:" << number.num1 << endl;

}

void increment2(Number& number) {

for (int i = 0; i < 1000000; ++i) {

number.num2++;

}

cout << "number.num2:" << number.num2 << endl;

}

int main() {

Number number = { 0, 0 };

auto start = chrono::steady_clock::now();

thread thread1(increment1, ref(number));

thread thread2(increment2, ref(number));

thread1.join();

thread2.join();

auto end = chrono::steady_clock::now();

cout << "Consumption: " << chrono::duration_cast<chrono::microseconds>(end - start).count() << "ms" << endl;

}

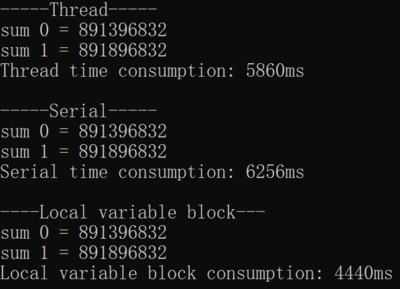

In this program code, we can easily see that the Number struct has two member variables (num1 and num2). They are for loop in two different threads to execute the increment operator 1000000 times. This time we use Linux with multiple cores to compile (note that here we are not using any optimization).

g++ -std=c++11 -pthread false_sharing.cpp

This is the result after running:

We can see from this result that the runtime is actually unstable and takes a long time.

Although the fields within struct have their own default byte alignment, they are generally only aligned to the word length. In this code example, that is, 8-byte alignment, which does not result in num1 and num2 being in separate cache lines. So we need to use the gcc extension syntax to align the program to 64 bytes.

We can define a macro at the beginning of the program code to use the gcc attribute function.

#define ALIGN __attribute__((aligned(64)))

We also need to redefine the Number struct:

struct Number {

int ALIGN num1;

int ALIGN num2;

};

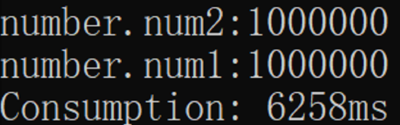

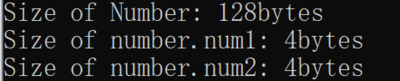

This allows a member variable with a macro name added to the Number struct to be 64 bytes.If you don't believe me you can try to test Number with sizeof, the result is 128 bytes. But you can see that sizeof num1 and num2 are still 4 bytes respectively, that's because the padded bytes are not counted in the field.

Here is a reminder: not all machines are 64 bytes. If your CPU is a three-level cache, you should look at the size of the L3 cache line, not the L1! If your CPU does not have L3, then it will be based on L2.

When we execute the program code again after the bytes are aligned, we get this result:

We can notice that the time taken for multiple executions is significantly more stable and shorter than before.

Let's take another look at what happens when O2 is turned on.

g++ -std=c++11 -pthread -O2 false_sharing.cpp

Output(with byte alignment):

Output(without byte alignment):

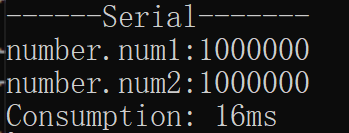

After running it, I found that the difference between the runtime with and without byte alignment is very small and there is no instability, the runtime is around 200ms.

But the unexpected thing is that when I execute increment1() and increment2() in serial code only, it only consumes 16ms!

Have you learned? The so-called false sharing can make performance worse in fact this cannot be generalized. We can see that with O2 on, the CPU and compiler are able to optimize the serial version of the code very well, while the multi-threaded code is not well optimized, which leads to higher performance of the serial version of the code. Even using a byte-aligned cache line size does not help. In the first example, we increased the number of vector numbers, which caused the serial code to slow down significantly. The differences in the two examples are related to the specific execution logic.

So don't treat false sharing as a beast, and don't treat 64-byte alignment as a panacea. Everything has to be actually tested to know. Don't throw out the conclusion under O0 and then take it as a guideline, that makes no sense ......

Conclusion

In conclusion, the term false sharing refers to the use of shared memory by multiple threads at the same time. A multiprocessor environment decreases performance. Shared data is modified by multiple processors simultaneously. This is a very common occurrence in the loop. In many cases, false sharing can be overlooked, resulting in a program's inability to scale. In parallel programming, where performance is fundamental, keeping an eye out for problems and recognizing them quickly is essential.

Finally, we discussed some solutions to minimize false sharing by making as much private data as possible to reduce how often shared data needs to be updated. Making use of the compiler's optimization features to reduce memory loads and stores. Also, we can improve performance by byte alignment.

References

https://www.easytechjunkie.com/what-is-false-sharing.htm

https://levelup.gitconnected.com/false-sharing-the-lesser-known-performance-killer-bbb6c1354f07

https://wiki.cdot.senecacollege.ca/wiki/GPU621/False_Sharing

https://learn.microsoft.com/en-us/archive/msdn-magazine/2008/october/net-matters-false-sharing

http://www.nic.uoregon.edu/~khuck/ts/acumem-report/manual_html/multithreading_problems.html