Latest revision as of 06:30, 9 December 2021

Intel Data Analytics Acceleration Library

Group Members

- Adrian Ng

- Milosz Zapolski

- Muhammad Faaiz

Progress

100/100

Intel Data Analytics Acceleration

Introduction

Intel Data Analytics Acceleration Library (Intel DAAL) is a library of optimized algorithmic building blocks for data analysis. It provides tools to build compute-intense applications that run fast on Intel architecture. It is optimized for CPUs and GPUs and includes algorithms for analysis functions, math functions, and training and prediction functions for C++, Java and machine-learning Python libraries.

What can you do with it?

Intel Data Analytics Acceleration Library is a toolkit designed to give users the tools they need to optimize compute-intense applications that run on Intel architecture. This involves analyzing datasets with the limited compute resources the user has available, optimizing the predictions made by systems so that they run faster, and optimizing data ingestion and algorithmic compute simultaneously. The library can be used in offline, streaming or distributed models, and you can either download it or use it from the cloud.

Why use it?

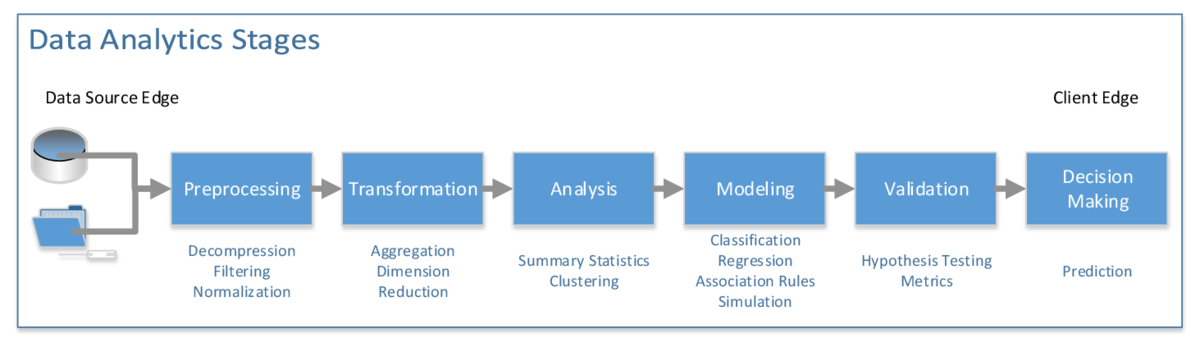

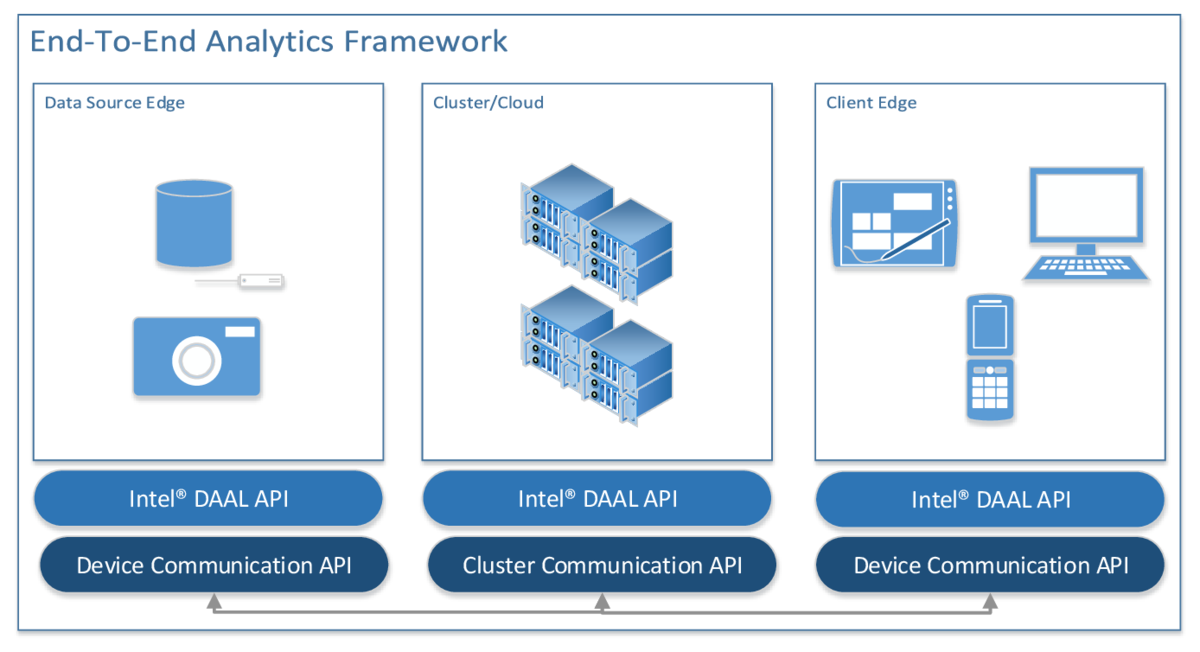

Intel DAAL supports the concept of end-to-end analytics, in which some of the data analytics stages are performed on edge devices. Edge devices sit close to where data is generated and consumed. An edge device could be any device that controls data flow at the boundary of two networks, such as a router, phone, computer or IoT sensor. Intel DAAL APIs are agnostic about the cross-device communication technology, so they can be used with different end-to-end analytics frameworks.

Features

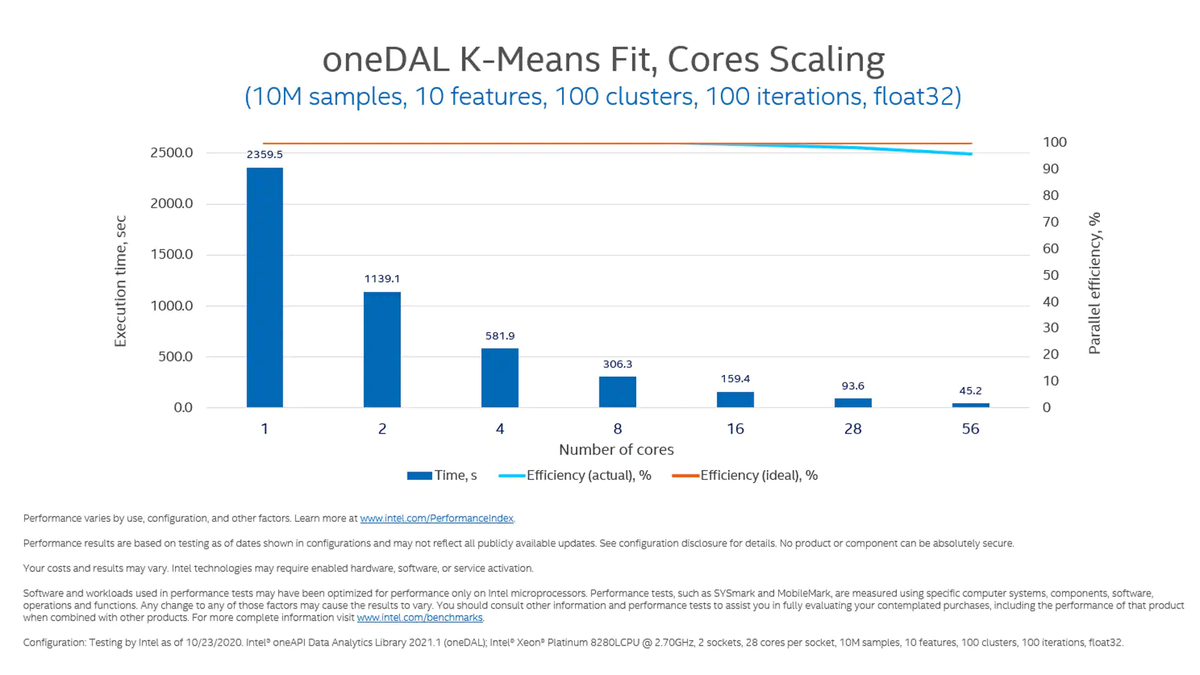

Performance

Intel Data Analytics Acceleration Library is a highly specialized toolkit that uses specific algorithms to analyze, train, predict or process the datasets given to it. This allows the user to pick the algorithm and function that fit the data in order to maximize performance. Each algorithm within Intel Data Analytics Acceleration Library is highly specialized for very specific scenarios, ensuring that every available resource is used to its full potential.

Portability

Intel Data Analytics Acceleration Library supports and integrates with a range of languages: Python, Java, C and C++. Because these languages are common in modern software development, the library can integrate with most applications.

Components

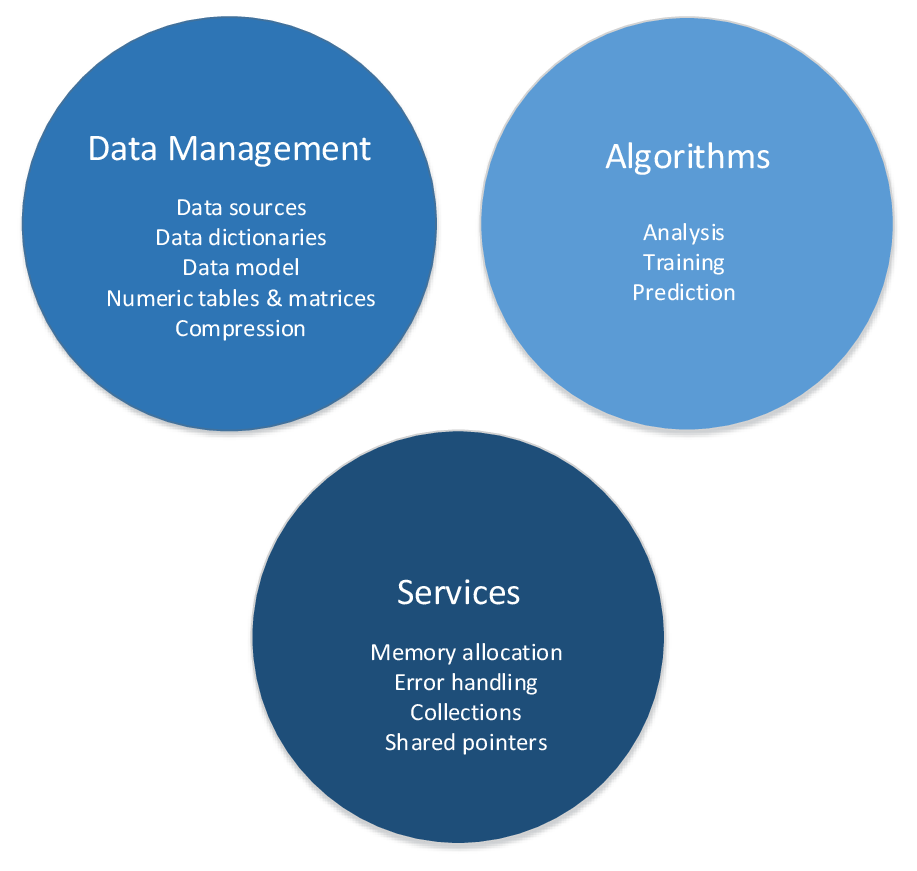

Intel DAAL consists of three major components:

- Data management

- Algorithms

- Services

Data Management

The data management portion of Intel DAAL includes the classes and utilities used for data acquisition, initial preprocessing and normalization, for data conversion into numeric formats done by a supported data source, and for model representation. An example is the NumericTable class, which is used for in-memory data manipulation. There is also the Model class, which mimics actual data so that the library can still be used when the actual data is missing. For more generic dictionary manipulation there are DataSourceDictionary and NumericTableDictionary, which cover operations such as accessing a particular data feature, adding a new feature, or setting and retrieving the number of features.
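The idea of converting raw input into an in-memory numeric table with a feature dictionary can be sketched in plain Python. This is only an illustration of the concept: `ToyNumericTable` and `load_csv_text` are hypothetical names invented for this sketch and are not the DAAL NumericTable or data source API.

```python
class ToyNumericTable:
    """Hypothetical in-memory table: rows of floats plus a list of feature names.

    The feature-name list stands in for a dictionary of features.
    It is NOT the DAAL NumericTable class.
    """

    def __init__(self, feature_names):
        self.feature_names = list(feature_names)
        self.rows = []

    def number_of_features(self):
        """Retrieve the number of features (columns)."""
        return len(self.feature_names)

    def append_row(self, values):
        """Convert one raw row to floats and store it."""
        if len(values) != self.number_of_features():
            raise ValueError("row length does not match number of features")
        self.rows.append([float(v) for v in values])

    def feature(self, name):
        """Access a particular feature as a list of values."""
        index = self.feature_names.index(name)
        return [row[index] for row in self.rows]

    def add_feature(self, name, values):
        """Add a new feature with one value per existing row."""
        if len(values) != len(self.rows):
            raise ValueError("need exactly one value per row")
        self.feature_names.append(name)
        for row, value in zip(self.rows, values):
            row.append(float(value))


def load_csv_text(text):
    """Hypothetical data source: parse CSV text into a ToyNumericTable."""
    lines = [line.strip() for line in text.strip().splitlines()]
    table = ToyNumericTable(lines[0].split(","))
    for line in lines[1:]:
        table.append_row(line.split(","))
    return table


raw = """height,weight
1.80,75
1.65,60
"""
table = load_csv_text(raw)

# Derive a new feature from two existing ones and add it to the table.
bmi = [w / (h * h) for h, w in zip(table.feature("height"), table.feature("weight"))]
table.add_feature("bmi", bmi)
```

The sketch keeps data acquisition (parsing), conversion to numeric form, and feature bookkeeping as separate steps, which mirrors the split of responsibilities described above.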

Algorithms

The algorithms component refers to the classes that implement algorithms for data analysis and data modeling. They can be broken up into three computation modes: batch processing, online processing and distributed processing.

In batch processing mode, the algorithm works with the entire data set to produce the result. This can be an issue when the entire data set is not available or is too large to fit into the device's memory.

In online processing mode, the algorithm incrementally updates a partial result as the data set is streamed into the device's memory in blocks.

In distributed processing mode, the algorithm works on the data set across several devices. Each device produces a partial result from its share of the data, and the partial results are then merged into one final result.
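The difference between the three modes can be sketched with a simple statistic. The following plain-Python example computes the mean and sample variance in batch, online and distributed style. It does not use the DAAL API; `PartialMoments` and `batch_moments` are hypothetical names for this sketch, and the update and merge formulas are the standard streaming (Welford-style) ones, chosen here as an assumption about how partial results could be combined.

```python
from dataclasses import dataclass


@dataclass
class PartialMoments:
    """Partial result: count, mean, and sum of squared deviations (m2)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, block):
        """Online mode: fold one block of values into the partial result."""
        for x in block:
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return self

    def merge(self, other):
        """Distributed mode: combine two partial results into a new one."""
        n = self.n + other.n
        if n == 0:
            return PartialMoments()
        delta = other.mean - self.mean
        mean = self.mean + delta * other.n / n
        m2 = self.m2 + other.m2 + delta * delta * self.n * other.n / n
        return PartialMoments(n, mean, m2)

    def variance(self):
        """Sample variance; requires at least two observations."""
        return self.m2 / (self.n - 1)


def batch_moments(data):
    """Batch mode: the whole data set is in memory at once."""
    n = len(data)
    mean = sum(data) / n
    variance = sum((x - mean) ** 2 for x in data) / (n - 1)
    return mean, variance


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
blocks = [data[0:3], data[3:6], data[6:8]]

# Batch: one pass over the complete data set.
batch_mean, batch_var = batch_moments(data)

# Online: blocks arrive one at a time and update a single partial result.
online = PartialMoments()
for block in blocks:
    online.update(block)

# Distributed: each block is processed independently, then results are merged.
merged = PartialMoments()
for block in blocks:
    merged = merged.merge(PartialMoments().update(block))
```

All three paths arrive at the same mean and variance up to floating-point rounding. Only the batch path needs the full data set in memory at once.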

Services

The services component includes classes that are used across the data management and algorithms components. More specifically, these classes enable memory allocation, error handling, and the implementation of collections and shared pointers. Collections allow you to store different types of objects in a unified way. They are useful when handling the inputs or outputs of algorithms and can at times be used for error handling. Intel DAAL uses shared pointers to handle memory allocation for operations that require it, as well as deallocation.

In-Depth Algorithm Support

Supported Algorithms

- Apriori for Association Rules Mining

- Correlation and Variance-Covariance Matrices

- Decision Forest for Classification and Regression

- Expectation-Maximization Using a Gaussian Mixture Model (EM-GMM)

- Gradient Boosted Trees (GBT) for Classification and Regression

- Alternating Least Squares (ALS) for Collaborative Filtering

- Multinomial Naïve Bayes Classifier

- Multiclass Classification Using a One-Against-One Strategy

- Limited-Memory BFGS (L-BFGS) Optimization Solver

- Logistic Regression with L1 and L2 regularization support

- Linear Regression

Deeper analysis on supported algorithms

Analysis Algorithms:

- Low order moments: Computing the minimum, maximum, standard deviation and variance of the data set

- Principal Components Analysis: Used for dimensionality reduction

- Clustering: Sorting data into unlabeled groups

- Outlier detection: Identifying data that is abnormal compared to typical data

- Association rules mining: Identifying co-occurrence patterns

- Quantiles: Splitting data into equal groups

- Data transformation through matrix decomposition

- Correlation matrix and variance-covariance matrix: For understanding the statistical dependence among the given variables

- Cosine distance matrix: Determining pairwise distance between items using cosine distance

- Correlation distance matrix: Determining pairwise distance between items using correlation distance
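As an illustration of the last two entries, here is a minimal plain-Python sketch of pairwise distance matrices. It assumes the common definitions: cosine distance is one minus the cosine similarity, and correlation distance is the cosine distance of the mean-centered vectors. The function names are hypothetical and are not DAAL calls.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity. Assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def correlation_distance(a, b):
    """1 - Pearson correlation. Assumes neither vector is constant."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    centered_a = [x - mean_a for x in a]
    centered_b = [y - mean_b for y in b]
    return cosine_distance(centered_a, centered_b)


def distance_matrix(rows, distance):
    """Square matrix of pairwise distances between the rows."""
    return [[distance(r, s) for s in rows] for r in rows]


rows = [
    [1.0, 2.0, 3.0],
    [2.0, 4.0, 6.0],  # same direction as the first row
    [3.0, 2.0, 1.0],  # perfectly anti-correlated with the first row
]
cos = distance_matrix(rows, cosine_distance)
cor = distance_matrix(rows, correlation_distance)
```

In this data the first two rows point in the same direction, so both distances between them are close to zero, while the first and third rows are perfectly anti-correlated, giving a correlation distance close to 2.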

Supported CPU & GPU Algorithms via DPC++ Interfaces

- K-Means Clustering

- K-Nearest Neighbor (KNN)

- Support Vector Machines (SVM) with Linear and Radial Basis Function (RBF) Kernels

- Principal Components Analysis (PCA)

- Density-Based Spatial Clustering of Applications with Noise (DBSCAN)

- Random Forest

Training and prediction algorithms

Regression

- Example: linear regression, which uses a linear equation to model the relationship between explanatory (known) variables and a dependent variable, and then predicts the dependent variable from it.
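The training and prediction steps of linear regression can be sketched for the simplest case of one explanatory variable. This is an ordinary least-squares fit in plain Python, not the DAAL linear regression API; `fit_line` and `predict` are hypothetical names used only for this illustration.

```python
def fit_line(xs, ys):
    """Training: least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)  # must be non-zero
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Prediction: apply the fitted linear equation to a new value."""
    slope, intercept = model
    return slope * x + intercept


# Training data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

model = fit_line(xs, ys)
prediction = predict(model, 5.0)
```

Keeping training (`fit_line`) and prediction (`predict`) as separate steps reflects the training-then-prediction split this section describes.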

Classification

- Building a model that assigns items to uniquely labeled groups.

Recommendation Systems

- These systems try to predict the “preferences” a user would give, using commonly recognized examples.

Neural networks

- Using algorithms, they try to identify hidden patterns and correlations within raw data, clustering and classifying it (more of a trial-and-error approach).

Code Examples

The examples shown below demonstrate the algorithms used in Intel Data Analytics Acceleration Library, along with how to access the oneAPI code samples from the command line or an IDE:

Benchmark Example

Intel Data Analytics Acceleration Library addresses all stages of the data analytics pipeline: it pre-processes the data, transforms it, analyzes it, builds models, validates them and makes decisions based on the dataset given to it.

Conclusion

The Intel Data Analytics Acceleration Library is a great tool to use if we are looking to maximize performance while still being able to process large amounts of data without losing accuracy. Due to its flexible nature it can be run in the cloud, which allows users to access large amounts of compute power from home. The Intel Data Analytics Acceleration Library is part of the oneAPI Base Toolkit and is updated often to ensure its continued reliability.