Difference between revisions of "GPU621/Intel Advisor Assistant"

== Introduction to Intel Advisor == | == Introduction to Intel Advisor == | ||

| Line 19: | Line 24: | ||

• Threading | • Threading | ||

| + | |||

| + | [[File:Intel Advisor Selector.png]] | ||

| Line 32: | Line 39: | ||

== How to install Intel Advisor == | == How to install Intel Advisor == | ||

| − | + | Intel Advisor is part of the oneAPI Base Toolkit. It is also possible to download a standalone tool which is available on Intel’s official website at | |

| + | |||

| + | https://www.intel.com/content/www/us/en/developer/tools/oneapi/advisor.html | ||

== Advantages of Using Intel Advisor == | == Advantages of Using Intel Advisor == | ||

| Line 42: | Line 51: | ||

As it is, with Visual Studio, having optimization turned off simply has the compiler create assembly code for your code as written. With optimization Visual Studio uses Profile-Guided Optimization (PGO) to produce a more optimized code, but no where near as optimized as is possible. | As it is, with Visual Studio, having optimization turned off simply has the compiler create assembly code for your code as written. With optimization Visual Studio uses Profile-Guided Optimization (PGO) to produce a more optimized code, but no where near as optimized as is possible. | ||

| + | |||

| + | == Example of How to Use Intel Advisor == | ||

| + | Intel Advisor is available in either stand alone or as part of OneApi. Using it as part of its integration into Visual Studio, here we run through some basic steps using the code from Workshop 03 (Matmul) for demonstration: | ||

| + | Step One – Launching Advisor | ||

| + | Launching Intel Advisor, found under Tools/Intel Advisor: | ||

| + | |||

| + | [[File:IntelAdv06.png]] | ||

| + | |||

| + | Which opens the following window: | ||

| + | |||

| + | [[File:IntelAdv07.png]] | ||

| + | |||

| + | Here we will select Vectorization and Code Insights first and get the when running a survey (using the play key on the left menu): | ||

| + | |||

| + | [[File:IntelAdv08.png]] | ||

| + | |||

| + | Selecting the Characterization section on the right and running that will give us a Roofline report: | ||

| + | |||

| + | [[File:IntelAdv09.png]] | ||

== How It Displays Information == | == How It Displays Information == | ||

| − | A key feature of Intel Advisor is it offers information to the user via a graphical user interface (GUI) | + | A key feature of Intel Advisor is that it offers information to the user via a graphical user interface (GUI) and Command Line as well. |

| − | Here in an example | + | Here in an example where we can see an example of vectorization and code insights offered by the advisor: |

| − | [[File: | + | [[File:IntelAdvVectandCode.png]] |

Here this offers several important pieces of information such as: | Here this offers several important pieces of information such as: | ||

| Line 75: | Line 103: | ||

Memory Access Patterns will check for memory access issues with such as non-contiguous parts. | Memory Access Patterns will check for memory access issues with such as non-contiguous parts. | ||

Dependencies analysis will check for real data dependencies in loops which the compiler failed to vectorize. | Dependencies analysis will check for real data dependencies in loops which the compiler failed to vectorize. | ||

| + | |||

| + | [[File:Survey Report Example.png]] | ||

| + | |||

| + | Here above we have a survey report run on MatLab code. This displays quick information on code, such as points where Intel Advisor feels would benefit from vectorization. | ||

| + | |||

| + | It shows as well information on number of times a loop was iterated on and recommendations which a programmer could use to vectorize their code: | ||

| + | [[File:Intel Advisor Unrolled.png]] | ||

== Important Features of Intel Advisor == | == Important Features of Intel Advisor == | ||

Below are few of the main features of the Intel Advisor: | Below are few of the main features of the Intel Advisor: | ||

| − | =Optimize Vectorization for Better Performance= | + | ===Optimize Vectorization for Better Performance=== |

Vectorization is one of the most important features of the Intel Advisor, it is an operation of Single instruction Multiple Data(SIMD) instructions on multiple data objects in parallel in single core of a CPU. This can very effectively increase performance by reducing loop overhead and making better use of math unit. | Vectorization is one of the most important features of the Intel Advisor, it is an operation of Single instruction Multiple Data(SIMD) instructions on multiple data objects in parallel in single core of a CPU. This can very effectively increase performance by reducing loop overhead and making better use of math unit. | ||

| Line 93: | Line 128: | ||

• Get actionable user code-centric guidance to improve vectorization efficiency. | • Get actionable user code-centric guidance to improve vectorization efficiency. | ||

| − | + | ===Model, Tune, and Test Multiple Threading Designs=== | |

| − | |||

| − | =Model, Tune, and Test Multiple Threading Designs= | ||

The Threading Advisor tool is applied to the model and tests the performance of various multi-threading designs such as OpenMP, Threading Building Blocks (TBB), and Microsoft Task Parallel Library (TPL) without limiting the development of the project. The tool achieves this by helping you with prototyping, testing the project's scalability for larger systems, and optimizing faster. It will also help identify issues before implementing parallelization. | The Threading Advisor tool is applied to the model and tests the performance of various multi-threading designs such as OpenMP, Threading Building Blocks (TBB), and Microsoft Task Parallel Library (TPL) without limiting the development of the project. The tool achieves this by helping you with prototyping, testing the project's scalability for larger systems, and optimizing faster. It will also help identify issues before implementing parallelization. | ||

| Line 101: | Line 134: | ||

The tool is primarily used for adding threading to the C, C++, C#, and Fortran languages. | The tool is primarily used for adding threading to the C, C++, C#, and Fortran languages. | ||

| − | + | [[File:Image27.png]] | |

| − | + | ===Optimize for Memory and Compute=== | |

| − | |||

| − | =Optimize for Memory and Compute= | ||

The analysis gives a realistic visual representation of application performance against hardware-imposed conditions, such as memory bandwidth and computer capacity. | The analysis gives a realistic visual representation of application performance against hardware-imposed conditions, such as memory bandwidth and computer capacity. | ||

| − | + | With automated roofline analysis, you can: | |

• See performance headroom against hardware limitations | • See performance headroom against hardware limitations | ||

| Line 120: | Line 151: | ||

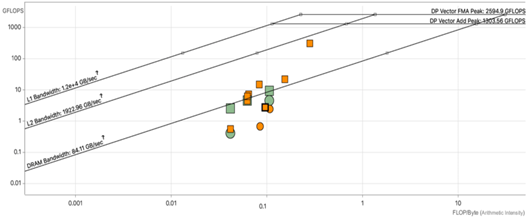

[[File:IntelAdv03.png]] | [[File:IntelAdv03.png]] | ||

| − | =Roofline Chart Data= | + | ====Roofline Chart Data==== |

| − | The Roofline | + | The Roofline model is a visual performance model used to deliver performance estimations of a given application running on multi-core or many-core. |

| − | • Arithmetic intensity (x-axis) - measured in number of floating-point operations (FLOPs) or integer operations (INTOPs) per byte, based on the loop algorithm, and transferred between CPU/VPU and memory | + | • Arithmetic intensity (x-axis) - measured in number of floating-point operations (FLOPs) or integer operations (INTOPs) per byte, based on the loop algorithm, and transferred between CPU/VPU and memory |

| − | + | • Performance (y-axis) - measured in billions of floating-point operations per second (GFLOPS) or billions of integer operations per second (GINTOPS) | |

| − | • Performance (y-axis) - measured in billions of floating-point operations per second (GFLOPS) or billions of integer operations per second (GINTOPS) | ||

[[File:IntelAdv04.png]] | [[File:IntelAdv04.png]] | ||

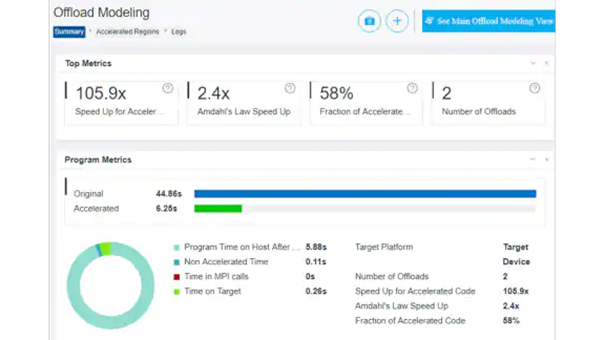

| − | =Efficiently Offload Your Code to GPUs= | + | ===Efficiently Offload Your Code to GPUs=== |

The Offload Advisor is an extended version of the Intel® Advisor, a code modernization, and performance estimation tool that supports OpenCL and SYCL/Data-Parallel C++ languages on CPU and GPU. | The Offload Advisor is an extended version of the Intel® Advisor, a code modernization, and performance estimation tool that supports OpenCL and SYCL/Data-Parallel C++ languages on CPU and GPU. | ||

| Line 140: | Line 170: | ||

| − | • It will | + | • It will estimate data-transfer costs and get guidance on how to optimize data transfer. |

• Identify offload opportunities where it pays off the most. | • Identify offload opportunities where it pays off the most. | ||

| − | + | [[File:IntelAdv05.png]] | |

| − | =Create, Visualize, and Analyze Task and Dependency Computation Graphs= | + | ===Create, Visualize, and Analyze Task and Dependency Computation Graphs=== |

Flow Graph Analyzer (FGA) is a rapid visual prototyping environment for applications that can be expressed as flow graphs using Intel Threading Building Blocks (Intel TBB) applications. | Flow Graph Analyzer (FGA) is a rapid visual prototyping environment for applications that can be expressed as flow graphs using Intel Threading Building Blocks (Intel TBB) applications. | ||

| Line 156: | Line 186: | ||

• Apart from TBB, it helps visualize DPC++ asynchronous task graphs, provides insights to task scheduling inefficiencies and analyzes open MP task dependencies for better performance | • Apart from TBB, it helps visualize DPC++ asynchronous task graphs, provides insights to task scheduling inefficiencies and analyzes open MP task dependencies for better performance | ||

| − | == | + | ==Sources== |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | Design code for parallelism and offloading with Intel® advisor. Intel. (n.d.). Retrieved November 29, 2021, from https://www.intel.com/content/www/us/en/developer/tools/oneapi/advisor.html#gs.hpo78b. | ||

| − | + | Run a roofline analysis. Intel. (n.d.). Retrieved November 29, 2021, from https://www.intel.com/content/www/us/en/develop/documentation/advisor-tutorial-roofline/top/run-a-roofline-analysis.html. | |

| + | Design code for parallelism and offloading with Intel® advisor. Intel. (n.d.). Retrieved December 6, 2021, from https://www.intel.com/content/www/us/en/developer/tools/oneapi/advisor.html. | ||

| − | + | Get started with Intel advisor. Intel. (n.d.). Retrieved December 6, 2021, from https://www.intel.com/content/www/us/en/develop/documentation/get-started-with-advisor/top.html. | |

Latest revision as of 21:24, 8 December 2021

Intel Advisor

Group Members

1 Ricardo Ramirez

2 Parsa Parichehreh

3 Jigarkumar Pritamkumar Koradiya

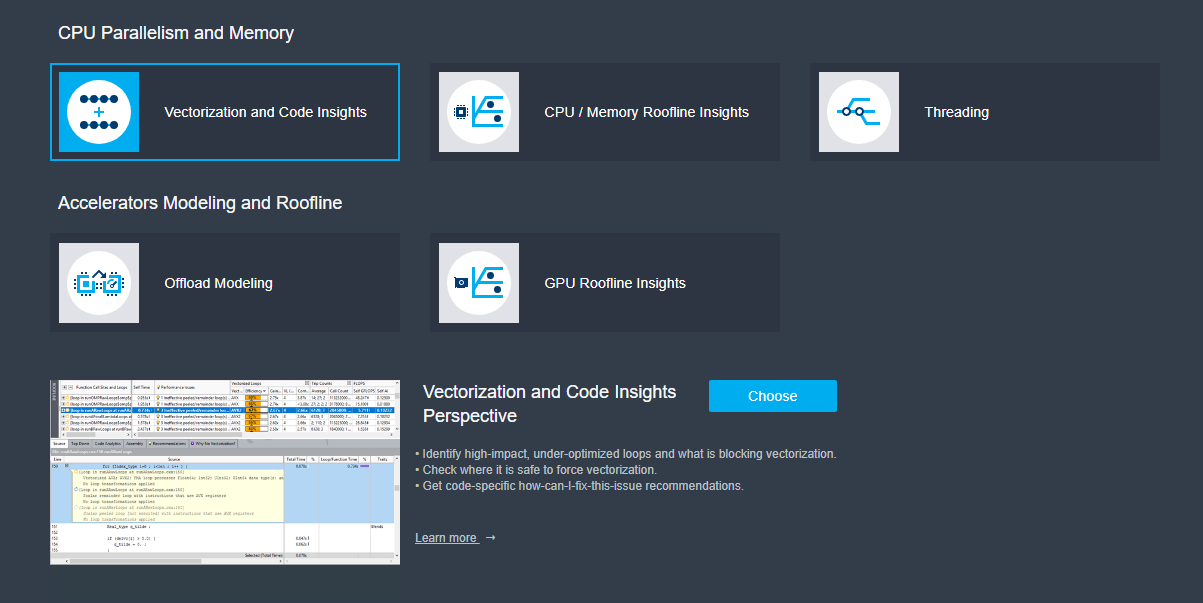

Introduction to Intel Advisor

Intel Advisor is a tool for developers writing code in Fortran, C, C++, Data Parallel C++ (DPC++), OpenMP, and Python. It analyzes code written in these languages and helps you find where to optimize so that your application runs faster.

It is part of the Intel oneAPI Base Toolkit that we used in our course, GPU621.

The tool analyzes your code from the following perspectives:

• Vectorization and Code Insights

• CPU / Memory Roofline Insights

• Offload Modeling

• GPU Roofline Insights

• Threading

Intel Advisor will not write your code, but it will help with optimization. It allows you to:

• Model your application performance on an accelerator

• Visualize performance bottlenecks on a CPU or GPU using a Roofline chart

• Check vectorization efficiency

• Prototype threading designs

How to install Intel Advisor

Intel Advisor is part of the oneAPI Base Toolkit. It is also available as a standalone download from Intel’s official website at:

https://www.intel.com/content/www/us/en/developer/tools/oneapi/advisor.html

Advantages of Using Intel Advisor

To talk about the advantages of using Intel Advisor we need to discuss a major concern of programmers today: CPU clock speeds are no longer increasing as they once did, so there is a greater emphasis on producing code that performs more efficiently with the resources available.

Newer processors give programmers more cores and more options for multi-threading, so code written in this era of computing needs to be parallelized to reach the hardware's full capability.

Intel Advisor is a tool designed to help you take advantage of all the cores available on your CPU. Compiling your code with the proper instruction set allows your program to take full advantage of all the processing power of your CPU.

As it is, with optimization turned off, Visual Studio simply has the compiler generate assembly for your code as written. With optimization turned on, and optionally Profile-Guided Optimization (PGO), Visual Studio produces more optimized code, but nowhere near as optimized as is possible.

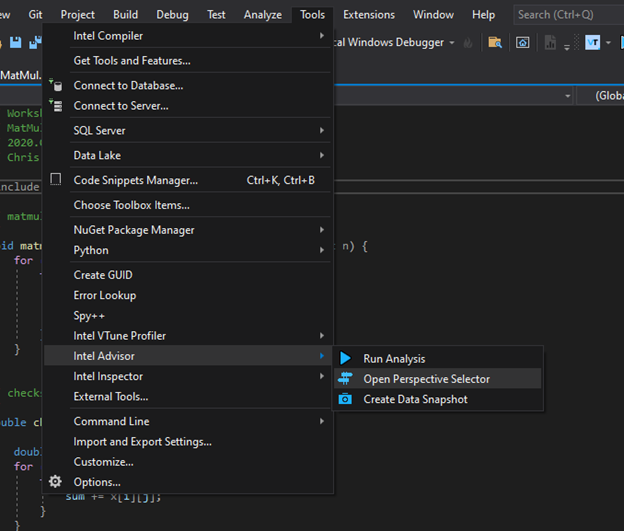

Example of How to Use Intel Advisor

Intel Advisor is available either standalone or as part of oneAPI. Using its Visual Studio integration, we run through some basic steps here, using the code from Workshop 03 (Matmul) for demonstration:

Step One – Launching Advisor

Launch Intel Advisor, found under Tools/Intel Advisor:

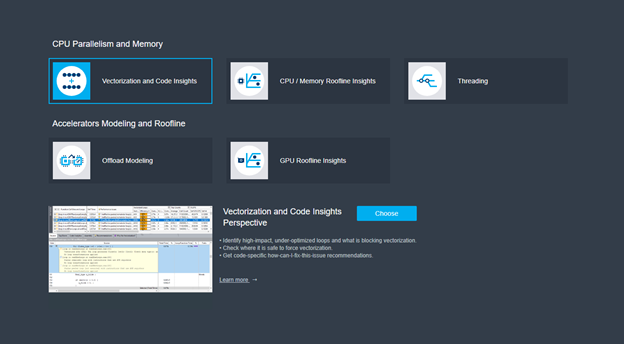

Which opens the following window:

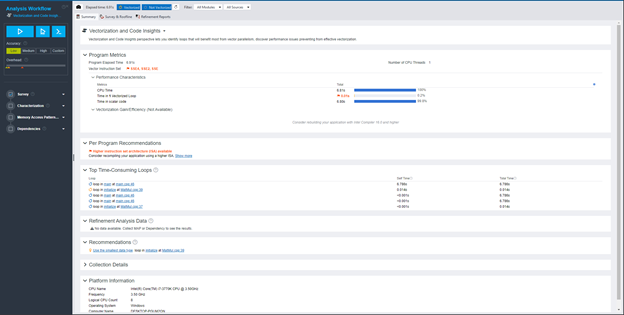

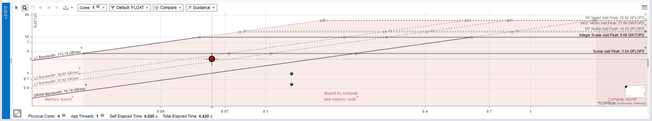

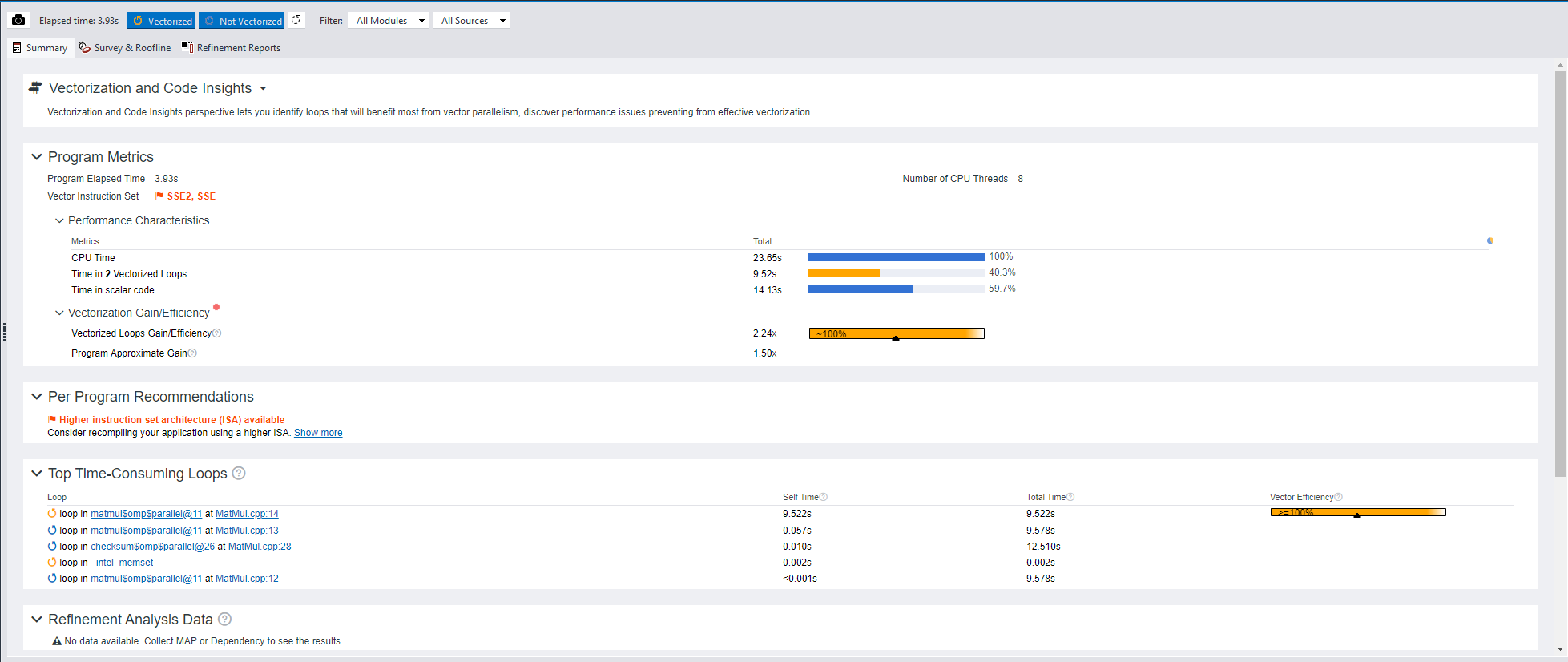

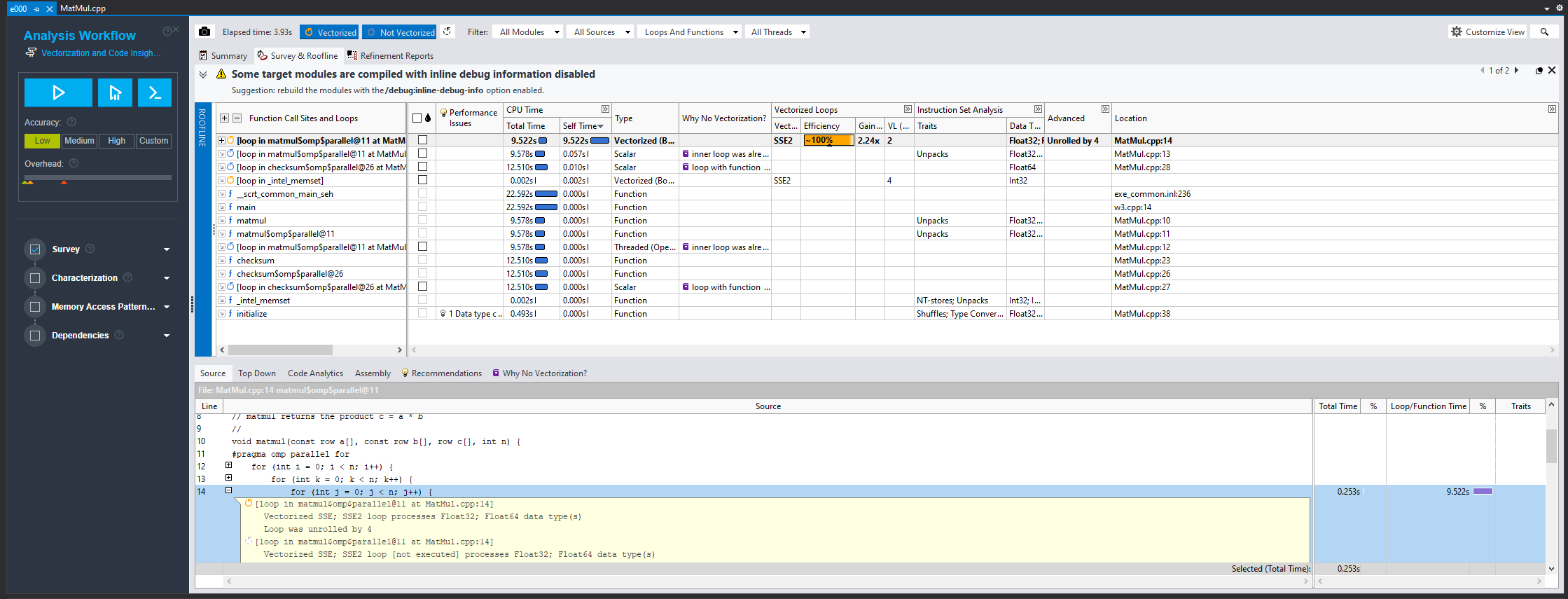

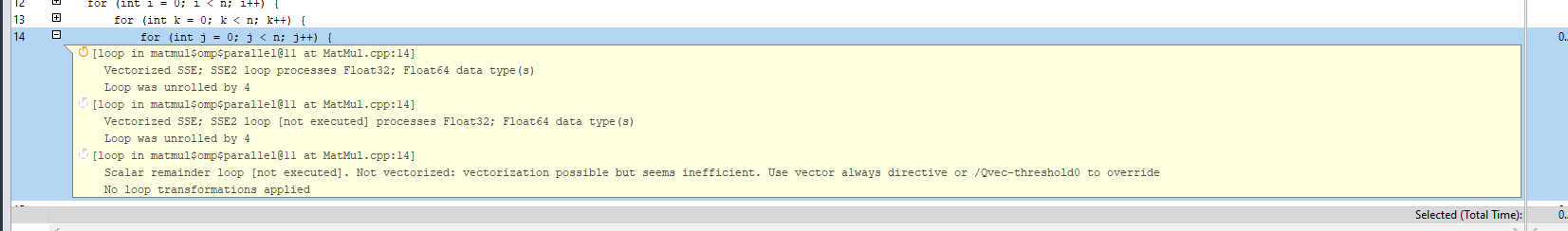

Here we select Vectorization and Code Insights first and run a survey using the play button in the left menu.

Selecting the Characterization section on the right and running it produces a Roofline report.
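The Workshop 03 source is not reproduced on this page, so the following is only a hypothetical stand-in for the kind of matrix-multiplication kernel being profiled. The function name `matmul` and the row-major `std::vector<double>` layout are assumptions made for illustration.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the Workshop 03 kernel: C = A * B for
// square n x n matrices stored in row-major order.
std::vector<double> matmul(const std::vector<double>& a,
                           const std::vector<double>& b,
                           std::size_t n) {
    std::vector<double> c(n * n, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < n; ++k) {
            const double aik = a[i * n + k];
            // The innermost loop walks b and c contiguously, which is the
            // access pattern compilers tend to vectorize most readily.
            for (std::size_t j = 0; j < n; ++j) {
                c[i * n + j] += aik * b[k * n + j];
            }
        }
    }
    return c;
}
```

A loop nest like this is what would typically show up among the top time-consuming loops in a survey report.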

How It Displays Information

A key feature of Intel Advisor is that it offers information to the user through a graphical user interface (GUI) as well as the command line. Here is an example of the Vectorization and Code Insights view offered by Advisor:

This view offers several important pieces of information, such as:

• Performance metrics

• Top time-consuming loops as sorted by time

• A recommendation list for fixing performance issues with your code

How Vectorization and Code Insights works:

• Gets integrated compiler report data and performance data by running a Survey analysis

• Identifies the number of times loops are invoked and executed, and the number of floating-point and integer operations, by running a Characterization analysis

• Checks for various memory issues by running the Memory Access Patterns (MAP) analysis

• Checks for data dependencies in loops that the compiler did not vectorize by running the Dependencies analysis

To expand on a few of these points:

Survey analysis is the process in which Intel Advisor identifies loop hotspots and uses them to provide recommendations on how to fix vectorization issues.

Memory Access Patterns analysis checks for memory access issues such as non-contiguous accesses. Dependencies analysis checks for real data dependencies in loops which the compiler failed to vectorize.
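To make these two analyses concrete, here is a minimal sketch of the kinds of loops they are concerned with. The function names are hypothetical, and the comments describe general compiler behaviour rather than Advisor's exact output.

```cpp
#include <cstddef>
#include <vector>

// Loop-carried (real) dependency: iteration i reads the value written by
// iteration i - 1, so the iterations cannot simply run side by side in
// SIMD lanes.
void prefix_sum(std::vector<float>& v) {
    for (std::size_t i = 1; i < v.size(); ++i) {
        v[i] += v[i - 1];
    }
}

// No dependency between iterations and unit-stride access: a good
// candidate for vectorization.
void scale(std::vector<float>& v, float factor) {
    for (std::size_t i = 0; i < v.size(); ++i) {
        v[i] *= factor;
    }
}

// Non-contiguous access: summing one column of a row-major n x n matrix
// touches memory with a stride of n elements.
float column_sum(const std::vector<float>& m, std::size_t n, std::size_t col) {
    float sum = 0.0f;
    for (std::size_t row = 0; row < n; ++row) {
        sum += m[row * n + col];
    }
    return sum;
}
```

The first loop is the sort of case a Dependencies analysis is meant to confirm, while the strided walk in `column_sum` is the sort of pattern a Memory Access Patterns analysis is meant to flag.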

Above is a survey report run on the Matmul code. It gives quick information about the code, such as the points that Intel Advisor considers would benefit from vectorization.

It also shows how many times a loop was iterated, along with recommendations a programmer can use to vectorize their code:

Important Features of Intel Advisor

Below are a few of the main features of Intel Advisor:

Optimize Vectorization for Better Performance

Vectorization is one of the most important areas Intel Advisor covers. It is the use of Single Instruction, Multiple Data (SIMD) instructions to operate on multiple data objects in parallel within a single core of a CPU. This can increase performance very effectively by reducing loop overhead and making better use of the math units.

With Intel Advisor, you can:

• Find loops that will benefit from better vectorization.

• Identify where it is safe to force compiler vectorization.

• Pinpoint memory-access issues that may cause slowdowns.

• Get actionable user code-centric guidance to improve vectorization efficiency.
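As an illustration of forcing compiler vectorization where it is safe, the sketch below uses the OpenMP `simd` directive on a loop whose iterations are independent. The function `saxpy` is a hypothetical example, not code from the workshop, and the directive only has an effect when OpenMP SIMD support is enabled at compile time (for example `-fopenmp-simd` with GCC or Clang); otherwise it is ignored and the loop still produces the same result.

```cpp
#include <cstddef>
#include <vector>

// y[i] = alpha * x[i] + y[i]. x and y are distinct vectors, so every
// iteration is independent of the others.
void saxpy(float alpha, const std::vector<float>& x, std::vector<float>& y) {
    const std::size_t n = x.size() < y.size() ? x.size() : y.size();
    const float* xp = x.data();
    float* yp = y.data();
    // Asks the compiler to vectorize this loop. Only use this when the
    // iterations are known to be independent, as they are here.
    #pragma omp simd
    for (std::size_t i = 0; i < n; ++i) {
        yp[i] = alpha * xp[i] + yp[i];
    }
}
```

Applying the directive to a loop with a real dependency can silently produce wrong results, which is why confirming safety first matters.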

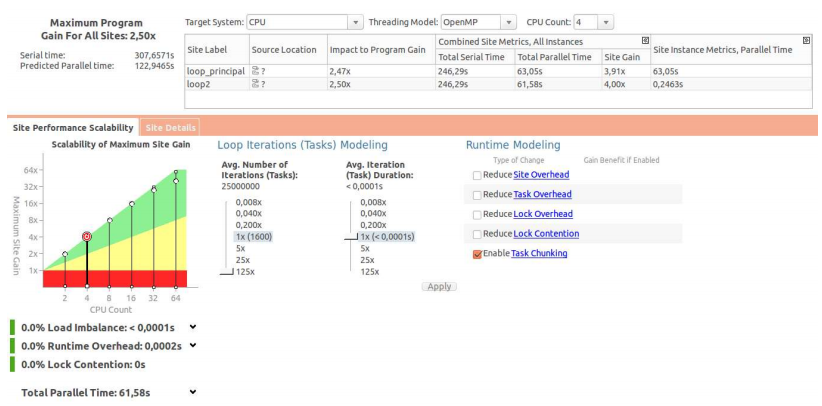

Model, Tune, and Test Multiple Threading Designs

The Threading Advisor tool is used to model and test the performance of various multi-threading designs such as OpenMP, Threading Building Blocks (TBB), and Microsoft Task Parallel Library (TPL) without disrupting the development of the project. It does this by helping you prototype designs, test the project's scalability on larger systems, and optimize faster. It also helps identify issues before you implement parallelization.

The tool is primarily used for adding threading to applications written in C, C++, C#, and Fortran.
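For context, one of the threading designs mentioned above, OpenMP, might end up looking like the following once a candidate loop has been chosen. This is a minimal sketch with a hypothetical `dot` function; it shows the resulting code, not how Advisor models it. Without OpenMP enabled at compile time the pragma is ignored and the loop runs serially.

```cpp
#include <cstddef>
#include <vector>

// Dot product with the loop iterations shared among threads.
double dot(const std::vector<double>& a, const std::vector<double>& b) {
    const long long n =
        static_cast<long long>(a.size() < b.size() ? a.size() : b.size());
    double sum = 0.0;
    // Each thread accumulates a private partial sum; the reduction clause
    // combines the partial sums at the end, avoiding a data race on sum.
    #pragma omp parallel for reduction(+ : sum)
    for (long long i = 0; i < n; ++i) {
        sum += a[static_cast<std::size_t>(i)] * b[static_cast<std::size_t>(i)];
    }
    return sum;
}
```

The shared accumulator is exactly the kind of issue worth identifying before parallelizing: without the reduction clause, concurrent updates to `sum` would race.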

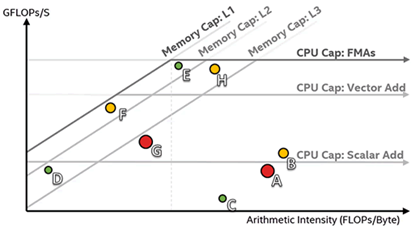

Optimize for Memory and Compute

The Roofline analysis gives a realistic visual representation of application performance against hardware-imposed limits, such as memory bandwidth and compute capacity.

With automated roofline analysis, you can:

• See performance headroom against hardware limitations

• Get insights into a practical optimization roadmap

• Visualize optimization progress

Roofline Chart Data

The Roofline model is a visual performance model used to estimate the performance of a given application running on multi-core or many-core architectures. The chart plots:

• Arithmetic intensity (x-axis) - the number of floating-point operations (FLOPs) or integer operations (INTOPs) performed per byte transferred between the CPU/VPU and memory, based on the loop algorithm

• Performance (y-axis) - measured in billions of floating-point operations per second (GFLOPS) or billions of integer operations per second (GINTOPS)
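The relationship between the two axes can be sketched with the basic roofline bound, where attainable performance is the smaller of the compute peak and memory bandwidth times arithmetic intensity. The function names and the machine figures used in the note below are assumptions for illustration, not values measured by Advisor.

```cpp
#include <algorithm>

// Arithmetic intensity: operations performed per byte moved to or from
// memory (the x-axis of the chart).
double arithmetic_intensity(double flops, double bytes) {
    return flops / bytes;
}

// Basic roofline bound (the y-axis ceiling): performance is capped either
// by the compute peak or by memory bandwidth times arithmetic intensity.
double attainable_gflops(double peak_gflops,
                         double bandwidth_gb_per_s,
                         double intensity) {
    return std::min(peak_gflops, bandwidth_gb_per_s * intensity);
}
```

For instance, a loop performing 2 FLOPs per 8 bytes has an intensity of 0.25 FLOP/byte. On a hypothetical machine with a 100 GFLOPS peak and 20 GB/s of memory bandwidth, the bound is 5 GFLOPS, so the loop sits under the sloped, memory-bound part of the roof.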

Efficiently Offload Your Code to GPUs

The Offload Advisor is an extended version of Intel® Advisor, a code modernization and performance estimation tool that supports OpenCL and SYCL/Data Parallel C++ on CPU and GPU.

Benefits of using Offload Advisor:

• Estimate data-transfer costs and get guidance on how to optimize data transfer.

• Identify offload opportunities where it pays off the most.
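Why data-transfer cost matters can be seen with a deliberately simplified break-even check. This is not Offload Advisor's actual model, only a back-of-the-envelope sketch; the function names and the assumption of a single fixed link bandwidth are hypothetical.

```cpp
// Seconds needed to move `bytes` over a link with the given bandwidth.
double transfer_seconds(double bytes, double link_bytes_per_s) {
    return bytes / link_bytes_per_s;
}

// In this simple model, offloading pays off only when the GPU compute
// time plus the data-transfer time is below the CPU time.
bool offload_pays_off(double cpu_s, double gpu_s,
                      double bytes, double link_bytes_per_s) {
    return gpu_s + transfer_seconds(bytes, link_bytes_per_s) < cpu_s;
}
```

With 8 GB to move over a 16 GB/s link, the transfer alone costs 0.5 s, so a kernel that takes 1 s on the CPU is worth offloading only if the GPU finishes it in under 0.5 s.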

Create, Visualize, and Analyze Task and Dependency Computation Graphs

Flow Graph Analyzer (FGA) is a rapid visual prototyping environment for applications that can be expressed as flow graphs using Intel Threading Building Blocks (Intel TBB).

• It helps construct, validate and model application design and performance before coding in Intel TBB.

• It provides insights into the parallel algorithm efficiency

• Apart from TBB, it helps visualize DPC++ asynchronous task graphs, provides insights into task-scheduling inefficiencies, and analyzes OpenMP task dependencies for better performance

Sources

Design code for parallelism and offloading with Intel® Advisor. Intel. (n.d.). Retrieved November 29, 2021, from https://www.intel.com/content/www/us/en/developer/tools/oneapi/advisor.html#gs.hpo78b.

Run a roofline analysis. Intel. (n.d.). Retrieved November 29, 2021, from https://www.intel.com/content/www/us/en/develop/documentation/advisor-tutorial-roofline/top/run-a-roofline-analysis.html.

Design code for parallelism and offloading with Intel® Advisor. Intel. (n.d.). Retrieved December 6, 2021, from https://www.intel.com/content/www/us/en/developer/tools/oneapi/advisor.html.

Get started with Intel Advisor. Intel. (n.d.). Retrieved December 6, 2021, from https://www.intel.com/content/www/us/en/develop/documentation/get-started-with-advisor/top.html.