Difference between revisions of "GPU621/False Sharing"

m (→Cache Coherence and Cache Line) |

m (→Presentation) |

||

| (21 intermediate revisions by the same user not shown) | |||

| Line 19: | Line 19: | ||

So why do we have so many types? | So why do we have so many types? | ||

| − | In an ideal world, the CPU would have lightning-fast access to all data on your machine at any given time to maximize the power and speed of modern-day CPUs. In reality, there are limitations and trade-offs when balancing speed, storage | + | In an ideal world, the CPU would have lightning-fast access to all data on your machine at any given time to maximize the power and speed of modern-day CPUs. In reality, there are limitations and trade-offs when balancing speed, storage capacity, and cost. To compromise, a computer utilizes many types of memory. |

| − | If the CPU | + | Near the bottom, we have secondary memory like hard drives, flash drives and SSDs which can permanently hold vast quantities of data, but are relatively slow. Hard drives in particular have mechanical parts that must physically move. If the CPU relied on this memory type, the slow speeds would become a massive bottleneck in computation time. The CPU will spend a majority of time idling waiting for data. To the end user, your machine would appear to be extremely sluggish and non-responsive. |

| − | + | Next is Dynamic Random Access Memory(DRAM) which use capacitors, transistors and electricity to store data. They require constant refreshing with electricity to store data making them volatile as their data disappears when power is cut. DRAM is more expensive, but smaller and faster. However, this is still not fast enough for the CPU. | |

| + | |||

| + | Last, we have Static Random Access Memory(SRAM), which is extremely fast, but even smaller and more expensive. This type of memory is what is used in the cache. | ||

| + | |||

| + | When it needs data, the CPU looks in the cache first. If it is there, it is a cache hit. If it is not there, it is a cache miss and the CPU must search main memory or even further down the hierarchy until it finds it. Minimizing the number of cache misses ensures the CPU has a steady flow of data it can quickly retrieve and compute. The cache operates on locality of reference which refers to the tendency of programs to access the same set of memory locations repeatedly over a short period of time. From here, there are two major types. Temporal locality is when one memory location is accessed, it will likely be accessed again in the near future. Spatial locality means if one memory locations is accessed, nearby memory locations will likely be needed as well. Using these principles and complex algorithms, data is brought into the cache ahead of time to minimize the number of cache misses. | ||

=== Cache Coherence and Cache Line === | === Cache Coherence and Cache Line === | ||

| Line 29: | Line 33: | ||

[[File:cache_coherence.png|right|500px|thumb|Cache structure in a multi-processor system with shared memory.]] | [[File:cache_coherence.png|right|500px|thumb|Cache structure in a multi-processor system with shared memory.]] | ||

| − | Each processor has their own local cache. When data is needed, | + | Each processor has their own local cache. When data is needed, blocks of memory are transferred to the cache; these block are known as cache lines. |

However, if they each modify their own copy, how do we know which one is correct? We can’t store all the different versions or pick a random one to be the correct version, this would create havoc in our computer systems. | However, if they each modify their own copy, how do we know which one is correct? We can’t store all the different versions or pick a random one to be the correct version, this would create havoc in our computer systems. | ||

| − | The answer is we need cache coherence. Cache coherence is defined as the uniformity of shared resource data that ends up stored in multiple local caches. In other words, we must keep all the local caches synchronized. The challenge of doing so is known as the cache coherence problem. To solve this problem, multi-processor systems rely on cache coherence protocols to manage and maintain the cache. While the details of these protocols are beyond this assignment | + | The answer is we need cache coherence. Cache coherence is defined as the uniformity of shared resource data that ends up stored in multiple local caches. In other words, we must keep all the local caches synchronized. The challenge of doing so is known as the cache coherence problem. To solve this problem, multi-processor systems rely on cache coherence protocols to manage and maintain the cache. While the details of these protocols are beyond the scope of this assignment, more information on cache coherence protocols can be found here: https://en.wikipedia.org/wiki/Cache_coherency_protocols_(examples). |

<br><br><br><br><br><br> | <br><br><br><br><br><br> | ||

| Line 43: | Line 47: | ||

Now that we understand the basics of a cache, how does this relate to the concept of false sharing? [[File:CPUCacheline.png|thumb|400px|Cache line sharing.]] | Now that we understand the basics of a cache, how does this relate to the concept of false sharing? [[File:CPUCacheline.png|thumb|400px|Cache line sharing.]] | ||

| − | False sharing occurs when multiple processors modify data that resides on the same cache line. When this data is written back to memory, the shared cache lines are marked as invalid or dirty. Processors must fetch an updated copy | + | False sharing occurs when multiple processors modify data that resides on the same cache line. When this data is written back to memory, the shared cache lines are marked as invalid or dirty. Processors must fetch an updated copy from memory resulting in significant delays. If false sharing is not minimized, this issue quickly spirals out of control when increasing the number of processors. |

The key thing to note is that you do not need to modify the same piece of data. If the modified data happens to belong to the same cache line, the cache will be invalidated, forcing a memory update to maintain cache coherency. | The key thing to note is that you do not need to modify the same piece of data. If the modified data happens to belong to the same cache line, the cache will be invalidated, forcing a memory update to maintain cache coherency. | ||

| − | <br><br><br><br> | + | <br><br><br><br><br> |

=== Example === | === Example === | ||

| Line 53: | Line 57: | ||

In workshop 2, we briefly encountered false sharing. However, we did not get a formal explanation in lecture, so this section will serve to provide more context to the problem. | In workshop 2, we briefly encountered false sharing. However, we did not get a formal explanation in lecture, so this section will serve to provide more context to the problem. | ||

| − | We were asked to multi-thread a serial version of a simple algorithm that calculated PI by integrating 1/(1 + x^2). The serial version utilized a scalar sum variable to accumulate the calculations. For our naïve attempt, we identified a potential issue with using a scalar sum in a multi-threaded program. We have no direct control on the order a thread finishes their assigned work and leaving it up to chance resulted in a race condition. This is not good, but we still need a global variable, so the threads can return their values. To get around this we came up with the idea to change sum to an array. Now, each thread can store their calculations in the array indexed by their individual thread | + | We were asked to multi-thread a serial version of a simple algorithm that calculated PI by integrating 1/(1 + x^2). The serial version utilized a scalar sum variable to accumulate the calculations. For our naïve attempt, we identified a potential issue with using a scalar sum in a multi-threaded program. We have no direct control on the order a thread finishes their assigned work and leaving it up to chance resulted in a race condition. This is not good, but we still need a global variable, so the threads can return their values. To get around this we came up with the idea to change sum to an array. Now, each thread can store their calculations in the array indexed by their individual thread id instead of competing against each other to update the scalar sum. |

<pre> | <pre> | ||

| Line 134: | Line 138: | ||

=== Results === | === Results === | ||

| − | [[File:naive_implementation.png| | + | [[File:naive_implementation.png|500px|thumb|Execution time of naive implementation without any optimization levels (Od).]] |

| − | The algorithm calculates the correct answer, but the performance is absolutely terrible. The reason is that an int array is a contiguous block of memory with each integer taking up 4 bytes. | + | The algorithm calculates the correct answer, but the performance is absolutely terrible. The reason is that an int array is a contiguous block of memory with each integer taking up 4 bytes. Even though each thread modifies their own indexed element, due to spatial locality, the system will bring in the other elements in the array as part of the cache line. Although there were cases where higher thread count produced better results, there were many cases that performed worse than a single thread. This is due to the scheduling of thread execution that is out of the programmer's hands. It is possible that the selected schedule managed to minimize the frequency of false sharing giving better performance. However, this is extremely unreliable, so we need a better solution to false sharing. |

| + | <br><br><br><br><br><br><br><br><br><br><br><br> | ||

== Solutions to False Sharing == | == Solutions to False Sharing == | ||

=== Padding === | === Padding === | ||

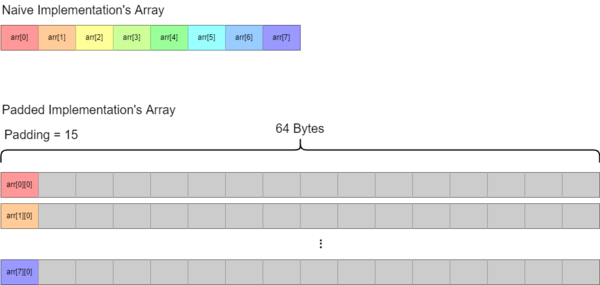

| − | + | [[File:naive_vs_padded_arr.png|600px|thumb|<br>Array comparison between naïve and padded implementation. Colored blocks represent index handled by individual threads. Grey blocks represent empty space.]] | |

| + | Assuming a 64 byte cache line, what if each element in the array was the only piece of data in the cache line for each thread? | ||

This observation was the basis for our first solution to false sharing. The idea was to pad out each element in the array with enough space to act as a boundary or separator for the cache line. If we can force each thread to only bring in their array index to their cache then each individual thread will have a distinct cache line, thus eliminating false sharing. | This observation was the basis for our first solution to false sharing. The idea was to pad out each element in the array with enough space to act as a boundary or separator for the cache line. If we can force each thread to only bring in their array index to their cache then each individual thread will have a distinct cache line, thus eliminating false sharing. | ||

| − | + | <br><br><br><br><br><br><br><br><br><br><br> | |

In the workshop, we accomplished this by making several modifications to the original code: | In the workshop, we accomplished this by making several modifications to the original code: | ||

| Line 176: | Line 182: | ||

=== Drawbacks === | === Drawbacks === | ||

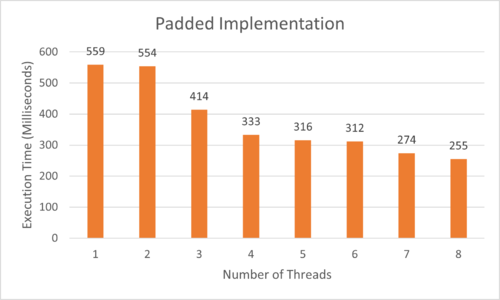

| − | While | + | While the idea works, there were several issues that prevented it from being an ideal solution: [[File:padded_implementation.png|500px|thumb|Execution time of padded implementation without optimization (Od).]] |

| − | '''- | + | '''- wasted memory''' |

| − | For every 4 bytes for storing a thread's calculation, 60 bytes is empty padding. | + | For every 4 bytes for storing a thread's calculation, 60 bytes is empty padding which grows for every new thread that is added. Even though it is not a lot, it is still very inefficient. |

| − | '''- the cache line only contains one piece of data with the rest being | + | '''- the cache line only contains one piece of data with the rest being padding''' |

| − | + | We want to minimize cache misses, but by hogging valuable cache space this reduces the effectiveness of the cache. If we had a more complex program, we could have tons of other data the thread could have fit on the same cache line. | |

| Line 235: | Line 241: | ||

Identifying false sharing requires detailed code inspection as everything will appear to work normally on the surface. However, when left unchecked false sharing will be detrimental to the program's performance and scalability. This article discussed the relation between the cache and false sharing. In addition, using a simple example, we explained how you can use padding, synchronization, and thread local variables to reduce the frequency of false sharing. | Identifying false sharing requires detailed code inspection as everything will appear to work normally on the surface. However, when left unchecked false sharing will be detrimental to the program's performance and scalability. This article discussed the relation between the cache and false sharing. In addition, using a simple example, we explained how you can use padding, synchronization, and thread local variables to reduce the frequency of false sharing. | ||

| + | |||

| + | == Presentation == | ||

| + | PDF [[File:kchou_false_sharing.pdf]] | ||

== References == | == References == | ||

| + | * Gillis, A. S. (2019, November 7). ''What is DRAM (Dynamic Random Access Memory)? how does it work?''. SearchStorage. Retrieved December 6, 2021, from https://searchstorage.techtarget.com/definition/DRAM. | ||

* Intel ISN. (2010, June 2). ''Avoiding and identifying false sharing among threads.'' CodeProject. Retrieved December 4, 2021, from https://www.codeproject.com/Articles/85356/Avoiding-and-Identifying-False-Sharing-Among-Threa. | * Intel ISN. (2010, June 2). ''Avoiding and identifying false sharing among threads.'' CodeProject. Retrieved December 4, 2021, from https://www.codeproject.com/Articles/85356/Avoiding-and-Identifying-False-Sharing-Among-Threa. | ||

* Jain, R. (2018, December 17). ''Memory hierarchy design and its characteristics.'' GeeksforGeeks. Retrieved December 4, 2021, from https://www.geeksforgeeks.org/memory-hierarchy-design-and-its-characteristics/. | * Jain, R. (2018, December 17). ''Memory hierarchy design and its characteristics.'' GeeksforGeeks. Retrieved December 4, 2021, from https://www.geeksforgeeks.org/memory-hierarchy-design-and-its-characteristics/. | ||

* Jha, A. K. (2020, August 24). ''Cache Coherence.'' GeeksforGeeks. Retrieved December 4, 2021, from https://www.geeksforgeeks.org/cache-coherence/. | * Jha, A. K. (2020, August 24). ''Cache Coherence.'' GeeksforGeeks. Retrieved December 4, 2021, from https://www.geeksforgeeks.org/cache-coherence/. | ||

| + | * Knerl, L. (2021, April 19). ''What is DRAM (Dynamic Random Access Memory)?: HP® Tech takes. What is DRAM (Dynamic Random Access Memory)? | HP® Tech Takes''. Retrieved December 6, 2021, from https://www.hp.com/us-en/shop/tech-takes/what-is-dram-dynamic-random-access-memory. | ||

* Neso Academy. (2021, September 26). Cache Coherence Problem & Cache Coherency Protocols [Video]. YouTube. https://www.youtube.com/watch?v=r_ZE1XVT8Ao&ab_channel=NesoAcademy | * Neso Academy. (2021, September 26). Cache Coherence Problem & Cache Coherency Protocols [Video]. YouTube. https://www.youtube.com/watch?v=r_ZE1XVT8Ao&ab_channel=NesoAcademy | ||

| − | * OpenMP. (2013, December 6). Introduction to OpenMP: 06 Discussion 2 [Video]. Youtube. https://www.youtube.com/watch?v=OuzYICZUthM | + | * OpenMP. (2013, December 6). ''Introduction to OpenMP: 06 Discussion 2'' [Video]. Youtube. https://www.youtube.com/watch?v=OuzYICZUthM |

| − | * OpenMP. (2013, December 6). Introduction to OpenMP: 08 Discussion 3 [Video]. YouTube. https://www.youtube.com/watch?v=pLa972Rgl1I | + | * OpenMP. (2013, December 6). ''Introduction to OpenMP: 08 Discussion 3'' [Video]. YouTube. https://www.youtube.com/watch?v=pLa972Rgl1I |

* Oracle. (2015, February 17). ''8.2.1 What is False Sharing?'' What is false sharing? - Oracle® Solaris Studio 12.4: OpenMP API User's Guide. Retrieved December 4, 2021, from https://docs.oracle.com/cd/E37069_01/html/E37081/aewcy.html#scrolltoc. | * Oracle. (2015, February 17). ''8.2.1 What is False Sharing?'' What is false sharing? - Oracle® Solaris Studio 12.4: OpenMP API User's Guide. Retrieved December 4, 2021, from https://docs.oracle.com/cd/E37069_01/html/E37081/aewcy.html#scrolltoc. | ||

* PCMag. (n.d.). ''Definition of Cache Line.'' Definition of a cache line | PCMag. Retrieved December 4, 2021, from https://www.pcmag.com/encyclopedia/term/cache-line#:~:text=Browse%20Encyclopedia-,A,size%20by%20the%20system%20designer. | * PCMag. (n.d.). ''Definition of Cache Line.'' Definition of a cache line | PCMag. Retrieved December 4, 2021, from https://www.pcmag.com/encyclopedia/term/cache-line#:~:text=Browse%20Encyclopedia-,A,size%20by%20the%20system%20designer. | ||

| + | * PCMag. (n.d.). ''Definition of locality of reference''. PCMag. Retrieved December 3, 2021, from https://www.pcmag.com/encyclopedia/term/locality-of-reference. | ||

* PowerCert Animated Videos. (2016, November 27). CPU Cache Explained - What is Cache Memory? [Video]. YouTube. https://www.youtube.com/watch?v=yi0FhRqDJfo&ab_channel=PowerCertAnimatedVideos | * PowerCert Animated Videos. (2016, November 27). CPU Cache Explained - What is Cache Memory? [Video]. YouTube. https://www.youtube.com/watch?v=yi0FhRqDJfo&ab_channel=PowerCertAnimatedVideos | ||

| + | * Roomi, M. (2020, March 6). ''5 advantages and disadvantages of Hard Disk Drive: Weaknesses & benefits of Hard Disk Drive''. HiTechWhizz. Retrieved December 6, 2021, from https://www.hitechwhizz.com/2020/03/5-advantages-and-disadvantages-drawbacks-benefits-of-hard-disk-drive.html. | ||

| + | * Singh, B. (2019, August 21). ''Locality of reference and cache operation in Cache Memory''. GeeksforGeeks. Retrieved December 6, 2021, from https://www.geeksforgeeks.org/locality-of-reference-and-cache-operation-in-cache-memory/. | ||

| + | * Techquickie. (2016, June 15). ''What is CPU Cache?'' [Video]. YouTube. https://www.youtube.com/watch?v=sHqNMHf2UNI | ||

| + | * Thampson. (2018, February 23). ''Types of SSDs and Which Ones to Buy''. Techbytes. Retrieved December 5, 2021, from https://blogs.umass.edu/Techbytes/2018/02/23/types-of-ssds-and-which-ones-to-buy/. | ||

Latest revision as of 23:44, 7 December 2021

Analyzing False Sharing

Group Members

- Kevin Chou

Introduction

False sharing is a well-known problem that can occur when taking advantage of parallelization in modern multi-processor computers with shared memory. Although the results will be correct, frequent false sharing results in significant performance loss and reduction in scalability, effectively nullifying the benefits of parallel computing.

The Cache

What is a Cache?

Before we can talk about false sharing, we need a brief overview of a cache. Like other storage mediums, a cache is a place used to store data and instructions that the CPU may use.

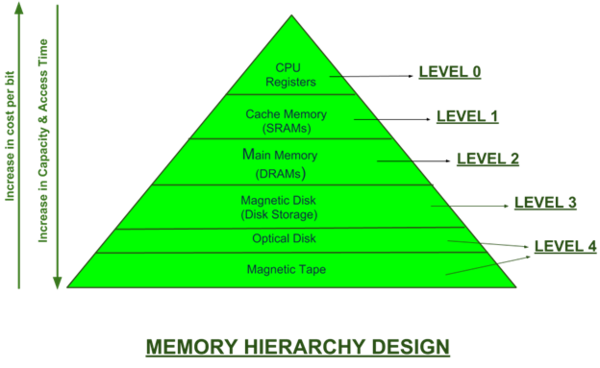

So why do we have so many types?

In an ideal world, the CPU would have lightning-fast access to all data on your machine at any given time to maximize the power and speed of modern-day CPUs. In reality, there are limitations and trade-offs when balancing speed, storage capacity, and cost. To compromise, a computer utilizes many types of memory.

Near the bottom, we have secondary storage such as hard drives, flash drives, and SSDs, which can hold vast quantities of data permanently but are relatively slow. Hard drives in particular have mechanical parts that must physically move. If the CPU relied on this type of memory alone, its slow speed would become a massive bottleneck: the CPU would spend most of its time idle, waiting for data, and to the end user the machine would appear extremely sluggish and unresponsive.

Next is Dynamic Random Access Memory (DRAM), which uses capacitors and transistors to store data. The capacitors must be refreshed constantly to hold their charge, which makes DRAM volatile: its data disappears when power is cut. Compared with secondary storage, DRAM is more expensive per byte and smaller in capacity, but much faster. However, it is still not fast enough to keep up with the CPU.

Last, we have Static Random Access Memory (SRAM), which is extremely fast, but even smaller and more expensive. This is the type of memory used in the cache.

When it needs data, the CPU looks in the cache first. If the data is there, it is a cache hit. If it is not, it is a cache miss, and the CPU must search main memory or go even further down the hierarchy until it finds the data. Minimizing the number of cache misses ensures the CPU has a steady flow of data it can quickly retrieve and compute on.

The cache operates on locality of reference, which refers to the tendency of programs to access the same set of memory locations repeatedly over a short period of time. There are two major types. Temporal locality means that when a memory location is accessed, it will likely be accessed again in the near future. Spatial locality means that when a memory location is accessed, nearby memory locations will likely be needed as well. Using these principles and complex algorithms, data is brought into the cache ahead of time to minimize the number of cache misses.
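To make hits, misses, and spatial locality concrete, here is a minimal sketch of a toy direct-mapped cache. The names ToyCache and walk_sequential, the 64-byte line size, and the 16-slot capacity are all assumptions made for illustration; real CPU caches are far more elaborate.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical toy model: a direct-mapped cache whose slots each hold one
// 64-byte line. It only tracks which line sits in which slot.
struct ToyCache {
    static constexpr std::uint64_t kLineSize = 64;  // assumed line size in bytes
    std::vector<std::int64_t> tags;                 // -1 marks an empty slot
    std::size_t hits = 0;
    std::size_t misses = 0;

    explicit ToyCache(std::size_t num_lines) : tags(num_lines, -1) {}

    // Record one access to byte address addr; returns true on a cache hit.
    bool access(std::uint64_t addr) {
        const std::uint64_t line = addr / kLineSize;   // memory line holding addr
        const std::size_t slot = line % tags.size();   // slot that line maps to
        if (tags[slot] == static_cast<std::int64_t>(line)) {
            ++hits;
            return true;
        }
        tags[slot] = static_cast<std::int64_t>(line);  // load the line on a miss
        ++misses;
        return false;
    }
};

// Walk count consecutive 8-byte values starting at address 0.
ToyCache walk_sequential(std::size_t count) {
    ToyCache cache(16);
    for (std::size_t i = 0; i < count; ++i) {
        cache.access(i * 8);
    }
    return cache;
}
```

In this model, walking 64 consecutive 8-byte values touches 8 lines: the first access to each line misses and the following 7 hit, which is the benefit spatial locality is meant to capture.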

Cache Coherence and Cache Line

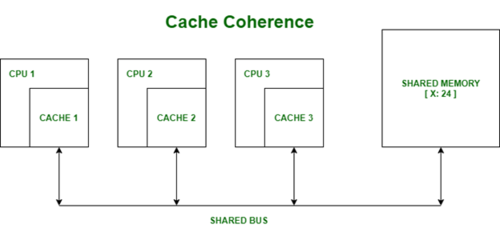

Each processor has its own local cache. When data is needed, a fixed-size block of memory is transferred to the cache; these blocks are known as cache lines. Because the caches are separate, several processors can hold a copy of the same cache line at the same time.

However, if they each modify their own copy, how do we know which one is correct? We can’t store all the different versions or pick one at random to be the correct version; this would create havoc in our computer systems.

The answer is we need cache coherence. Cache coherence is defined as the uniformity of shared resource data that ends up stored in multiple local caches. In other words, we must keep all the local caches synchronized. The challenge of doing so is known as the cache coherence problem. To solve this problem, multi-processor systems rely on cache coherence protocols to manage and maintain the cache. While the details of these protocols are beyond the scope of this assignment, more information on cache coherence protocols can be found here: https://en.wikipedia.org/wiki/Cache_coherency_protocols_(examples).
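As a rough illustration of what a coherence protocol has to do, the sketch below tracks one cache line across several cores using three simplified states (Invalid, Shared, Modified). This is a deliberately reduced, hypothetical model in the spirit of write-invalidate protocols, not the protocol of any particular processor; the type and member names are made up for this example.

```cpp
#include <array>
#include <cstddef>

// Simplified per-core state of one cache line.
enum class LineState { Invalid, Shared, Modified };

// Hypothetical model of a single cache line as seen by kCores local caches.
template <std::size_t kCores>
struct CoherentLine {
    std::array<LineState, kCores> state{};  // value-initialised: all Invalid
    std::size_t invalidations = 0;          // copies invalidated by writes

    // A core reads the line.
    void read(std::size_t core) {
        if (state[core] != LineState::Invalid) {
            return;  // the core already holds a valid copy
        }
        // A modified copy held elsewhere is downgraded so the reader can share it.
        for (auto& s : state) {
            if (s == LineState::Modified) {
                s = LineState::Shared;
            }
        }
        state[core] = LineState::Shared;
    }

    // A core writes the line: every other valid copy must be invalidated.
    void write(std::size_t core) {
        for (std::size_t c = 0; c < kCores; ++c) {
            if (c != core && state[c] != LineState::Invalid) {
                state[c] = LineState::Invalid;
                ++invalidations;
            }
        }
        state[core] = LineState::Modified;
    }
};
```

In this model, if two cores both read the line and one of them then writes it, the other core's copy becomes Invalid and must be fetched again on its next access.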

False Sharing

What is False Sharing?

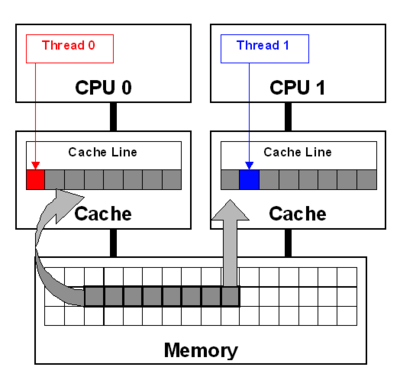

Now that we understand the basics of a cache, how does this relate to the concept of false sharing? False sharing occurs when multiple processors modify data that resides on the same cache line. When one processor writes to the line, the coherence protocol marks the copies held in the other processors' caches as invalid. Those processors must then fetch an updated copy of the line before they can continue, resulting in significant delays. If false sharing is not minimized, the problem quickly spirals out of control as the number of processors increases.

The key thing to note is that the processors do not need to modify the same piece of data. If the modified data merely happens to belong to the same cache line, the other processors' copies of that line are invalidated and must be refreshed to maintain cache coherency.

Example

In workshop 2, we briefly encountered false sharing. However, we did not get a formal explanation in lecture, so this section will serve to provide more context to the problem.

We were asked to multi-thread a serial version of a simple algorithm that approximates pi by numerically integrating 1/(1 + x^2) from 0 to 1 and multiplying the result by 4. The serial version used a scalar sum variable to accumulate the calculations. For our naïve attempt, we identified a potential issue with using a scalar sum in a multi-threaded program: if every thread updates the same scalar without synchronization, the updates can interleave and overwrite each other. We have no direct control over the order in which threads run, so leaving it up to chance resulted in a race condition. This is not good, but we still need a shared variable so the threads can return their values. To get around this, we changed sum to an array. Now each thread stores its calculations in the array element indexed by its own thread id instead of competing with the other threads to update the scalar sum.

    #include <iostream>
    #include <iomanip>
    #include <cstdlib>
    #include <chrono>
    #include <omp.h>

    #define NUM_THREADS 8 // number of threads to request

    using namespace std::chrono;

    // report system time
    void reportTime(const char* msg, steady_clock::duration span)
    {
        auto ms = duration_cast<milliseconds>(span);
        std::cout << msg << " - took - " <<
            ms.count() << " milliseconds" << std::endl;
    }

    int main(int argc, char** argv)
    {
        if (argc != 2)
        {
            std::cerr << argv[0] << ": invalid number of arguments\n";
            std::cerr << "Usage: " << argv[0] << " no_of_slices\n";
            return 1;
        }

        int n = std::atoi(argv[1]);
        steady_clock::time_point ts, te;

        // calculate pi by integrating the area under 1/(1 + x^2) in n steps
        ts = steady_clock::now();

        int actual_thread_count;
        double pi = 0.0f;
        double sum[NUM_THREADS] = { 0.0f };
        double step = 1.0 / (double)n;

        omp_set_num_threads(NUM_THREADS);

        #pragma omp parallel
        {
            int id, num_threads;
            double x;

            id = omp_get_thread_num();
            num_threads = omp_get_num_threads();

            // get master thread to return how many threads were actually created
            if (id == 0)
            {
                actual_thread_count = num_threads;
            }

            // each thread is responsible for calculating the area of a specific set of sections underneath the curve
            for (int i = id; i < n; i = i + num_threads)
            {
                x = ((double)i + 0.5f) * step;
                sum[id] += 1.0f / (1.0f + x * x);
            }
        }

        // sum up each calculation to get approximation of pi
        for (int i = 0; i < actual_thread_count; i++)
        {
            pi += 4 * sum[i] * step;
        }

        te = steady_clock::now();

        std::cout << "n = " << n <<
            std::fixed << std::setprecision(15) <<
            "\n pi(exact) = " << 3.141592653589793 <<
            "\n pi(calcd) = " << pi << std::endl;

        reportTime("Integration", te - ts);
    }

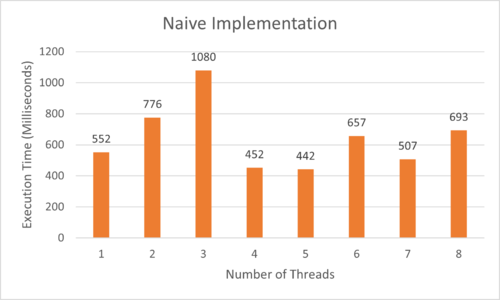

Results

The algorithm calculates the correct answer, but the performance is absolutely terrible. The reason is that the sum array is a contiguous block of memory, with each double typically taking up 8 bytes. With 8 threads the whole array is only 64 bytes, so it occupies at most two 64-byte cache lines. Even though each thread modifies only its own element, the cache works in whole lines, so the neighbouring elements are brought in along with it, and every write by one thread invalidates the line in the other threads' caches. Although there were cases where a higher thread count produced better results, many cases performed worse than a single thread. This variation comes from the scheduling of thread execution, which is out of the programmer's hands: it is possible that a particular schedule happened to minimize the frequency of false sharing, giving better performance. However, this is extremely unreliable, so we need a better solution to false sharing.
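The arithmetic behind this can be checked with a short sketch. It assumes a 64-byte cache line, 8-byte doubles (as in the example's sum array), and an array that starts exactly on a line boundary; the helper names line_of_element and share_line are hypothetical and exist only for this illustration.

```cpp
#include <cstddef>

constexpr std::size_t kCacheLineSize = 64;              // assumed line size in bytes
constexpr std::size_t kDoubleSize = sizeof(double);     // 8 on typical platforms

// Index of the cache line (counted from the start of the array) holding
// element `index`, assuming the array begins on a line boundary.
constexpr std::size_t line_of_element(std::size_t index, std::size_t element_size) {
    return (index * element_size) / kCacheLineSize;
}

// True when elements i and j of the array fall on the same cache line.
constexpr bool share_line(std::size_t i, std::size_t j, std::size_t element_size) {
    return line_of_element(i, element_size) == line_of_element(j, element_size);
}

// With 8-byte doubles, elements 0 through 7 all land on line 0,
// while element 8 would start the next line.
static_assert(share_line(0, 7, kDoubleSize), "elements 0 and 7 share a line");
static_assert(!share_line(0, 8, kDoubleSize), "element 8 is on the next line");
```

Under these assumptions, all eight per-thread sums sit on one line, so every update by one thread touches the line every other thread is using.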

Solutions to False Sharing

Padding

Assuming a 64-byte cache line, what if each thread's element in the array was the only piece of data in its cache line?

This observation was the basis for our first solution to false sharing. The idea was to pad out each element in the array with enough space to act as a boundary or separator for the cache line. If we can force each thread to bring only its own array element into its cache, then each thread will work on a distinct cache line, thus eliminating false sharing.

In the workshop, we accomplished this by making several modifications to the original code:

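1. Define a padding size so that each row spans at least one 64-byte cache line. Note that sum holds 8-byte doubles, so a PAD of 7 would already give 64 bytes per row; the workshop value of 15 gives 128 bytes per row, which also keeps each thread's element on its own line.

        #define PAD 15

2. Change sum to a 2D array.

        double sum[NUM_THREADS][PAD + 1] = { 0.0f };

3. Modify the loops to store data only in the first element of each row of the 2D array.

        for (int i = id; i < n; i = i + num_threads)
        {
            x = ((double)i + 0.5f) * step;
            sum[id][0] += 1.0f / (1.0f + x * x);
        }

        for (int i = 0; i < actual_thread_count; i++)
        {
            pi += 4 * sum[i][0] * step;
        }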
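The same idea can also be expressed with the C++11 alignas specifier instead of a hand-sized 2D array. This is an alternative sketch, not the workshop's code: the 64-byte line size is an assumption, and PaddedSum and stride_bytes are hypothetical names introduced for illustration.

```cpp
#include <cstddef>

constexpr std::size_t kAssumedLineSize = 64;  // assumption: 64-byte cache lines

// One partial sum per thread. alignas makes the compiler both align each
// object to a line boundary and pad its size up to a full line.
struct alignas(kAssumedLineSize) PaddedSum {
    double value = 0.0;
};

static_assert(sizeof(PaddedSum) == kAssumedLineSize,
              "each element occupies exactly one assumed cache line");
static_assert(alignof(PaddedSum) == kAssumedLineSize,
              "each element starts on an assumed line boundary");

// Byte distance between neighbouring elements of a PaddedSum array.
std::size_t stride_bytes() {
    PaddedSum sums[2];
    return static_cast<std::size_t>(
        reinterpret_cast<const char*>(&sums[1]) -
        reinterpret_cast<const char*>(&sums[0]));
}
```

With this layout, an array declared as PaddedSum sum[NUM_THREADS] would be updated through sum[id].value, and the compiler rather than a hand-computed PAD constant enforces the spacing. The line size itself still has to be assumed.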

Drawbacks

While the idea works, there were several issues that prevented it from being an ideal solution:

- Wasted memory

For every 8 bytes used to store a thread's partial sum, 120 bytes are empty padding (with PAD set to 15), and the waste grows with every thread that is added. Even though the total is small here, it is still very inefficient.

- The cache line only contains one piece of data, with the rest being padding

We want to minimize cache misses, but padding occupies valuable cache space and so reduces the effectiveness of the cache. In a more complex program, there could be plenty of other data the thread might have kept on the same cache line.

- Must know the cache line size

The cache line size can vary between machines, making the code less portable.

Synchronization and Thread Local Variables

Thus, we searched for a more elegant solution that avoids race conditions and cache line sharing without resorting to padding.

    #pragma omp parallel
    {
        int id, num_threads;
        double x, sum = 0.0f;

        id = omp_get_thread_num();
        num_threads = omp_get_num_threads();

        // get master thread to return how many threads were actually created
        if (id == 0)
        {
            actual_thread_count = num_threads;
        }

        // each thread is responsible for calculating the area of a specific set of sections underneath the curve
        for (int i = id; i < n; i = i + num_threads)
        {
            x = ((double)i + 0.5f) * step;
            sum += 1.0f / (1.0f + x * x);
        }

        #pragma omp critical
        {
            // sum up each calculation to get approximation of pi
            pi += 4 * sum * step;
        }
    }

To solve the concurrency issue, we used OpenMP's critical construct, which gives more direct control over thread execution. Originally, we faced an issue where the program could yield different results depending on the order in which threads executed. By marking a region as a critical section, we ensure that only one thread at a time can execute the code inside it; other threads must wait until the region is unoccupied before taking their turn. One thing we had to be careful about was the size of the critical region. Making it too large reduces the effectiveness of multi-threading, as threads may sit idle for too long waiting their turn. Although the rest of the parallel region can be executed in any order, we definitely don't want ambiguity about which thread has the most up-to-date value of pi. Using #pragma omp critical guarantees that only one thread updates pi at a time, so no update is lost.

The next issue was tackling cache line sharing. With an array, the memory was contiguous making it highly likely the data would share the same cache line. However, by allocating a local sum variable for each thread, it becomes less likely threads will share the same cache line mitigating the impact of false sharing.
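OpenMP can also generate this pattern itself through the reduction clause, which gives each thread a private copy of the accumulator and combines the copies when the loop ends. The sketch below is a variant for comparison rather than the workshop solution; the function name integrate_pi is hypothetical. If the compiler is run without OpenMP support, the pragma is ignored and the loop simply runs serially with the same result.

```cpp
// Approximate pi by integrating 1/(1 + x^2) over [0, 1] in n steps (n > 0)
// and multiplying by 4, using a per-thread private sum.
double integrate_pi(int n) {
    const double step = 1.0 / static_cast<double>(n);
    double sum = 0.0;

    // reduction(+ : sum): every thread accumulates into its own private copy
    // of sum, and OpenMP adds the copies together after the loop.
    #pragma omp parallel for reduction(+ : sum)
    for (int i = 0; i < n; ++i) {
        const double x = (static_cast<double>(i) + 0.5) * step;
        sum += 1.0 / (1.0 + x * x);
    }

    return 4.0 * sum * step;
}
```

Because each thread's accumulator is private, the threads never write to a shared array inside the hot loop, and no explicit critical region is needed for the final combination.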

Conclusion

Identifying false sharing requires detailed code inspection as everything will appear to work normally on the surface. However, when left unchecked false sharing will be detrimental to the program's performance and scalability. This article discussed the relation between the cache and false sharing. In addition, using a simple example, we explained how you can use padding, synchronization, and thread local variables to reduce the frequency of false sharing.

Presentation

PDF File:Kchou false sharing.pdf
