Difference between revisions of "GPU621/Braindead"

(Updated STL section with Container Class Templates) |

(Completed Report) |

||

| (One intermediate revision by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{GPU621/DPS921 Index | 20214}} | {{GPU621/DPS921 Index | 20214}} | ||

= C++11 STL Comparison to TBB - Case Studies = | = C++11 STL Comparison to TBB - Case Studies = | ||

| − | + | The objective of this research project is to compare the STL to the TBB. This project specifically covers the container classes in each of the STL and TBB, and compares their performance by using graphs. | |

== Group Members == | == Group Members == | ||

| − | Joseph Chan | + | Joseph Chan | Bowen Chu | Henry Xu |

| − | Bowen Chu | ||

| − | Henry Xu | ||

== Background == | == Background == | ||

| − | C++11 introduces many improvements and modifications, such as the addition of function objects and hash tables. These can be | + | C++11 introduces many improvements and modifications, such as the addition of function objects and hash tables. These can be found within the STL, which stands for Standard Template Library. It consists of commonly used containers, algorithms, functions and iterator templates. |

| − | found within the STL, which stands for Standard Template Library. It consists of commonly used containers, algorithms, | ||

| − | functions and iterator templates. | ||

| − | The C++11 STL has container classes such as a list and deque. However, the methods within these classes do not have built-in | + | The C++11 STL has container classes such as a list and deque. However, the methods within these classes do not have built-in concurrency, despite the STL having thread classes that may be used for parallelization. Intel's oneAPI Threading Building Blocks (oneTBB) implements similar container classes that, unlike the STL container classes, has built-in concurrency which allows operations performed with them to be executed in parallel. Thus, oneTBB is one of many tools that can be used for parallel programming with containers. |

| − | concurrency, despite the STL having thread classes that may be used for parallelization. Intel's oneAPI Threading Building | ||

| − | Blocks (oneTBB) implements similar container classes that, unlike the STL container classes, has built-in concurrency which | ||

| − | allows operations performed with them to be executed in parallel. Thus, oneTBB is one of many tools that can be used for | ||

| − | parallel programming with containers. | ||

== Standard Template Library (STL) == | == Standard Template Library (STL) == | ||

| − | The STL has many container classes. In this section, only three of the container types will be covered: vector, queue, and | + | The STL has many container classes. In this section, only three of the container types will be covered: vector, queue, and hash table. |

| − | hash table. | ||

=== Container Class Templates === | === Container Class Templates === | ||

| Line 68: | Line 59: | ||

=== Container Performance === | === Container Performance === | ||

| + | Simple programs will be used to measure the performance of the STL containers, as seen below. | ||

| + | |||

==== Vector ==== | ==== Vector ==== | ||

| + | Code: | ||

| + | <pre> | ||

| + | // std_vector.cpp | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | #include <string> | ||

| + | #include <vector> | ||

| + | using namespace std; | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | // reports time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<microseconds>(span); | ||

| + | cout << msg << " - took - " << ms.count() << " microseconds" << endl; | ||

| + | } | ||

| + | |||

| + | int main() | ||

| + | { | ||

| + | vector<int> v; | ||

| + | int size = 10; | ||

| + | steady_clock::time_point ts, te; | ||

| + | ts = steady_clock::now(); | ||

| + | for (int i = 0; i < size; i++) { | ||

| + | v.push_back(i); | ||

| + | } | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("STL Vector", te - ts); | ||

| + | |||

| + | cout << "Size of " << size << endl; | ||

| + | cout << "Contains: " << endl; | ||

| + | for (auto i = v.begin(); i != v.end(); i++) { | ||

| + | std::cout << *i << " "; | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | This program creates a vector to store elements and a size variable which specifies the number of elements to add to the vector. The time between the insertion of elements is recorded and printed out at the end, along with the size and the vector’s contents. | ||

| + | |||

| + | Output: | ||

| + | <pre> | ||

| + | STL Vector - took - 24 microseconds | ||

| + | Size of 10 | ||

| + | Contains: | ||

| + | 0 1 2 3 4 5 6 7 8 9 | ||

| + | </pre> | ||

| + | |||

==== Queue ==== | ==== Queue ==== | ||

| − | ==== | + | Code: |

| + | <pre> | ||

| + | // std_queue.cpp | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | #include <string> | ||

| + | #include <queue> | ||

| + | using namespace std; | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | // reports time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<microseconds>(span); | ||

| + | cout << msg << " - took - " << ms.count() << " microseconds" << endl; | ||

| + | } | ||

| + | |||

| + | int main() | ||

| + | { | ||

| + | queue<int> q; | ||

| + | int size = 10; | ||

| + | steady_clock::time_point ts, te; | ||

| + | ts = steady_clock::now(); | ||

| + | for (int i = 0; i < size; i++) { | ||

| + | q.push(i); | ||

| + | } | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("STL Queue", te - ts); | ||

| + | |||

| + | cout << "Size of " << size << endl; | ||

| + | cout << "Contains: " << endl; | ||

| + | for (int i = 0; i < size; i++) { | ||

| + | std::cout << q.front() << " "; | ||

| + | q.pop(); | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | The code for testing the queue is similar to the vector code, except it will use the push() method to add elements into it. Iterators cannot be used to iterate through queues because there should be no access to the elements in the middle of the queue, only the front and back. We will use a normal loop to print each element by retrieving the element in front and popping it off. | ||

| + | |||

| + | Output: | ||

| + | <pre> | ||

| + | STL Queue - took - 11 microseconds | ||

| + | Size of 10 | ||

| + | Contains: | ||

| + | 0 1 2 3 4 5 6 7 8 9 | ||

| + | </pre> | ||

| + | |||

| + | ==== Unordered Map ==== | ||

| + | Code: | ||

| + | <pre> | ||

| + | // std_unordered_map.cpp | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | #include <string> | ||

| + | #include <unordered_map> | ||

| + | using namespace std; | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | // reports time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<microseconds>(span); | ||

| + | cout << msg << " - took - " << ms.count() << " microseconds" << endl; | ||

| + | } | ||

| + | |||

| + | int main() | ||

| + | { | ||

| + | unordered_map<std::string, int> u; | ||

| + | int size = 10; | ||

| + | steady_clock::time_point ts, te; | ||

| + | ts = steady_clock::now(); | ||

| + | for (int i = 0; i < size; i++) { | ||

| + | u.emplace(to_string(i), i); | ||

| + | } | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("STL Hash Map", te - ts); | ||

| + | |||

| + | cout << "Size of " << size << endl; | ||

| + | cout << "Contains: " << endl; | ||

| + | for (auto i : u) { | ||

| + | cout << "Key: " << i.first << " | Value: " << i.second << endl; | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | This program is similar to the previous two, except that key-value pairs are being added to the hash map. | ||

| + | |||

| + | Output: | ||

| + | <pre> | ||

| + | STL Hash Map - took - 91 microseconds | ||

| + | Size of 10 | ||

| + | Contains: | ||

| + | Key: 0 | Value: 0 | ||

| + | Key: 1 | Value: 1 | ||

| + | Key: 2 | Value: 2 | ||

| + | Key: 3 | Value: 3 | ||

| + | Key: 4 | Value: 4 | ||

| + | Key: 5 | Value: 5 | ||

| + | Key: 6 | Value: 6 | ||

| + | Key: 7 | Value: 7 | ||

| + | Key: 8 | Value: 8 | ||

| + | Key: 9 | Value: 9 | ||

| + | </pre> | ||

| + | |||

| + | === Concurrency === | ||

| + | As mentioned before, the STL container classes do not have built-in concurrency functionality. However, it is still possible to parallelize the operations that are to be performed with them, by using the std::thread class from the STL or a threading library such as OpenMP or TBB. With operations being performed on the container in parallel, race conditions may occur with the elements and unexpectedly modify the data in a way that is not desirable. In this case, we could solve the problem by using mutexes or atomics. However, doing this may serialize the operations rather than parallelize them, so it is not reliable to use such a solution unless it is absolutely needed. The most reliable solution would be to create your own container class templates and manually parallelize each of their methods. Fortunately, it is not necessary to do so since we can simply use the TBB library, which has implemented many container class templates similar to the STL templates, but with built-in concurrency. | ||

| + | |||

| + | == Threading Building Blocks (TBB) == | ||

| + | TBB refers to Intel's oneAPI Threading Building Blocks (although it is actually called oneTBB). It is a library used for parallel programming, featuring the scheduling of threads and breakdown of computations into tasks that run concurrently. | ||

| − | |||

=== Container Class Templates === | === Container Class Templates === | ||

| + | All of the below containers are similar to the STL. There may be a few differences, but the functionality is mostly the same. | ||

==== tbb::concurrent_vector ==== | ==== tbb::concurrent_vector ==== | ||

| + | The tbb::concurrent_vector is just like std::vector in the STL. Unlike std::vector, it enables the vector to be safely grown concurrently without the threads interfering with each other. | ||

| + | |||

| + | Member functions: | ||

| + | * push_back - adds an element to the end | ||

| + | * grow_by - increases the size by the specified number of elements | ||

| + | * grow_to_at_least - same as grow_by, but increases the capacity to at least the specified size | ||

| + | * size - returns the number of elements, but may return values under concurrent construction | ||

| + | |||

==== tbb::concurrent_queue ==== | ==== tbb::concurrent_queue ==== | ||

| − | ==== tbb:: | + | The tbb::concurrent_queue is just like std::queue in the STL, except with enhanced concurrency. There is a variant tbb::concurrent_bounded_queue which adds blocking operations to its methods. |

| + | |||

| + | Member functions: | ||

| + | * pop - removes an element | ||

| + | * push - adds an element | ||

| + | * try_push - adds an element only if it does not exceed the capacity | ||

| + | * size - returns the number of elements, but may return values under concurrent construction | ||

| + | |||

| + | ==== tbb::concurrent_unordered_map ==== | ||

| + | The tbb::concurrent_unordered_map is just like std::unordered_map in the STL, except with enhanced concurrency. There is a variant tbb::concurrent_unordered_multimap which stores a counter for each pair with a duplicate key. | ||

| + | |||

| + | Member functions: | ||

| + | * insert - inserts an element | ||

| + | * find - finds an element | ||

| + | * emplace - constructs and inserts an element | ||

| + | * size - returns the number of elements, but may return values under concurrent construction | ||

| + | |||

=== Container Performance === | === Container Performance === | ||

| + | The below programs use very similar code that were in the programs for STL container performance. | ||

==== Concurrent Vector ==== | ==== Concurrent Vector ==== | ||

| + | Code: | ||

| + | <pre> | ||

| + | // tbb_concurrent_vector.cpp | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | #include <string> | ||

| + | #include <tbb/tbb.h> | ||

| + | using namespace std; | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | // reports time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<microseconds>(span); | ||

| + | cout << msg << " - took - " << ms.count() << " microseconds" << endl; | ||

| + | } | ||

| + | |||

| + | int main() | ||

| + | { | ||

| + | tbb::concurrent_vector<int> v({ 0 }); | ||

| + | int size = 10; | ||

| + | int grainsize = 10000000; | ||

| + | steady_clock::time_point ts, te; | ||

| + | tbb::blocked_range<int> range(0, size, grainsize); | ||

| + | auto setV = [&](tbb::blocked_range<int>& r) { | ||

| + | for (auto i = r.begin(); i != r.end(); i++) { | ||

| + | v.push_back(i); | ||

| + | } | ||

| + | }; | ||

| + | ts = steady_clock::now(); | ||

| + | tbb:parallel_for(range, setV); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("TBB Concurrent Vector", te - ts); | ||

| + | |||

| + | std::cout << "Size of " << size << std::endl; | ||

| + | std::cout << "Grainsize of " << grainsize << std::endl; | ||

| + | std::cout << "Contains: " << std::endl; | ||

| + | for (auto i = v.begin(); i != v.end(); i++) { | ||

| + | std::cout << *i << " "; | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Output: | ||

| + | <pre> | ||

| + | TBB Concurrent Vector - took - 2304 microseconds | ||

| + | Size of 10 | ||

| + | Grainsize of 10000000 | ||

| + | Contains: | ||

| + | 0 0 1 2 3 4 5 6 7 8 9 | ||

| + | </pre> | ||

==== Concurrent Queue ==== | ==== Concurrent Queue ==== | ||

| − | ==== Concurrent | + | Code: |

| + | <pre> | ||

| + | // tbb_concurrent_queue.cpp | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | #include <string> | ||

| + | #include <tbb/tbb.h> | ||

| + | using namespace std; | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | // reports time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<microseconds>(span); | ||

| + | cout << msg << " - took - " << ms.count() << " microseconds" << endl; | ||

| + | } | ||

| + | |||

| + | int main() | ||

| + | { | ||

| + | tbb::concurrent_queue<int> q; | ||

| + | int val, size = 10; | ||

| + | int grainsize = 10000000; | ||

| + | steady_clock::time_point ts, te; | ||

| + | tbb::blocked_range<int> range(0, size, grainsize); | ||

| + | auto setQ = [&](tbb::blocked_range<int>& r) { | ||

| + | for (int i = 0; i < size; i++) { | ||

| + | q.push(i); | ||

| + | } | ||

| + | }; | ||

| + | ts = steady_clock::now(); | ||

| + | tbb:parallel_for(range, setQ); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("TBB Concurrent Queue", te - ts); | ||

| + | |||

| + | cout << "Size of " << size << endl; | ||

| + | cout << "Grainsize of " << grainsize << endl; | ||

| + | cout << "Contains: " << endl; | ||

| + | for (int i = 0; i < size; i++) { | ||

| + | q.try_pop(val); | ||

| + | std::cout << val << " "; | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Output: | ||

| + | <pre> | ||

| + | TBB Concurrent Queue - took - 1308 microseconds | ||

| + | Size of 10 | ||

| + | Grainsize of 10000000 | ||

| + | Contains: | ||

| + | 0 1 2 3 4 5 6 7 8 9 | ||

| + | </pre> | ||

| + | ==== Concurrent Unordered Map ==== | ||

| + | Code: | ||

| + | <pre> | ||

| + | // tbb_concurrent_unordered_map.cpp | ||

| + | #include <iostream> | ||

| + | #include <chrono> | ||

| + | #include <string> | ||

| + | #include <tbb/tbb.h> | ||

| + | using namespace std; | ||

| + | using namespace std::chrono; | ||

| + | |||

| + | // reports time | ||

| + | void reportTime(const char* msg, steady_clock::duration span) { | ||

| + | auto ms = duration_cast<microseconds>(span); | ||

| + | cout << msg << " - took - " << ms.count() << " microseconds" << endl; | ||

| + | } | ||

| + | |||

| + | int main() | ||

| + | { | ||

| + | tbb::concurrent_unordered_map<std::string, int> u; | ||

| + | int size = 10; | ||

| + | int grainsize = 10000000; | ||

| + | steady_clock::time_point ts, te; | ||

| + | tbb::blocked_range<int> range(0, size, grainsize); | ||

| + | auto setU = [&](tbb::blocked_range<int>& r) { | ||

| + | for (auto i = r.begin(); i != r.end(); i++) { | ||

| + | u.emplace(to_string(i), i); | ||

| + | } | ||

| + | }; | ||

| + | ts = steady_clock::now(); | ||

| + | tbb::parallel_for(range, setU); | ||

| + | te = steady_clock::now(); | ||

| + | reportTime("STL Hash Map (TBB Parallelized)", te - ts); | ||

| + | |||

| + | cout << "Size of " << size << endl; | ||

| + | cout << "Grainsize of " << grainsize << endl; | ||

| + | cout << "Contains: " << endl; | ||

| + | for (auto i : u) { | ||

| + | cout << "Key: " << i.first << " | Value: " << i.second << endl; | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Output: | ||

| + | <pre> | ||

| + | STL Hash Map (TBB Parallelized) - took - 3235 microseconds | ||

| + | Size of 10 | ||

| + | Grainsize of 10000000 | ||

| + | Contains: | ||

| + | Key: 5 | Value: 5 | ||

| + | Key: 9 | Value: 9 | ||

| + | Key: 1 | Value: 1 | ||

| + | Key: 3 | Value: 3 | ||

| + | Key: 7 | Value: 7 | ||

| + | Key: 6 | Value: 6 | ||

| + | Key: 2 | Value: 2 | ||

| + | Key: 4 | Value: 4 | ||

| + | Key: 8 | Value: 8 | ||

| + | Key: 0 | Value: 0 | ||

| + | </pre> | ||

| + | |||

| + | === Concurrency === | ||

| + | The containers of TBB feature fine-grained locking (locking small sections or elements rather than entire sections) and lock-free techniques (improves thread safety without the use of locks) when parallelizing code, allowing for task-based programming. However, they have a drawback of a large amount of parallel overhead, potentially outweighing the speed gained over serial containers on a small scale. On top of that, despite not using mutexes, two-thirds of the containers still block in some way, causing increased wait time between threads. | ||

== STL and TBB Comparison == | == STL and TBB Comparison == | ||

=== Advantages and Disadvantages === | === Advantages and Disadvantages === | ||

| + | Pros of STL: | ||

| + | * Faster serial execution | ||

| + | Cons of STL: | ||

| + | * User needs to ensure that threads don't access the same elements | ||

| + | |||

| + | Pros of TBB | ||

| + | * Built-in locks and waiting | ||

| + | * Built-in correction | ||

| + | Cons of STL: | ||

| + | * Larger parallel overhead | ||

| + | |||

| + | === Graph Analysis === | ||

| + | ==== Vector ==== | ||

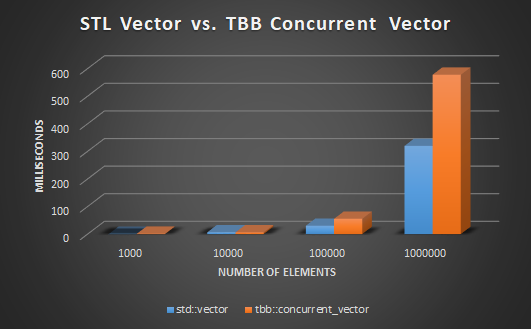

| + | [[File:STL_TBB_Vector_Graph.png]] | ||

| + | |||

| + | It appears that tbb::concurrent_vector is significantly slower than the std::vector as the number of elements increase. This could be because the amount of parallel overhead scales with the number of elements; the more elements there are, the higher the parallel overhead. | ||

| + | |||

| + | ==== Queue ==== | ||

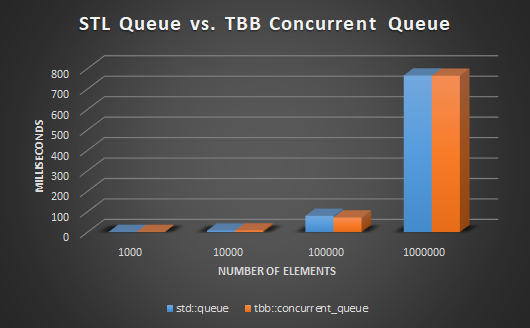

| + | [[File:STL_TBB_Queue_Graph.png]] | ||

| + | |||

| + | They have almost the same execution time (although they look identical). The reason for this is unknown to us. | ||

| + | |||

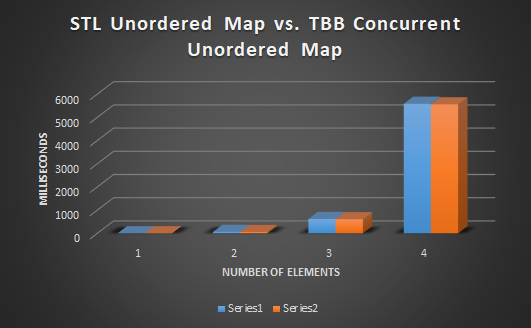

| + | ==== Unordered Map ==== | ||

| + | [[File:STL_TBB_UnorderedMap_Graph.png]] | ||

| + | |||

| + | They have almost the same execution time (although they look identical). The reason for this is unknown to us. | ||

Latest revision as of 04:28, 12 August 2021

GPU621/DPS921 | Participants | Groups and Projects | Resources | Glossary

Contents

C++11 STL Comparison to TBB - Case Studies

The objective of this research project is to compare the STL to the TBB. This project specifically covers the container classes in each of the STL and TBB, and compares their performance by using graphs.

Group Members

Joseph Chan | Bowen Chu | Henry Xu

Background

C++11 introduces many improvements and modifications, such as the addition of function objects and hash tables. These can be found within the STL, which stands for Standard Template Library. It consists of commonly used containers, algorithms, functions and iterator templates.

The C++11 STL has container classes such as a list and deque. However, the methods within these classes do not have built-in concurrency, despite the STL having thread classes that may be used for parallelization. Intel's oneAPI Threading Building Blocks (oneTBB) implements similar container classes that, unlike the STL container classes, has built-in concurrency which allows operations performed with them to be executed in parallel. Thus, oneTBB is one of many tools that can be used for parallel programming with containers.

Standard Template Library (STL)

The STL has many container classes. In this section, only three of the container types will be covered: vector, queue, and hash table.

Container Class Templates

std::vector

The std::vector template has an underlying data structure of a dynamic array. That is, the std::vector stores elements in a sequence akin to an array, but also has the functionality of resizing to match the capacity to the number of elements in the container. Upon adding or removing elements, the std::vector will resize itself to create or remove space depending on the number of elements that will be added.

Member functions:

- at - accesses the specified element but throws an exception if it is out of bounds

- operator[] - accesses the specified element

- front - accesses the front element

- back - accesses the back element

- empty - checks whether the container is empty

- size - returns the number of elements

- clear - deletes all elements

- insert - inserts elements

- emplace - constructs and inserts an element

- erase - deletes an element

- push_back - adds an element to the end

std::queue

The std::queue template has the same properties as std::vector in that it is a dynamically resizing array, but implements the First-In First-Out (FIFO) approach. This means that the first element to be added into the std::queue will be the first element to be removed from the queue. It also implies that the last element to be added will be the last element to be removed.

Member functions:

- front - accesses the first element

- back - accesses the last element

- empty - checks whether the container is empty

- size - returns the number of elements

- push - inserts an element at the front

- emplace - constructs and inserts an element at the front

- push - inserts an element at the front

- pop - removes the first element

std::unordered_map

The std::unordered_map template has an underlying data structure of a hash map (or hash table). A hash map is an unsorted table of key-value pairs that have unique keys. In other words, there cannot be two or more pairs in the table that have the same key. In a hash table, the keys of the pairs are hashed by using a hash function.

Member functions:

- at - accesses the specified element but throws an exception if it is out of bounds

- operator[] - accesses or inserts a specified element

- find - finds an element with a specific key

- empty - checks whether the container is empty

- size - returns the number of elements

- clear - deletes all elements

- insert - inserts elements

- emplace - constructs and inserts an element

- erase - deletes an element

Container Performance

Simple programs will be used to measure the performance of the STL containers, as seen below.

Vector

Code:

// std_vector.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <vector>

using namespace std;

using namespace std::chrono;

// reports time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<microseconds>(span);

cout << msg << " - took - " << ms.count() << " microseconds" << endl;

}

int main()

{

vector<int> v;

int size = 10;

steady_clock::time_point ts, te;

ts = steady_clock::now();

for (int i = 0; i < size; i++) {

v.push_back(i);

}

te = steady_clock::now();

reportTime("STL Vector", te - ts);

cout << "Size of " << size << endl;

cout << "Contains: " << endl;

for (auto i = v.begin(); i != v.end(); i++) {

std::cout << *i << " ";

}

}

This program creates a vector to store elements and a size variable which specifies the number of elements to add to the vector. The time between the insertion of elements is recorded and printed out at the end, along with the size and the vector’s contents.

Output:

STL Vector - took - 24 microseconds Size of 10 Contains: 0 1 2 3 4 5 6 7 8 9

Queue

Code:

// std_queue.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <queue>

using namespace std;

using namespace std::chrono;

// reports time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<microseconds>(span);

cout << msg << " - took - " << ms.count() << " microseconds" << endl;

}

int main()

{

queue<int> q;

int size = 10;

steady_clock::time_point ts, te;

ts = steady_clock::now();

for (int i = 0; i < size; i++) {

q.push(i);

}

te = steady_clock::now();

reportTime("STL Queue", te - ts);

cout << "Size of " << size << endl;

cout << "Contains: " << endl;

for (int i = 0; i < size; i++) {

std::cout << q.front() << " ";

q.pop();

}

}

The code for testing the queue is similar to the vector code, except it will use the push() method to add elements into it. Iterators cannot be used to iterate through queues because there should be no access to the elements in the middle of the queue, only the front and back. We will use a normal loop to print each element by retrieving the element in front and popping it off.

Output:

STL Queue - took - 11 microseconds Size of 10 Contains: 0 1 2 3 4 5 6 7 8 9

Unordered Map

Code:

// std_unordered_map.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <unordered_map>

using namespace std;

using namespace std::chrono;

// reports time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<microseconds>(span);

cout << msg << " - took - " << ms.count() << " microseconds" << endl;

}

int main()

{

unordered_map<std::string, int> u;

int size = 10;

steady_clock::time_point ts, te;

ts = steady_clock::now();

for (int i = 0; i < size; i++) {

u.emplace(to_string(i), i);

}

te = steady_clock::now();

reportTime("STL Hash Map", te - ts);

cout << "Size of " << size << endl;

cout << "Contains: " << endl;

for (auto i : u) {

cout << "Key: " << i.first << " | Value: " << i.second << endl;

}

}

This program is similar to the previous two, except that key-value pairs are being added to the hash map.

Output:

STL Hash Map - took - 91 microseconds Size of 10 Contains: Key: 0 | Value: 0 Key: 1 | Value: 1 Key: 2 | Value: 2 Key: 3 | Value: 3 Key: 4 | Value: 4 Key: 5 | Value: 5 Key: 6 | Value: 6 Key: 7 | Value: 7 Key: 8 | Value: 8 Key: 9 | Value: 9

Concurrency

As mentioned before, the STL container classes do not have built-in concurrency functionality. However, it is still possible to parallelize the operations that are to be performed with them, by using the std::thread class from the STL or a threading library such as OpenMP or TBB. With operations being performed on the container in parallel, race conditions may occur with the elements and unexpectedly modify the data in a way that is not desirable. In this case, we could solve the problem by using mutexes or atomics. However, doing this may serialize the operations rather than parallelize them, so it is not reliable to use such a solution unless it is absolutely needed. The most reliable solution would be to create your own container class templates and manually parallelize each of their methods. Fortunately, it is not necessary to do so since we can simply use the TBB library, which has implemented many container class templates similar to the STL templates, but with built-in concurrency.

Threading Building Blocks (TBB)

TBB refers to Intel's oneAPI Threading Building Blocks (although it is actually called oneTBB). It is a library used for parallel programming, featuring the scheduling of threads and breakdown of computations into tasks that run concurrently.

Container Class Templates

All of the below containers are similar to the STL. There may be a few differences, but the functionality is mostly the same.

tbb::concurrent_vector

The tbb::concurrent_vector is just like std::vector in the STL. Unlike std::vector, it enables the vector to be safely grown concurrently without the threads interfering with each other.

Member functions:

- push_back - adds an element to the end

- grow_by - increases the size by the specified number of elements

- grow_to_at_least - same as grow_by, but increases the capacity to at least the specified size

- size - returns the number of elements, but may return values under concurrent construction

tbb::concurrent_queue

The tbb::concurrent_queue is just like std::queue in the STL, except with enhanced concurrency. There is a variant tbb::concurrent_bounded_queue which adds blocking operations to its methods.

Member functions:

- pop - removes an element

- push - adds an element

- try_push - adds an element only if it does not exceed the capacity

- size - returns the number of elements, but may return values under concurrent construction

tbb::concurrent_unordered_map

The tbb::concurrent_unordered_map is just like std::unordered_map in the STL, except with enhanced concurrency. There is a variant tbb::concurrent_unordered_multimap which stores a counter for each pair with a duplicate key.

Member functions:

- insert - inserts an element

- find - finds an element

- emplace - constructs and inserts an element

- size - returns the number of elements, but may return values under concurrent construction

Container Performance

The below programs use very similar code that were in the programs for STL container performance.

Concurrent Vector

Code:

// tbb_concurrent_vector.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <tbb/tbb.h>

using namespace std;

using namespace std::chrono;

// reports time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<microseconds>(span);

cout << msg << " - took - " << ms.count() << " microseconds" << endl;

}

int main()

{

tbb::concurrent_vector<int> v({ 0 });

int size = 10;

int grainsize = 10000000;

steady_clock::time_point ts, te;

tbb::blocked_range<int> range(0, size, grainsize);

auto setV = [&](tbb::blocked_range<int>& r) {

for (auto i = r.begin(); i != r.end(); i++) {

v.push_back(i);

}

};

ts = steady_clock::now();

tbb:parallel_for(range, setV);

te = steady_clock::now();

reportTime("TBB Concurrent Vector", te - ts);

std::cout << "Size of " << size << std::endl;

std::cout << "Grainsize of " << grainsize << std::endl;

std::cout << "Contains: " << std::endl;

for (auto i = v.begin(); i != v.end(); i++) {

std::cout << *i << " ";

}

}

Output:

TBB Concurrent Vector - took - 2304 microseconds Size of 10 Grainsize of 10000000 Contains: 0 0 1 2 3 4 5 6 7 8 9

Concurrent Queue

Code:

// tbb_concurrent_queue.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <tbb/tbb.h>

using namespace std;

using namespace std::chrono;

// reports time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<microseconds>(span);

cout << msg << " - took - " << ms.count() << " microseconds" << endl;

}

int main()

{

tbb::concurrent_queue<int> q;

int val, size = 10;

int grainsize = 10000000;

steady_clock::time_point ts, te;

tbb::blocked_range<int> range(0, size, grainsize);

auto setQ = [&](tbb::blocked_range<int>& r) {

for (int i = 0; i < size; i++) {

q.push(i);

}

};

ts = steady_clock::now();

tbb:parallel_for(range, setQ);

te = steady_clock::now();

reportTime("TBB Concurrent Queue", te - ts);

cout << "Size of " << size << endl;

cout << "Grainsize of " << grainsize << endl;

cout << "Contains: " << endl;

for (int i = 0; i < size; i++) {

q.try_pop(val);

std::cout << val << " ";

}

}

Output:

TBB Concurrent Queue - took - 1308 microseconds Size of 10 Grainsize of 10000000 Contains: 0 1 2 3 4 5 6 7 8 9

Concurrent Unordered Map

Code:

// tbb_concurrent_unordered_map.cpp

#include <iostream>

#include <chrono>

#include <string>

#include <tbb/tbb.h>

using namespace std;

using namespace std::chrono;

// reports time

void reportTime(const char* msg, steady_clock::duration span) {

auto ms = duration_cast<microseconds>(span);

cout << msg << " - took - " << ms.count() << " microseconds" << endl;

}

int main()

{

tbb::concurrent_unordered_map<std::string, int> u;

int size = 10;

int grainsize = 10000000;

steady_clock::time_point ts, te;

tbb::blocked_range<int> range(0, size, grainsize);

auto setU = [&](tbb::blocked_range<int>& r) {

for (auto i = r.begin(); i != r.end(); i++) {

u.emplace(to_string(i), i);

}

};

ts = steady_clock::now();

tbb::parallel_for(range, setU);

te = steady_clock::now();

reportTime("STL Hash Map (TBB Parallelized)", te - ts);

cout << "Size of " << size << endl;

cout << "Grainsize of " << grainsize << endl;

cout << "Contains: " << endl;

for (auto i : u) {

cout << "Key: " << i.first << " | Value: " << i.second << endl;

}

}

Output:

STL Hash Map (TBB Parallelized) - took - 3235 microseconds Size of 10 Grainsize of 10000000 Contains: Key: 5 | Value: 5 Key: 9 | Value: 9 Key: 1 | Value: 1 Key: 3 | Value: 3 Key: 7 | Value: 7 Key: 6 | Value: 6 Key: 2 | Value: 2 Key: 4 | Value: 4 Key: 8 | Value: 8 Key: 0 | Value: 0

Concurrency

The containers of TBB feature fine-grained locking (locking small sections or elements rather than entire sections) and lock-free techniques (improves thread safety without the use of locks) when parallelizing code, allowing for task-based programming. However, they have a drawback of a large amount of parallel overhead, potentially outweighing the speed gained over serial containers on a small scale. On top of that, despite not using mutexes, two-thirds of the containers still block in some way, causing increased wait time between threads.

STL and TBB Comparison

Advantages and Disadvantages

Pros of STL:

- Faster serial execution

Cons of STL:

- User needs to ensure that threads don't access the same elements

Pros of TBB

- Built-in locks and waiting

- Built-in correction

Cons of STL:

- Larger parallel overhead

Graph Analysis

Vector

It appears that tbb::concurrent_vector is significantly slower than the std::vector as the number of elements increase. This could be because the amount of parallel overhead scales with the number of elements; the more elements there are, the higher the parallel overhead.

Queue

They have almost the same execution time (although they look identical). The reason for this is unknown to us.

Unordered Map

They have almost the same execution time (although they look identical). The reason for this is unknown to us.