Difference between revisions of "GPU621/CUDA"


{{GPU621/DPS921 Index | 20207}}

[[File:Nvidia_CUDA_Logo.jpg|right]]

= Project Name =

CUDA

== Project Description ==

This project will thoroughly introduce NVIDIA’s CUDA, starting with an overview of the history and current state of CPUs and GPUs. This includes an analysis of the architectural differences between these computing units, and between different manufacturers of the same kind of unit. It will also analyze how software programming models, frameworks, and toolkits maximize the potential of these architectures. Next, CUDA application domains will be analyzed, and use cases for parallel computing with GPUs will be both analyzed and implemented. Tests will also compare the performance of CUDA against OpenCL and OpenMP.

= What is CUDA? =

For research in this assignment, a CUDA-enabled GPU will be used to implement use cases and to test the performance of CUDA against other parallel programming platforms.

Just as MPI can be deployed to manage a distributed-memory system containing thousands of Central Processing Units, CUDA can be deployed across cloud installations containing thousands of Graphics Processing Units. CUDA accelerates graphics processing for scientific applications, including the visualization of galaxies in astronomy and molecular visualization in biology and medicine. CUDA-enabled devices range widely, from embedded systems to cloud and data-center hardware.

[https://developer.nvidia.com/cuda-toolkit CUDA Toolkit]

= History & Current State of Central & Graphics Processing Units =

== History of GPUs ==

“The graphics processing unit (GPU), first invented by NVIDIA in 1999, is the most pervasive parallel processor to date.” [https://www.sciencedirect.com/topics/engineering/graphics-processing-unit Source]

GeForce 256 was marketed as the world’s first GPU. Designed on a single chip, this product was a champion in its time, delivering “480 million 8-sample fully filtered pixels per second.” [https://www.anandtech.com/show/429/3 Source] It featured hardware Transform & Lighting and Cube Environment Mapping, which were graphics rendering techniques of the day. It supported Direct3D 7.0, which runs on Windows, and OpenGL 1.2, which runs on both Windows and Linux.

== Current State of GPUs ==

Today, graphics processing units (GPUs) exist in many different forms:

• Dedicated or discrete graphics cards are the most powerful form and usually connect to the system through a PCIe slot. Although these units have their own dedicated memory as well as access to memory shared with the CPU, they cannot replace the CPU, and pairing one with a CPU does not create a distributed-memory system, which is a system of multiple CPUs each with its own memory. Dedicated graphics cards are found in PCs that require extensive graphics rendering power, such as workstations for scientific visualization and gaming PCs.

• Integrated graphics processing units (iGPUs) share RAM with the CPU and sit on the same chip as the CPU or near it, depending on the architecture. Integrated GPUs usually ship with notebook-style laptops to handle everyday graphics rendering tasks. Apple’s recently released M1 chip, whose integrated graphics deliver about 2.6 trillion floating-point operations per second, was announced by Apple as having the world’s fastest integrated graphics in a personal computer.

• Hybrid graphics processing units earned their name by sitting between dedicated and integrated GPUs in price and performance. An example is NVIDIA’s TurboCache, which shares main system memory.

• General Purpose Graphics Processing Units (GPGPUs) work like greatly scaled-up versions of the vector processing units found in CPUs, running compute kernels designed for high throughput. This design lets them be programmed through APIs such as OpenMP (via its target offloading directives). With the release of CUDA on June 23, 2007, NVIDIA’s G8x series and later GPUs (all of its GPUs released from November 8, 2006 onward) became GPGPU capable.

• External GPUs (eGPUs) sit outside the computer’s case, in their own enclosure with their own power supply. They connect to the computer’s PCIe bus, which can be reached through a Thunderbolt 3 or 4 port. So, if you have a compatible computer and need more graphics processing power, an eGPU can be a cost-saving solution.

== History and Current State of CUDA ==

[[File:Arch_NVIDIA.jpg|right]]

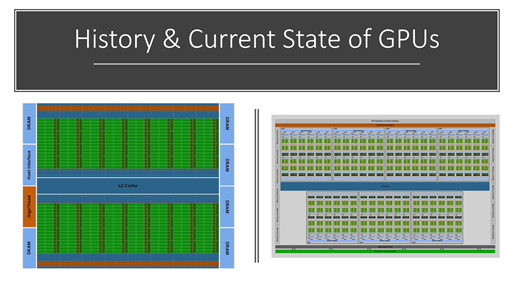

NVIDIA’s first CUDA-capable GPUs, the G8x series, used the Tesla architecture; its successor, Fermi, was presented by NVIDIA as its first complete GPU computing architecture.

“NVIDIA’s Fermi GPU architecture consists of multiple streaming multiprocessors (SMs), each consisting of 32 cores, each of which can execute one floating-point or integer instruction per clock. The SMs are supported by a second-level cache, host interface, GigaThread scheduler, and multiple DRAM interfaces.”

[https://www.nvidia.com/content/PDF/fermi_white_papers/P.Glaskowsky_NVIDIA's_Fermi-The_First_Complete_GPU_Architecture.pdf NVIDIA Fermi]
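The quoted description implies a simple way to estimate a Fermi GPU's peak issue rate: multiply the number of SMs by 32 cores. The Python sketch below does only that arithmetic. The 16-SM count corresponds to the full GF100 Fermi chip, and the 1.4 GHz clock is a hypothetical round figure chosen for illustration, not a specific product's specification.

```python
# Peak issue rate for a Fermi-style GPU, following the white-paper description:
# every SM has 32 cores and each core can execute one floating-point or
# integer instruction per clock.
CORES_PER_SM = 32


def peak_instructions_per_clock(num_sms: int) -> int:
    """Instructions the whole GPU can execute per clock if every core is busy."""
    return num_sms * CORES_PER_SM


def peak_instructions_per_second(num_sms: int, clock_hz: float) -> float:
    """Upper bound only: real kernels rarely keep every core busy every cycle."""
    return peak_instructions_per_clock(num_sms) * clock_hz


full_gf100_sms = 16          # full Fermi GF100 chip (512 cores)
hypothetical_clock = 1.4e9   # illustrative clock only

print(peak_instructions_per_clock(full_gf100_sms))  # 512
print(peak_instructions_per_second(full_gf100_sms, hypothetical_clock))
```

The result is a theoretical ceiling; achieved throughput depends on how well a kernel keeps the SMs occupied.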

A CUDA core is only loosely comparable to a CPU core: each CUDA core behaves more like a single arithmetic lane, while the streaming multiprocessor that schedules and issues instructions to its cores is the closer analogue of a CPU core.

NVIDIA’s latest CUDA architecture is Ampere; NVIDIA describes its GA102 GPU as follows:

“Each SM in GA10x GPUs contain 128 CUDA Cores, four third-generation Tensor Cores, a 256 KB Register File, four Texture Units, one second-generation Ray Tracing Core, and 128 KB of L1/Shared Memory, which can be configured for differing capacities depending on the needs of the compute or graphics workloads. The memory subsystem of GA102 consists of twelve 32-bit memory controllers (384-bit total). 512 KB of L2 cache is paired with each 32-bit memory controller, for a total of 6144 KB on the full GA102 GPU.”

[https://www.nvidia.com/content/PDF/nvidia-ampere-ga-102-gpu-architecture-whitepaper-v2.pdf NVIDIA Ampere GA102]
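The totals at the end of the GA102 quote follow directly from the per-controller figures, and the per-SM core count gives the usual peak FP32 estimate. The sketch below assumes the common convention of counting one fused multiply-add (FMA) as two floating-point operations per CUDA core per clock. The 82-SM, 1.695 GHz configuration is the GeForce RTX 3090, a GA102 product with some SMs disabled, used here only as an example.

```python
# Figures taken from the GA102 white-paper quote.
MEMORY_CONTROLLERS = 12
CONTROLLER_WIDTH_BITS = 32
L2_PER_CONTROLLER_KB = 512
CUDA_CORES_PER_SM = 128

bus_width_bits = MEMORY_CONTROLLERS * CONTROLLER_WIDTH_BITS  # 384-bit bus
total_l2_kb = MEMORY_CONTROLLERS * L2_PER_CONTROLLER_KB      # 6144 KB of L2


def peak_fp32_tflops(num_sms: int, boost_clock_ghz: float) -> float:
    """Peak FP32 throughput, assuming one FMA (2 FLOPs) per CUDA core per clock."""
    cuda_cores = num_sms * CUDA_CORES_PER_SM
    gflops = cuda_cores * boost_clock_ghz * 2
    return gflops / 1000


# Example configuration: GeForce RTX 3090 (82 of GA102's SMs enabled).
print(f"{bus_width_bits}-bit bus, {total_l2_kb} KB L2")
print(f"RTX 3090 peak FP32: {peak_fp32_tflops(82, 1.695):.1f} TFLOPS")
```

Peak figures like this are what marketing "teraflops" numbers usually report; sustained performance in real workloads is lower.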

As the processing power built into GPUs grew beyond what was needed to render pixels on the screen, a way was needed to use the otherwise idle computing power of Graphics Processing Units. General Purpose Graphics Processing Units can perform both general-purpose computations and graphics processing computations. With the release of CUDA, all CUDA-enabled GPUs can perform general-purpose processing, with double-precision floating point supported since compute capability 1.3, within the first major compute capability version.

== CPUs vs. GPUs ==

CPUs have faster clock speeds and can execute a wide array of general-purpose instructions used to control computers; when GPUs were first created, they could not do this. GPUs focus on having as many cores as possible with a limited instruction set, providing sheer computing power in specialized scenarios. This much higher core count lets modern GPUs achieve more floating-point operations per second than modern CPUs. It is highly debated whether CPUs will replace GPUs or the other way around; I believe neither will happen, because these units play different roles in any given system. A CPU is the physical and logical component that every operating system uses to run programs and manage hardware. If a computer has a screen, it needs a GPU, and the depth and number of layers of graphics rendering required determine which GPU should be used.

== NVIDIA vs. AMD ==

The main architectural differences between AMD and NVIDIA GPUs lie in their execution units, cache hierarchies, and graphics pipelines. A graphics pipeline is the conceptual model a GPU uses to render 3D scenes onto a 2D screen. Conceptually and theoretically these devices are quite different, but their practical uses and the block diagrams of modern GPUs from both companies are quite similar.

NVIDIA and AMD have different product lineups, audiences, and business visions. AMD offers CPUs and GPUs, while NVIDIA focuses on GPUs. Although AMD was founded more than twenty years before NVIDIA (1969 versus 1993), its market capitalization currently sits at 28% of NVIDIA’s. Intel’s market cap is currently 43% of NVIDIA’s, making NVIDIA the largest of the three big chip makers.

Traditionally, in the world of PC building, NVIDIA and Intel release the more expensive but highest-performing hardware, while AMD provides the best price-to-performance value.

[https://www.hardwaretimes.com/amd-navi-vs-nvidia-turing-comparing-the-radeon-and-geforce-graphics-architectures/ Source]

[https://ycharts.com/companies/NVDA/r_and_d_expense Source]

[https://www.statista.com/statistics/267873/amds-expenditure-on-research-and-development-since-2001/ Source]

= Software Development Strategies =

oneAPI, branded by Intel as “A Unified X-Architecture Programming Model,” has CPU applications covered in this course, but it is designed for diverse architectures and can also simplify programming across GPUs. The Intel oneAPI DPC++ (Data Parallel C++) compiler includes CUDA backend support, which was initially released almost two years ago. Considering that Intel oneAPI was officially released in December 2020, oneAPI seems to live up to its brand name.

[https://phoronix.com/scan.php?page=news_item&px=Intel-oneAPI-DPCpp-CUDA-Release Source]

[https://codematters.online/intel-oneapi-faq-part-1-what-is-oneapi/ Source]

== Software Programming Frameworks ==

Software programming frameworks are structures in which libraries reside; APIs give developers access to the code in those libraries.

OpenMP API

oneAPI Thread Building Blocks

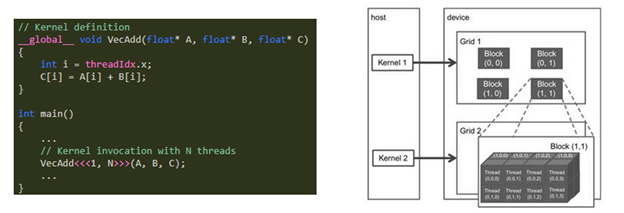

CUDA API – an extension of the C and C++ programming languages that enables thread-level parallelism. It is a high-level abstraction that lets developers make the most of CUDA-enabled devices with relatively little effort. Through the CUDA API, functions that run in parallel are declared as kernels. A kernel is executed in parallel by many CUDA threads. CUDA threads are grouped into blocks, and blocks are organized into grids.
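To make the thread, block, and grid hierarchy concrete, the sketch below emulates a kernel launch sequentially on the CPU in Python. It is not CUDA code: the nested loops stand in for threads the GPU would run in parallel, and `launch` and `vec_add` are hypothetical helpers modelled loosely on the vector-addition example in NVIDIA's CUDA C++ Programming Guide. Each emulated thread computes the same global index a CUDA thread would, `blockIdx.x * blockDim.x + threadIdx.x`.

```python
# CPU-side emulation of a CUDA kernel launch (illustrative, not real CUDA).
# In CUDA C++ each thread computes its global index as
#     int i = blockIdx.x * blockDim.x + threadIdx.x;
# Here the nested loops stand in for threads the GPU would run in parallel.


def launch(kernel, grid_dim, block_dim, *args):
    """Run `kernel` once for every (block, thread) pair, one after another."""
    for block_idx in range(grid_dim):        # blocks in the grid
        for thread_idx in range(block_dim):  # threads in each block
            kernel(block_idx, block_dim, thread_idx, *args)


def vec_add(block_idx, block_dim, thread_idx, a, b, c):
    """Hypothetical kernel body: each thread adds one pair of elements."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(c):                          # the grid may have more threads than elements
        c[i] = a[i] + b[i]


a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0]
c = [0.0] * len(a)
launch(vec_add, 2, 4, a, b, c)  # 2 blocks x 4 threads = 8 threads for 5 elements
print(c)  # [11.0, 22.0, 33.0, 44.0, 55.0]
```

The bounds check matters because grids are sized in whole blocks, so the last block often contains threads with no element to process.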

[[File:CUDA_code.png]]

The CUDA execution configuration syntax <<<…>>> in a kernel invocation specifies the number of blocks (1) and the number of threads per block (N).

[https://www.kdnuggets.com/2016/11/parallelism-machine-learning-gpu-cuda-threading.html Source]

[https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html Source]
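A single-block launch of the form <<<1, N>>> only works while N fits in one block; on NVIDIA GPUs of compute capability 2.0 and later, a block holds at most 1024 threads. For larger inputs, a common pattern is to fix the threads per block and round the block count up. The Python helper below is a hypothetical sketch of that calculation; 256 threads per block is an illustrative default, not a requirement. The result would be passed to a kernel as <<<blocks, threads>>>, with the kernel guarding against indices past the end of the data.

```python
MAX_THREADS_PER_BLOCK = 1024  # per-block limit, compute capability 2.0 and later


def launch_config(n, threads_per_block=256):
    """Return (blocks, threads_per_block) with blocks * threads_per_block >= n."""
    if not 0 < threads_per_block <= MAX_THREADS_PER_BLOCK:
        raise ValueError("threads_per_block must be between 1 and 1024")
    blocks = (n + threads_per_block - 1) // threads_per_block  # ceiling division
    return blocks, threads_per_block


print(launch_config(1000))        # (4, 256): 1024 threads, the last 24 do no work
print(launch_config(1 << 20))     # (4096, 256)
print(launch_config(1024, 1024))  # (1, 1024): the <<<1, N>>> form still fits
```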

Common libraries contained in the CUDA framework, accessible through the CUDA API:

cuBLAS - “the CUDA Basic Linear Algebra Subroutine library.”

cuFFT - “the CUDA Fast Fourier Transform library.”
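As a point of reference for what these libraries compute, the Python sketch below gives plain CPU definitions of two representative operations: AXPY (y ← a·x + y), a level-1 BLAS routine whose single-precision form cuBLAS provides as `cublasSaxpy`, and the forward discrete Fourier transform that an FFT library such as cuFFT evaluates. These are naive reference versions for checking results, not the libraries' APIs, and cuFFT uses fast O(n log n) algorithms rather than this O(n²) loop.

```python
import cmath


def axpy(a, x, y):
    """Return a*x + y element-wise (BLAS AXPY; Python floats are double precision)."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def dft(x):
    """Naive O(n^2) forward discrete Fourier transform.

    X[k] = sum_t x[t] * exp(-2*pi*i*k*t/n); an FFT library computes the
    same values in O(n log n) time.
    """
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


print(axpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))  # [12.0, 24.0, 36.0]
spectrum = dft([1.0, 1.0, 1.0, 1.0])
print([round(abs(v), 6) for v in spectrum])  # [4.0, 0.0, 0.0, 0.0]
```

On a GPU, each output element of AXPY is independent, which is exactly the kind of work that maps one element per CUDA thread.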

== Software Programming Toolkits ==

Where frameworks are the structures holding the libraries whose code we call, toolkits are software programs written to help other developers build programs and maintain code.

oneAPI HPC Toolkit

CUDA Toolkit – available for download from NVIDIA’s website. It includes IDE extensions that provide features such as syntax highlighting and easy creation of CUDA projects, similar to the tooling available for OpenMP, TBB & MPI. The CUDA Toolkit also includes the CUDA compiler, the CUDA framework, the Nsight profiler for Visual Studio for monitoring application performance, and much more.

= CUDA Application Domains =

[[File:CUDA_App_Domains.jpg|right]]

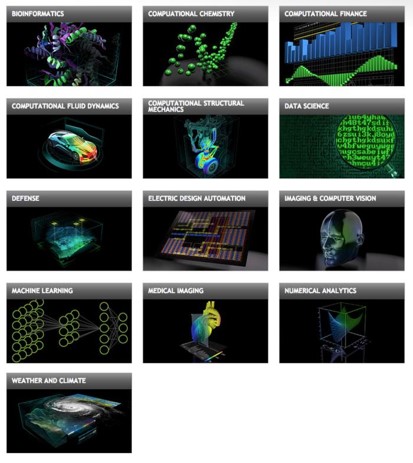

“CUDA and Nvidia GPUs have been adopted in many areas that need high floating-point computing performance, as summarized pictorially in the image above. A more comprehensive list includes:

1. Computational finance

2. Climate, weather, and ocean modeling

3. Data science and analytics

4. Deep learning and machine learning

5. Defense and intelligence

6. Manufacturing/AEC (Architecture, Engineering, and Construction): CAD and CAE (including computational fluid dynamics, computational structural mechanics, design and visualization, and electronic design automation)

7. Media and entertainment (including animation, modeling, and rendering; color correction and grain management; compositing; finishing and effects; editing; encoding and digital distribution; on-air graphics; on-set, review, and stereo tools; and weather graphics)

8. Medical imaging

9. Oil and gas

10. Research: Higher education and supercomputing (including computational chemistry and biology, numerical analytics, physics, and scientific visualization)

11. Safety and security

12. Tools and management”

[https://www.infoworld.com/article/3299703/what-is-cuda-parallel-programming-for-gpus.html Source]

= Use Cases for Parallel Computing with GPUs =

== Video Processing ==

My favourite use case in this category: video games! Originally, the exclusive use case for the Graphics Processing Unit was… graphics processing. Otherwise known as pushing pixels to the screen, this is the behaviour gaming graphics cards have been optimized for since their conception. Interestingly, one of the most important calculations in graphics is matrix multiplication. Matrices are the generic operators used to perform graphic transformations such as translation, rotation, scaling, reflection, and shearing. Of course, video rendering techniques have evolved greatly over the last couple of decades, from 2D to 3D rendering methods, with additional layers of graphical computation producing continuously increasing realism.
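The sketch below illustrates why matrix multiplication sits at the heart of these transformations. It assumes 2D points in homogeneous coordinates (x, y, 1), so that all five transformations, including translation, become 3×3 matrices that can be combined by multiplying them together; 3D graphics uses the same idea with 4×4 matrices. The helper names are hypothetical, and a GPU would apply the composed matrix to many vertices in parallel rather than to one point at a time.

```python
import math


def matmul(m, n):
    """Multiply two 3x3 matrices stored as lists of rows."""
    return [[sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(m, point):
    """Transform a 2D point using homogeneous coordinates (x, y, 1)."""
    v = (point[0], point[1], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(2))


def translate(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def scale(sx, sy):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]


def rotate(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def reflect_x():
    """Mirror across the x-axis."""
    return [[1, 0, 0], [0, -1, 0], [0, 0, 1]]


def shear(kx, ky):
    return [[1, kx, 0], [ky, 1, 0], [0, 0, 1]]


# Compose right to left: scale by 2, rotate 90 degrees, then translate by (5, 0).
m = matmul(translate(5, 0), matmul(rotate(math.pi / 2), scale(2, 2)))
print(apply(m, (1, 0)))            # approximately (5.0, 2.0)
print(apply(reflect_x(), (3, 4)))  # (3.0, -4.0)
print(apply(shear(1, 0), (1, 1)))  # (2.0, 1.0)
```

Composing the matrices once and then applying a single matrix per vertex is what makes this workload so well suited to massively parallel hardware.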

A typical screen is 1920 by 1080 pixels with a refresh rate of 60 frames per second, so updating every pixel takes 1920 × 1080 × 60 = 124,416,000 pixel updates per second, and the total number of operations per second is that figure multiplied by the work done per pixel. From a computational standpoint, 124,416,000 is not a very big number, especially considering that a 2 gigahertz CPU can perform roughly two billion operations per second (one per clock cycle), which raises the question: why GPUs? The answer is that in intense graphics rendering scenarios, the number of calculations per pixel pushes the total far beyond two billion operations per second. These are, however, simple operations such as matrix multiplication. This clarifies the role of the GPU: to perform massive amounts of simple operations in parallel. Because a GPU completes so many calculations per clock cycle, it does not need clock speeds as high as a CPU’s.

[https://superuser.com/questions/461022/how-does-the-cpu-and-gpu-interact-in-displaying-computer-graphics Source]

[https://stackoverflow.com/questions/40337748/graphics-matrix-by-matrix-multiplication-necessary-for-transformations Source]

[https://www.includehelp.com/computer-graphics/types-of-transformations.aspx Source]
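The pixel-count arithmetic above can be checked with a few lines of Python. The sketch keeps the paragraph's simplification of one simple operation per clock cycle on a single 2 GHz core, ignoring SIMD and other CPU parallelism; the 20 operations per pixel is a hypothetical workload chosen for illustration.

```python
WIDTH, HEIGHT, FPS = 1920, 1080, 60
CPU_CLOCK_HZ = 2_000_000_000  # 2 GHz, one simple operation per cycle (simplification)

pixel_updates_per_second = WIDTH * HEIGHT * FPS  # 124,416,000


def ops_per_second(ops_per_pixel: int) -> int:
    """Total operations per second needed to touch every pixel in every frame."""
    return pixel_updates_per_second * ops_per_pixel


# How many operations per pixel a single 2 GHz core could afford each frame.
ops_budget_per_pixel = CPU_CLOCK_HZ / pixel_updates_per_second

print(f"{pixel_updates_per_second:,} pixel updates per second")       # 124,416,000
print(f"~{ops_budget_per_pixel:.1f} operations per pixel per core")   # ~16.1
print(f"{ops_per_second(20):,} operations per second at 20 ops/pixel")  # 2,488,320,000
```

Under these assumptions, anything beyond about 16 simple operations per pixel already exceeds what one 2 GHz core can deliver, before counting any other work the CPU must do.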

Modern GPUs have dedicated physical units for tasks such as 3D rendering, copying, and video encoding and decoding. As you may expect, 3D rendering converts 3D models into 2D images on a computer, video encoding is applied to outbound data, and video decoding is applied to inbound data. For small tasks such as video calls and watching YouTube videos, integrated graphics suffice, which is why they usually ship with notebook-style laptops. For larger tasks such as 4K video editing or 4K gaming, dedicated hardware is required.

=== Real Time ===

3D rendering often occurs in real time, especially in video games and in simulations such as those used in software development for robotic applications.

=== Non-Real Time or Pre-Rendering ===

Any time we watch or upload a video on a computer, whether it is stored on the device or streamed over a network, the video data must be encoded before it is stored or transmitted and decoded before it can be displayed. This is where pre-rendering occurs.

== Machine Learning ==

Machine learning has gained extreme popularity in recent years due to a combination of factors including big data, computational advances, and cloud business models. Every industry can benefit from machine learning and artificial intelligence technologies, whether through data analysis that improves operational quality or embedded robotic systems that automate physical tasks.

[https://www.zdnet.com/article/three-reasons-why-ai-is-taking-off-right-now-and-what-you-need-to-do-about-it/ Source]

[https://www.techcenturion.com/tensor-cores/ Source]

== Science & Research == | == Science & Research == | ||

| Line 127: | Line 179: | ||

= Use Case Implementation = | = Use Case Implementation = | ||

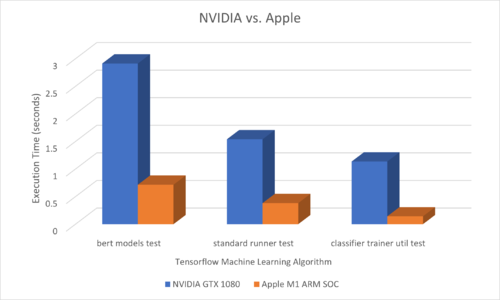

| − | Machine Learning (TensorFlow) | + | == Machine Learning (TensorFlow) == |

| − | “NVIDIA's GTX 1080 does around 8.9 teraflops” | + | |

| − | https://www.ign.com/articles/2019/03/19/what-are-teraflops-and-what-do-they-tell-us-about-googles-stadia-performance | + | The following models have been tested on a CUDA-enabled GPU, versus an Apple Neural Engine from an M1. The Python TensorFlow implementation abstracts away from hardware and allows the same code to be ran on various devices such as these. The code for these models reside in the [https://github.com/tensorflow/models/ TensorFlow Model Garden]. |

| − | Apple M1 does around 2.6 teraflops | + | |

| − | https://www.tomshardware.com/news/Apple-M1-Chip-Everything-We-Know | + | TensorFlow Machine Learning Algorithms Tested: |

| + | |||

| + | [https://github.com/tensorflow/models/tree/master/official/nlp/bert BERT (Bidirectional Encoder Representations from Transformers) Models Test] | ||

| + | |||

| + | [https://github.com/tensorflow/models/tree/master/orbit Orbit Standard Runner Test] | ||

| + | |||

| + | [https://github.com/tensorflow/models/tree/238922e98dd0e8254b5c0921b241a1f5a151782f/official/vision/image_classification Image Classifier Trainer Util Test] | ||

| + | |||

| + | [[File:NVIDIA-Apple.png|500px]] | ||

| + | |||

| + | “NVIDIA's GTX 1080 does around 8.9 teraflops” [https://www.ign.com/articles/2019/03/19/what-are-teraflops-and-what-do-they-tell-us-about-googles-stadia-performance Source] | ||

| + | |||

| + | Apple M1 does around 2.6 teraflops [https://www.tomshardware.com/news/Apple-M1-Chip-Everything-We-Know Source] | ||

| + | |||

8.9 / 2.6 = 3.4230769230769230769230769230769 | 8.9 / 2.6 = 3.4230769230769230769230769230769 | ||

| + | |||

1.136666667 / 0.142666667 = 7.9672897033474539641414627006041 | 1.136666667 / 0.142666667 = 7.9672897033474539641414627006041 | ||

| + | |||

Although the GTX 1080 does around 9 teraflops which is about three and a half times faster than the Apple M1, the M1 can consistently perform about 8 times faster for TensorFlow machine learning algorithms. This is most likely since the GTX 1080 is a Graphics Processing Unit meant for gaming, and the Apple M1 chip has a dedicated 16-core Neural Engine component for Machine Learning. The test results prove that this dedicated Neural component greatly accelerates Machine Learning as Apple claims. Unfortunately, an NVIDIA Volta or Turing GPU was not available for testing in this scenario. Volta and Turing are NVIDIAs machine learning architectures, and they offer a wide range of devices for Machine Learning uses from embedded devices to datacenter and cloud. The architecture of these devices includes tensor cores that are dedicated for machine learning and greatly increase efficiency. | Although the GTX 1080 does around 9 teraflops which is about three and a half times faster than the Apple M1, the M1 can consistently perform about 8 times faster for TensorFlow machine learning algorithms. This is most likely since the GTX 1080 is a Graphics Processing Unit meant for gaming, and the Apple M1 chip has a dedicated 16-core Neural Engine component for Machine Learning. The test results prove that this dedicated Neural component greatly accelerates Machine Learning as Apple claims. Unfortunately, an NVIDIA Volta or Turing GPU was not available for testing in this scenario. Volta and Turing are NVIDIAs machine learning architectures, and they offer a wide range of devices for Machine Learning uses from embedded devices to datacenter and cloud. The architecture of these devices includes tensor cores that are dedicated for machine learning and greatly increase efficiency. | ||

| − | https://developer.nvidia.com/deep-learning | + | |

| − | https://www.howtogeek.com/701804/how-unified-memory-speeds-up-apples-m1-arm-macs/ | + | [https://developer.nvidia.com/deep-learning Source] |

| + | [https://www.howtogeek.com/701804/how-unified-memory-speeds-up-apples-m1-arm-macs/ Source] | ||

= CUDA Performance Testing = | = CUDA Performance Testing = | ||

| − | Matrix Multiplication – CUDA vs. OpenMP | + | |

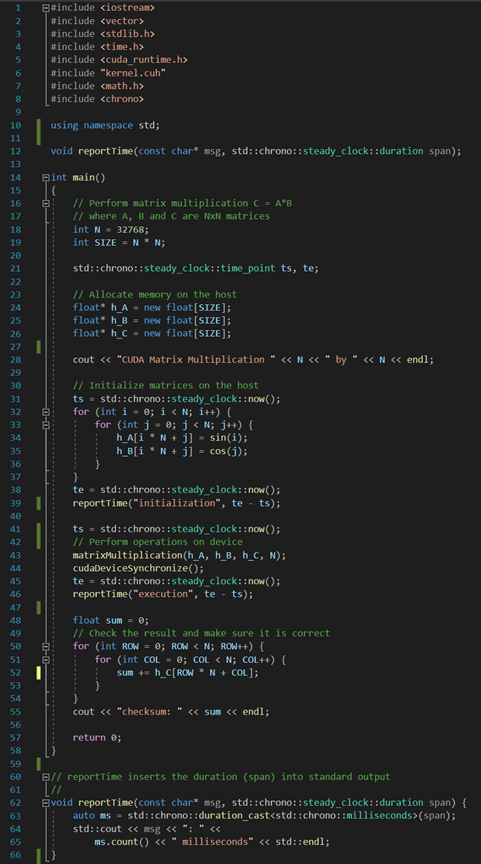

| + | == CUDA Code == | ||

| + | |||

| + | The following CUDA Matrix Multiplication Code was used for all CUDA Matrix Multiplication tests: | ||

| + | |||

| + | === CUDA MATRIX MULTIPLICATION HOST CODE === | ||

| + | [[File:CUDA_Host_Code.png]] | ||

| + | |||

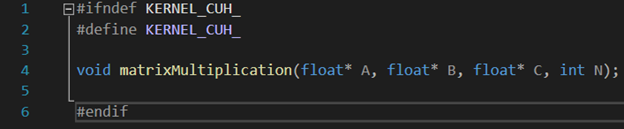

| + | === CUDA MATRIX MULTIPLICATION DEVICE HEADER === | ||

| + | [[File:CUDA_Device_Header_Code.png]] | ||

| + | |||

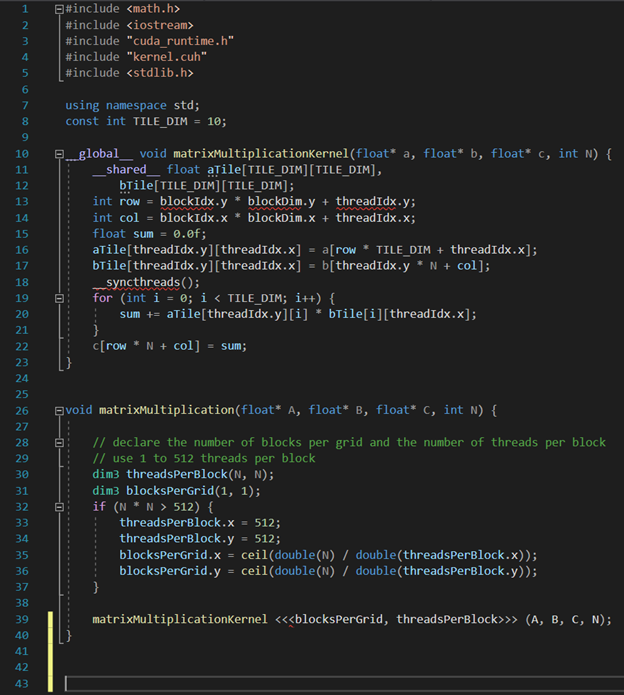

| + | === CUDA MATRIX MULTIPLICATION DEVICE CODE === | ||

| + | [[File:CUDA_Device_Code.png]] | ||

| + | |||

| + | |||

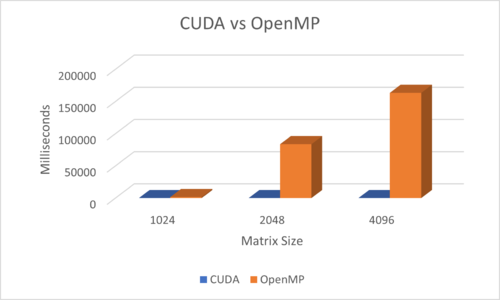

| + | == OpenCL Code == | ||

| + | |||

| + | The following OpenCL Matrix Multiplication Code was used for all Matrix Multiplication tests on an OpenCL compatible GPU (same GPU for CUDA tests): | ||

| + | |||

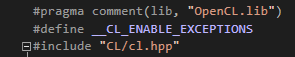

| + | === OpenCL COMPILER DIRECTIVES === | ||

| + | [[File:OpenCL_Complier_Directives.png]] | ||

| + | |||

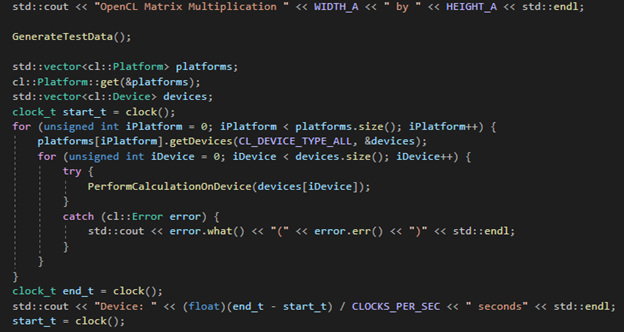

| + | === OpenCL HOST CODE === | ||

| + | [[File:OpenCL_Host_Code.png]] | ||

| + | |||

| + | === OpenCL DEVICE CODE === | ||

| + | [[File:OpenCL_Device_Code.png]] | ||

| + | |||

| + | |||

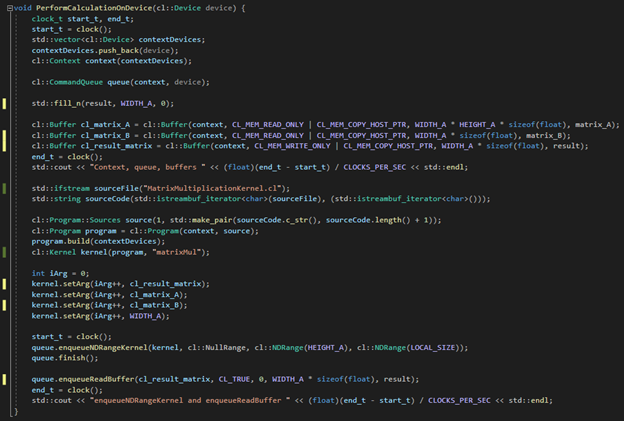

| + | == Matrix Multiplication – CUDA vs. OpenMP == | ||

| + | |||

| + | The OpenMP Matrix Multiplication solution from Workshop 3 was used for testing in this scenario. | ||

| + | |||

| + | [[File:CUDA-OpenMP.png|500px]] | ||

| + | |||

For this test, the CUDA code was ran on a CUDA enabled GPU and OpenMP code was ran on the CPU, a truer test of CUDA vs. OpenMP would be to have the OpenMP code run on the GPU. | For this test, the CUDA code was ran on a CUDA enabled GPU and OpenMP code was ran on the CPU, a truer test of CUDA vs. OpenMP would be to have the OpenMP code run on the GPU. | ||

After learning that matrix multiplication operations are optimized for GPUs and of course CUDA is optimized for NVIDIA GPUs, this seems like an unfair test, with CUDA crushing OpenMP. | After learning that matrix multiplication operations are optimized for GPUs and of course CUDA is optimized for NVIDIA GPUs, this seems like an unfair test, with CUDA crushing OpenMP. | ||

| − | Matrix Multiplication - CUDA vs OpenCL | + | |

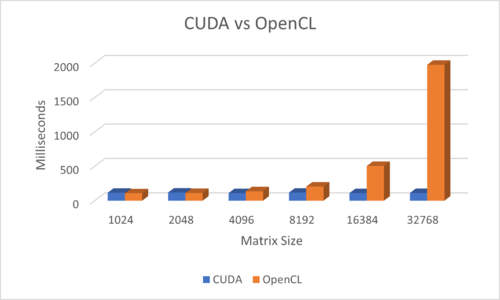

| + | == Matrix Multiplication - CUDA vs OpenCL == | ||

| + | |||

| + | [[File:CUDA-OpenCL.png|500px]] | ||

| + | |||

As covered earlier, CUDA is proprietary to NVIDIA and only works on CUDA enabled NVIDIA GPUs. This is not the case for OpenCL, which is open source and runs on a wide variety of GPUs and CPUs. This should provide the expected result that CUDA will outperform OpenCL on any given CUDA enabled device. | As covered earlier, CUDA is proprietary to NVIDIA and only works on CUDA enabled NVIDIA GPUs. This is not the case for OpenCL, which is open source and runs on a wide variety of GPUs and CPUs. This should provide the expected result that CUDA will outperform OpenCL on any given CUDA enabled device. | ||

Interestingly, with a small array size, OpenCL seems to outperform CUDA, this is presumably due to parallel overhead. | Interestingly, with a small array size, OpenCL seems to outperform CUDA, this is presumably due to parallel overhead. | ||

| Line 149: | Line 255: | ||

= CUDA Limitations = | = CUDA Limitations = | ||

Main limitations of CUDA: No recursive functions. Minimum unit block of 32 threads. | Main limitations of CUDA: No recursive functions. Minimum unit block of 32 threads. | ||

| − | http://ixbtlabs.com/articles3/video/cuda-1-p3.html#:~:text=Main%20limitations%20of%20CUDA%3A,unit%20block%20of%2032%20threads | + | |

| + | [http://ixbtlabs.com/articles3/video/cuda-1-p3.html#:~:text=Main%20limitations%20of%20CUDA%3A,unit%20block%20of%2032%20threads Source] | ||

| + | |||

Due to its proprietary nature, a limitation of CUDA is the number of devices supported and systems it can extend. This proprietary nature allows NVIDIA to completely maximize performance, resulting in CUDA outperforming OpenCL, at least on any given CUDA enabled device. | Due to its proprietary nature, a limitation of CUDA is the number of devices supported and systems it can extend. This proprietary nature allows NVIDIA to completely maximize performance, resulting in CUDA outperforming OpenCL, at least on any given CUDA enabled device. | ||

| + | |||

| + | = SLIDESHOW = | ||

| + | [[File:CUDA_pt_1.gif]] | ||

| + | [[File:CUDA_pt_2.gif]] | ||

| + | [[File:CUDA_pt_3.gif]] | ||

| + | |||

| + | [[Media:CUDA.pdf]] | ||

= SOURCES = | = SOURCES = | ||

Latest revision as of 15:57, 11 August 2021


Contents

- 1 Project Name

- 2 What is CUDA?

- 3 History & Current State of Central & Graphics Processing Units

- 4 Architectural Differences

- 5 Software Development Strategies

- 6 CUDA Application Domains

- 7 Use Cases for Parallel Computing with GPUs

- 8 Use Case Implementation

- 9 CUDA Performance Testing

- 10 CUDA Limitations

- 11 SLIDESHOW

- 12 SOURCES

Project Name

CUDA

Group Members

Project Description

This project will thoroughly introduce NVIDIA’s CUDA. It starts with an overview of the history and current state of CPUs and GPUs, including an analysis of the architectural differences between these computing units and between different manufacturers of the same computing units. It also analyzes how software programming models, frameworks, and toolkits maximize the potential of these architectures. Next, CUDA application domains and use cases for parallel computing with GPUs will be analyzed, and selected use cases implemented. Finally, the performance of CUDA will be tested against OpenCL and OpenMP.

What is CUDA?

NVIDIA released the Compute Unified Device Architecture (CUDA) in 2007. CUDA exposes compatible NVIDIA GPUs for general-purpose computing, so CUDA-enabled graphics processing units are capable of general-purpose processing. CUDA has evolved into an extensive platform comprising NVIDIA's proprietary computing platform and programming model for parallel computation, specialized hardware, software APIs and frameworks, compilers, and extensive documentation in NVIDIA's Developer Zone. Developers can download and install the CUDA Toolkit and learn how to use the CUDA APIs and development tools from the in-depth documentation on NVIDIA's website.

For research in this assignment, a CUDA-enabled GPU will be used for implementing use cases and testing the performance of CUDA against other parallel programming platforms.

Just as MPI can be deployed to manage a distributed memory system containing thousands of Central Processing Units, CUDA, often combined with MPI, can be deployed in a distributed memory context to manage cloud installations with thousands of Graphics Processing Units. CUDA accelerates graphics processing for scientific applications, including visualization of galaxies in astronomy and molecular visualization for biology and medicine. CUDA-enabled devices range widely, from embedded systems to cloud and data center hardware.

CUDA Toolkit

History & Current State of Central & Graphics Processing Units

History of GPUs

“The graphics processing unit (GPU), first invented by NVIDIA in 1999, is the most pervasive parallel processor to date.” Source The GeForce 256 was marketed as the world's first GPU. Designed on a single chip, this product was a champion in its time, delivering “480 million 8-sample fully filtered pixels per second.” Source It featured hardware Transform & Lighting and Cube Environment Mapping, which were the graphics rendering methods of the day. It supported Direct3D 7.0, which runs on Windows, and OpenGL 1.2, which is cross-platform and runs on both Windows and Linux.

Current State of GPUs

Today, graphics processing units (GPUs) exist in many different forms:

• Dedicated or discrete graphics cards are the most powerful form and are usually connected through a PCIe slot. Although these units have their own dedicated memory and can also use memory shared with the CPU, they cannot replace the CPU, and when used with CPUs they do not constitute distributed memory systems (systems with multiple CPUs, each with its own memory). Dedicated or discrete graphics cards are found in PCs that require extensive dedicated graphics rendering power, such as workstations for scientific visualization and PCs for gaming.

• Integrated graphics processing units (iGPUs) share RAM with the CPU and can sit on the CPU die or near it, depending on the architecture. Integrated GPUs usually ship with notebook-style laptops to accomplish daily graphics rendering tasks. At 2.6 trillion floating-point operations per second, the integrated GPU in Apple's recently released M1 chip was described by Apple as the world's fastest integrated graphics in a personal computer.

• Hybrid graphics processing units earned their name by sitting between dedicated and integrated GPUs in price and performance. An example is NVIDIA’s TurboCache, which shares main system memory.

• General Purpose Graphics Processing Units (GPGPUs) are modified vector processors, similar to the vector units found on CPUs, running compute kernels designed for high throughput. These modifications allow them to support APIs such as OpenMP. CUDA 1.0 was released on June 23, 2007, and NVIDIA’s G8x series onwards (all their GPUs released from November 8, 2006) are GPGPU capable.

• External GPUs (eGPUs) sit outside the computer's housing and have their own enclosure and power supply. They connect to the computer's PCIe bus, typically through a Thunderbolt 3 or 4 port. So, if you have a compatible computer and need more graphics processing power, an eGPU can be a cost-saving solution.

History and Current State of CUDA

NVIDIA’s first CUDA-capable architecture was Tesla (the G8x generation), which was followed in 2010 by Fermi. “NVIDIA’s Fermi GPU architecture consists of multiple streaming multiprocessors (SMs), each consisting of 32 cores, each of which can execute one floating-point or integer instruction per clock. The SMs are supported by a second-level cache, host interface, GigaThread scheduler, and multiple DRAM interfaces.” NVIDIA Fermi

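Despite the name, a CUDA core is not directly comparable to a CPU core. As the Fermi description shows, each CUDA core executes one floating-point or integer instruction per clock, much like a single arithmetic lane. It is the streaming multiprocessor, with its many cores, scheduler, and cache, that more closely resembles a CPU core.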

NVIDIA’s latest CUDA architecture at the time of writing is Ampere. Of its GA102 GPU, NVIDIA writes: “Each SM in GA10x GPUs contain 128 CUDA Cores, four third-generation Tensor Cores, a 256 KB Register File, four Texture Units, one second-generation Ray Tracing Core, and 128 KB of L1/Shared Memory, which can be configured for differing capacities depending on the needs of the compute or graphics workloads. The memory subsystem of GA102 consists of twelve 32-bit memory controllers (384-bit total). 512 KB of L2 cache is paired with each 32-bit memory controller, for a total of 6144 KB on the full GA102 GPU.” NVIDIA Ampere GA102

As the processing power of GPUs grew beyond what was needed to render pixels on the screen, a method was needed to put that otherwise idle computing power to use. General Purpose Graphics Processing Units can perform both general-purpose computations and graphics processing computations. With the release of CUDA, all CUDA-enabled GPUs can perform general-purpose processing, and double-precision floating point has been supported since compute capability 1.3.

History & Current State of CPUs

Winding back time to 1971, the Intel 4004 microprocessor spawned the microprocessor revolution, not long after Moore’s law was coined. Later came the move to multiple cores and the need for multi-threading on CPUs. These topics are all too familiar, so let us continue with brief details. The 4004 could address 5120 bits of RAM and 32768 bits of ROM, and could add two eight-digit numbers in about half a second. Many architectural leaps later, Intel's Willow Cove core features 80 KiB of L1 cache, 1.25 MB of L2 cache, and 3 MB of L3 cache per core. The L1 and L2 caches are private to each core, while the L3 cache is shared among all cores. With the correct driver installed, the operating system lets the CPU communicate with the GPU and assign it tasks as the user needs.

Architectural Differences

CPUs vs. GPUs

CPUs have faster clock speeds and can execute a wide array of general-purpose instructions used for controlling computers. At the time of their creation, GPUs could not do this. GPUs focus on having as many cores as possible with a limited instruction set, to provide sheer computing power in specialized scenarios. This higher number of cores allows modern GPUs to achieve more floating-point operations per second than modern CPUs. It is highly debated whether CPUs will replace GPUs or the other way around; I believe neither will happen, due to the various roles these units can take in any given system. A CPU is the physical and logical component that any operating system uses to run programs and manage hardware. If a computer has a screen, it needs a GPU, and the depth and number of layers of graphics rendering required determine which GPU should be used.

NVIDIA vs. AMD

The main architectural differences between AMD and NVIDIA GPUs lie in the architecture of their execution units, their cache hierarchies, and their graphics pipelines. Graphics pipelines are conceptual models used by GPUs to render 3D images on a 2D screen. Conceptually and theoretically these devices are quite different, but their practical uses and the diagrams of modern GPUs from both companies are quite similar. NVIDIA and AMD have different product lineups, audiences, and business visions: AMD offers CPUs and GPUs, while NVIDIA focuses on GPUs. Although AMD was founded in 1969, 24 years before NVIDIA (1993), AMD's market capitalization currently sits at about 28% of NVIDIA's. Intel’s market capitalization is currently about 43% of NVIDIA's, making NVIDIA the largest of the three big chip makers by market capitalization. Traditionally, in the world of PC building, NVIDIA and Intel have released the more expensive but best-performing hardware, while AMD has provided the best price-to-performance.

Software Development Strategies

The following platforms consist of software programming models, frameworks, toolkits, and compilers released by major tech companies such as NVIDIA and Intel to maximize the performance of their hardware. The focus will be on NVIDIA’s CUDA. By creating the architectures and the hardware built on them, and by abstracting software development through platforms that maximize the potential of that hardware, these companies have created complete computing systems that are extremely powerful.

Software Programming Models

Architectural models we have covered in this course include shared and distributed memory models; implementation models include SPMD (Single Program, Multiple Data) and MPMD (Multiple Program, Multiple Data). These models abstract away from hardware and programming languages, resulting in hardware-agnostic and language-agnostic models that let developers focus on designing solutions that should work on various systems in various environments. Programming models are required in parallel programming to understand which solution to choose for a given problem. The complexities and dependencies in a solution help determine which programming model to use and, where feasible, which hardware is best suited to it. The diverse array of hardware available for parallel computing, and the complex systems constructed for various use cases, in turn require parallel programming models to be equally diverse and complex.

oneAPI is branded by Intel as “A Unified X-Architecture Programming Model”. This course covered its applications on the CPU, but oneAPI is designed for diverse architectures and can also be used to simplify programming across GPUs. The Intel oneAPI DPC++ (Data Parallel C++) compiler includes CUDA backend support, which was initially released almost two years before this writing. Considering that Intel oneAPI was only officially released in December 2020, oneAPI seems to live up to its brand name.

Software Programming Frameworks

Software programming frameworks are structures in which libraries reside; developers access the code in those libraries through the frameworks' APIs.

OpenMP API

oneAPI Thread Building Blocks

CUDA API – an extension of the C and C++ programming languages allowing for thread-level parallelism. It is a high-level abstraction that allows developers to easily maximize the potential of CUDA-enabled devices. Through the CUDA API, parallel blocks of code are identified as kernels. A kernel is executed in parallel by CUDA threads; CUDA threads are collected into blocks, and blocks are organized into grids.

The special <<<…>>> syntax in a kernel invocation specifies the execution configuration: the number of blocks and the number of threads per block. For example, a launch configured with <<<1, N>>> runs 1 block of N threads.
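To make the execution configuration concrete, the following plain C++ sketch (host code, not CUDA) emulates how a launch such as vecAdd<<<blocks, threadsPerBlock>>>(a, b, c) gives each thread a global index, mirroring the CUDA built-ins blockIdx.x, blockDim.x, and threadIdx.x. The kernel name vecAdd and all helper names are hypothetical; on a GPU the two outer loops disappear because the hardware runs the threads in parallel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-thread body of a vector-add kernel. In CUDA this would be a
// __global__ function, with the index built from blockIdx.x, blockDim.x and threadIdx.x.
void vecAddThread(std::size_t blockIndex, std::size_t blockSize, std::size_t threadIndex,
                  const std::vector<float>& a, const std::vector<float>& b,
                  std::vector<float>& c) {
    std::size_t idx = blockIndex * blockSize + threadIndex;  // global thread index
    if (idx < c.size()) {  // guard: the last block may contain surplus threads
        c[idx] = a[idx] + b[idx];
    }
}

// Ceiling division: the smallest block count with blocks * threadsPerBlock >= n.
std::size_t blocksFor(std::size_t n, std::size_t threadsPerBlock) {
    assert(threadsPerBlock > 0);
    return (n + threadsPerBlock - 1) / threadsPerBlock;
}

// Sequential host emulation of vecAdd<<<blocks, threadsPerBlock>>>(a, b, c):
// every (block, thread) pair runs the same body; a GPU would run them in parallel.
std::vector<float> emulateLaunch(const std::vector<float>& a, const std::vector<float>& b,
                                 std::size_t threadsPerBlock) {
    assert(a.size() == b.size());
    std::vector<float> c(a.size());
    const std::size_t blocks = blocksFor(a.size(), threadsPerBlock);
    for (std::size_t block = 0; block < blocks; ++block) {
        for (std::size_t thread = 0; thread < threadsPerBlock; ++thread) {
            vecAddThread(block, threadsPerBlock, thread, a, b, c);
        }
    }
    return c;
}
```

For example, blocksFor(1000, 256) returns 4: the launch creates 1024 threads, and the bounds check leaves the 24 surplus threads idle. Rounding up with ceiling division is the usual way to size a grid when the data length is not a multiple of the block size.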

Common libraries contained in the CUDA Framework, accessible through the CUDA API:

cuBLAS - “the CUDA Basic Linear Algebra Subroutine library.”

cuFFT - “the CUDA Fast Fourier Transform library.”

Software Programming Toolkits

Where Frameworks are the structures with libraries containing code we access, toolkits are software programs written to help other software developers to develop programs and maintain code.

oneAPI HPC Toolkit

CUDA Toolkit – available for download from NVIDIA’s website. It includes IDE extensions that provide features such as syntax highlighting and easy creation of CUDA projects, similar to the tooling for OpenMP, TBB & MPI. Also included in the CUDA Toolkit are the CUDA compiler, the framework, the Nsight profiler for Visual Studio for monitoring application performance, and much more.

CUDA Application Domains

“CUDA and Nvidia GPUs have been adopted in many areas that need high floating-point computing performance, as summarized pictorially in the image above. A more comprehensive list includes:

1. Computational finance

2. Climate, weather, and ocean modeling

3. Data science and analytics

4. Deep learning and machine learning

5. Defense and intelligence

6. Manufacturing/AEC (Architecture, Engineering, and Construction): CAD and CAE (including computational fluid dynamics, computational structural mechanics, design and visualization, and electronic design automation)

7. Media and entertainment (including animation, modeling, and rendering; color correction and grain management; compositing; finishing and effects; editing; encoding and digital distribution; on-air graphics; on-set, review, and stereo tools; and weather graphics)

8. Medical imaging

9. Oil and gas

10. Research: Higher education and supercomputing (including computational chemistry and biology, numerical analytics, physics, and scientific visualization)

11. Safety and security

12. Tools and management”

Use Cases for Parallel Computing with GPUs

Video Processing

My favourite use case in this category is video games! Originally, the exclusive use case for the Graphics Processing Unit was… graphics processing, otherwise known as pushing pixels to the screen, and gaming graphics cards have been optimized for this behaviour since their conception. Interestingly, one of the most important calculations in graphics is matrix multiplication. Matrices are generic operators required for processing graphic transformations such as translation, rotation, scaling, reflection, and shearing. Of course, video rendering techniques have evolved greatly in the last couple of decades, from 2D to 3D rendering methods, with additional layers of graphical computation resulting in continuously increasing realism.
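The transformations listed above can be expressed as 3×3 matrices acting on homogeneous 2D coordinates (x, y, 1). The following C++ sketch is a generic illustration under that assumption, not code from this project; the helper names are hypothetical. On a GPU, the same small matrix-vector product would be applied to many vertices or pixels in parallel.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;  // homogeneous 2D point (x, y, 1)

// Matrix-vector product: each output component is the dot product of a row with p.
Vec3 apply(const Mat3& m, const Vec3& p) {
    Vec3 r{0.0, 0.0, 0.0};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            r[i] += m[i][j] * p[j];
    return r;
}

// Matrix-matrix product a * b: composes two transformations, applying b first.
Mat3 compose(const Mat3& a, const Mat3& b) {
    Mat3 r{};  // zero-initialized
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat3 translation(double tx, double ty) { return {{{1, 0, tx}, {0, 1, ty}, {0, 0, 1}}}; }
Mat3 scaling(double sx, double sy) { return {{{sx, 0, 0}, {0, sy, 0}, {0, 0, 1}}}; }

// Counter-clockwise rotation about the origin.
Mat3 rotation(double radians) {
    const double c = std::cos(radians), s = std::sin(radians);
    return {{{c, -s, 0}, {s, c, 0}, {0, 0, 1}}};
}
```

For example, rotating the point (1, 0) by 90° yields approximately (0, 1), and compose(translation(3, 4), scaling(2, 2)) maps (1, 1) to (5, 6), applying the scaling first. Homogeneous coordinates are what allow translation, which is not linear in (x, y), to be written as a matrix product like the others.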

A typical screen is 1920 by 1080 pixels with a refresh rate of 60 frames per second, so updating every pixel once per frame takes 1920 × 1080 × 60 = 124,416,000 pixel updates per second, and whatever number of operations each pixel needs is multiplied by that figure. From a computation standpoint that is not a very big number, especially considering that a 2 gigahertz CPU can perform around two billion operations per second (one per clock cycle). That raises the question: why GPUs? The answer is that in intense graphics rendering scenarios, the number of calculations needed to update all pixels on the screen per second can grow far beyond two billion operations per second. However, these are simple operations, such as matrix multiplication. This clarifies the role of the GPU: to perform massive amounts of simple operations in parallel. GPUs achieve this by performing many calculations per clock cycle rather than through high clock speeds, which are typically lower than those of CPUs. Source Source Source
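The pixel arithmetic above can be checked with a short C++ sketch. The per-pixel operation counts are hypothetical, and the 2 GHz budget assumes one operation per clock cycle, as in the text. The sketch shows that only 17 operations per pixel already exceed two billion operations per second at 1920 × 1080 and 60 frames per second.

```cpp
#include <cassert>
#include <cstdint>

// Pixel updates per second for a width x height display refreshed at fps.
std::uint64_t pixelUpdatesPerSecond(std::uint64_t width, std::uint64_t height, std::uint64_t fps) {
    return width * height * fps;
}

// Total operations per second if every pixel update costs opsPerPixel operations.
std::uint64_t opsPerSecond(std::uint64_t pixelsPerSecond, std::uint64_t opsPerPixel) {
    return pixelsPerSecond * opsPerPixel;
}

// Smallest per-pixel operation count whose total strictly exceeds the given budget,
// e.g. about two billion operations per second for a 2 GHz core doing one op per cycle.
std::uint64_t opsPerPixelToExceed(std::uint64_t budget, std::uint64_t pixelsPerSecond) {
    assert(pixelsPerSecond > 0);
    return budget / pixelsPerSecond + 1;
}
```

With 124,416,000 pixel updates per second, 16 operations per pixel total 1,990,656,000 operations, just under the budget, while 17 total 2,115,072,000, just over it. Real rendering workloads need far more than 17 operations per pixel.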

Modern GPUs have dedicated physical units for tasks such as 3D rendering, copying, video encoding and decoding, and more. As you may expect, 3D rendering is the process of converting 3D models into 2D images on a computer; video encoding occurs on outbound data and video decoding occurs on inbound data. For small tasks such as video calls and watching YouTube videos, integrated graphics will suffice, which is why they usually ship with notebook-style laptops. For larger tasks such as 4K video editing or 4K gaming, dedicated hardware is required.

Real Time

3D rendering often occurs in real time, especially in video games and simulations such as those that occur in software development for robotic applications.

Non-Real Time or Pre-Rendering

Any time we watch or upload a video through a computer, whether it is stored on the device or being streamed over a network, data containing the video must be encoded before it is stored or transmitted and decoded before it can be displayed. This is where pre-rendering occurs.

Machine Learning

Machine learning has gained extreme popularity in recent years due to a combination of factors, including big data, computational advances, and cloud business models. Almost every industry can benefit from machine learning and artificial intelligence technologies, whether through data analysis to improve operational quality or embedded robotic systems to automate physical tasks. (Sources: https://www.zdnet.com/article/three-reasons-why-ai-is-taking-off-right-now-and-what-you-need-to-do-about-it/, https://www.techcenturion.com/tensor-cores/)

Science & Research

CUDA accelerates graphics processing for scientific applications, including visualization of galaxies in astronomy and molecular visualization for biology and medicine.

Use Case Implementation

Machine Learning (TensorFlow)

The following models were tested on a CUDA-enabled GPU and on the Apple Neural Engine of an M1. The Python TensorFlow implementation abstracts away from hardware and allows the same code to run on various devices such as these. The code for these models resides in the TensorFlow Model Garden (https://github.com/tensorflow/models/).

TensorFlow Machine Learning Algorithms Tested:

BERT (Bidirectional Encoder Representations from Transformers) Models Test

Orbit Standard Runner Test

Image Classifier Trainer Util Test

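“NVIDIA's GTX 1080 does around 8.9 teraflops” (Source: https://www.ign.com/articles/2019/03/19/what-are-teraflops-and-what-do-they-tell-us-about-googles-stadia-performance)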

The Apple M1 GPU does around 2.6 teraflops (Source: https://www.tomshardware.com/news/Apple-M1-Chip-Everything-We-Know)

Peak throughput ratio (GTX 1080 / M1): 8.9 / 2.6 ≈ 3.42

Ratio of measured TensorFlow test times (GTX 1080 / M1): 1.136666667 / 0.142666667 ≈ 7.97

Although the GTX 1080's peak of around 8.9 teraflops is about three and a half times that of the Apple M1, the M1 consistently ran these TensorFlow machine learning tests about 8 times faster. This is most likely because the GTX 1080 is a Graphics Processing Unit meant for gaming, while the Apple M1 chip has a dedicated 16-core Neural Engine for machine learning. The test results are consistent with Apple's claim that this dedicated neural component greatly accelerates machine learning. Unfortunately, an NVIDIA Volta or Turing GPU was not available for testing in this scenario. Volta and Turing are NVIDIA GPU architectures that include Tensor Cores, which are dedicated to machine learning and greatly increase efficiency, and NVIDIA offers a wide range of such devices for machine learning, from embedded devices to datacenter and cloud. (Sources: https://developer.nvidia.com/deep-learning, https://www.howtogeek.com/701804/how-unified-memory-speeds-up-apples-m1-arm-macs/)

CUDA Performance Testing

CUDA Code

The following CUDA Matrix Multiplication Code was used for all CUDA Matrix Multiplication tests:

CUDA MATRIX MULTIPLICATION HOST CODE

CUDA MATRIX MULTIPLICATION DEVICE HEADER

CUDA MATRIX MULTIPLICATION DEVICE CODE
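The original CUDA host and device code for these tests were published as screenshots. As a hedged illustration of the general technique only, not a reproduction of that code, the following plain C++ sketch emulates a common naive layout for a matrix multiplication kernel: a 2D grid of 2D blocks in which each thread computes one element C[row][col] as the dot product of a row of A and a column of B. The 16×16 block size, the row-major flat storage, and all function names are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major n x n matrices stored in flat vectors, as is typical for GPU buffers.
using Matrix = std::vector<double>;

// Hypothetical per-thread body: in a CUDA kernel, row and col would come from
// blockIdx.y * blockDim.y + threadIdx.y and blockIdx.x * blockDim.x + threadIdx.x.
void matMulThread(std::size_t row, std::size_t col, std::size_t n,
                  const Matrix& a, const Matrix& b, Matrix& c) {
    if (row >= n || col >= n) return;  // surplus threads in edge blocks do nothing
    double sum = 0.0;
    for (std::size_t k = 0; k < n; ++k) {
        sum += a[row * n + k] * b[k * n + col];
    }
    c[row * n + col] = sum;
}

// Sequential emulation of a launch with a grid x grid arrangement of tile x tile blocks.
Matrix emulateMatMul(const Matrix& a, const Matrix& b, std::size_t n, std::size_t tile = 16) {
    assert(a.size() == n * n && b.size() == n * n && tile > 0);
    Matrix c(n * n, 0.0);
    const std::size_t grid = (n + tile - 1) / tile;  // blocks per grid dimension
    for (std::size_t by = 0; by < grid; ++by)
        for (std::size_t bx = 0; bx < grid; ++bx)
            for (std::size_t ty = 0; ty < tile; ++ty)
                for (std::size_t tx = 0; tx < tile; ++tx)
                    matMulThread(by * tile + ty, bx * tile + tx, n, a, b, c);
    return c;
}
```

For 2×2 matrices A = [1 2; 3 4] and B = [5 6; 7 8], emulateMatMul returns [19 22; 43 50]. Only 4 of the 256 emulated threads in the single 16×16 block do useful work, which hints at why very small problems leave most of a GPU idle.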

OpenCL Code

The following OpenCL Matrix Multiplication Code was used for all Matrix Multiplication tests on an OpenCL-compatible GPU (the same GPU used for the CUDA tests):

OpenCL COMPILER DIRECTIVES

OpenCL HOST CODE

OpenCL DEVICE CODE

Matrix Multiplication – CUDA vs. OpenMP

The OpenMP Matrix Multiplication solution from Workshop 3 was used for testing in this scenario.

For this test, the CUDA code was run on a CUDA-enabled GPU and the OpenMP code was run on the CPU; a truer test of CUDA vs. OpenMP would be to have the OpenMP code run on the GPU. Given that matrix multiplication operations are well suited to GPUs, and that CUDA is optimized for NVIDIA GPUs, this seems like an unfair test, and CUDA crushed OpenMP.
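The following C++ sketch shows what an OpenMP matrix multiplication can look like; it is an illustration under stated assumptions, not the Workshop 3 solution used in these tests, and the function names are hypothetical. matMulHost distributes rows across CPU threads with #pragma omp parallel for, as in a CPU test. matMulOffload sketches the kind of OpenMP target directive that the truer test suggested above would use to run OpenMP code on a GPU; when no offload device is available, the target region runs on the host by default. Compiled without OpenMP support (for example, without -fopenmp), the pragmas are ignored and both functions run serially.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// C = A * B for row-major n x n matrices, with rows distributed across CPU threads.
void matMulHost(const double* a, const double* b, double* c, long n) {
    #pragma omp parallel for
    for (long i = 0; i < n; ++i) {
        for (long j = 0; j < n; ++j) {
            double sum = 0.0;
            for (long k = 0; k < n; ++k) sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    }
}

// Same computation written for an accelerator: A and B are mapped to the device,
// C is mapped back, and the (i, j) iterations are spread over teams of threads.
void matMulOffload(const double* a, const double* b, double* c, long n) {
    #pragma omp target teams distribute parallel for collapse(2) \
        map(to: a[0:n*n], b[0:n*n]) map(from: c[0:n*n])
    for (long i = 0; i < n; ++i) {
        for (long j = 0; j < n; ++j) {
            double sum = 0.0;
            for (long k = 0; k < n; ++k) sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    }
}

// Convenience wrapper over std::vector storage.
std::vector<double> multiply(const std::vector<double>& a, const std::vector<double>& b,
                             long n, bool offload) {
    assert(static_cast<long>(a.size()) == n * n && static_cast<long>(b.size()) == n * n);
    std::vector<double> c(static_cast<std::size_t>(n * n));
    if (offload) {
        matMulOffload(a.data(), b.data(), c.data(), n);
    } else {
        matMulHost(a.data(), b.data(), c.data(), n);
    }
    return c;
}
```

Whether matMulOffload actually runs on the GPU depends on using a compiler built with offloading support for that device; otherwise it behaves like the host version.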

Matrix Multiplication - CUDA vs OpenCL

As covered earlier, CUDA is proprietary to NVIDIA and only works on CUDA-enabled NVIDIA GPUs. This is not the case for OpenCL, an open, royalty-free standard that runs on a wide variety of GPUs and CPUs. One would therefore expect CUDA to outperform OpenCL on any given CUDA-enabled device. Interestingly, with a small array size OpenCL seems to outperform CUDA, presumably due to parallel overhead. Memory access optimization is always critical in High Performance Computing and must always be considered: memory access can make or break the efficiency of an algorithm.

CUDA Limitations

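Main limitations of early CUDA versions: no recursive functions, and a minimum unit block of 32 threads (Source: http://ixbtlabs.com/articles3/video/cuda-1-p3.html#:~:text=Main%20limitations%20of%20CUDA%3A,unit%20block%20of%2032%20threads). Recursion in device functions has since been supported on devices of compute capability 2.0 and later, but threads are still scheduled in warps of 32, so a block whose size is not a multiple of 32 leaves some execution lanes idle.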

Because CUDA is proprietary, one of its limitations is the range of devices and systems it supports: only NVIDIA GPUs. The same proprietary nature lets NVIDIA tune CUDA closely to its own hardware, which is consistent with CUDA outperforming OpenCL on a CUDA-enabled device in all but the smallest matrix multiplication tests above.
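The cost of the 32-thread granularity mentioned above can be sketched in plain C++. The helper names are hypothetical; the warp size of 32 matches the value CUDA device code exposes through the built-in warpSize variable on current NVIDIA GPUs.

```cpp
#include <cassert>

// Threads execute in warps of 32 on NVIDIA GPUs (warpSize in CUDA device code).
constexpr int kWarpSize = 32;

// Warps occupied by a block of the given size (ceiling division).
int warpsPerBlock(int threadsPerBlock) {
    assert(threadsPerBlock > 0);
    return (threadsPerBlock + kWarpSize - 1) / kWarpSize;
}

// Execution lanes left idle in the block's last, partially filled warp.
int idleLanes(int threadsPerBlock) {
    return warpsPerBlock(threadsPerBlock) * kWarpSize - threadsPerBlock;
}
```

A block of 100 threads, for instance, occupies 4 warps and leaves 28 lanes idle, and even a single-thread block occupies a whole warp. This is why block sizes are commonly chosen as multiples of 32.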

SLIDESHOW

SOURCES

https://www.apple.com/newsroom/2020/11/apple-unleashes-m1/

http://www.vintagecalculators.com/html/busicom_141-pf_and_intel_4004.html

https://upload.wikimedia.org/wikipedia/commons/8/87/4004_arch.svg

https://www.nextplatform.com/2020/05/28/diving-deep-into-the-nvidia-ampere-gpu-architecture/

https://www.extremetech.com/extreme/314956-intel-confirms-8-core-tiger-lake-cpus-are-on-the-way

https://hexus.net/tech/reviews/cpu/147440-intel-core-i9-11900k/

https://www.anandtech.com/show/391

https://www.omnisci.com/technical-glossary/cpu-vs-gpu

https://www.sciencedirect.com/topics/computer-science/programming-model

https://en.wikichip.org/wiki/intel/microarchitectures/sunny_cove#MSROM_.26_Stack_Engine

https://upload.wikimedia.org/wikipedia/en/b/b9/Nvidia_CUDA_Logo.jpg