OPS435 Assignment 2 for Section A

(Created page with "Category:OPS435-PythonCategory:rchan =Assignment 2 - Usage Report= '''Weight:''' 10% of the overall grade '''Due Date:''' Please follow the three stages of submissio...") |

Eric.brauer (talk | contribs) (update for summer) |

||

| Line 1: | Line 1: | ||

| − | [[Category:OPS435-Python]][[Category: | + | [[Category:OPS435-Python]][[Category:ebrauer]] |

| + | = Overview: du Improved = | ||

| + | <code>du</code> is a tool for inspecting directories. It will return the contents of a directory along with how much drive space they are using. However, it can be parse its output quickly, as it usually returns file sizes as a number of bytes: | ||

| − | + | <code><b>user@host ~ $ du --max-depth 1 /usr/local/lib</b></code> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

<pre> | <pre> | ||

| − | + | 164028 /usr/local/lib/heroku | |

| − | + | 11072 /usr/local/lib/python2.7 | |

| − | + | 92608 /usr/local/lib/node_modules | |

| − | + | 8 /usr/local/lib/python3.8 | |

| − | + | 267720 /usr/local/lib | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

</pre> | </pre> | ||

| − | + | You will therefore be creating a tool called <b>duim (du improved></b>. Your script will call du and return the contents of a specified directory, and generate a bar graph for each subdirectory. The bar graph will represent the drive space as percent of the total drive space for the specified directory. | |

| + | An example of the finished code your script might produce is this: | ||

| − | + | <code><b>user@host ~ $ ./duim.py /usr/local/lib</b></code> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

<pre> | <pre> | ||

| − | [ | + | 61 % [============ ] 160.2 MiB /usr/local/lib/heroku |

| − | + | 4 % [= ] 10.8 MiB /usr/local/lib/python2.7 | |

| − | + | 34 % [======= ] 90.4 MiB /usr/local/lib/node_modules | |

| − | + | 0 % [ ] 8.0 kiB /usr/local/lib/python3.8 | |

| − | + | Total: 261.4 MiB /usr/local/lib | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

</pre> | </pre> | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | The details of the final output will be up to you, but you will be required to fulfill some specific requirements before completing your script. Read on... | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | = Assignment Requirements = | |

| + | == Permitted Modules == | ||

| + | <b><font color='blue'>Your python script is allowed to import only the <u>os, subprocess and sys</u> modules from the standard library.</font></b> | ||

| − | == | + | == Required Functions == |

| − | + | You will need to complete the functions inside the provided file called <code>duim.py</code>. The provided <code>checkA1.py</code> will be used to test these functions. | |

| − | |||

| − | |||

| − | < | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * <code>call_du_sub()</code> should take the target directory as an argument and return a list of strings returned by the command <b>du -d 1<target directory></b>. | |

| − | + | ** Use subprocess.Popen. | |

| − | + | ** '-d 1' specifies a <i>max depth</i> of 1. Your list shouldn't include files, just a list of subdirectories in the target directory. | |

| − | + | ** Your list should <u>not</u> contain newline characters. | |

| − | The | + | * <code>percent_to_graph()</code> should take two arguments: percent and the total chars. It should return a 'bar graph' as a string. |

| + | ** Your function should check that the percent argument is a valid number between 0 and 100. It should fail if it isn't. You can <code>raise ValueError</code> in this case. | ||

| + | ** <b>total chars</b> refers to the total number of characters that the bar graph will be composed of. You can use equal signs <code>=</code> or any other character that makes sense, but the empty space <b>must be composed of spaces</b>, at least until you have passed the first milestone. | ||

| + | ** The string returned by this function should only be composed of these two characters. For example, calling <code>percent_to_graph(50, 10)</code> should return: | ||

| + | '===== ' | ||

| + | * <code>create_dir_dict</code> should take a list as the argument, and should return a dictionary. | ||

| + | ** The list can be the list returned by <code>call_du_sub()</code>. | ||

| + | ** The dictionary that you return should have the full directory name as <i>key</i>, and the number of bytes in the directory as the <i>value</i>. This value should be an integer. For example, using the example of <b>/usr/local/lib</b>, the function would return: | ||

| + | {'/usr/local/lib/heroku': 164028, '/usr/local/lib/python2.7': 11072, ...} | ||

| − | == | + | == Additional Functions == |

| − | + | You may create any other functions that you think appropriate, especially when you begin to build additional functionality. Part of your evaluation will be on how "re-usable" your functions are, and sensible use of arguments and return values. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | == Use of GitHub == | |

| − | + | You will be graded partly on the quality of your Github commits. You may make as many commits as you wish, it will have no impact on your grade. The only exception to this is <b>assignments with very few commits.</b> These will receive low marks for GitHub use and may be flagged for possible academic integrity violations. | |

| − | + | <b><font color='blue'>Assignments that do not adhere to these requirements may not be accepted.</font></b> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | The | ||

| − | < | ||

| − | |||

| − | < | ||

| − | |||

| − | < | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | </ | ||

| − | + | Professionals generally follow these guidelines: | |

| − | + | * commit their code after every significant change, | |

| − | < | + | * the code <i>should hopefully</i> run without errors after each commit, and |

| − | + | * every commit has a descriptive commit message. | |

| − | </ | ||

| − | + | After completing each function, make a commit and push your code. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | After fixing a problem, make a commit and push your code. | |

| − | |||

| − | |||

| − | |||

| − | < | + | <b><u>GitHub is your backup and your proof of work.</u></b> |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | </ | ||

| − | |||

| − | + | These guidelines are not always possible, but you will be expected to follow these guidelines as much as possible. Break your problem into smaller pieces, and work iteratively to solve each small problem. Test your code after each small change you make, and address errors as soon as they arise. It will make your life easier! | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | == Coding Standard == | |

| − | + | Your python script must follow the following coding guide: | |

| − | + | * [https://www.python.org/dev/peps/pep-0008/ PEP-8 -- Style Guide for writing Python Code] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | === Documentation === | |

| + | * Please use python's docstring to document your python script (script level documentation) and each of the functions (function level documentation) you created for this assignment. The docstring should describe 'what' the function does, not 'how' it does. | ||

| + | * Your script should also include in-line comments to explain anything that isn't immediately obvious to a beginner programmer. <b><u>It is expected that you will be able to explain how each part of your code works in detail.</u></b> | ||

| + | * Refer to the docstring for after() to get an idea of the function docstrings required. | ||

| − | === | + | === Authorship Declaration === |

| − | + | All your Python code for this assignment must be placed in the provided Python file called <b>assignment1.py</b>. <u>Do not change the name of this file.</u> Please complete the declaration <b><u>as part of the docstring</u></b> in your Python source code file (replace "Student Name" with your own name). | |

| − | < | ||

| − | |||

| − | </ | ||

| − | + | = Submission Guidelines and Process = | |

| − | |||

| − | == | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | == Clone Your Repo (ASAP) == | |

| − | + | The first step will be to clone the Assignment 1 repository. The invite link will be provided to you by your professor. The repo will contain a check script, a README file, and the file where you will enter your code. | |

| − | |||

| − | + | == The First Milestone (due February 14) == | |

| − | + | For the first milestone you will have two functions to complete. | |

| − | + | * <code>call_du_sub</code> will take one argument and return a list. The argument is a target directory. The function will use <code>subprocess.Popen</code> to run the command <b>du -d l <target_directory></b>. | |

| − | + | * <code>percent_to_graph</code> will take two arguments and return a string. | |

| − | |||

| − | |||

| − | |||

| − | </ | ||

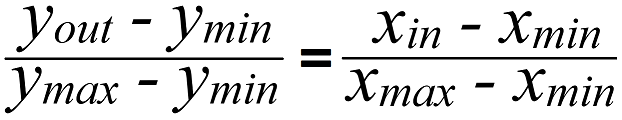

| − | + | In order to complete <code>percent_to_graph()</code>, it's helpful to know the equation for converting a number from one scale to another. | |

| − | |||

| − | < | ||

| − | |||

| − | </ | ||

| − | + | [[File:Scaling-formula.png]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | In this equation, ``x`` refers to your input value percent and ``y`` will refer to the number of symbols to print. The max of percent is 100 and the min of percent is 0. | |

| + | Be sure that you are rounding to an integer, and then print that number of symbols to represent the percentage. The number of spaces that you print will be the inverse. | ||

| − | + | Test your functions with the Python interpreter. Use <code>python3</code>, then: | |

| − | + | import duim | |

| − | + | duim.percent_to_graph(50, 10) | |

| − | |||

| − | |||

| − | + | To test with the check script, run the following: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | < | + | <code>python3 checkA1.py -f -v TestPercent</code> |

| − | |||

| − | </ | ||

| − | + | == Second Milestone (due February 21) == | |

| − | + | For the second milestone you will have one more function to complete. | |

| − | + | * <code>create_dir_dict</code> will take your list from <code>call_du_sub</code> and return a dictionary. | |

| − | + | ** Every item in your list should create a key in your dictionary. | |

| − | + | ** Your dictionary values should be a number of bytes. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | For example: <code>{'/usr/lib/local': 33400}</code> | |

| − | + | ||

| − | + | ** Again, test using your Python interpreter or the check script. | |

| − | |||

| − | |||

| − | + | To run the check script, enter the following: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | < | + | <code>python checkA1.py -f -v TestDirDict</code> |

| − | |||

| − | </ | ||

| − | + | == Minimum Viable Product == | |

| − | + | Once you have achieved the Milestones, you will have to do the following to get a minimum viable product: | |

| − | + | * In your <code>if __name__ == '__main__'</code> block, you will have to check command line arguments. | |

| − | + | ** If the user has entered no command line argument, use the current directory. | |

| − | + | ** If the user has entered more than one argument, or their argument isn't a valid directory, print an error message. | |

| − | + | ** Otherwise, the argument will be your target directory. | |

| − | + | * Call <code>call_du_sub</code> with the target directory. | |

| + | * Pass the return value from that function to <code>create_dir_dict</code> | ||

| + | * You may wish to create one or more functions to do the following: | ||

| + | ** Use the total size of the target directory to calculate percentage. | ||

| + | ** For each subdirectory of target directory, you will need to calculate a percentage, using the total of the target directory. | ||

| + | ** Once you've calculated percentage, call <code>percent_to_graph</code> with a max_size of your choice. | ||

| + | ** For every subdirectory, print <i>at least</i> the percent, the bar graph, and the name of the subdirectory. | ||

| + | ** The target directory <b>should not</b> have a bar graph. | ||

| − | + | == Additional Features == | |

| − | + | After completing the above, you are expected to add some additional features. Some improvements you could make are: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * Format the output in a way that is easy to read. | |

| + | * Add colour to the output. | ||

| + | * Add more error checking, print a usage message to the user. | ||

| + | * Convert bytes to a human-readable format. NOTE: This doesn't have to be 100% accurate to get marks. | ||

| + | * Accept more options from the user. | ||

| + | * Sort the output by percentage, or by filename. | ||

| − | + | It is expected that the additional features you provided should be useful, non-trivial, they should not require super-user privileges and should not require the installation of additional packages to work. (ie: I shouldn't have to run pip to make your assignment work). | |

| − | |||

| − | |||

| − | |||

| − | |||

| + | == The Assignment (due March 7, 11:59pm) == | ||

| + | * Be sure to make your final commit before the deadline. | ||

| + | * Then, copy the contents of your <b>duim.py</b> file into a Word document, and submit it to Blackboard. <i>I will use GitHub to evaluate your deadline, but submitting to Blackboard tells me that you wish to be evaluated.</i> | ||

| − | + | = Rubric = | |

{| class="wikitable" border="1" | {| class="wikitable" border="1" | ||

! Task !! Maximum mark !! Actual mark | ! Task !! Maximum mark !! Actual mark | ||

|- | |- | ||

| − | | | + | | Program Authorship Declaration || 5 || |

|- | |- | ||

| − | | | + | | required functions design || 5 || |

|- | |- | ||

| − | | | + | | required functions readability || 5 || |

|- | |- | ||

| − | | | + | | main loop design || 10 || |

|- | |- | ||

| − | | | + | | main loop readability || 10 || |

|- | |- | ||

| − | | | + | | output function design || 5 || |

|- | |- | ||

| − | | | + | | output function readability || 5 || |

|- | |- | ||

| − | | | + | | additional features implemented || 20 || |

|- | |- | ||

| − | | | + | | docstrings and comments || 5 || |

|- | |- | ||

| − | | | + | | First Milestone || 10 || |

|- | |- | ||

| − | | '''Total''' || 100 || | + | | Second Milestone || 10 || |

| + | |- | ||

| + | | github.com repository: Commit messages and use || 10 || | ||

| + | |- | ||

| + | |'''Total''' || 100 || | ||

| + | |||

|} | |} | ||

| − | = | + | = Due Date and Final Submission requirement = |

| − | + | ||

| − | * | + | Please submit the following files by the due date: |

| − | + | * [ ] your python script, named as 'duim.py', in your repository, and also '''submitted to Blackboard''', by March 7 at 11:59pm. | |

Revision as of 10:28, 12 July 2021


Overview: du Improved

du is a tool for inspecting directories. It will return the contents of a directory along with how much drive space they are using. However, it can be difficult to parse its output quickly, as it usually returns sizes as a plain number (GNU du counts 1 KiB blocks by default; this assignment loosely refers to that number as bytes):

user@host ~ $ du --max-depth 1 /usr/local/lib

164028 /usr/local/lib/heroku
11072 /usr/local/lib/python2.7
92608 /usr/local/lib/node_modules
8 /usr/local/lib/python3.8
267720 /usr/local/lib

You will therefore be creating a tool called duim (du improved). Your script will call du and return the contents of a specified directory, and generate a bar graph for each subdirectory. The bar graph will represent the drive space used by the subdirectory as a percentage of the total drive space used by the specified directory.

An example of the output your finished script might produce is this:

user@host ~ $ ./duim.py /usr/local/lib

61 % [============        ] 160.2 MiB /usr/local/lib/heroku
4 % [=                   ] 10.8 MiB /usr/local/lib/python2.7
34 % [=======             ] 90.4 MiB /usr/local/lib/node_modules
0 % [                    ] 8.0 kiB /usr/local/lib/python3.8
Total: 261.4 MiB /usr/local/lib

The details of the final output will be up to you, but you will be required to fulfill some specific requirements before completing your script. Read on...

Assignment Requirements

Permitted Modules

Your python script is allowed to import only the os, subprocess and sys modules from the standard library.

Required Functions

You will need to complete the functions inside the provided file called duim.py. The provided checkA1.py will be used to test these functions.

- call_du_sub() should take the target directory as an argument and return a list of strings returned by the command du -d 1 <target directory>.
  - Use subprocess.Popen.
  - '-d 1' specifies a max depth of 1. Your list shouldn't include files, just a list of subdirectories in the target directory.
  - Your list should not contain newline characters.
- percent_to_graph() should take two arguments: percent and the total chars. It should return a 'bar graph' as a string.
  - Your function should check that the percent argument is a valid number between 0 and 100. It should fail if it isn't. You can raise ValueError in this case.
  - total chars refers to the total number of characters that the bar graph will be composed of. You can use equal signs = or any other character that makes sense, but the empty space must be composed of spaces, at least until you have passed the first milestone.
  - The string returned by this function should only be composed of these two characters. For example, calling percent_to_graph(50, 10) should return:

    '=====     '

- create_dir_dict() should take a list as the argument, and should return a dictionary.
  - The list can be the list returned by call_du_sub().
  - The dictionary that you return should have the full directory name as key, and the number of bytes in the directory as the value. This value should be an integer. For example, using the example of /usr/local/lib, the function would return:

    {'/usr/local/lib/heroku': 164028, '/usr/local/lib/python2.7': 11072, ...}
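As an illustration of the first required function, here is a minimal sketch of how call_du_sub() might use subprocess.Popen. It is one possible approach, not the reference solution: the choice to pass the command as a list and to discard stderr is an assumption, and the check script may expect different behaviour.

```python
import subprocess


def call_du_sub(target_dir):
    """Return the output lines of `du -d 1 <target_dir>` as a list of strings."""
    # Passing the command as a list avoids shell quoting problems with
    # directory names that contain spaces.
    process = subprocess.Popen(
        ["du", "-d", "1", target_dir],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,  # decode the output bytes to str
    )
    stdout, _stderr = process.communicate()
    # splitlines() removes the newline at the end of every line.
    return stdout.splitlines()
```

Each element of the returned list is one line of du output: a size, a tab, and a path. The last element is normally the target directory itself.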

Additional Functions

You may create any other functions that you think appropriate, especially when you begin to build additional functionality. Part of your evaluation will be based on how reusable your functions are and on your sensible use of arguments and return values.

Use of GitHub

You will be graded partly on the quality of your GitHub commits. You may make as many commits as you wish; the number will have no impact on your grade. The only exception to this is assignments with very few commits. These will receive low marks for GitHub use and may be flagged for possible academic integrity violations.

Assignments that do not adhere to these requirements may not be accepted.

Professionals generally follow these guidelines:

- commit their code after every significant change,

- the code should hopefully run without errors after each commit, and

- every commit has a descriptive commit message.

After completing each function, make a commit and push your code.

After fixing a problem, make a commit and push your code.

GitHub is your backup and your proof of work.

It is not always possible to follow these guidelines, but you will be expected to follow them as much as possible. Break your problem into smaller pieces, and work iteratively to solve each small problem. Test your code after each small change you make, and address errors as soon as they arise. It will make your life easier!

Coding Standard

Your Python script must follow this coding guide:

- PEP-8 -- Style Guide for writing Python Code: https://www.python.org/dev/peps/pep-0008/

Documentation

- Please use Python docstrings to document your Python script (script level documentation) and each of the functions (function level documentation) you created for this assignment. The docstring should describe 'what' the function does, not 'how' it does it.

- Your script should also include in-line comments to explain anything that isn't immediately obvious to a beginner programmer. It is expected that you will be able to explain how each part of your code works in detail.

- Refer to the docstring for after() to get an idea of the function docstrings required.
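To make the difference between script level and function level documentation concrete, here is a short sketch. The function format_percent() is a hypothetical helper invented for this example; it is not part of the provided duim.py, and the wording of the docstrings is only a suggestion.

```python
#!/usr/bin/env python3
"""
duim.py: report the disk usage of a directory as a set of bar graphs.

This is the script level docstring. It says what the script is for.
"""


def format_percent(percent):
    """Return percent as a right-aligned string, for example ' 61 %'."""
    # The docstring above says WHAT the function returns. This in-line
    # comment explains HOW: pad the whole number to a width of three.
    return "{:3.0f} %".format(percent)
```

In the Python interpreter, help(format_percent) displays the function docstring, which is a quick way to check that your documentation reads well.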

Authorship Declaration

All your Python code for this assignment must be placed in the provided Python file called duim.py. Do not change the name of this file. Please complete the declaration as part of the docstring in your Python source code file (replace "Student Name" with your own name).

Submission Guidelines and Process

Clone Your Repo (ASAP)

The first step will be to clone the Assignment 1 repository. The invite link will be provided to you by your professor. The repo will contain a check script, a README file, and the file where you will enter your code.

The First Milestone (due February 14)

For the first milestone you will have two functions to complete.

- call_du_sub will take one argument and return a list. The argument is a target directory. The function will use subprocess.Popen to run the command du -d 1 <target_directory>.
- percent_to_graph will take two arguments and return a string.

In order to complete percent_to_graph(), it's helpful to know the equation for converting a number from one scale to another.

[Figure: Scaling-formula.png]

In this equation, x refers to your input value percent and y will refer to the number of symbols to print. The max of percent is 100 and the min of percent is 0.

Be sure that you are rounding to an integer, and then print that number of symbols to represent the percentage. The number of spaces that you print will be the remainder: total chars minus the number of symbols.
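The sketch below assumes the equation is the usual linear rescaling formula, y = (x - x_min) / (x_max - x_min) * (y_max - y_min) + y_min. With x_min = 0, x_max = 100, y_min = 0 and y_max = total chars, it reduces to y = percent / 100 * total chars. This is an illustration under that assumption, not the reference solution, and the check script may test edge cases differently.

```python
def percent_to_graph(percent, total_chars):
    """Return a bar graph string of total_chars characters for percent."""
    # Reject anything that is not a number between 0 and 100.
    if isinstance(percent, bool) or not isinstance(percent, (int, float)):
        raise ValueError("percent must be a number")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Linear rescaling from the range 0..100 to the range 0..total_chars.
    filled = round(percent / 100 * total_chars)
    # Whatever is not filled with symbols is filled with spaces.
    return "=" * filled + " " * (total_chars - filled)
```

Note that Python's round() sends exact halves to the nearest even integer, so a value of 2.5 symbols becomes 2, not 3. Whether that matters depends on what the check script expects.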

Test your functions with the Python interpreter. Use python3, then:

import duim
duim.percent_to_graph(50, 10)

To test with the check script, run the following:

python3 checkA1.py -f -v TestPercent

Second Milestone (due February 21)

For the second milestone you will have one more function to complete.

- create_dir_dict will take your list from call_du_sub and return a dictionary.
  - Every item in your list should create a key in your dictionary.
  - Your dictionary values should be a number of bytes.
  - For example: {'/usr/lib/local': 33400}
  - Again, test using your Python interpreter or the check script.

To run the check script, enter the following:

python3 checkA1.py -f -v TestDirDict
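A minimal sketch of create_dir_dict() follows. It assumes every item in the list has the form produced by du, that is, a size, some whitespace (a tab), and a path. It does not handle empty or malformed lines, so treat it as a starting point only.

```python
def create_dir_dict(du_lines):
    """Return a {directory: size} dictionary built from lines of du output."""
    dir_dict = {}
    for line in du_lines:
        # Each line looks like "164028<TAB>/usr/local/lib/heroku". Splitting
        # only once keeps directory names that contain spaces intact.
        size, path = line.split(maxsplit=1)
        dir_dict[path] = int(size)
    return dir_dict
```

You can try it in the interpreter with a hand-written list, such as ['8\t/usr/local/lib/python3.8'], before connecting it to call_du_sub.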

Minimum Viable Product

Once you have achieved the Milestones, you will have to do the following to get a minimum viable product:

- In your if __name__ == '__main__' block, you will have to check command line arguments.
  - If the user has entered no command line argument, use the current directory.
  - If the user has entered more than one argument, or their argument isn't a valid directory, print an error message.
  - Otherwise, the argument will be your target directory.
- Call call_du_sub with the target directory.
- Pass the return value from that function to create_dir_dict.
- You may wish to create one or more functions to do the following:
  - Use the total size of the target directory to calculate percentage.
  - For each subdirectory of target directory, you will need to calculate a percentage, using the total of the target directory.
  - Once you've calculated percentage, call percent_to_graph with a max_size of your choice.
  - For every subdirectory, print at least the percent, the bar graph, and the name of the subdirectory.
  - The target directory should not have a bar graph.
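The argument checking and percentage steps above could be sketched as two small functions. The names get_target_dir() and calc_percentages() are hypothetical and chosen only for this example. The sketch assumes the dictionary key for the target directory is spelled exactly as the path that was passed to du.

```python
import os


def get_target_dir(args):
    """Return the target directory selected by the command line arguments.

    args is the argument list without the script name (sys.argv[1:]).
    """
    if len(args) == 0:
        return os.getcwd()  # no argument: use the current directory
    if len(args) > 1:
        raise ValueError("expected at most one directory argument")
    if not os.path.isdir(args[0]):
        raise ValueError(args[0] + " is not a valid directory")
    return args[0]


def calc_percentages(dir_dict, target_dir):
    """Return {subdirectory: percent of the target directory's total size}."""
    total = dir_dict[target_dir]
    percentages = {}
    for path, size in dir_dict.items():
        if path == target_dir:
            continue  # the target directory itself gets no bar graph
        # Guard against dividing by zero for an empty directory.
        percentages[path] = size / total * 100 if total else 0
    return percentages
```

In the main block you could call get_target_dir(sys.argv[1:]) inside a try statement, print the error message if ValueError is raised, and otherwise pass the result on to call_du_sub. Raising an exception instead of printing inside the function keeps the function reusable.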

Additional Features

After completing the above, you are expected to add some additional features. Some improvements you could make are:

- Format the output in a way that is easy to read.

- Add colour to the output.

- Add more error checking, print a usage message to the user.

- Convert bytes to a human-readable format. NOTE: This doesn't have to be 100% accurate to get marks.

- Accept more options from the user.

- Sort the output by percentage, or by filename.

The additional features you provide are expected to be useful and non-trivial. They should not require super-user privileges or the installation of additional packages to work (i.e., I shouldn't have to run pip to make your assignment work).
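Two of the suggested features, human-readable sizes and sorting, could be sketched as follows. The function names human_readable() and sort_by_size() are hypothetical. The conversion assumes that the numbers reported by du are counts of 1 KiB blocks, which is consistent with the example output above (164028 is shown as 160.2 MiB), and it reuses the unit spellings from that example.

```python
def human_readable(kib):
    """Convert a size in KiB to a string such as '160.2 MiB'."""
    units = ["kiB", "MiB", "GiB", "TiB"]
    size = float(kib)
    for unit in units:
        # Stop at the first unit that keeps the number below 1024,
        # or at the largest unit available.
        if size < 1024 or unit == units[-1]:
            return "{:.1f} {}".format(size, unit)
        size /= 1024


def sort_by_size(dir_dict):
    """Return (directory, size) pairs ordered from largest to smallest."""
    return sorted(dir_dict.items(), key=lambda item: item[1], reverse=True)
```

Both functions take plain values and return plain values, so they can be tested in the interpreter without running du at all.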

The Assignment (due March 7, 11:59pm)

- Be sure to make your final commit before the deadline.

- Then, copy the contents of your duim.py file into a Word document, and submit it to Blackboard. I will use GitHub to evaluate whether you met the deadline, but submitting to Blackboard tells me that you wish to be evaluated.

Rubric

| Task | Maximum mark | Actual mark |

|---|---|---|

| Program Authorship Declaration | 5 | |

| required functions design | 5 | |

| required functions readability | 5 | |

| main loop design | 10 | |

| main loop readability | 10 | |

| output function design | 5 | |

| output function readability | 5 | |

| additional features implemented | 20 | |

| docstrings and comments | 5 | |

| First Milestone | 10 | |

| Second Milestone | 10 | |

| github.com repository: Commit messages and use | 10 | |

| Total | 100 | |

Due Date and Final Submission requirement

Please submit the following files by the due date:

- [ ] your python script, named as 'duim.py', in your repository, and also submitted to Blackboard, by March 7 at 11:59pm.