Difference between revisions of "GPU621/History of Parallel Computing"

(→Parallel Programming vs. Concurrent Programming) |

(→Demise of Single-Core and Rise of Multi-Core Systems) |

||

| Line 40: | Line 40: | ||

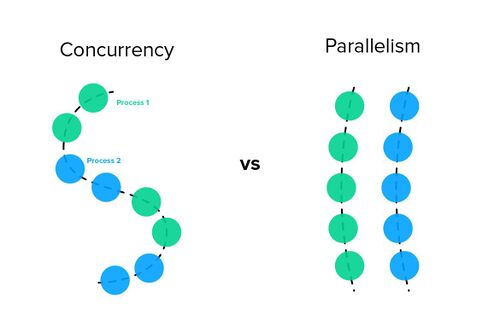

Parallel computing is the idea that large problems can be split into smaller tasks, and these tasks are independent of each other running '''simultaneously''' on '''more than one''' processor. This concept is different from concurrent programming, which is the composition of multiple processes that may begin and end at different times, but are managed by the host system’s task scheduler which frequently '''switches between them'''. This gives off the illusion of multi-tasking as multiple tasks are '''in progress''' on a '''single''' processor. Concurrent computing can occur on both single and multi-core processors, whereas parallel computing takes advantage of distributing the workload across multiple physical processors. Thus, parallel computing is hardware-dependent. | Parallel computing is the idea that large problems can be split into smaller tasks, and these tasks are independent of each other running '''simultaneously''' on '''more than one''' processor. This concept is different from concurrent programming, which is the composition of multiple processes that may begin and end at different times, but are managed by the host system’s task scheduler which frequently '''switches between them'''. This gives off the illusion of multi-tasking as multiple tasks are '''in progress''' on a '''single''' processor. Concurrent computing can occur on both single and multi-core processors, whereas parallel computing takes advantage of distributing the workload across multiple physical processors. Thus, parallel computing is hardware-dependent. | ||

| − | [[File:P v c1.jpeg|thumb| | + | [[File:P v c1.jpeg|thumb|none|500px|Source: https://miro.medium.com/max/1170/1*cFUbDHxooUtT9KiBy-0SXQ.jpeg]] |

=== Transition from Single to Multi-Core === | === Transition from Single to Multi-Core === | ||

Revision as of 23:41, 27 November 2020


History of Parallel Computing and the Advantage of Multi-core Systems

We will be looking into the history and evolution of parallel computing by focusing on three distinct timelines:

1) The earliest developments of multi-core systems and how they gave rise to the realization of parallel programming

2) How chip makers marketed this new frontier in computing, with enterprise businesses as the primary target audience

3) How quickly it gained traction, and when the two semiconductor giants Intel and AMD decided to introduce multi-core processors to home users, thus making parallel computing more widely available

From each of these timelines, we will inspect certain key events and how they influenced later events. This will help us understand how parallel computing came to fruition, its role and impact in many industries today, and what the future may hold.

Group Members

Omri Golan

Patrick Keating

Yuka Sadaoka

Progress

Update 1 (11/12/2020):

-> Basic definition of parallel computing

-> Research on the limitations of single-core systems and the subsequent advent of multi-core with respect to parallel computing capabilities

-> Earliest development and usage of parallel computing

-> History and development of supercomputers and their parallel computing nature (incl. modern supercomputers -> world's fastest supercomputer)

Demise of Single-Core and Rise of Multi-Core Systems

Parallel Programming vs. Concurrent Programming

Parallel computing is the idea that a large problem can be split into smaller tasks that are independent of each other and run simultaneously on more than one processor. This differs from concurrent programming, which is the composition of multiple processes that may begin and end at different times but are managed by the host system’s task scheduler, which frequently switches between them. This gives the illusion of multitasking: multiple tasks are in progress on a single processor, even though only one is executing at any instant. Concurrent computing can occur on both single and multi-core processors, whereas parallel computing distributes the workload across multiple physical processors or cores. Thus, parallel computing is hardware-dependent.
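The distinction can be made concrete with a minimal sketch, assuming Python and its standard `concurrent.futures` module. The helper names (`partial_sum`, `split`, `chunked_sum`) are hypothetical and chosen only for illustration. The same decomposition into independent chunks is handed either to a thread pool or to a process pool.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk):
    """One independent task: sum a single chunk of the input."""
    return sum(chunk)


def split(data, parts):
    """Split data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, never zero
    return [data[i:i + size] for i in range(0, len(data), size)]


def chunked_sum(data, workers, executor_cls=ThreadPoolExecutor):
    """Sum `data` by handing independent chunks to a pool of workers.

    `executor_cls` is an illustrative parameter: any executor class from
    concurrent.futures that accepts `max_workers` can be passed in.
    """
    chunks = split(data, workers)
    with executor_cls(max_workers=workers) as pool:
        # The chunks do not depend on each other, so they may run in any
        # order; only the final combination step needs all the results.
        return sum(pool.map(partial_sum, chunks))
```

With the default `ThreadPoolExecutor`, a standard CPython build with a global interpreter lock interleaves the CPU-bound tasks rather than running them at the same instant, which corresponds to the concurrent case. Passing `ProcessPoolExecutor` instead, for example `chunked_sum(data, 4, ProcessPoolExecutor)`, runs each chunk in a separate process that the operating system can place on a different core, which corresponds to the parallel case. On platforms that start worker processes by spawning, that call should sit under an `if __name__ == "__main__":` guard.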

Transition from Single to Multi-Core

The transition from single to multi-core systems came from the need to address the limitations of manufacturing technologies for single-core systems. Single-core systems suffered from several limiting factors, such as individual transistor gate size, physical limits in the design of integrated circuits that caused significant heat dissipation, and synchronization issues with coherency of data. Some instruction-level parallelism (ILP) techniques were used to improve single-core performance, such as superscalar execution, which lets the processor issue multiple instructions to parallel pipelines within a single clock cycle, but they were not suited to many applications. The gains from ILP were limited predominantly by the disparity between the speed of the processor and the access latency of system memory, which cost the processor many cycles as it stalled waiting for fetch operations from system memory to complete.
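The cost of memory stalls can be illustrated with the common textbook model in which stall cycles are added to the ideal cycles per instruction (CPI). The sketch below assumes Python, and every number mentioned afterwards is an illustrative assumption, not a measurement of any real processor.

```python
def effective_cpi(base_cpi, accesses_per_instruction, miss_rate, miss_penalty_cycles):
    """Cycles per instruction once memory stalls are included.

    base_cpi: ideal CPI with no stalls (0.5 models a 2-wide superscalar core)
    accesses_per_instruction: average memory accesses per instruction
    miss_rate: fraction of accesses that must go to system memory
    miss_penalty_cycles: cycles the core waits for each such access
    """
    stall_cycles = accesses_per_instruction * miss_rate * miss_penalty_cycles
    return base_cpi + stall_cycles


def slowdown(base_cpi, accesses_per_instruction, miss_rate, miss_penalty_cycles):
    """How many times slower the core runs compared with its ideal CPI."""
    actual = effective_cpi(
        base_cpi, accesses_per_instruction, miss_rate, miss_penalty_cycles
    )
    return actual / base_cpi
```

Under the assumed values of an ideal CPI of 0.5, 0.3 memory accesses per instruction, a 2% miss rate, and a 200-cycle penalty, the stall term is 1.2 cycles per instruction. That is more than twice the ideal CPI, which is why a wider pipeline alone gives diminishing returns in this model.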

As manufacturing processes evolved in accordance with Moore’s Law, transistors shrank, and the number of transistors packed onto a single processor die (the physical silicon chip itself) doubled roughly every two years. This freed up space on the die, allowing more cores to fit on it than before. This led to increased demand for thread-level parallelism, which many applications benefitted from and were better suited to. The addition of multiple cores on a processor also increased the system's overall parallel computing capabilities.
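The doubling described by Moore’s Law can be written as N(t) = N0 × 2^(t / T), where T is the doubling period. A minimal Python sketch follows. The function name and the starting count used in the usage note are hypothetical, and the two-year period is the rough figure quoted above, not an exact constant.

```python
def projected_transistors(initial_count, years_elapsed, doubling_period_years=2.0):
    """Project a transistor count forward, assuming steady doubling.

    initial_count: transistor count at the start (illustrative input)
    years_elapsed: time since the starting point, in years
    doubling_period_years: assumed doubling period (roughly two years)
    """
    doublings = years_elapsed / doubling_period_years
    return initial_count * 2 ** doublings
```

For example, `projected_transistors(1_000_000, 10)` models five doublings, so a hypothetical one-million-transistor die would grow to about 32 million transistors in ten years under this assumption.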

Developments in the First Multi-Core Processors

The death of single-core processors came at the time of the Pentium 4, when, as mentioned above, excessive heat and power consumption became an issue. At this point, multi-core processors such as the Pentium D were introduced. However, the Pentium D is not considered a “true” multi-core processor by today's definition, because its design placed two separate single-core dies beside each other in the same processor package.

The world's first true multi-core processor was the POWER4, released by IBM in 2001. It incorporated two physical cores on a single CPU die and implemented IBM's 64-bit PowerPC instruction set architecture (ISA). It was used in IBM's line of workstations, servers, and supercomputers at the time, namely the RS/6000 and AS/400 systems.