GPU621/To Be Announced
Revision as of 15:00, 23 November 2020


Contents

- 1 OpenMP Device Offloading

- 1.1 Group Members

- 1.2 Progress

- 1.3 Difference of CPU and GPU for parallel applications (Yunseon)

- 1.4 Latest GPU specs (Yunseon)

- 1.5 Means of parallelisation on GPUs

- 1.6 Instructions

- 1.7 Programming GPUs with OpenMP

- 1.8 Code for tests (Nathan)

- 1.9 Results and Graphs (Nathan/Elena)

- 1.10 Conclusions (Nathan/Elena/Yunseon)

- 1.11 Sources

OpenMP Device Offloading

OpenMP 4.0/4.5 introduced support for heterogeneous systems such as accelerators and GPUs. The purpose of this overview is to demonstrate OpenMP's device constructs, which offload data and code from a host device (a multicore CPU) to a target device environment (a GPU or accelerator). We will demonstrate how to manage the device's data environment, parallelism, and work-sharing, review how data is mapped from the host data environment to the device data environment, and try different compilers that support OpenMP offloading, such as LLVM/Clang and GCC.
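Before offloading anything, it can help to check what the OpenMP runtime actually sees. The sketch below is a minimal example, assuming an OpenMP 4.0 or later compiler; `available_devices`, `target_ran_on_host` and `report_offload_support` are hypothetical helper names, while `omp_get_num_devices` and `omp_is_initial_device` are standard OpenMP runtime routines. The `#ifdef _OPENMP` guards let the same file build without OpenMP.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: number of non-host devices the runtime can offload to.
   Returns 0 when the program is built without OpenMP support. */
int available_devices(void) {
#ifdef _OPENMP
    return omp_get_num_devices();
#else
    return 0;
#endif
}

/* Hypothetical helper: runs a tiny target region and reports whether it
   executed on the host (1, e.g. fallback when no device exists) or on an
   accelerator (0). */
int target_ran_on_host(void) {
    int on_host = 1;
#ifdef _OPENMP
    #pragma omp target map(from: on_host)
    {
        on_host = omp_is_initial_device();
    }
#endif
    return on_host;
}

/* Prints a short summary of offloading support. */
void report_offload_support(void) {
    printf("devices available: %d\n", available_devices());
    printf("target region ran on %s\n",
           target_ran_on_host() ? "the host (fallback)" : "an accelerator");
}
```

If no device is available, OpenMP executes the target region on the host, so `target_ran_on_host()` returns 1. Built without `-fopenmp`, the guards make both helpers report host execution as well.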

Group Members

1. Elena Sakhnovitch

2. Nathan Olah

3. Yunseon Lee

Progress

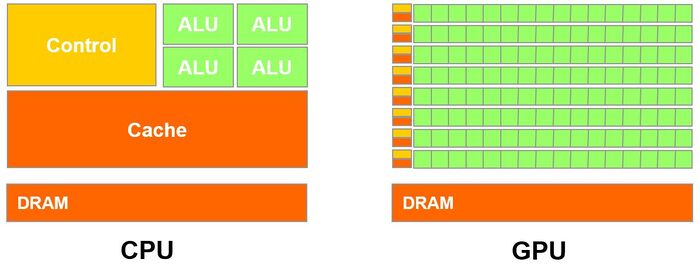

Difference of CPU and GPU for parallel applications (Yunseon)

GPU (Graphics Processing Unit)

A GPU is a specialized type of microprocessor originally designed for fast image rendering. It has thousands of processor cores running simultaneously, which enables massive parallelism: each core focuses on efficient, repetitive calculations, and together they handle highly parallel computing tasks. Modern graphics processors are powerful enough to accelerate many workloads beyond image rendering that involve large amounts of data. Because a GPU can perform the same operation on many sets of data at once, it can complete more work in the same time than a CPU on such tasks. Even so, a GPU cannot fully replace the CPU, because each of its cores is comparatively simple, with limited processing power and a limited instruction set.

CPU (Central Processing Unit)

A CPU can handle a wide variety of calculations. It usually has far fewer cores than a GPU (fewer than 100, typically 8 to 24), and it achieves parallelism through both its multiple cores and its instruction pipelines. Each core is strong, with significant processing power, so a CPU core can execute a large instruction set, but only on a few tasks at a time. Compared with GPU cores, CPU cores have more sophisticated control logic and a richer instruction set, and the CPU manages all of the computer's input and output.

What is the difference?

A CPU can work on a wide variety of calculations, while a GPU is best at focusing all of its computing power on one specific task. The CPU consists of a few cores (up to 24) optimized for sequential, serial processing. It is designed to maximize the performance of a single task within a job, yet it can handle many different kinds of tasks. A GPU, on the other hand, uses thousands of processor cores running simultaneously, which enables massive parallelism: each core performs efficient, repetitive calculations on a highly parallel task.
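The difference shows up in the shape of a loop. In the sketch below (plain C, with hypothetical function names), every iteration of `saxpy` is independent, so the iterations could be spread across thousands of GPU cores. Each iteration of `running_total` depends on the previous one (a loop-carried dependency), so this naive version must run serially, which suits a single fast CPU core. Parallel prefix-sum algorithms exist, but they restructure the loop rather than running it as written.

```c
#include <stddef.h>

/* Independent iterations: y[i] depends only on x[i] and y[i],
   so all n updates could run at the same time. */
void saxpy(size_t n, float a, const float *x, float *y) {
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}

/* Loop-carried dependency: out[i] needs the accumulated value from
   iteration i - 1, so this loop runs one step after another. */
void running_total(size_t n, const float *x, float *out) {
    float acc = 0.0f;
    for (size_t i = 0; i < n; i++) {
        acc += x[i];
        out[i] = acc;
    }
}
```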

Latest GPU specs (Yunseon)

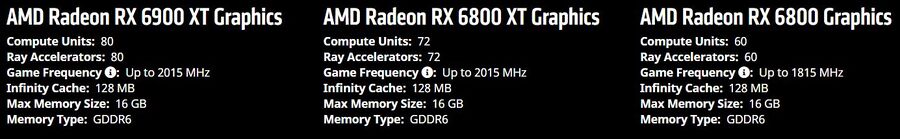

Latest AMD GPU Spec:

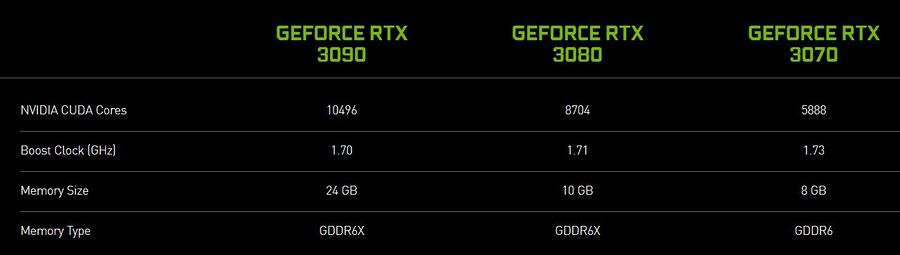

Latest NVIDIA GPU Spec:

Means of parallelisation on GPUs

A short introduction to each of the following, with its advantages and disadvantages:

CUDA (Yunseon)

OpenMP (Elena)

HIP (Elena)

OpenCL (Nathan) https://stackoverflow.com/questions/7263193/opencl-vs-openmp-performance#7263823

Instructions

How to set up the compiler and target offloading on Windows with an NVIDIA GPU (Nathan):

How to set up the compiler and target offloading on Linux with an AMD GPU (Elena):

Programming GPUs with OpenMP

Target Region

- The target region is the offloading construct in OpenMP.

    int main() {
        // This code executes on the host (CPU)

        #pragma omp target
        {
            // This code executes on the device
        }
    }

- An OpenMP program begins executing on the host (CPU).

- When a target region is encountered, the code within the target region begins executing on a device (GPU).

Unless another construct is specified, such as one that creates a parallel region (#pragma omp parallel), the code within the target region executes sequentially. The target region does not express parallelism; it only expresses where the contained code is executed.
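To make the distinction concrete, here is a minimal sketch, assuming an OpenMP 4.0 or later compiler; `scale_sequential` and `scale_parallel` are hypothetical names. The first function offloads the loop but runs it on a single device thread; the second adds the combined `teams distribute parallel for` construct so the iterations are shared among device threads.

```c
#include <stddef.h>

/* Runs on the device, but sequentially: target alone only says WHERE
   the code executes, not that it executes in parallel. */
void scale_sequential(float *v, size_t n, float k) {
    #pragma omp target map(tofrom: v[0:n])
    {
        for (size_t i = 0; i < n; i++)
            v[i] *= k;
    }
}

/* Adds work-sharing: teams of device threads split the iterations. */
void scale_parallel(float *v, size_t n, float k) {
    #pragma omp target teams distribute parallel for map(tofrom: v[0:n])
    for (size_t i = 0; i < n; i++)
        v[i] *= k;
}
```

Both functions produce the same result; only the second spreads the loop across the device. The array section `v[0:n]` is needed because `v` is a pointer, so the compiler cannot infer how many elements to map.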

There is an implied synchronization between the host and the device at the end of a target region: the host thread waits for the target region to finish executing and then continues with the next statement.
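A small sketch of what this synchronization buys, assuming an OpenMP 4.0 or later compiler; `sum_of_squares` is a hypothetical example function. Because the host waits at the end of the target region, the value copied back through `map(tofrom: total)` can be read immediately, without any explicit wait.

```c
/* Sums 0*0 + 1*1 + ... + (n-1)*(n-1) on the device. */
long sum_of_squares(long n) {
    long total = 0;
    #pragma omp target map(tofrom: total)
    {
        for (long i = 0; i < n; i++)
            total += i * i;
    }
    /* The target region has finished here, so total holds the device result. */
    return total;
}
```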

Mapping host and device data

- In order to access data inside the target region it must be mapped to the device.

- The host environment and device environment have separate memory.

- The host cannot access data that has been mapped to the device until the target region (on the device) has completed its execution.

The map clause controls how a variable is transferred between the host and device data environments for a target region.

#pragma omp target map(map-type : list)

- list specifies the variables to be mapped from the host data environment to the target device's data environment.

- map-type is one of to, from, tofrom, or alloc:

  - to: copies the data to the device on entry to the target region.

  - from: copies the data back to the host on exit from the target region.

  - tofrom: copies the data to the device on entry and back to the host on exit.

  - alloc: allocates an uninitialized copy on the device, without copying any data from the host environment.
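The sketch below uses all of the directional map types in one target region, assuming an OpenMP 4.0 or later compiler; `diff_of_squares` and its parameters are hypothetical. The inputs `a` and `b` only need to travel to the device, the output `c` only needs to come back, and `tmp` is device scratch space that is never copied in either direction.

```c
#include <stddef.h>

/* c[i] = (a[i] + b[i]) * (a[i] - b[i]), computed on the device.
   to:    a and b are copied in and not copied back.
   from:  c is not copied in, only copied back on exit.
   alloc: tmp gets uninitialized device storage and no copies. */
void diff_of_squares(const int *a, const int *b, int *c, int *tmp, size_t n) {
    #pragma omp target map(to: a[0:n], b[0:n]) map(from: c[0:n]) map(alloc: tmp[0:n])
    {
        for (size_t i = 0; i < n; i++)
            tmp[i] = a[i] - b[i];          /* intermediate values stay on the device */
        for (size_t i = 0; i < n; i++)
            c[i] = (a[i] + b[i]) * tmp[i];
    }
}
```

Choosing `to`/`from` instead of `tofrom` everywhere avoids copying data that the other side never reads, which matters when host-device transfers are slow relative to the computation.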

    #pragma omp target map(to:A,B), map(tofrom:sum)
    {
        for (int i = 0; i < N; i++)
            sum += A[i] + B[i];
    }
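The loop in the example above runs sequentially on the device, since the target region alone does not create parallelism. One way to parallelize it is sketched below, assuming an OpenMP 4.5-style compiler; `sum_pairs` is a hypothetical name, and array sections are used because `A` and `B` are passed as pointers. The `reduction(+: sum)` clause gives each device thread a private partial sum and combines them at the end, avoiding a data race on `sum`; the explicit `map(tofrom: sum)` makes sure the combined value is copied back to the host.

```c
#include <stddef.h>

/* Parallel version of the sum over A[i] + B[i]. */
long sum_pairs(const int *A, const int *B, size_t n) {
    long sum = 0;
    #pragma omp target teams distribute parallel for \
            map(to: A[0:n], B[0:n]) map(tofrom: sum) reduction(+: sum)
    for (size_t i = 0; i < n; i++)
        sum += A[i] + B[i];
    return sum;
}
```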

Declare Target

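To call a function from within a target region, the function must also be compiled for the device. Place its declaration between declare target and end declare target: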

    #pragma omp declare target
    int combine(int a, int b);
    #pragma omp end declare target
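A complete sketch of this pattern, assuming an OpenMP 4.0 or later compiler. The original only declares `combine`, so the body below is hypothetical, as is the caller `combine_all`. Because the definition sits inside the declare target block, a device version of `combine` is generated and can be called from the offloaded loop.

```c
#include <stddef.h>

#pragma omp declare target
/* Hypothetical body: the original shows only the declaration of combine. */
int combine(int a, int b) {
    return a * 10 + b;
}
#pragma omp end declare target

/* Hypothetical caller: applies combine() element-wise on the device. */
void combine_all(const int *a, const int *b, int *out, size_t n) {
    #pragma omp target teams distribute parallel for \
            map(to: a[0:n], b[0:n]) map(from: out[0:n])
    for (size_t i = 0; i < n; i++)
        out[i] = combine(a[i], b[i]);
}
```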

Code for tests (Nathan)

Results and Graphs (Nathan/Elena)

Conclusions (Nathan/Elena/Yunseon)

Sources

- https://www.ibm.com/support/knowledgecenter/en/SSXVZZ_16.1.0/com.ibm.xlcpp161.lelinux.doc/compiler_ref/prag_omp_target.html

- https://www.ibm.com/support/knowledgecenter/en/SSXVZZ_16.1.0/com.ibm.xlcpp161.lelinux.doc/compiler_ref/prag_omp_declare_target.html