Difference between revisions of "DPS921/ND-R&D"


Revision as of 10:09, 28 November 2018


C++11 Threads Library Comparison to OpenMP

Group Members

Daniel Bogomazov

Nick Krilis

Threading in OpenMP

OpenMP (Open Multi-Processing) is an API specification, implemented by compilers, that provides an explicit SPMD programming model on shared-memory architectures. OpenMP implements threading by having the main thread fork a specific number of child threads and divide the task among them. The runtime environment then allocates the threads onto multiple processors.

The OpenMP standard consists of three main components.

- Compiler directives

  - Compiler directives control the parallelism of code regions.

  - The directive keyword placed after #pragma omp tells the compiler what action to take on that region of code. OpenMP also allows clauses after the directive to request additional behaviour on that parallel region.

  - Examples of directives and constructs include:

    - Parallel (#pragma omp parallel)

      - Defines a parallel region, which a team of threads executes in parallel.

    - Task (#pragma omp task)

      - Defines an explicit task. The data environment of the task is created according to the data-sharing attribute clauses on the task construct and any defaults that apply.

    - Simd (#pragma omp simd)

      - Applied to a loop to indicate that the loop can be transformed into a SIMD loop.

    - Atomic (#pragma omp atomic)

      - Ensures that a specific memory location is accessed atomically, which avoids race conditions when several threads update it concurrently. It is used to write more efficient algorithms, since it typically costs less than protecting the update with a critical section.

- Runtime library routines

  - These include routines that set and get the total number of threads, the current thread, and so on. For example:

    - omp_set_num_threads(int) sets the number of threads for subsequent parallel regions, while omp_get_num_threads() returns the number of threads in the current team.

- Environment variables

  - These are used to guide OpenMP. A widely used example is OMP_NUM_THREADS, which sets the number of threads that OpenMP uses for parallel regions by default.
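
As a minimal sketch of how a directive, a clause, and the atomic construct fit together, the function below counts even values in parallel. The name count_even is hypothetical, and the sketch assumes a compiler invoked with OpenMP enabled (for example -fopenmp on GCC or Clang). Without that flag the pragmas are ignored and the loop runs serially with the same result.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: counts the even values in `data`.
int count_even(const std::vector<int>& data) {
    int count = 0;
    const int n = static_cast<int>(data.size());

    // Directive (parallel for) followed by a clause (shared).
    #pragma omp parallel for shared(count)
    for (int i = 0; i < n; ++i) {
        if (data[i] % 2 == 0) {
            // Only this single update is performed atomically.
            #pragma omp atomic
            count += 1;
        }
    }
    assert(count <= n);  // sanity check: cannot count more values than exist
    return count;
}
```

Calling count_even on the values 1, 2, 3, 4, 6 returns 3.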

Creating a Thread

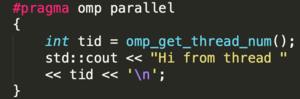

Using the components above, the programmer can set up a parallel region to run tasks in parallel. The following is an example of thread creation controlled by OpenMP.
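
A rough sketch of a parallel region is shown here, since the region itself is what creates the threads. The helper run_parallel_region is a hypothetical name. The #ifdef _OPENMP guards are an assumption that lets the code also build without OpenMP, in which case a single thread runs the block.

```cpp
#include <cassert>
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

// Hypothetical helper: each thread of the team records that it ran.
// Returns the number of threads that took part in the parallel region.
int run_parallel_region(int requested_threads) {
    assert(requested_threads > 0);
    std::vector<int> ran(requested_threads, 0);
#ifdef _OPENMP
    omp_set_num_threads(requested_threads);  // request a team size
#endif
    #pragma omp parallel
    {
        int id = 0;  // thread number within the team
#ifdef _OPENMP
        id = omp_get_thread_num();
#endif
        ran[id] = 1;  // each thread writes only its own slot
    }  // the threads join back into the main thread here

    int total = 0;
    for (int flag : ran) total += flag;
    return total;
}
```

The runtime may provide fewer threads than requested, so the return value lies between 1 and requested_threads.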

Multithreading

Control Structures

- OpenMP is designed to have a very simple set of control structures. Most parallel applications require only a few of them.

- These control structures execute through the fork-join method, in which the control structure defines where each new thread starts.

- OpenMP includes control structures only in instances where a compiler can provide both functionality and performance over what a user could reasonably program.

Data Environment

- Each process in OpenMP has associated clauses that define the data environment.

- Each new data environment is constructed only for new processes at the time of execution.

- The following clauses change the storage attributes of variables. They apply to the construct on which they appear, not to the entire parallel region:

- SHARED

- PRIVATE

- FIRSTPRIVATE

- LASTPRIVATE

- DEFAULT

- By default, almost all variables are shared, including global variables. However, not everything is shared: stack variables that are part of subprograms or functions called in parallel regions are PRIVATE.
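
The clauses listed above can be sketched on a single loop. The function and variable names are hypothetical, and default(none) is used here only as an assumption to force every variable's sharing to be spelled out.

```cpp
#include <cassert>

// Hypothetical helper showing the data-sharing clauses on one loop.
// Returns offset + (n - 1), the value written by the last iteration.
int last_shifted_index(int n, int offset) {
    assert(n > 0);
    int scratch = -1;  // PRIVATE: each thread gets its own uninitialized copy
    int last = -1;     // LASTPRIVATE: receives the value from the final iteration
    int visited = 0;   // SHARED: one copy, so updates must be synchronized

    #pragma omp parallel for default(none) shared(visited, n) \
        firstprivate(offset) private(scratch) lastprivate(last)
    for (int i = 0; i < n; ++i) {
        scratch = i;              // safe: private to each thread
        last = scratch + offset;  // each thread's offset starts as a copy of the original
        #pragma omp atomic
        visited += 1;
    }
    assert(visited == n);  // every iteration ran exactly once
    return last;
}
```

With n = 5 and offset = 10, the final iteration is i = 4, so the function returns 14.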

Synchronization

- Synchronization is a way of telling the threads of a parallel region to complete their work in a specific order.

- The most common form of synchronization is the use of barriers. Essentially the threads will wait at a barrier until every thread in the scope of the parallel region has reached the same point.

- Some constructs help implement synchronization, such as master. The master construct defines a block that is executed only by the master thread, so the other threads skip it. Another example is the ordered construct, which makes a block inside a parallel loop execute in sequential iteration order.

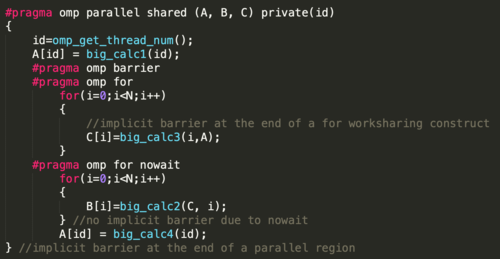

Implicit Barrier

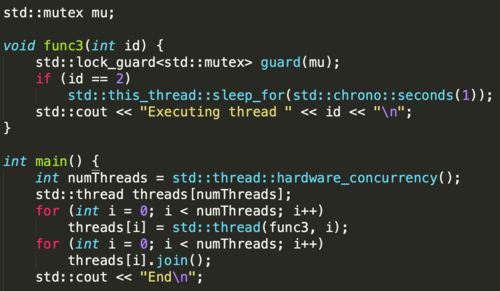
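
As a sketch of an explicit barrier, the hypothetical helper below runs two phases. Every thread writes its own slot, waits at the barrier, and only then reads a neighbour's slot. An implicit barrier also exists at the end of the parallel region itself. The #ifdef _OPENMP guards are an assumption that keeps the code buildable without OpenMP.

```cpp
#include <cassert>
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

// Hypothetical helper: phase 1 fills one slot per thread, phase 2 reads a
// neighbouring slot. The barrier guarantees phase 1 has finished everywhere.
bool neighbours_ready(int max_threads) {
    assert(max_threads > 0);
    std::vector<int> slot(max_threads, 0);
    std::vector<int> seen(max_threads, 1);  // unused slots count as ready

    #pragma omp parallel num_threads(max_threads)
    {
        int id = 0;
        int team = 1;
#ifdef _OPENMP
        id = omp_get_thread_num();
        team = omp_get_num_threads();
#endif
        slot[id] = 1;                      // phase 1: write own slot
        #pragma omp barrier                // wait for every thread in the team
        seen[id] = slot[(id + 1) % team];  // phase 2: read the neighbour's slot
    }  // implicit barrier at the end of the parallel region

    for (int value : seen) {
        if (value != 1) return false;
    }
    return true;
}
```

Without the barrier, a fast thread could read its neighbour's slot before the neighbour has written it.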

Ordered Example
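
A minimal sketch of the ordered construct, under the assumption that the loop body has a part that may run concurrently and a part that must run in iteration order. The name ordered_squares is hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: squares may be computed concurrently, but they are
// appended in iteration order because push_back sits inside an ordered block.
std::vector<int> ordered_squares(int n) {
    assert(n >= 0);
    std::vector<int> result;

    #pragma omp parallel for ordered
    for (int i = 0; i < n; ++i) {
        int square = i * i;  // may run concurrently on different threads
        #pragma omp ordered
        result.push_back(square);  // one iteration at a time, in loop order
    }
    return result;
}
```

For n = 5 the result is 0, 1, 4, 9, 16 regardless of how the iterations are distributed among threads.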

Threading in C++11

Threading in C++11 is available through the <thread> library. C++11 relies mostly on joining or detaching forked subthreads.

Join vs Detach

A thread will begin running on initialization. While running in parallel, the scope that owns the child thread could exit before the child thread is finished. This will result in an error: destroying a std::thread object that is still joinable calls std::terminate. The two main ways of dealing with this problem are joining the child thread to, or detaching it from, the parent thread.

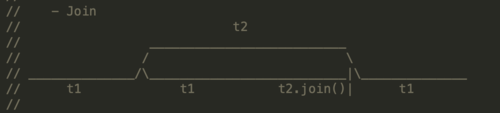

The following example shows how join works with the C++11 thread library. The thread (t1) forks off when creating a new child thread (t2). Both of these threads run in parallel. To prevent t2 from going out of scope in case t1 finishes first, t1 will call t2.join(). This will block t1 from executing code until t2 returns. Once t2 joins back, t1 can continue to execute.

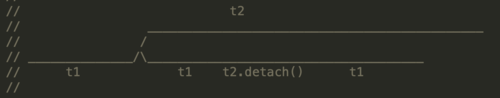

Detach, on the other hand, separates the two threads entirely. When t1 creates the new t2 thread, they both run in parallel. This time, t1 will call the detach function on t2. This will cause the two threads to continue running in parallel without t1’s scope affecting t2. Therefore, if t1 exits before t2 finishes, t2 can continue to run without any errors occurring, and it deallocates its own memory after it finishes.
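
The difference between the two calls can be sketched as follows. Both helper names are hypothetical. The detached example polls a flag only so that the sketch can observe the child finishing; a real detached thread would usually not be waited on.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical helper: the parent blocks in join() until the child returns,
// so the child's result is ready afterwards.
int run_joined() {
    int result = 0;
    std::thread child([&result] { result = 42; });
    assert(child.joinable());
    child.join();  // the parent waits here
    return result;
}

// Hypothetical helper: the child is detached and keeps running on its own.
// The flag has static storage, so the child never touches a dead local.
bool run_detached() {
    static std::atomic<bool> done{false};
    std::thread child([] { done = true; });
    child.detach();  // child.joinable() is now false
    while (!done) {
        std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
    return done;
}
```

Note that a detached thread must not use local variables of a scope that may already have exited, which is why the flag above is static.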

Creating a Thread

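The basic constructor for a thread is a template; in the standard library it is declared as template<class Function, class... Args> explicit thread(Function&& f, Args&&... args). The thread can take in a function, functor, or lambda expression as its first argument, followed by 0 or more arguments to be passed into the function.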
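
As a sketch of the three kinds of callable, the hypothetical helper below starts one thread for each and collects the results through pointers and a captured reference. All names are illustrative.

```cpp
#include <cassert>
#include <thread>

// Plain function: stores a + b through a pointer.
void add_into(int a, int b, int* out) { *out = a + b; }

// Functor: an object with operator().
struct Doubler {
    void operator()(int value, int* out) const { *out = 2 * value; }
};

// Hypothetical helper: starts one thread per callable kind and sums results.
int run_three_callables() {
    int from_function = 0;
    int from_functor = 0;
    int from_lambda = 0;

    std::thread t1(add_into, 1, 2, &from_function);       // function + 3 arguments
    std::thread t2(Doubler{}, 5, &from_functor);          // functor + 2 arguments
    std::thread t3([&from_lambda] { from_lambda = 7; });  // lambda, 0 arguments

    t1.join();
    t2.join();
    t3.join();
    assert(from_function == 3);
    return from_function + from_functor + from_lambda;
}
```

The three threads produce 3, 10, and 7, so the helper returns 20.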

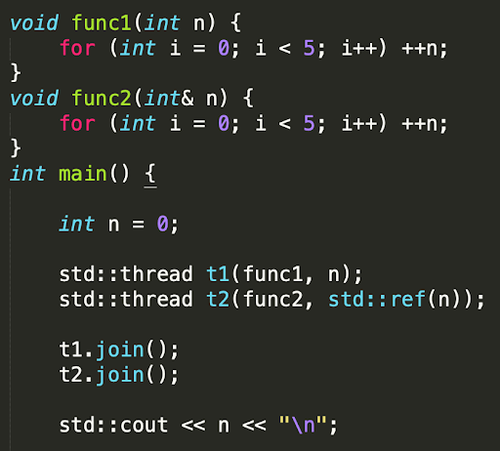

The thread constructor, by default, copies (or moves) all of its arguments, as if you are passing them in by value, even if the function takes a parameter by reference. To pass a reference, the programmer needs to wrap the argument in std::ref().
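
A minimal sketch of the difference, using hypothetical names: one argument is passed as a plain value and the other is wrapped in std::ref().

```cpp
#include <cassert>
#include <functional>  // std::ref
#include <thread>

// Increments both parameters; only `by_ref` refers to the caller's variable.
void increment(int by_value, int& by_ref) {
    ++by_value;  // changes the thread's private copy only
    ++by_ref;    // changes the caller's variable
}

// Hypothetical helper: returns true when only the std::ref argument changed.
bool only_reference_changes() {
    int copied = 0;
    int referenced = 0;
    // Without std::ref, the copied argument could not bind to int& and the
    // constructor call would fail to compile.
    std::thread worker(increment, copied, std::ref(referenced));
    assert(worker.joinable());
    worker.join();
    return copied == 0 && referenced == 1;
}
```

After the thread joins, copied is still 0 while referenced is 1.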

The following is an example of a thread passing in variables by value and by reference:

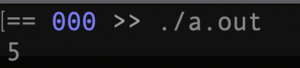

Output:

Multithreading

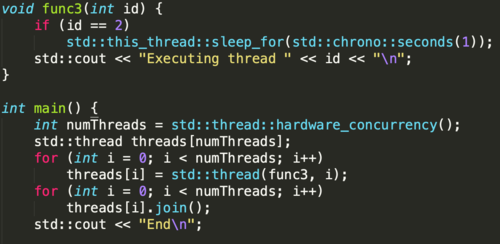

Multithreading with the C++11 thread library requires manual creation of every new thread. To define the number of threads to be created, the programmer has the option of manually setting the number of threads or using the std::thread::hardware_concurrency function, which returns a hint for the number of threads the hardware can run concurrently (it may return 0 if the value cannot be determined). This works in a similar way to OpenMP’s omp_get_max_threads().
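
The manual creation of a team of threads can be sketched as below. The helper name and the fallback of 2 threads are assumptions made for illustration.

```cpp
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical helper: starts one thread per hardware thread and returns how
// many workers ran.
unsigned run_on_all_cores() {
    unsigned count = std::thread::hardware_concurrency();
    if (count == 0) count = 2;  // assumed fallback when no hint is available

    std::vector<int> ran(count, 0);
    std::vector<std::thread> workers;
    workers.reserve(count);
    for (unsigned id = 0; id < count; ++id) {
        // id is copied into the lambda; each worker writes only its own slot.
        workers.emplace_back([&ran, id] { ran[id] = 1; });
    }
    for (std::thread& worker : workers) worker.join();  // wait for all of them

    unsigned total = 0;
    for (int flag : ran) total += flag;
    assert(total == count);
    return total;
}
```

Each worker writes to a separate element, so no synchronization is needed beyond the final joins.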

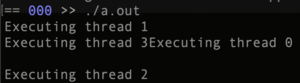

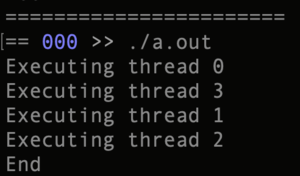

Output:

As the threads execute, they create a race condition. Because they all share the std::cout stream object, multithreading like this can result in unwanted behaviour, such as interleaved text, as seen in the above output.

Note how you can delay a thread by calling the std::this_thread::sleep_for() function.

Synchronization

Using Mutex

To prevent unwanted race conditions, we can use the mutex functionality available through the <mutex> library.

A mutex creates a region of mutual exclusion through a lock system. Once locked, it protects shared data from being accessed by multiple threads at the same time. To prevent a mutex from never unlocking (if, for example, an exception is thrown before the unlock function runs), it is advised to use std::lock_guard<std::mutex> instead, which manages locking in a more exception-safe manner.
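
A minimal sketch of std::lock_guard protecting a shared counter follows. The helper name and its parameters are hypothetical.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical helper: several threads add to one plain int under a mutex.
int locked_total(int thread_count, int increments_per_thread) {
    assert(thread_count > 0 && increments_per_thread >= 0);
    int total = 0;
    std::mutex total_mutex;

    auto work = [&] {
        for (int i = 0; i < increments_per_thread; ++i) {
            // Locks here; unlocks when `lock` goes out of scope, even if an
            // exception is thrown.
            std::lock_guard<std::mutex> lock(total_mutex);
            ++total;
        }
    };

    std::vector<std::thread> workers;
    for (int t = 0; t < thread_count; ++t) workers.emplace_back(work);
    for (std::thread& worker : workers) worker.join();
    return total;
}
```

With 4 threads and 1000 increments each, the helper returns 4000. Without the lock, some increments could be lost.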

Output:

Using Atomic

Another way to manage shared data access between multiple threads is through the use of the atomic structure defined in the <atomic> library.

Atomic in the C++11 library works very similarly to atomic in OpenMP. In C++, std::atomic is used as a wrapper for a variable type in order to give the variable atomic properties: that is, it can only be written by one thread at a time.
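
The same kind of shared counter can be sketched with std::atomic instead of a mutex. The helper name and its parameters are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical helper: several threads increment one atomic counter.
int atomic_total(int thread_count, int increments_per_thread) {
    assert(thread_count > 0 && increments_per_thread >= 0);
    std::atomic<int> total{0};

    auto work = [&] {
        for (int i = 0; i < increments_per_thread; ++i) {
            ++total;  // atomic read-modify-write; no lock needed
        }
    };

    std::vector<std::thread> workers;
    for (int t = 0; t < thread_count; ++t) workers.emplace_back(work);
    for (std::thread& worker : workers) worker.join();
    return total.load();
}
```

With 4 threads and 1000 increments each, the helper returns 4000, because no increment can be lost.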