Difference between revisions of "Three-Star"

Revision as of 22:09, 7 April 2018



Three-Star

Team Members

Progress

Assignment 1

Image Profiling

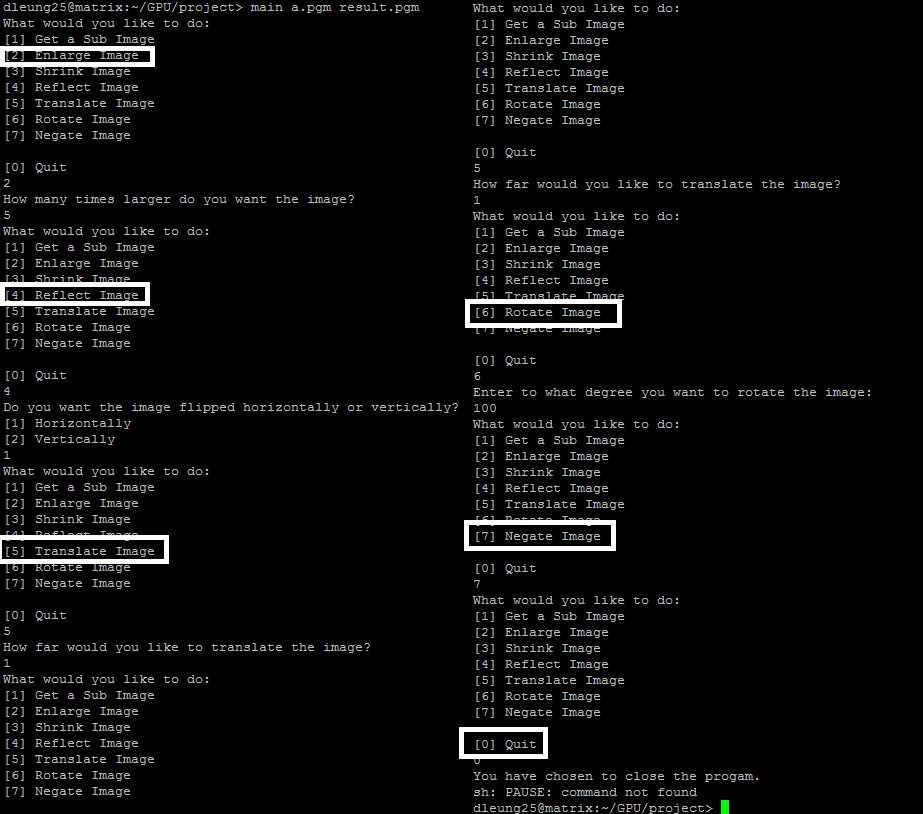

We chose to profile the image processing program from this tutorial: http://www.dreamincode.net/forums/topic/76816-image-processing-tutorial/ , using its sample program files (main/image.h/image.cpp).

The PGM sample files were pulled from here: https://userpages.umbc.edu/~rostamia/2003-09-math625/images.html

Each image is 512x512 pixels, about 262 KB per file.
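The quoted file size follows from the image dimensions. The sketch below assumes the samples are binary (P5) PGM files with a maximum gray value below 256, so that each pixel takes one byte; the helper name `pgmPixelBytes` is hypothetical and not part of the tutorial's code.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: size in bytes of the pixel data of a binary (P5) PGM.
// Assumption: a max gray value below 256 means 1 byte per pixel, otherwise 2.
// The short text header of the file is not counted.
std::size_t pgmPixelBytes(std::size_t width, std::size_t height, int maxGray) {
    assert(maxGray > 0 && maxGray < 65536);
    const std::size_t bytesPerPixel = (maxGray < 256) ? 1 : 2;
    return width * height * bytesPerPixel;
}
```

Under that assumption, `pgmPixelBytes(512, 512, 255)` gives 262144 bytes, which matches the roughly 262 KB per file noted above.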

>g++ -g -O2 -pg -o main main.cpp

>main a.pgm result.pgm

[Enlarged the image by the maximum factor the program permits (5) to get more viewable results]

>gprof -p -b main>main.flt

The results of the flat profile:

Flat profile:

Each sample counts as 0.01 seconds.

| % time | cumulative seconds | self seconds | calls | self ms/call | total ms/call | name |
|---|---|---|---|---|---|---|
| 30.00 | 0.03 | 0.03 | | | | writeImage(char*, Image&) |
| 30.00 | 0.06 | 0.03 | | | | Image::rotateImage(int, Image&) |
| 10.00 | 0.07 | 0.01 | 2 | 5.00 | 5.00 | Image::Image(int, int, int) |
| 10.00 | 0.08 | 0.01 | 2 | 5.00 | 5.00 | Image::operator=(Image const&) |
| 10.00 | 0.09 | 0.01 | | | | Image::negateImage(Image&) |
| 10.00 | 0.10 | 0.01 | | | | Image::Image(Image const&) |
| 0.00 | 0.10 | 0.00 | 2 | 0.00 | 0.00 | Image::~Image() |
| 0.00 | 0.10 | 0.00 | 1 | 0.00 | 0.00 | _GLOBAL__sub_I__ZN5ImageC2Ev |

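In the flat profile, writeImage and rotateImage each take 30% of the run time, the largest shares. Since writeImage handles file output, rotateImage is the image operation that takes the most time. Below is the code for rotateImage: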

    void Image::rotateImage(int theta, Image& oldImage) {
        int r0, c0;
        int r1, c1;
        int rows, cols;
        rows = oldImage.N;
        cols = oldImage.M;
        Image tempImage(rows, cols, oldImage.Q);

        float rads = (theta * 3.14159265)/180.0;

        r0 = rows / 2;
        c0 = cols / 2;

        for(int r = 0; r < rows; r++)
        {
            for(int c = 0; c < cols; c++)
            {
                r1 = (int) (r0 + ((r - r0) * cos(rads)) - ((c - c0) * sin(rads)));
                c1 = (int) (c0 + ((r - r0) * sin(rads)) + ((c - c0) * cos(rads)));

                if(inBounds(r1,c1))
                {
                    tempImage.pixelVal[r1][c1] = oldImage.pixelVal[r][c];
                }
            }
        }

        for(int i = 0; i < rows; i++)
        {
            for(int j = 0; j < cols; j++)
            {
                if(tempImage.pixelVal[i][j] == 0)
                    tempImage.pixelVal[i][j] = tempImage.pixelVal[i][j+1];
            }
        }

        oldImage = tempImage;
    }

This function is the main section to be parallelized.
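To make the per-pixel structure of the rotation easier to see, here is a minimal host-side sketch of the first nested loop only, written against a flat row-major buffer instead of the tutorial's Image class. The name `rotateFlat` and the flat layout are assumptions for illustration, and the hole-filling second pass is left out. The sine and cosine are computed once outside the loops, since they do not change per pixel.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-alone version of the rotation loop on a flat row-major buffer.
// Each (r, c) iteration reads one source pixel and writes one destination pixel,
// which is the unit of work a single GPU thread could take over.
std::vector<int> rotateFlat(const std::vector<int>& src, int rows, int cols, int theta) {
    assert(rows > 0 && cols > 0);
    assert(src.size() == static_cast<std::size_t>(rows) * cols);

    const double rads = theta * 3.14159265 / 180.0;
    const double cosT = std::cos(rads);  // hoisted: constant for the whole image
    const double sinT = std::sin(rads);
    const int r0 = rows / 2;
    const int c0 = cols / 2;

    std::vector<int> dst(src.size(), 0);  // unmapped destination pixels stay 0
    for (int r = 0; r < rows; r++) {
        for (int c = 0; c < cols; c++) {
            const int r1 = static_cast<int>(r0 + (r - r0) * cosT - (c - c0) * sinT);
            const int c1 = static_cast<int>(c0 + (r - r0) * sinT + (c - c0) * cosT);
            // Same bounds test the original delegates to inBounds().
            if (r1 >= 0 && r1 < rows && c1 >= 0 && c1 < cols) {
                dst[r1 * cols + c1] = src[r * cols + c];
            }
        }
    }
    return dst;
}
```

One thing to watch in a parallel version: because the mapping goes from source to destination and truncates to integers, two source pixels can land on the same destination pixel, so the final value there may depend on the order in which threads write.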

LZW Data Compression Algorithm

Profiled by Timothy Moy.

Original algorithm: https://codereview.stackexchange.com/questions/86543/simple-lzw-compression-algorithm

Summary of Profiles

| Size (MB) | compress() time (seconds) |
|---|---|
| 10 | 0.96 |
| 15 | 1.35 |
| 20 | 1.8 |
| 25 | 2.14 |
| 30 | 2.64 |
| 35 | 3.16 |
| 40 | 3.45 |
| 45 | 4.24 |
| 50 | 4.23 |

The compress function seems to have some room for improvement, as can be seen in the source code below:

    void compress(string input, int size, string filename) {
        unordered_map<string, int> compress_dictionary(MAX_DEF);

        //Dictionary initializing with ASCII
        for (unsigned int i = 0; i < 256; i++) {
            compress_dictionary[string(1,i)] = i;
        }

        string current_string;
        unsigned int code;
        unsigned int next_code = 256;

        //Output file for compressed data
        ofstream outputFile;
        outputFile.open(filename + ".lzw");

        // Possible area for improvement via reduction
        for (char& c: input) {
            current_string = current_string + c;
            if (compress_dictionary.find(current_string) == compress_dictionary.end()) {
                if (next_code <= MAX_DEF)
                    compress_dictionary.insert(make_pair(current_string, next_code++));
                current_string.erase(current_string.size()-1);
                outputFile << convert_int_to_bin(compress_dictionary[current_string]);
                current_string = c;
            }
        }

        if (current_string.size())
            outputFile << convert_int_to_bin(compress_dictionary[current_string]);

        outputFile.close();
    }

The comment above the second for loop suggests a reduction, where a serial accumulation like this:

    for (int i = 1; i < n; i++) a[0] += a[i];

is changed to:

    for (int s = 1; s <= n/2; s *= 2)
        for (int j = 0; j < n; j += 2 * s) a[j] += a[j + s];
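As a quick check of the reduction pattern above, the following sketch runs both loop forms on the host and lets the results be compared. The function names `sumSerial` and `sumTree` are hypothetical. The tree form as written reads `a[j + s]` without a bounds check, so the sketch assumes n is a power of two.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Serial accumulation: every element is added into a[0] in turn.
int sumSerial(std::vector<int> a) {
    for (std::size_t i = 1; i < a.size(); i++) a[0] += a[i];
    return a.empty() ? 0 : a[0];
}

// Tree-style reduction: at stride s, element j absorbs element j + s.
// The additions inside one stride touch disjoint pairs, so they could run in parallel.
// Assumption: the size is a power of two, otherwise a[j + s] would go out of bounds.
int sumTree(std::vector<int> a) {
    const std::size_t n = a.size();
    assert(n > 0 && (n & (n - 1)) == 0);
    for (std::size_t s = 1; s <= n / 2; s *= 2)
        for (std::size_t j = 0; j < n; j += 2 * s)
            a[j] += a[j + s];
    return a[0];
}
```

For the input {1, 2, 3, 4, 5, 6, 7, 8}, both functions return 36; the tree form needs only three strides (1, 2, 4) instead of seven dependent additions.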

The first for loop always runs 256 iterations, so it probably won't show much improvement if we parallelize it. As such, the major hotspot in this function is the second for loop, whose length grows with the input. This is especially true since the file might be very large and we may be dealing with millions of characters!

Conclusion

We decided to go with image profiling.

There are some possible issues with using the simple-lzw-compression-algorithm with CUDA. You cannot use the C++ string type in a kernel because CUDA does not include a device version of the C++ string library that would be able to run on the GPU. Even if it were possible to use string in a kernel, you would not want to, because string manages memory dynamically, which would likely be slow.
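One common way around the string restriction is to give device code a raw character buffer and a length instead. The sketch below is host-only C++ with hypothetical names (`toCharBuffer`, `countByte`); it only illustrates the data layout and is not part of the LZW code. In a CUDA port, the pointer returned by `data()` is what would be copied to the device, for example with `cudaMemcpy`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical host-side helper: copy the string's bytes into a plain char buffer
// whose size is fixed once it has been built.
std::vector<char> toCharBuffer(const std::string& input) {
    return std::vector<char>(input.begin(), input.end());
}

// Hypothetical kernel-style routine: works only on a raw pointer and a length,
// with no std::string and no dynamic allocation. Counts occurrences of target.
std::size_t countByte(const char* data, std::size_t length, char target) {
    assert(data != nullptr || length == 0);
    std::size_t count = 0;
    for (std::size_t i = 0; i < length; i++) {
        if (data[i] == target) count++;
    }
    return count;
}
```

For example, `toCharBuffer("abracadabra")` yields an 11-byte buffer, and `countByte` on it with target 'a' returns 5.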

Explanation of using char variables in CUDA kernels: https://stackoverflow.com/questions/26993351/is-there-a-penalty-to-using-char-variables-in-cuda-kernels