Difference between revisions of "Sirius"

Revision as of 19:53, 7 April 2018


Sirius

Team Members

Progress

Assignment 1

Vehicle detection and tracking (Rosario A. Cali)

The source code for this project can be found alongside its references and test run results here.

The program uses FFmpeg to extract frames from a video, then analyzes each frame to detect whether any cars are present.

Each frame is analyzed with the Dlib library, which runs a Convolutional Neural Network based vehicle detector on it.

When a car is found, a rectangle is drawn around it and a label identifying the front or the rear of the car is attached.

We expected long processing times when running the application, but the actual results were a lot worse than we expected.

Only one test was run to completion, using a 10-second video. We extracted the video at 25 fps, resulting in 251 frames at a resolution of 854 x 480 pixels.

The elapsed time for the application on this 10-second video was 21.02 minutes, or roughly 5 seconds per frame.

Here's an extract from the Flat Profile:

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls ms/call ms/call name

89.19 1124.65 1124.65 1757 640.09 640.09 dlib::enable_if_c<(dlib::ma::matrix_is_vector<dlib::matrix_op<dlib::op_pointer_to_mat<float> >, void>::value==(false))&&(dlib::ma::matrix_is_vector<dlib::matrix_op<dlib::op_trans<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > >, void>::value==(false)), void>::type dlib::default_matrix_multiply<dlib::assignable_ptr_matrix<float>, dlib::matrix_op<dlib::op_pointer_to_mat<float> >, dlib::matrix_op<dlib::op_trans<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > > >(dlib::assignable_ptr_matrix<float>&, dlib::matrix_op<dlib::op_pointer_to_mat<float> > const&, dlib::matrix_op<dlib::op_trans<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > > const&)

10.15 1252.68 128.03 1693 75.62 75.62 dlib::cpu::img2col(dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout>&, dlib::tensor const&, long, long, long, long, long, long, long)

0.16 1254.75 2.07 8218 0.25 0.25 dlib::enable_if_c<(dlib::is_grayscale_image<dlib::const_sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > >::value&&dlib::is_grayscale_image<dlib::sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > >::value)&&dlib::images_have_same_pixel_types<dlib::const_sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> >, dlib::sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > >::value, void>::type dlib::resize_image<dlib::const_sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> >, dlib::sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > >(dlib::const_sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> > const&, dlib::sub_image_proxy<dlib::matrix<float, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> >&, dlib::interpolate_bilinear)

0.16 1256.77 2.02 1506 1.34 1.34 dlib::cpu::affine_transform_conv(dlib::tensor&, dlib::tensor const&, dlib::tensor const&, dlib::tensor const&)

0.12 1258.24 1.47 1506 0.98 0.98 dlib::tt::relu(dlib::tensor&, dlib::tensor const&)

0.08 1259.22 0.99 1757 0.56 0.56 dlib::cpu::add(float, dlib::tensor&, float, dlib::tensor const&)

0.05 1259.88 0.66 844 0.78 1.03 dlib::image_display::draw(dlib::canvas const&) const

0.02 1260.17 0.29 detect_vehicles()

0.02 1260.39 0.22 251 0.88 0.88 void dlib::png_loader::get_image<dlib::matrix<dlib::rgb_pixel, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> >(dlib::matrix<dlib::rgb_pixel, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout>&) const

0.02 1260.58 0.19 347936511 0.00 0.00 dlib::enable_if_c<dlib::pixel_traits<dlib::canvas::pixel>::rgb&&dlib::pixel_traits<dlib::rgb_alpha_pixel>::rgb_alpha, void>::type dlib::assign_pixel_helpers::assign<dlib::canvas::pixel, dlib::rgb_alpha_pixel>(dlib::canvas::pixel&, dlib::rgb_alpha_pixel const&)

0.01 1260.73 0.15 251 0.60 0.60 void dlib::input_rgb_image_pyramid<dlib::pyramid_down<6u> >::to_tensor<dlib::matrix<dlib::rgb_pixel, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> const*>(dlib::matrix<dlib::rgb_pixel, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> const*, dlib::matrix<dlib::rgb_pixel, 0l, 0l, dlib::memory_manager_stateless_kernel_1<char>, dlib::row_major_layout> const*, dlib::resizable_tensor&) const

0.01 1260.80 0.07 844 0.08 1.11 dlib::drawable_window::paint(dlib::canvas const&)

The full Flat profile, together with the Call Graph, can be found at the link provided above.

As the profile shows, almost all of the run time is spent inside the Dlib library: the matrix multiplication routine alone accounts for 89.19% of it, and dlib::cpu::img2col for another 10.15%. This makes it hard to tell how to optimize the application code itself.

There must be a way to optimize this application, but as of today (March 4, 2018) I am not sure which path to take.

For me, the most important thing is to solve the problem regardless of the tools used, and I think that reimplementing everything from scratch using OpenCV and CUDA is a viable solution.

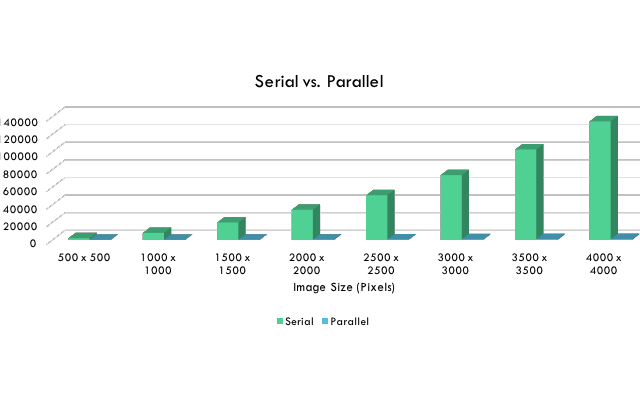

Boxblur on an image using opencv C++ Library (Max Fainshtein)

My suggested topic for the parallel programming term project is an application that performs a box blur on an image using OpenCV. The OpenCV library can be installed from https://sourceforge.net/projects/opencvlibrary/files/opencv-win/3.3.0/opencv-3.3.0-vc14.exe/download or through Visual Studio's NuGet packages by installing opencv.win.native by Harry Y. OpenCV is used to read the image and to access and modify the color of each pixel so that it becomes the average of the pixels inside the user-defined box kernel. The application runs in O(n^2) time, where n is the number of pixel rows and columns of the image (that is, for an n x n image and a fixed kernel size).
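The per-pixel averaging described above can be sketched as follows. This is a minimal illustration, not the project's actual code: it works on a single-channel image stored in a plain `std::vector` instead of an OpenCV `cv::Mat`, and the function name `boxBlur`, the `radius` parameter, and the border handling (averaging only the pixels that fall inside the image) are all assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: blurs a single-channel, row-major image.
// `radius` is the half-width of the box kernel, so the full box is
// (2 * radius + 1) x (2 * radius + 1), clipped at the image borders.
std::vector<std::uint8_t> boxBlur(const std::vector<std::uint8_t>& src,
                                  int rows, int cols, int radius) {
    assert(rows > 0 && cols > 0 && radius >= 0);
    assert(src.size() == static_cast<std::size_t>(rows) * cols);

    std::vector<std::uint8_t> dst(src.size());
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            int sum = 0;
            int count = 0;
            // Visit every neighbour inside the box around (r, c).
            for (int dr = -radius; dr <= radius; ++dr) {
                for (int dc = -radius; dc <= radius; ++dc) {
                    const int rr = r + dr;
                    const int cc = c + dc;
                    if (rr < 0 || rr >= rows || cc < 0 || cc >= cols) {
                        continue;  // skip neighbours outside the image
                    }
                    sum += src[rr * cols + cc];
                    ++count;
                }
            }
            // Integer average of the neighbours that were inside the image.
            dst[r * cols + c] = static_cast<std::uint8_t>(sum / count);
        }
    }
    return dst;
}
```

Each output pixel depends only on the input image, never on other output pixels, which is why the two outer loops are natural candidates for parallel execution. Note that in this sketch the work per pixel also grows with the square of the kernel width.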

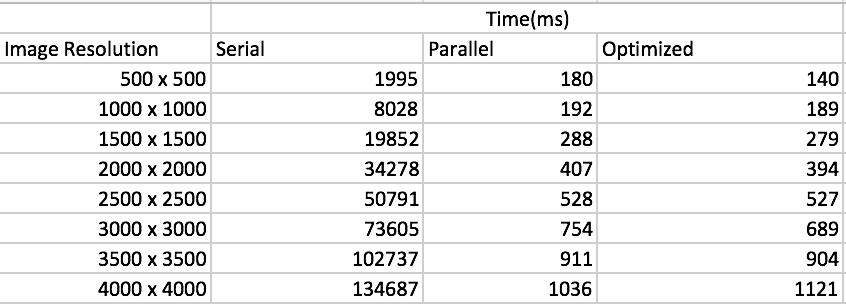

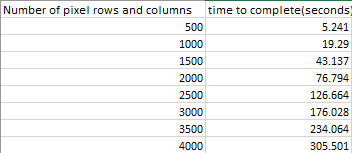

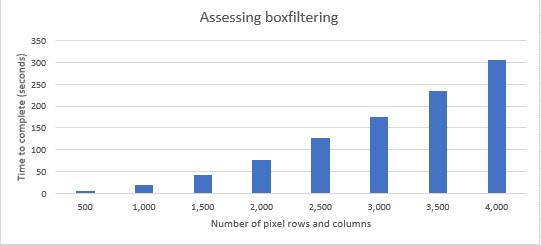

Results

Running this program for images of various sizes resulted in the following:

Graph

This is the data displayed as a bar graph.

The application could gain a large performance boost from parallel programming. Most of the computation time is spent calculating the average for each pixel, and these averages can be calculated concurrently, requiring only a single synchronization at the end before the image is displayed.

Algorithms (Joseph Pildush)

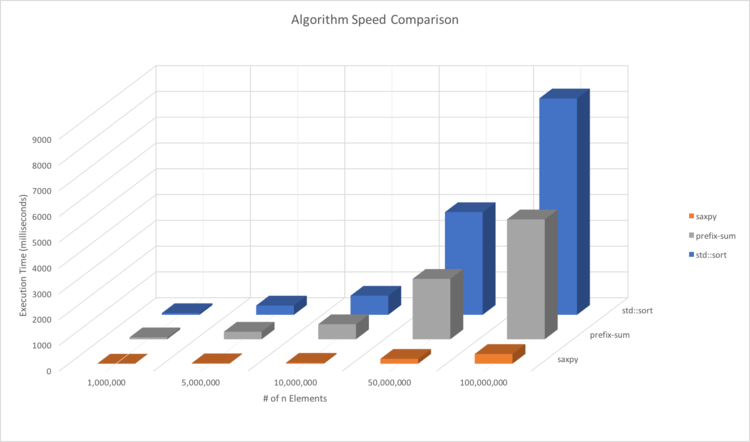

My topic is algorithms and the stress that running them on large arrays places on the CPU. With small arrays, most algorithms finish in less than a second. With larger arrays, my results show that the stress, and with it the execution time, keeps increasing as the array size grows. If these algorithms are called multiple times on large arrays, the execution time increases immensely, which may make the program slow overall or cause it to hang.

Algorithms Used: std::sort, saxpy, prefix-sum
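For readers unfamiliar with the two less common algorithms, here is a minimal serial sketch of saxpy and an inclusive prefix sum, plus a small timing wrapper based on `std::chrono::steady_clock`. The function names `saxpy`, `inclusivePrefixSum`, and `timeMs` are hypothetical and chosen for this example; they are not the functions that appear in the profile further down, and the millisecond unit is only what this sketch reports.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// saxpy ("single-precision a times x plus y"): y[i] = a * x[i] + y[i].
void saxpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    assert(x.size() == y.size());
    for (std::size_t i = 0; i < x.size(); ++i) {
        y[i] = a * x[i] + y[i];
    }
}

// Inclusive prefix sum: out[i] = in[0] + in[1] + ... + in[i].
// A wider accumulator type is used so large inputs do not overflow int.
std::vector<long long> inclusivePrefixSum(const std::vector<int>& in) {
    std::vector<long long> out(in.size());
    long long running = 0;
    for (std::size_t i = 0; i < in.size(); ++i) {
        running += in[i];
        out[i] = running;
    }
    return out;
}

// Hypothetical timing helper: runs `work` once and returns the elapsed
// wall-clock time in whole milliseconds.
template <typename Work>
long long timeMs(Work&& work) {
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(stop - start)
        .count();
}
```

A call such as `timeMs([&] { std::sort(v.begin(), v.end()); })` (with `<algorithm>` included) would time `std::sort` in the same way. Every element of the saxpy result is independent of the others, while the prefix sum carries a running total from one element to the next, so the two algorithms parallelize very differently.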

Results

------------------------------------------------------------------

|# of Elements | std::sort | saxpy | prefix-sum |

| 1,000,000 | 66 | 4 | 75 |

| 5,000,000 | 360 | 18 | 293 |

| 10,000,000 | 742 | 36 | 584 |

| 50,000,000 | 3983 | 189 | 2343 |

| 100,000,000 | 8393 | 378 | 4649 |

------------------------------------------------------------------

Graph

Flat Profile

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls s/call s/call name

47.03 8.24 8.24 1 8.24 11.15 prefixSum(std::vector<int, std::allocator<int> >&, int, std::chrono::time_point<std::chrono::_V2::steady_clock, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> > >, std::chrono::time_point<std::chrono::_V2::steady_clock, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> > >)

29.39 13.39 5.15 499999999 0.00 0.00 _ZNSt6vectorIiSaIiEE12emplace_backIJiEEERiDpOT_

17.41 16.44 3.05 4 0.76 1.83 setRandArr(std::vector<int, std::allocator<int> >&, int)

2.63 16.90 0.46 saxpyAlg(int, std::chrono::time_point<std::chrono::_V2::steady_clock, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> > >, std::chrono::time_point<std::chrono::_V2::steady_clock, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> > >)

2.34 17.31 0.41 stdSort(std::vector<int, std::allocator<int> >&, int, std::chrono::time_point<std::chrono::_V2::steady_clock, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> > >, std::chrono::time_point<std::chrono::_V2::steady_clock, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> > >)

1.20 17.52 0.21 112 0.00 0.00 _ZNSt6vectorIiSaIiEE17_M_realloc_insertIJRKiEEEvN9__gnu_cxx17__normal_iteratorIPiS1_EEDpOT_

0.00 17.52 0.00 5 0.00 0.00 printTiming(char const*, std::chrono::duration<long long, std::ratio<1ll, 1000000000ll> >)

0.00 17.52 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z10setRandArrRSt6vectorIiSaIiEEi

0.00 17.52 0.00 1 0.00 0.00 void std::__insertion_sort<__gnu_cxx::__normal_iterator<int*, std::vector<int, std::allocator<int> > >, __gnu_cxx::__ops::_Iter_less_iter>(__gnu_cxx::__normal_iterator<int*, std::vector<int, std::allocator<int> > >, __gnu_cxx::__normal_iterator<int*, std::vector<int, std::allocator<int> > >, __gnu_cxx::__ops::_Iter_less_iter)

Even a single run of these algorithms on large arrays has a long execution time.

For this reason, when developing advanced applications that make multiple algorithm calls on very large arrays, it seems quite beneficial to use CUDA to run the algorithms in parallel on the GPU, both to decrease the stress on other hardware and to minimize the execution time of the algorithms.

Assignment 2

The project chosen for Assignment 2 is Max Fainshtein's Assignment 1 project, Boxblur on an image using opencv C++ Library. We decided to port the blur to CUDA in order to reduce the stress on the CPU.

The kernel was designed to run with 512 threads so that the program can also run on CUDA hardware with a lower compute capability.

The grid was designed to accommodate a 4K image processed with 3 colour channels.

Each block of the grid represents a single pixel of the image being processed.
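To make the one-block-per-pixel idea concrete, the host-side arithmetic can be sketched in plain C++. This is only an illustration under stated assumptions: a 4K image is taken to be 3840 x 2160 pixels, blocks are assumed to be numbered in row-major order, and the names `blockCount` and `blockToPixel` are hypothetical. How the threads inside a block share the work for the three colour channels is not shown, since it depends on the actual kernel.

```cpp
#include <cassert>
#include <cstdint>

// Assumed 4K dimensions (not stated explicitly in the text above).
constexpr int kWidth4K = 3840;
constexpr int kHeight4K = 2160;

// Pixel position handled by one block (hypothetical struct).
struct PixelCoord {
    int x;  // column
    int y;  // row
};

// With one block per pixel, the grid needs width * height blocks.
std::int64_t blockCount(int width, int height) {
    assert(width > 0 && height > 0);
    return static_cast<std::int64_t>(width) * height;
}

// Maps a flattened block index to the pixel it would process,
// assuming blocks are laid out in row-major order.
PixelCoord blockToPixel(std::int64_t blockId, int width) {
    assert(blockId >= 0 && width > 0);
    PixelCoord p;
    p.x = static_cast<int>(blockId % width);
    p.y = static_cast<int>(blockId / width);
    return p;
}
```

Under these assumptions, `blockCount(kWidth4K, kHeight4K)` gives 8,294,400 blocks for a full 4K frame.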

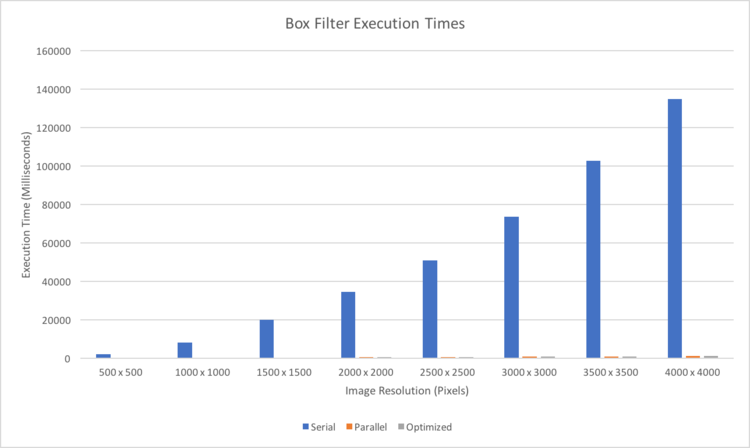

With this kernel, the processing time of the blur effect improved significantly compared to its serial counterpart, as illustrated in the graph below.

Graph

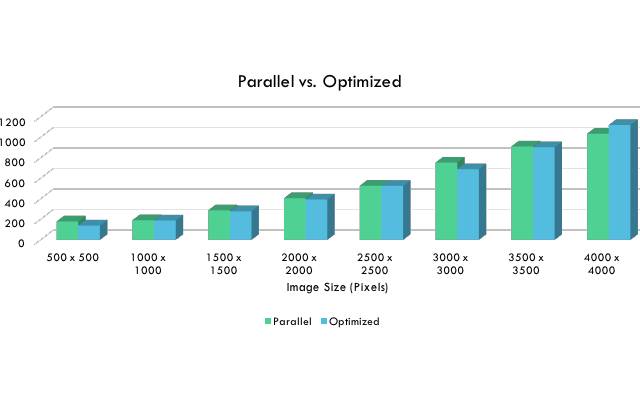

Assignment 3

Our kernel implementation was a massive improvement over the serial version, but after analyzing the Assignment 2 version we noticed that further improvements were possible.

Problem:

The kernels were executing concurrently, but the percentage of concurrency was quite low.

Solution:

Set the thread count based on the Compute Capability of the CUDA device.

The number of threads per block is calculated from the device's limits on resident threads and resident blocks.

The number of blocks for the grid is recalculated to take into account the complexity of the image and the new number of threads per block.
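One plausible reading of this calculation, sketched in plain C++ on the host side: divide the maximum number of resident threads per multiprocessor by the maximum number of resident blocks, so that a full set of resident blocks uses every available thread slot, then round the grid size up to cover all work items. The struct `SmLimits` and the functions `threadsPerBlock` and `blocksFor` are hypothetical names for this example. In a real CUDA program the limits would be chosen from the compute capability reported by the device.

```cpp
#include <cassert>
#include <cstdint>

// Per-multiprocessor limits for a given compute capability
// (hypothetical struct; the caller supplies the values).
struct SmLimits {
    int maxResidentThreads;  // resident threads per multiprocessor
    int maxResidentBlocks;   // resident blocks per multiprocessor
};

// Threads per block such that the maximum number of resident blocks
// together occupy all resident thread slots.
int threadsPerBlock(const SmLimits& sm) {
    assert(sm.maxResidentThreads > 0 && sm.maxResidentBlocks > 0);
    return sm.maxResidentThreads / sm.maxResidentBlocks;
}

// Number of blocks needed to cover `workItems` elements, rounded up so
// that a partially filled last block is still launched.
std::int64_t blocksFor(std::int64_t workItems, int threads) {
    assert(workItems >= 0 && threads > 0);
    return (workItems + threads - 1) / threads;
}
```

For example, with 2048 resident threads and 16 resident blocks per multiprocessor (the limits for compute capability 3.x devices), this gives 128 threads per block. Because the last block may be only partially filled, a kernel launched this way needs a bounds check on its global index.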