
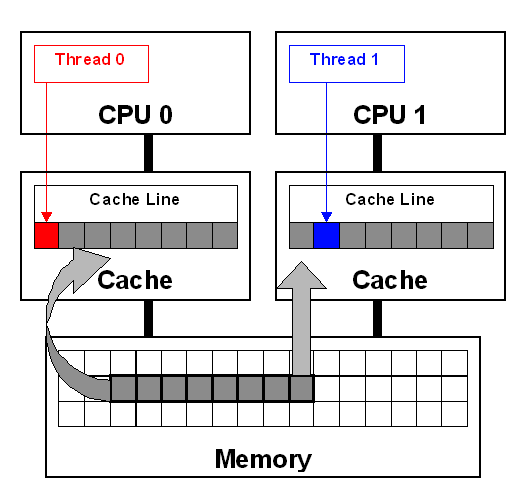


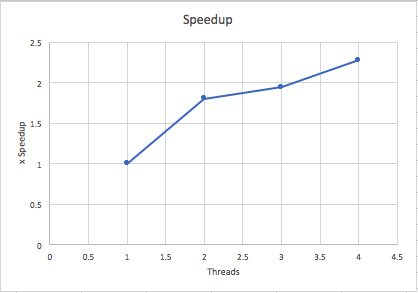

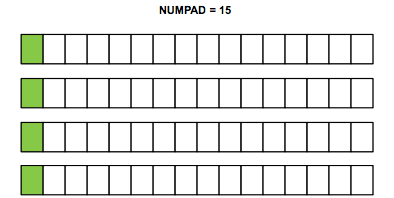


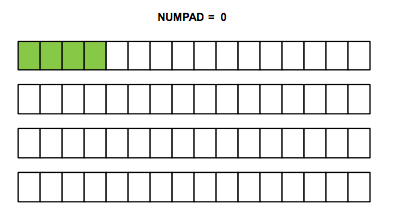

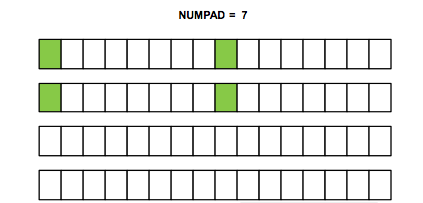


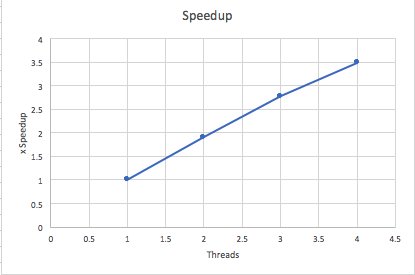


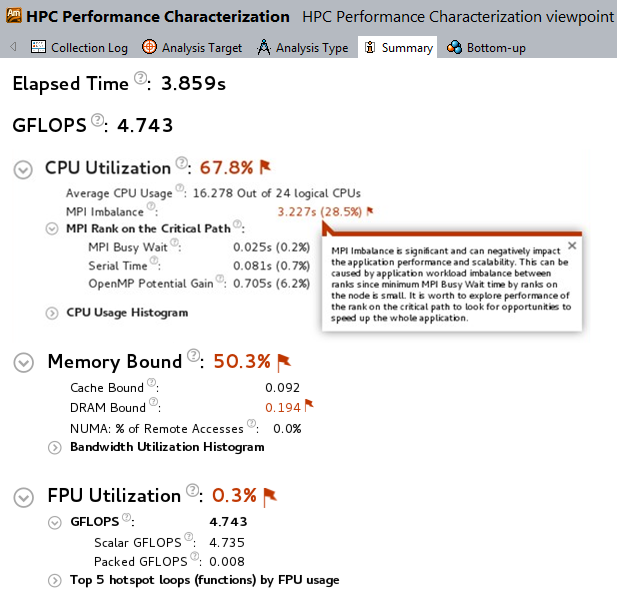


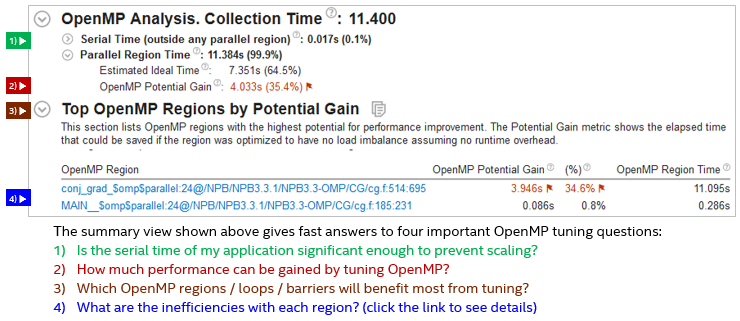


Latest revision as of 23:42, 21 December 2017


Analyzing False Sharing and Ways to Eliminate False Sharing

Team Members

Introduction

Multicore processors are more prevalent now than ever, and multicore programming is essential to benefit from the power of the hardware, because it allows us to run our code on different CPU cores. But it is very important to know and understand the underlying hardware to fully utilize it. One of the most important system resources is the cache. On most architectures, data moves between memory and the caches in fixed-size cache lines, and a single line can hold data that belongs to several threads. This is why false sharing is a well-known problem in multicore/multithreaded programs.

What is False Sharing (aka cache line ping-ponging)?

False sharing is one of the sharing patterns that affect performance when multiple threads share data. It arises when at least two threads modify or use data that happens to be close enough in memory to end up in the same cache line. False sharing occurs when the threads constantly update their respective data in such a way that the cache line migrates back and forth between the two threads' caches.

In this article, we will look at some examples that demonstrate false sharing, tools to analyze false sharing, and the two coding techniques we can implement to eliminate false sharing.

Cache Coherence

In Symmetric Multiprocessor (SMP) systems, each processor has a local cache. The local cache is a smaller, faster memory which stores copies of data from frequently used main memory locations. Caches are closer to the CPU than main memory and are intended to make memory access more efficient. In a shared-memory multiprocessor system with a separate cache for each processor, it is possible to have many copies of shared data: one copy in main memory and one in the local cache of each processor that requested it. When one of the copies is changed, the other copies must reflect that change. Cache coherence is the discipline which ensures that changes in the values of shared operands (data) are propagated throughout the system in a timely fashion.

To ensure data consistency across multiple caches, multiprocessor-capable Intel® processors follow the MESI (Modified/Exclusive/Shared/Invalid) protocol. On the first load of a cache line, the processor marks the cache line as ‘Exclusive’. As long as the cache line is marked ‘Exclusive’, subsequent loads are free to use the existing data in the cache. If the processor sees the same cache line loaded by another processor on the bus, it marks the cache line as ‘Shared’. If the processor stores to a cache line marked ‘S’, the cache line is marked ‘Modified’ and all other processors are sent an ‘Invalid’ cache line message. If the processor sees the same cache line, now marked ‘M’, being accessed by another processor, it writes the cache line back to memory and marks its copy as ‘Shared’. The other processor that is accessing the same cache line incurs a cache miss.
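The MESI transitions described above can be sketched as a toy state machine. This is only an illustration of the rules as stated in the text for a single cache line, not a model of real hardware; the names `Mesi` and `LineStates` are hypothetical.

```cpp
#include <array>
#include <cstddef>

// Toy model: the MESI state of ONE cache line as seen by each of N caches.
enum class Mesi { Modified, Exclusive, Shared, Invalid };

template <std::size_t N>
struct LineStates {
    std::array<Mesi, N> state;

    LineStates() { state.fill(Mesi::Invalid); }

    // Cache `self` loads the line.
    void load(std::size_t self) {
        if (state[self] != Mesi::Invalid) return;  // hit: state is unchanged
        bool others_have_copy = false;
        for (std::size_t i = 0; i < N; ++i) {
            if (i == self || state[i] == Mesi::Invalid) continue;
            others_have_copy = true;
            // A Modified owner writes the line back; every holder drops to Shared.
            state[i] = Mesi::Shared;
        }
        state[self] = others_have_copy ? Mesi::Shared : Mesi::Exclusive;
    }

    // Cache `self` stores to the line: all other copies are invalidated.
    void store(std::size_t self) {
        for (std::size_t i = 0; i < N; ++i) {
            if (i != self) state[i] = Mesi::Invalid;
        }
        state[self] = Mesi::Modified;
    }
};
```

With two caches, alternating `store(0)` and `store(1)` flips the line between Modified in one cache and Invalid in the other on every call, which is the ping-pong pattern that false sharing produces.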

Identifying False Sharing

False sharing occurs when threads on different processors modify variables that reside on the same cache line. This invalidates the cache line and forces an update, which hurts performance.

False sharing is a well-known performance issue on SMP systems, where each processor has a local cache. It occurs when threads on different processors modify variables that reside on the same cache line.

The frequent coordination required between processors when cache lines are marked ‘Invalid’ requires cache lines to be written to memory and subsequently loaded. False sharing increases this coordination and can significantly degrade application performance.

In the figure, threads 0 and 1 require variables that are adjacent in memory and reside on the same cache line. The cache line is loaded into the caches of CPU 0 and CPU 1. Even though the threads modify different variables, the cache line is invalidated, forcing a memory update to maintain cache coherency.
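Whether two variables can falsely share comes down to address arithmetic: they are on the same line when their addresses fall in the same line-sized block. A minimal sketch, assuming 64-byte cache lines (the same value the padding example in this article assumes); `same_cache_line` and `kAssumedCacheLineSize` are hypothetical names used only for illustration.

```cpp
#include <cstddef>
#include <cstdint>

// Assumption: 64-byte cache lines. Check the target CPU before relying on this.
constexpr std::size_t kAssumedCacheLineSize = 64;

// Returns true when both addresses fall inside the same cache-line-sized block.
inline bool same_cache_line(const void* a, const void* b) {
    auto line_of = [](const void* p) {
        return reinterpret_cast<std::uintptr_t>(p) / kAssumedCacheLineSize;
    };
    return line_of(a) == line_of(b);
}
```

For an array of 4-byte `int` counters that starts on a line boundary, elements 0 to 15 share one line, so a per-thread counter array with 4 entries sits entirely inside a single line.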

#include <iostream>

#include <iomanip>

#include <cstdlib>

#include <chrono>

#include <algorithm>

#include <omp.h>

#define NUM_THREADS 4

#define DIM 10000

using namespace std::chrono;

int main(int argc, char** argv) {

int* matrix = new int[DIM*DIM];

int odds = 0;

// Initialize matrix to random Values

srand(200);

for (int i = 0; i < DIM; i++) {

for(int j = 0; j < DIM; ++j){

matrix[i*DIM + j] = rand()%50;

}

}

int* odds_local = new int[NUM_THREADS];//odd numbers in matrix local to thread

for(int i = 0; i < NUM_THREADS;i++){

odds_local[i]=0;

}

int threads_used;

omp_set_num_threads(NUM_THREADS);

double start_time = omp_get_wtime();

#pragma omp parallel

{

int tid = omp_get_thread_num();

odds_local[tid] = 0;

#pragma omp for

for(int i=0; i < DIM; ++i){

for(int j = 0; j < DIM; ++j){

if(i==0 && j==0){threads_used = omp_get_num_threads();}

if( matrix[i*DIM + j] % 2 != 0 )

++odds_local[tid];

}

}

#pragma omp critical

odds += odds_local[tid];

}

double time = omp_get_wtime() - start_time;

std::cout<<"Execution Time: "<<time<<std::endl;

std::cout<<"Threads Used: "<< threads_used<<std::endl;

std::cout<<"Odds: "<<odds<<std::endl;

return 0;

}

According to Amdahl's Law, the potential speedup of any application is given by Sn = 1 / ( 1 - P + P/n ), where P is the parallelizable fraction of the program and n is the number of processors. Assuming 95% of our application is parallelizable, Amdahl's law tells us that with 4 threads there is a maximum potential speedup of 3.478 times. This is not the case according to our results: we reach a speedup of only 2.275 times the original speed. As you can tell from the graph, our code is not scalable and these results are very underwhelming.
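The 3.478 figure follows directly from the formula with P = 0.95 and n = 4, the thread count used in the example above:

```latex
S_n = \frac{1}{(1 - P) + \dfrac{P}{n}}
\qquad\Longrightarrow\qquad
S_4 = \frac{1}{(1 - 0.95) + \dfrac{0.95}{4}}
    = \frac{1}{0.05 + 0.2375}
    = \frac{1}{0.2875}
    \approx 3.478
```

Under the same 95% assumption, the speedup can never exceed 1 / (1 - P) = 20, however many threads are added.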

Eliminating False Sharing

Padding

#define CACHE_LINE_SIZE 64

template<typename T>

struct cache_line_storage {

alignas(CACHE_LINE_SIZE) T data;

char pad[ CACHE_LINE_SIZE > sizeof(T)

? CACHE_LINE_SIZE - sizeof(T)

: 1 ];

};

#include <iostream>

#include <iomanip>

#include <cstdlib>

#include <chrono>

#include <algorithm>

#include <omp.h>

#include "Padding.h"

#define NUM_THREADS 9

#define DIM 1000

using namespace std::chrono;

int main(int argc, char** argv) {

int odds = 0;

int* matrix = new int[DIM*DIM];

// Initialize matrix to random Values

srand(200);

for (int i = 0; i < DIM; i++) {

for(int j = 0; j < DIM; ++j){

matrix[i*DIM + j] = rand()%50;

}

}

cache_line_storage<int> odds_local[NUM_THREADS];

for(int i = 0;i<NUM_THREADS;i++){//initilize local

odds_local[i].data=0;

}

int threads_used;

omp_set_num_threads(NUM_THREADS);

double start_time = omp_get_wtime();

#pragma omp parallel

{

int tid = omp_get_thread_num();

#pragma omp for

for(int i=0; i < DIM; ++i){

for(int j = 0; j < DIM; ++j){

if(i==0 && j==0){threads_used = omp_get_num_threads();}

if( matrix[i*DIM + j] % 2 != 0 )

++odds_local[tid].data;

}

}

#pragma omp critical

odds += odds_local[tid].data;

}

double time = omp_get_wtime() - start_time;

std::cout<<"Execution Time: "<<time<<std::endl;

std::cout<<"Threads Used: "<< threads_used<<std::endl;

std::cout<<"Odds: "<<odds<<std::endl;

return 0;

}

Padding your data is one way to prevent false sharing. Adding padding to the elements of a contiguous array separates them from one another in memory, so fewer elements sit on the same cache line. Your goal is to put each array element on its own cache line: when one thread modifies its element, the resulting invalidation no longer forces the other threads to reload a line that holds their own elements, and cache coherence stops being the bottleneck.
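The layout claim can be checked at compile time. The sketch below is a variant of the padding idea that relies on `alignas` alone, assuming C++11 or later and a 64-byte line; `PaddedSlot`, `slot_stride` and `kAssumedCacheLineSize` are hypothetical names, not part of the code above.

```cpp
#include <cstddef>

// Assumption: 64-byte cache lines, as in the padding example above.
constexpr std::size_t kAssumedCacheLineSize = 64;

// alignas on the struct rounds its size up to a multiple of the alignment,
// so each array element starts on its own line without a manual pad array.
template <typename T>
struct alignas(kAssumedCacheLineSize) PaddedSlot {
    T data;
};

static_assert(sizeof(PaddedSlot<int>) == kAssumedCacheLineSize,
              "one slot should occupy exactly one assumed cache line");
static_assert(alignof(PaddedSlot<int>) == kAssumedCacheLineSize,
              "slots should start on an assumed cache line boundary");

// Distance in bytes between two neighbouring slots of an array.
template <typename T>
std::ptrdiff_t slot_stride() {
    static PaddedSlot<T> slots[2];
    return reinterpret_cast<char*>(&slots[1]) - reinterpret_cast<char*>(&slots[0]);
}
```

The cost is visible in the first assertion: a 4-byte counter now occupies 64 bytes, which is the memory overhead the next section discusses.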

Thread Local Variables

Wasting memory to put your data on different cache lines is not an ideal solution to the false sharing problem, even though it works. There are two problems with this solution: first, you are wasting memory, and second, it does not generalize well, because you will not always know the cache line size of the machine your code runs on. Using variables local to each thread instead of contiguous array locations reduces the number of times that a thread writes to a cache line that holds other threads' data. The benefit of this approach is that you do not have multiple threads writing to the same cache line, invalidating the data and bottlenecking the process.

#include <iostream>

#include <iomanip>

#include <cstdlib>

#include <chrono>

#include <algorithm>

#include <omp.h>

#define NUM_THREADS 8

#define DIM 1000

using namespace std::chrono;

int main(int argc, char** argv) {

int* matrix = new int[DIM*DIM];

int odds = 0;

// Initialize matrix to random Values

srand(200);

for (int i = 0; i < DIM; i++) {

for(int j = 0; j < DIM; ++j){

matrix[i*DIM + j] = rand()%50;

}

}

int threads_used;

omp_set_num_threads(NUM_THREADS);

double start_time = omp_get_wtime();

#pragma omp parallel

{

int count_odds = 0;

#pragma omp for

for(int i=0; i < DIM; ++i){

for(int j = 0; j < DIM; ++j){

if(i==0 && j==0){threads_used = omp_get_num_threads();}

if( matrix[i*DIM + j] % 2 != 0 )

++count_odds;

}

}

#pragma omp critical

odds += count_odds;

}

double time = omp_get_wtime() - start_time;

std::cout<<"Execution Time: "<<time<<std::endl;

std::cout<<"Threads Used: "<< threads_used<<std::endl;

std::cout<<"Odds: "<<odds<<std::endl;

return 0;

}

Here we see that the speedup increases linearly with the number of threads used. According to our tests, the speedup using 4 threads is 3.49 times, which is much closer to the speedup predicted by Amdahl's law (3.478 times).
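OpenMP can also create and combine the per-thread counter for you through its `reduction` clause. The sketch below is an alternative formulation of the same thread-local idea, not the code measured above; `count_odds` is a hypothetical helper, and the block assumes compilation with OpenMP enabled (for example `-fopenmp` with GCC or Clang). Without that flag the pragma is ignored and the loop simply runs serially with the same result.

```cpp
#include <cstddef>
#include <vector>

// Counts the odd values in `values`.
// With OpenMP enabled, reduction(+:odds) gives each thread a private copy of
// `odds` initialised to 0 and sums the copies when the loop finishes, so no
// thread writes to a shared counter inside the loop.
inline long count_odds(const std::vector<int>& values) {
    long odds = 0;
    const long n = static_cast<long>(values.size());
    #pragma omp parallel for reduction(+:odds)
    for (long i = 0; i < n; ++i) {
        if (values[static_cast<std::size_t>(i)] % 2 != 0) {
            ++odds;
        }
    }
    return odds;
}
```

Compared with the explicit `#pragma omp critical` merge, the clause leaves the combining step to the OpenMP runtime and keeps the loop body free of any shared writes.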

Intel VTune Amplifier

VTune Amplifier is a trace-based analysis tool used for deep analysis of a given program's runtime behavior. Getting the most out of modern processors requires much more than optimizing single-thread performance. High-performing code must be:

- Threaded and scalable to utilize multiple CPUs

- Vectorized for efficient use of multiple FPUs

- Tuned to take advantage of non-uniform memory architectures and caches

Intel VTune Amplifier's single, user friendly analysis interface provides all these advanced profiling capabilities.

Some Key tools of VTune Amplifier

- HotSpot Analysis: Hotspot analysis quickly identifies the lines of code/functions that are taking up a lot of CPU time.

- High-performance computing (HPC) Analysis: HPC analysis gives a fast overview of three critical metrics:

  - CPU utilization (for both thread and MPI parallelism)

  - Memory access

  - FPU utilization (FLOPS)

- Locks and Waits: VTune Amplifier makes it easy to understand multithreading concepts since it has a built-in understanding of parallel programming. Locks and waits analysis allows you to quickly find the common causes of slow threaded code.

- Easier, More Effective OpenMP* and MPI Multirank Tuning:

  - The summary report quickly gets you the top four answers you need to effectively improve OpenMP* performance.

- VTune Amplifier provides hardware-based profiling to help analyze your code's efficient use of the microprocessor.

This is just a brief summary of some of the tools available within VTune Amplifier. For more details, please visit the Intel VTune Amplifier website (https://software.intel.com/en-us/intel-vtune-amplifier-xe).

Summary

In conclusion, keep an eye out for false sharing; it's a scalability killer. The general case to watch out for is when you have two objects or fields that are constantly accessed for reading or writing by different threads, at least one of the threads is doing writes, and the objects are close enough in memory that they fall on the same cache line. Detecting false sharing isn't always easy, so make use of CPU monitors and performance analysis tools. However, typical CPU monitors can completely mask memory waiting by regarding it as busy time, so look for code performance analysis tools that measure cycles per instruction (CPI) and/or cache misses. One such tool is Intel VTune Amplifier. You can also use Visual Studio's Performance Profiler.

Finally, you can avoid false sharing by reducing the frequency of updates to the falsely shared variables; for example, update local data instead of the shared variable. You can also ensure a variable is completely unshared by padding or aligning data on a cache line in such a way that no other data precedes or follows a key object in the same cache line.