Difference between revisions of "DPS921/Game of Threads"

| (One intermediate revision by the same user not shown) | |||

| Line 69: | Line 69: | ||

==OpenMP and C++ Comparisons== | ==OpenMP and C++ Comparisons== | ||

| + | {| class="wikitable" | ||

| + | | | ||

| + | |OpenMP | ||

| + | |Hand Threaded C++ | ||

| + | |- | ||

| + | |Code Portability | ||

| + | | | ||

| + | *Portable | ||

| + | | | ||

| + | *Not every system supports the thread library natively or completely (android) | ||

| + | |- | ||

| + | |Codability | ||

| + | | | ||

| + | *Lower modifications to the parallel code | ||

| + | *Compiler directives can be used to specify work distribution using sections | ||

| + | *Easier codability through #pragma constructs for less control of parallelism for each thread | ||

| + | | | ||

| + | |||

| + | *Greater modifications to the serial code | ||

| + | *Work distribution must be directly coded | ||

| + | *Loops must be tuned by hand, no schedule parameter to be changed. | ||

| + | *Finer granularity of parallelism allows finer control of parallel regions. For ex barriers or critical sections may concern only some threads while in openMP they are bound to all threads. | ||

| + | |- | ||

| + | |Data sharing | ||

| + | | | ||

| + | *Facilitates making data structures thread-safe | ||

| + | *Many OpenMP clauses(lastprivate, firstprivate, critical) can be used to lock data or share | ||

| + | | | ||

| + | *Data structures containing all private information for each thread must be created | ||

| + | *Separate copy of shared data is created for each thread | ||

| + | *Much harder to synchronize data, must be done by hand | ||

| + | |- | ||

| + | |Speed | ||

| + | | | ||

| + | *Typically faster as threads are not created and deconstructed like native C++ threads. Thread pools are used | ||

| + | | | ||

| + | *Slower than OpenMP unless a thread pool is created and utilized | ||

| + | *Many speed improvements provided by OpenMP need to be recreated | ||

| + | |} | ||

| + | ==Code examples== | ||

| + | ===Example of loop decompositin=== | ||

| + | ===Classic threads=== | ||

| + | <nowiki>Size = last_index/nb_threads; | ||

| + | First = MY_TID * size; | ||

| + | Last = (MY_TID != nb_threads-1) ? first + size : last_index; | ||

| + | For(i=first; i < last; I ++) | ||

| + | Array[i] = … ;</nowiki> | ||

| + | ===OpenMP=== | ||

| + | <nowiki>#pragma omp parallel for | ||

| + | For(i=0; i < last_index; i++) | ||

| + | Array[i] = …;</nowiki> | ||

| + | ===Sample program in OpenMP and C++ threads=== | ||

| + | ===OpenMP=== | ||

| + | <nowiki>#include <chrono> | ||

| + | #include <algorithm> | ||

| + | #include <iostream> | ||

| + | #include <omp.h> | ||

| + | using namespace std::chrono; | ||

| + | static const int num_threads = omp_get_max_threads(); | ||

| + | size_t sum = 0; | ||

| + | |||

| + | int main() { | ||

| + | omp_set_num_threads(num_threads); | ||

| + | |||

| + | size_t n = 50000; | ||

| + | steady_clock::time_point ts, te; | ||

| + | |||

| + | ts = steady_clock::now(); | ||

| + | #pragma omp parallel for reduction(+:sum) | ||

| + | for (int i = 0; i <= n; i++) | ||

| + | sum += i; | ||

| + | te = steady_clock::now(); | ||

| + | |||

| + | std::cout << "sum: " << sum << std::endl; | ||

| + | auto ms = duration_cast<milliseconds>(te - ts); | ||

| + | std::cout << std::endl << "Took - " << | ||

| + | ms.count() << " milliseconds" << std::endl; | ||

| + | std::getchar(); | ||

| + | return 0; | ||

| + | }</nowiki> | ||

| + | ===C++ Threads=== | ||

| + | <nowiki>#include <iostream> | ||

| + | #include <thread> | ||

| + | #include <mutex> | ||

| + | #include <chrono> | ||

| + | #include <algorithm> | ||

| + | |||

| + | using namespace std::chrono; | ||

| + | |||

| + | static const int num_threads = 10; | ||

| + | size_t sum = 0; | ||

| + | std::mutex m; | ||

| + | |||

| + | int main() { | ||

| + | std::thread t[num_threads]; | ||

| + | size_t n = 10000000; | ||

| + | steady_clock::time_point ts, te; | ||

| + | |||

| + | //Launch a group of threads | ||

| + | ts = steady_clock::now(); | ||

| + | for (int i = 0; i < num_threads; ++i) { | ||

| + | t[i] = std::thread(std::bind([](int start, int end) {m.lock(); | ||

| + | std::cout << "start: " << start << " end: " << end << std::endl; | ||

| + | for (int i = start; i <= end; i++) | ||

| + | sum += i; | ||

| + | m.unlock(); }, i*n/num_threads, i+1 == num_threads ? n : (i+1)*n/num_threads)); | ||

| + | } | ||

| + | te = steady_clock::now(); | ||

| + | |||

| + | //Join the threads with the main thread | ||

| + | for (int i = 0; i < num_threads; ++i) { | ||

| + | t[i].join(); | ||

| + | } | ||

| + | |||

| + | std::cout << sum << std::endl; | ||

| + | auto ms = duration_cast<milliseconds>(te - ts); | ||

| + | std::cout << std::endl << "Took - " << | ||

| + | ms.count() << " milliseconds" << std::endl; | ||

| + | |||

| + | std::getchar(); | ||

| + | return 0; | ||

| + | }</nowiki> | ||

| + | |||

| + | ===Performance Comparison=== | ||

| + | Per core speedup of the multi-threaded vs sequential version of the same code (higher is faster) | ||

| + | |||

| + | [[File:speedup.png]] | ||

Latest revision as of 23:03, 17 December 2017

Contents

Game of Threads

Our project: C++11 Threads Library Comparison to OpenMP - Case Studies

Group Members

C++11 Threads

The Thread library is a very recent inclusion to C++. The C++ language has undergone major changes since its birth and has only included the Thread library in C++ 11 standard. The C++ 11 Standard Library supports multithreading and concurrency both as an inherent part of the memory model. The library has been designed to support the following: The new memory model Some atomic operations library for direct control over individual bits and bytes. These atomic types and the corresponding operations become more portable and each developer can use it, without the need to adopt platform-specific. Inter-thread synchronization Before the C++ 11 standard, thread usage and creation required the POSIX pthread (<pthread>) library.

Using the C++11 thread library, parallelism can be achieved by creating threads and using the thread library provided by the standard library. Along with the <thread> library, there is 4 supporting libraries that can be used to support and perform parallelisation.

Components

Threads <thread>

- Defined in header <thread>

- C++ includes built-in support for threads, mutual exclusion, condition variables, and futures.

- Threads enable programs to execute across several processor cores.

- The class thread represents a single thread of execution. Threads allow multiple functions to execute concurrently.

- Threads begin execution immediately upon construction of the associated thread object (pending any OS scheduling delays), starting at the top-level function provided as a constructor argument.

- The return value of the top-level function is ignored and if it terminates by throwing an exception, std::terminate is called. The top-level function may communicate its return value or an exception to the caller via std::promise or by modifying shared variables (which may require synchronization, std::mutex and std::atomic)

- std::thread objects may also be in the state that does not represent any thread (after default construction, move from, detach, or join), and a thread of execution may be not associated with any thread objects (after detach).

- No two std::thread objects may represent the same thread of execution; std::thread is not CopyConstructible or CopyAssignable, although it is MoveConstructible and MoveAssignable.

Mutual Exclusion <mutex>

- Defined in header <mutex>

- Mutual exclusion algorithms prevent multiple threads from simultaneously accessing shared resources. This prevents data races and provides support for synchronization between threads.

Condition variables <condition_variable>

- Defined in header <condition_variable>

- A condition variable is a synchronization primitive that allows multiple threads to communicate with each other.

- A condition variable allows some number of threads to wait (possibly with a timeout) for notification from another thread that they may proceed. A condition variable is always associated with a mutex.

Futures <future>

- Defined in header <future>

- The future library provides facilities to obtain values that are returned and to catch exceptions that are thrown by asynchronous tasks (i.e. functions launched in separate threads).

- Returned and captured values are communicated in a shared state, in which the asynchronous task may write its return value or store an exception, and which may be examined, waited for, and otherwise manipulated by other threads that hold instances of std::future or std::shared_future that reference that shared state.

OpenMP

Background

The OpenMP library was created as a solution for parallel programming in C++. Many approaches were considered for the OpenMP library. A pure library approach was initially considered as an alternative for what eventually became OpenMP. Two factors led to rejection of a library-only methodology. First, it is far easier to write portable code using directives because they are automatically ignored by a compiler that does not support OpenMP. Second, since directives are recognized and processed by a compiler, they offer opportunities for compiler-based optimizations. Likewise, a pure directive approach is difficult as well: some necessary functionality is quite awkward to express through directives and ends up looking like executable code in directive syntax. Therefore, a small API defined by a mixture of directives and some simple library calls was chosen.

Concerning native C++ alternative of OpenMP

Pthreads (and by extension, threads) is an accepted standard for shared memory in the low end. However it is not targeted at the technical or high-performance computing (HPC) spaces. For many HPC class C and C++ language-based applications, the Pthreads model is lower level and awkward, being more suitable for task parallelism rather than data parallelism. Portability with Pthreads, as with any standard, requires that the target platform provide a standard-conforming implementation of Pthreads.

Parallel Control Structures

- Control structures are constructs that alter the flow of control in a program. The basic execution model for OpenMP a fork/join model, and the parallel control structures are those constructs that fork (i.e., start) new threads, or give execution control to one or another set of threads.

- OpenMP provides two kinds of constructs for controlling parallelism.

- First, it provides a directive to create multiple threads of execution that execute concurrently with each other. The only instance of this is the parallel directive: it encloses a block of code and creates a set of threads that each execute this block of code concurrently.

- Second, OpenMP provides constructs to divide work among an existing set of parallel threads. An instance of this is the do directive, used for exploiting loop-level parallelism. It divides the iterations of a loop among multiple concurrently executing threads. We present examples of each of these directives in later sections.

Communication and Data Environment

- An OpenMP program always begins with a single thread of control that has associated with it an execution context or data environment (we will use the two terms interchangeably).

- This initial thread of control is referred to as the master thread. The execution context for a thread is the data address space containing all the variables specified in the program.

- This includes global variables, automatic variables within subroutines (i.e., allocated on the stack), as well as dynamically allocated variables (i.e., allocated on the heap).

- The master thread and its execution context exist for the duration of the entire program. When the master thread encounters a parallel construct, new threads of execution are created along with an execution context for each thread.

- Each thread has its own stack within its execution context. This private stack is used for stack frames for subroutines invoked by that thread. As a result, multiple threads may individually invoke subroutines and execute safely without interfering with the stack frames of other threads.

Synchronization

- Multiple OpenMP threads communicate with each other through ordinary reads and writes to shared variables. However, it is often necessary to coordinate the access to these shared variables across multiple threads.

- Without any coordination between threads, it is possible that multiple threads may simultaneously attempt to modify the same variable, or that one thread may try to read a variable even as another thread is modifying that same variable.

- Conflicting accesses can potentially lead to incorrect data values and must be avoided by explicit coordination between multiple threads. The term synchronization refers to the mechanisms by which a parallel program can coordinate the execution of multiple threads.

- The two most common forms of synchronization are mutual exclusion and event synchronization:

- Mutual exclusion: is a construct is used to control access to a shared variable by providing a thread exclusive access to a shared variable for the duration of the construct. When multiple threads are modifying the same variable, acquiring exclusive access to the variable before modifying it ensures the integrity of that variable. OpenMP provides mutual exclusion through a critical directive.

- Event synchronization: is a signal that the occurrence of an event across multiple threads. A barrier directive in a parallel program defines a point where each thread waits for all other threads to arrive.

OpenMP and C++ Comparisons

| OpenMP | Hand Threaded C++ | |

| Code Portability |

|

|

| Codability |

|

|

| Data sharing |

|

|

| Speed |

|

|

Code examples

Example of loop decompositin

Classic threads

Size = last_index/nb_threads;

First = MY_TID * size;

Last = (MY_TID != nb_threads-1) ? first + size : last_index;

For(i=first; i < last; I ++)

Array[i] = … ;

OpenMP

#pragma omp parallel for For(i=0; i < last_index; i++) Array[i] = …;

Sample program in OpenMP and C++ threads

OpenMP

#include <chrono>

#include <algorithm>

#include <iostream>

#include <omp.h>

using namespace std::chrono;

static const int num_threads = omp_get_max_threads();

size_t sum = 0;

int main() {

omp_set_num_threads(num_threads);

size_t n = 50000;

steady_clock::time_point ts, te;

ts = steady_clock::now();

#pragma omp parallel for reduction(+:sum)

for (int i = 0; i <= n; i++)

sum += i;

te = steady_clock::now();

std::cout << "sum: " << sum << std::endl;

auto ms = duration_cast<milliseconds>(te - ts);

std::cout << std::endl << "Took - " <<

ms.count() << " milliseconds" << std::endl;

std::getchar();

return 0;

}

C++ Threads

#include <iostream>

#include <thread>

#include <mutex>

#include <chrono>

#include <algorithm>

using namespace std::chrono;

static const int num_threads = 10;

size_t sum = 0;

std::mutex m;

int main() {

std::thread t[num_threads];

size_t n = 10000000;

steady_clock::time_point ts, te;

//Launch a group of threads

ts = steady_clock::now();

for (int i = 0; i < num_threads; ++i) {

t[i] = std::thread(std::bind([](int start, int end) {m.lock();

std::cout << "start: " << start << " end: " << end << std::endl;

for (int i = start; i <= end; i++)

sum += i;

m.unlock(); }, i*n/num_threads, i+1 == num_threads ? n : (i+1)*n/num_threads));

}

te = steady_clock::now();

//Join the threads with the main thread

for (int i = 0; i < num_threads; ++i) {

t[i].join();

}

std::cout << sum << std::endl;

auto ms = duration_cast<milliseconds>(te - ts);

std::cout << std::endl << "Took - " <<

ms.count() << " milliseconds" << std::endl;

std::getchar();

return 0;

}

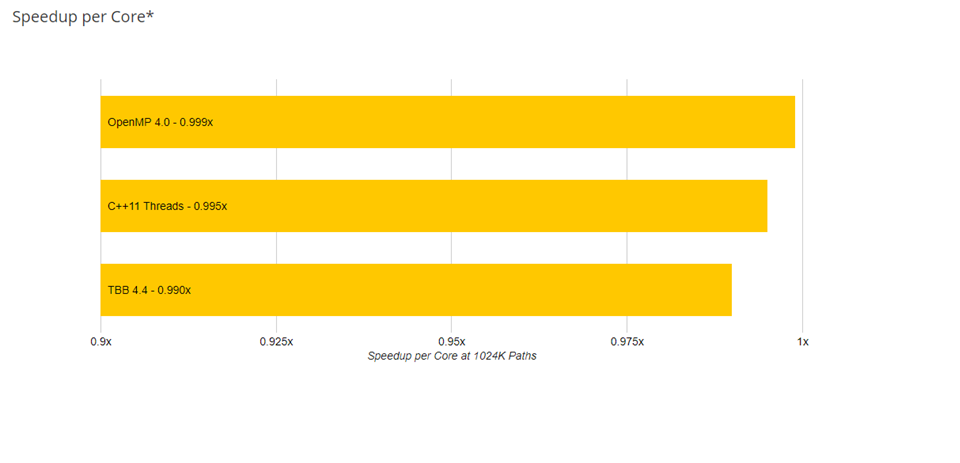

Performance Comparison

Per core speedup of the multi-threaded vs sequential version of the same code (higher is faster)