Difference between revisions of "BETTERRED"

Jkraitberg (talk | contribs) |

(→Code) |

||

| (36 intermediate revisions by 3 users not shown) | |||

| Line 23: | Line 23: | ||

The program can then be executed by running the compiled binary and it will display the time it took to generate the Mandelbrot set and save the pictures. | The program can then be executed by running the compiled binary and it will display the time it took to generate the Mandelbrot set and save the pictures. | ||

| − | == | + | {| class="wikitable mw-collapsible mw-collapsed" |

| + | ! Mandelbrot CPU( ... ) | ||

| + | |- | ||

| + | | | ||

| + | <syntaxhighlight lang="cpp"> | ||

| + | #include <iostream> | ||

| + | #include <complex> | ||

| + | #include <vector> | ||

| + | #include <chrono> | ||

| + | #include <functional> | ||

| − | + | #include "window.h" | |

| + | #include "save_image.h" | ||

| + | #include "utils.h" | ||

| − | + | // clang++ -std=c++11 -stdlib=libc++ -O3 save_image.cpp utils.cpp mandel.cpp -lfreeimage | |

| + | // Use an alias to simplify the use of complex type | ||

| + | using Complex = std::complex<float>; | ||

| − | + | // Convert a pixel coordinate to the complex domain | |

| + | Complex scale(window<int> &scr, window<float> &fr, Complex c) { | ||

| + | Complex aux(c.real() / (float)scr.width() * fr.width() + fr.x_min(), | ||

| + | c.imag() / (float)scr.height() * fr.height() + fr.y_min()); | ||

| + | return aux; | ||

| + | } | ||

| − | + | // Check if a point is in the set or escapes to infinity, return the number if iterations | |

| + | int escape(Complex c, int iter_max, const std::function<Complex( Complex, Complex)> &func) { | ||

| + | Complex z(0); | ||

| + | int iter = 0; | ||

| + | while (abs(z) < 2.0 && iter < iter_max) { | ||

| + | z = func(z, c); | ||

| + | iter++; | ||

| + | } | ||

| + | return iter; | ||

| + | } | ||

| − | === | + | // Loop over each pixel from our image and check if the points associated with this pixel escape to infinity |

| + | void get_number_iterations(window<int> &scr, window<float> &fract, int iter_max, std::vector<int> &colors, | ||

| + | const std::function<Complex( Complex, Complex)> &func) { | ||

| + | int k = 0, progress = -1; | ||

| + | for(int i = scr.y_min(); i < scr.y_max(); ++i) { | ||

| + | for(int j = scr.x_min(); j < scr.x_max(); ++j) { | ||

| + | Complex c((float)j, (float)i); | ||

| + | c = scale(scr, fract, c); | ||

| + | colors[k] = escape(c, iter_max, func); | ||

| + | k++; | ||

| + | } | ||

| + | if(progress < (int)(i*100.0/scr.y_max())){ | ||

| + | progress = (int)(i*100.0/scr.y_max()); | ||

| + | std::cout << progress << "%\n"; | ||

| + | } | ||

| + | } | ||

| + | } | ||

| − | + | void fractal(window<int> &scr, window<float> &fract, int iter_max, std::vector<int> &colors, | |

| + | const std::function<Complex( Complex, Complex)> &func, const char *fname, bool smooth_color) { | ||

| + | auto start = std::chrono::steady_clock::now(); | ||

| + | get_number_iterations(scr, fract, iter_max, colors, func); | ||

| + | auto end = std::chrono::steady_clock::now(); | ||

| + | std::cout << "Time to generate " << fname << " = " << std::chrono::duration <float, std::milli> (end - start).count() << " [ms]" << std::endl; | ||

| − | + | // Save (show) the result as an image | |

| + | plot(scr, colors, iter_max, fname, smooth_color); | ||

| + | } | ||

| − | -- | + | void mandelbrot() { |

| + | // Define the size of the image | ||

| + | window<int> scr(0, 1000, 0, 1000); | ||

| + | // The domain in which we test for points | ||

| + | window<float> fract(-2.2, 1.2, -1.7, 1.7); | ||

| − | + | // The function used to calculate the fractal | |

| + | auto func = [] (Complex z, Complex c) -> Complex {return z * z + c; }; | ||

| − | + | int iter_max = 500; | |

| + | const char *fname = "mandelbrot.png"; | ||

| + | bool smooth_color = true; | ||

| + | std::vector<int> colors(scr.size()); | ||

| − | + | // Experimental zoom (bugs ?). This will modify the fract window (the domain in which we calculate the fractal function) | |

| + | //zoom(1.0, -1.225, -1.22, 0.15, 0.16, fract); //Z2 | ||

| + | |||

| + | fractal(scr, fract, iter_max, colors, func, fname, smooth_color); | ||

| + | } | ||

| − | + | void triple_mandelbrot() { | |

| + | // Define the size of the image | ||

| + | window<int> scr(0, 2000, 0, 2000); | ||

| + | // The domain in which we test for points | ||

| + | window<float> fract(-1.5, 1.5, -1.5, 1.5); | ||

| − | + | // The function used to calculate the fractal | |

| + | auto func = [] (Complex z, Complex c) -> Complex {return z * z * z + c; }; | ||

| − | + | int iter_max = 500; | |

| + | const char *fname = "triple_mandelbrot.png"; | ||

| + | bool smooth_color = true; | ||

| + | std::vector<int> colors(scr.size()); | ||

| + | |||

| + | fractal(scr, fract, iter_max, colors, func, fname, smooth_color); | ||

| + | } | ||

| + | |||

| + | int main() { | ||

| + | |||

| + | mandelbrot(); | ||

| + | // triple_mandelbrot(); | ||

| + | |||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | </syntaxhighlight> | ||

| + | |} | ||

=== Observations === | === Observations === | ||

| − | The program | + | The program takes a significant amount of time to run as the calculations are being done on the CPU. There are nested loops present within the program that can be parallelized to make the program faster. |

| + | |||

| + | The code also has the size of the image and the iterations hard-coded which can be modified to make the program significantly longer to process and make it tough on the GPU's for benchmarking and stability testing by running the process in a loop. The code is relatively straight forward and the parallelization should also be easy to implement and test. | ||

| + | |||

=== Hotspot === | === Hotspot === | ||

| − | + | Hotspot for the program was found in the fractal() function which calls the get_iterations() function that contains 2-nested for loops and a call to escape() which contains a while loop. Profiling the runtime with Instruments on OSX displayed that the fractal() function took up the most amount of runtime and this is the function that will be parallelized using CUDA. Once the function is parallelized, the iterations and size of the image can be increased in order to make the computation relatively stressful on the GPU to get a benchmark or looped in order to do stress testing for GPUs. | |

| − | |||

| − | + | === Profiling Data Screenshots === | |

| − | |||

| − | |||

| − | |||

| − | + | Profile - [https://drive.google.com/open?id=0B2Y_atB3DptbUG5oRWMyUGNQdlU Profile] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | Hotspot Code - [https://drive.google.com/open?id=0B2Y_atB3DptbRlhCUTNyeEFDbEk Hotspot Code] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ---- | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | == Introduction : GPU Benchmarking/Testing for NBody : Joshua Kraitberg == | |

| − | + | This program uses Newtonian mechanics and a four-order symplectic Candy-Rozmus integration (a symplectic algorithm guarantees exact conservation of energy and angular momentum). The initial conditions are obtained from JPL Horizons, ahd constants (like masses, gravitational constant) are those recommended by the International Astronomical Union. The program currently does not take into account effects like general relativity, the non-spherical shapes of celestial objects, tidal effects on Earth, etc. It also does not take the 500 asteroids used by JPL Horizons into accound in its model of the Solar System. | |

| − | + | ||

| − | + | [https://github.com/fding/nbody Source] | |

| − | + | ||

| + | === Compilation Instructions: === | ||

| + | |||

| + | For Unix/Linux based systems: | ||

| + | |||

| + | g++ -std=c++11 c++/nbody.cpp | ||

| + | |||

| + | === Observations === | ||

| + | |||

| + | The program is quite fast for being a single-threaded CPU application. Almost all the CPU time is spent manipulating data and iterating in vectors. | ||

| + | |||

| + | === Hotspot === | ||

| + | Essentially all the time spent running is spent in the doing calculation on vectors. The dowork function iteratively calls the CRO_step function found in integrators.h file. The CRO_step function is where most of the vector calculations take place. A large amount of is also done in the calculate_a function which is used to calulate the acceleration on all the planets. | ||

| − | + | === Profiling Data and Screenshots === | |

| − | + | {| class="wikitable mw-collapsible mw-collapsed" | |

| − | + | ! NBody Hot Functions | |

| − | + | |- | |

| − | + | | | |

| − | + | ||

| − | + | <syntaxhighlight lang="cpp"> | |

| − | + | void dowork(double t){ | |

| − | + | int numtimes=int(abs(t/dt)); | |

| − | + | dt=t/double(numtimes+1); | |

| − | + | numtimes=numtimes+1; | |

| − | + | for (int i=0;i<numtimes;i++){ | |

| − | + | CRO_step(dt,a); | |

| − | + | } | |

| − | + | } | |

| − | + | ||

| − | + | void CRO_step(register double mydt,void (*a)()){ | |

| − | + | long double macr_a[4] = {0.5153528374311229364, -0.085782019412973646,0.4415830236164665242, 0.1288461583653841854}; | |

| − | + | long double macr_b[4] = {0.1344961992774310892, -0.2248198030794208058, 0.7563200005156682911, 0.3340036032863214255}; | |

| − | + | for (int i=0;i<4;i++){ | |

| − | 0.00 | + | a(); |

| − | 0.00 0. | + | for (int j=0;j<ncobjects;j++){ |

| − | 0.00 0.00 1/1 | + | cobjects[j]->v += cobjects[j]->a * mydt*macr_b[i]; |

| − | 0.00 0.00 1/1 | + | cobjects[j]->pos += cobjects[j]->v * mydt*macr_a[i]; |

| − | 0.00 0.00 | + | } |

| − | 0.00 0.00 | + | } //We should really expand the loop for efficiency |

| − | 0.00 0.00 | + | } |

| − | 0.00 0.00 | + | |

| − | 0.00 0.00 | + | void calculate_a(){ |

| − | + | for (int j1=0;j1<ncobjects;j1++){ | |

| − | + | cobjects[j1]->a=vect(0,0,0); | |

| − | + | } | |

| − | + | for (int j1=0; j1<ncobjects;j1++){ | |

| − | 0. | + | for (int j2=j1+1;j2<ncobjects;j2++){ |

| − | 0. | + | double m1=cobjects[j1]->m; |

| − | + | double m2=cobjects[j2]->m; | |

| − | 0.00 | + | vect dist=cobjects[j1]->pos-cobjects[j2]->pos; |

| − | + | double magd=dist.mag(); | |

| − | 0. | + | vect base=dist*(1.0/(magd*magd*magd)); |

| − | 0.00 0.00 1/1 std:: | + | cobjects[j1]->a+=base*(-m2); |

| − | + | cobjects[j2]->a+=base*m1; | |

| − | + | } | |

| − | + | } | |

| − | + | } | |

| − | 0. | + | </syntaxhighlight> |

| − | 0. | + | |

| − | + | |} | |

| − | + | ||

| − | + | {| class="wikitable mw-collapsible mw-collapsed" | |

| − | + | ! NBody Hot Spot Data | |

| − | + | |- | |

| − | + | | Call graph (explanation follows) | |

| − | + | ||

| − | + | ||

| − | + | granularity: each sample hit covers 4 byte(s) for 0.16% of 6.18 seconds | |

| − | + | ||

| − | + | index % time self children called name | |

| − | + | <spontaneous> | |

| − | + | [1] 99.7 0.00 6.16 main [1] | |

| − | + | 0.00 6.15 1/1 dowork(double) [3] | |

| − | + | 0.00 0.01 1/1 totalL() [14] | |

| − | + | 0.00 0.00 1/1 totalE() [16] | |

| − | + | 0.00 0.00 1/1 initialize() [17] | |

| − | + | 0.00 0.00 28/32712799 vect::operator-(vect const&) [8] | |

| − | + | 0.00 0.00 14/118268959 vect::operator*(double const&) [5] | |

| − | + | 0.00 0.00 14/5032775 vect::operator=(vect const&) [11] | |

| − | + | 0.00 0.00 42/42 std::vector<int, std::allocator<int> >::operator[](unsigned int) [22] | |

| − | + | 0.00 0.00 16/16 bool std::operator==<char, std::char_traits<char>, std::allocator<char> >(std::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, char const*) [33] | |

| − | + | 0.00 0.00 15/35 std::vector<int, std::allocator<int> >::size() const [23] | |

| − | + | 0.00 0.00 14/14 std::vector<int, std::allocator<int> >::push_back(int const&) [39] | |

| − | + | 0.00 0.00 14/14 getobj(int) [36] | |

| − | + | 0.00 0.00 3/3 std::vector<double, std::allocator<double> >::operator[](unsigned int) [90] | |

| − | + | 0.00 0.00 2/2 print_hline() [94] | |

| − | + | 0.00 0.00 2/10 std::vector<double, std::allocator<double> >::size() const [45] | |

| − | + | 0.00 0.00 1/1 std::ios_base::precision(int) [146] | |

| − | + | 0.00 0.00 1/1 std::vector<double, std::allocator<double> >::vector() [142] | |

| − | + | 0.00 0.00 1/1 std::vector<int, std::allocator<int> >::vector() [144] | |

| − | + | 0.00 0.00 1/1 std::vector<double, std::allocator<double> >::push_back(double const&) [141] | |

| + | 0.00 0.00 1/1 std::vector<std::string, std::allocator<std::string> >::vector() [135] | ||

| + | 0.00 0.00 1/1 std::vector<std::string, std::allocator<std::string> >::~vector() [136] | ||

| + | 0.00 0.00 1/1 JD(tm*) [103] | ||

| + | 0.00 0.00 1/1 std::vector<double, std::allocator<double> >::push_back(double&&) [140] | ||

| + | 0.00 0.00 1/1 std::vector<int, std::allocator<int> >::~vector() [145] | ||

| + | 0.00 0.00 1/1 std::vector<double, std::allocator<double> >::~vector() [143] | ||

----------------------------------------------- | ----------------------------------------------- | ||

| − | 0. | + | 0.14 6.01 89870/89870 dowork(double) [3] |

| − | + | [2] 99.6 0.14 6.01 89870 CRO_step(double, void (*)()) [2] | |

| − | 0. | + | 1.18 4.22 359480/359480 calculate_a() [4] |

| − | + | 0.20 0.29 20130880/118268959 vect::operator*(double const&) [5] | |

| + | 0.12 0.00 10065440/75490814 vect::operator+=(vect const&) [7] | ||

----------------------------------------------- | ----------------------------------------------- | ||

| − | + | 0.00 6.15 1/1 main [1] | |

| − | [ | + | [3] 99.6 0.00 6.15 1 dowork(double) [3] |

| + | 0.14 6.01 89870/89870 CRO_step(double, void (*)()) [2] | ||

| + | 0.00 0.00 1/1 std::abs(double) [147] | ||

----------------------------------------------- | ----------------------------------------------- | ||

| − | 0. | + | 1.18 4.22 359480/359480 CRO_step(double, void (*)()) [2] |

| − | 0. | + | [4] 87.5 1.18 4.22 359480 calculate_a() [4] |

| − | 0. | + | 1.00 1.39 98138040/118268959 vect::operator*(double const&) [5] |

| − | + | 0.78 0.00 65425360/75490814 vect::operator+=(vect const&) [7] | |

| + | 0.26 0.37 32712680/32712799 vect::operator-(vect const&) [8] | ||

| + | 0.32 0.00 32712680/32712785 vect::mag() [10] | ||

| + | 0.08 0.00 5032720/5032775 vect::operator=(vect const&) [11] | ||

| + | 0.01 0.00 5032720/5032775 vect::vect(double, double, double) [13] | ||

----------------------------------------------- | ----------------------------------------------- | ||

| − | 0.00 0. | + | 0.00 0.00 11/118268959 initialize() [17] |

| − | + | 0.00 0.00 14/118268959 main [1] | |

| − | 0. | + | 0.00 0.00 14/118268959 totalL() [14] |

| − | 0. | + | 0.20 0.29 20130880/118268959 CRO_step(double, void (*)()) [2] |

| − | + | 1.00 1.39 98138040/118268959 calculate_a() [4] | |

| − | + | [5] 46.5 1.20 1.67 118268959 vect::operator*(double const&) [5] | |

| + | 1.67 0.00 118268959/118268959 vect::operator*=(double const&) [6] | ||

----------------------------------------------- | ----------------------------------------------- | ||

| − | 0. | + | 1.67 0.00 118268959/118268959 vect::operator*(double const&) [5] |

| − | [ | + | [6] 27.1 1.67 0.00 118268959 vect::operator*=(double const&) [6] |

| − | 0. | + | ----------------------------------------------- |

| − | + | 0.00 0.00 14/75490814 totalL() [14] | |

| − | + | 0.12 0.00 10065440/75490814 CRO_step(double, void (*)()) [2] | |

| − | + | 0.78 0.00 65425360/75490814 calculate_a() [4] | |

| − | + | [7] 14.6 0.91 0.00 75490814 vect::operator+=(vect const&) [7] | |

| − | + | ----------------------------------------------- | |

| − | + | 0.00 0.00 28/32712799 main [1] | |

| − | + | 0.00 0.00 91/32712799 totalE() [16] | |

| − | + | 0.26 0.37 32712680/32712799 calculate_a() [4] | |

| − | + | [8] 10.4 0.27 0.38 32712799 vect::operator-(vect const&) [8] | |

| − | + | 0.38 0.00 32712799/32712799 vect::operator-=(vect const&) [9] | |

| − | + | ----------------------------------------------- | |

| − | <spontaneous> | + | 0.38 0.00 32712799/32712799 vect::operator-(vect const&) [8] |

| − | [ | + | [9] 6.1 0.38 0.00 32712799 vect::operator-=(vect const&) [9] |

| − | 0.00 | + | ----------------------------------------------- |

| − | 0.00 0. | + | 0.00 0.00 105/32712785 totalE() [16] |

| − | 0.00 0.00 | + | 0.32 0.00 32712680/32712785 calculate_a() [4] |

| − | 0.00 0. | + | [10] 5.2 0.32 0.00 32712785 vect::mag() [10] |

| − | 0. | + | ----------------------------------------------- |

| + | 0.00 0.00 14/5032775 main [1] | ||

| + | 0.00 0.00 41/5032775 initialize() [17] | ||

| + | 0.08 0.00 5032720/5032775 calculate_a() [4] | ||

| + | [11] 1.4 0.08 0.00 5032775 vect::operator=(vect const&) [11] | ||

| + | ----------------------------------------------- | ||

| + | <spontaneous> | ||

| + | [12] 0.3 0.02 0.00 vect::operator+(vect const&) [12] | ||

| + | ----------------------------------------------- | ||

| + | 0.00 0.00 14/5032775 cross(vect const&, vect const&) [15] | ||

| + | 0.00 0.00 41/5032775 initialize() [17] | ||

| + | 0.01 0.00 5032720/5032775 calculate_a() [4] | ||

| + | [13] 0.2 0.01 0.00 5032775 vect::vect(double, double, double) [13] | ||

| + | ----------------------------------------------- | ||

| + | 0.00 0.01 1/1 main [1] | ||

| + | [14] 0.1 0.00 0.01 1 totalL() [14] | ||

| + | 0.01 0.00 14/14 cross(vect const&, vect const&) [15] | ||

0.00 0.00 14/118268959 vect::operator*(double const&) [5] | 0.00 0.00 14/118268959 vect::operator*(double const&) [5] | ||

| − | 0.00 0.00 14/5032775 vect::operator=(vect const&) [11] | + | 0.00 0.00 14/75490814 vect::operator+=(vect const&) [7] |

| − | 0.00 0.00 42/42 std::vector<int, std::allocator<int> >::operator[](unsigned int) [22] | + | 0.00 0.00 1/85 vect::vect() [21] |

| − | 0.00 0.00 16/16 bool std::operator==<char, std::char_traits<char>, std::allocator<char> >(std::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, char const*) [33] | + | ----------------------------------------------- |

| − | 0.00 0.00 15/35 std::vector<int, std::allocator<int> >::size() const [23] | + | 0.01 0.00 14/14 totalL() [14] |

| − | 0.00 0.00 14/14 std::vector<int, std::allocator<int> >::push_back(int const&) [39] | + | [15] 0.1 0.01 0.00 14 cross(vect const&, vect const&) [15] |

| − | 0.00 0.00 14/14 getobj(int) [36] | + | 0.00 0.00 14/5032775 vect::vect(double, double, double) [13] |

| − | 0.00 0.00 3/3 std::vector<double, std::allocator<double> >::operator[](unsigned int) [90] | + | |} |

| + | |||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | ! NBody gprof Complete Data (Warning: long) | ||

| + | |- | ||

| + | | Call graph (explanation follows) | ||

| + | |||

| + | |||

| + | granularity: each sample hit covers 4 byte(s) for 0.16% of 6.18 seconds | ||

| + | |||

| + | index % time self children called name | ||

| + | <spontaneous> | ||

| + | [1] 99.7 0.00 6.16 main [1] | ||

| + | 0.00 6.15 1/1 dowork(double) [3] | ||

| + | 0.00 0.01 1/1 totalL() [14] | ||

| + | 0.00 0.00 1/1 totalE() [16] | ||

| + | 0.00 0.00 1/1 initialize() [17] | ||

| + | 0.00 0.00 28/32712799 vect::operator-(vect const&) [8] | ||

| + | 0.00 0.00 14/118268959 vect::operator*(double const&) [5] | ||

| + | 0.00 0.00 14/5032775 vect::operator=(vect const&) [11] | ||

| + | 0.00 0.00 42/42 std::vector<int, std::allocator<int> >::operator[](unsigned int) [22] | ||

| + | 0.00 0.00 16/16 bool std::operator==<char, std::char_traits<char>, std::allocator<char> >(std::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, char const*) [33] | ||

| + | 0.00 0.00 15/35 std::vector<int, std::allocator<int> >::size() const [23] | ||

| + | 0.00 0.00 14/14 std::vector<int, std::allocator<int> >::push_back(int const&) [39] | ||

| + | 0.00 0.00 14/14 getobj(int) [36] | ||

| + | 0.00 0.00 3/3 std::vector<double, std::allocator<double> >::operator[](unsigned int) [90] | ||

0.00 0.00 2/2 print_hline() [94] | 0.00 0.00 2/2 print_hline() [94] | ||

0.00 0.00 2/10 std::vector<double, std::allocator<double> >::size() const [45] | 0.00 0.00 2/10 std::vector<double, std::allocator<double> >::size() const [45] | ||

| Line 1,103: | Line 1,219: | ||

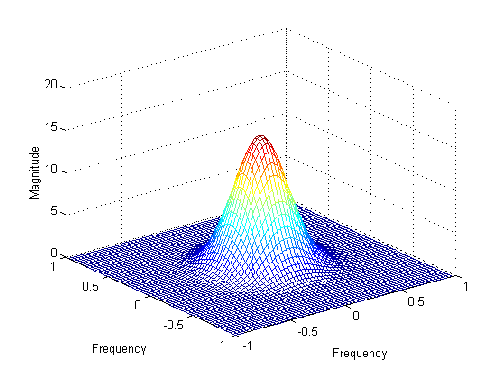

An even better way would be to integrate the Gaussian function instead of just taking point samples. Refer to the two graphs on the right.<br/> | An even better way would be to integrate the Gaussian function instead of just taking point samples. Refer to the two graphs on the right.<br/> | ||

| − | The graphs plot the continuous distribution function and the discrete kernel approximation. One thing to look out for are the tails of the distribution vs. kernel | + | The graphs plot the continuous distribution function and the discrete kernel approximation. One thing to look out for are the tails of the distribution vs. kernel weight:<br/> |

For the current configuration, we have 13.36% of the curve’s area outside the discrete kernel. Note that the weights are renormalized such that the sum of all weights is one. Or in other words:<br/> | For the current configuration, we have 13.36% of the curve’s area outside the discrete kernel. Note that the weights are renormalized such that the sum of all weights is one. Or in other words:<br/> | ||

the probability mass outside the discrete kernel is redistributed evenly to all pixels within the kernel. The weights are calculated by numerical integration of the continuous gaussian distribution<br/> | the probability mass outside the discrete kernel is redistributed evenly to all pixels within the kernel. The weights are calculated by numerical integration of the continuous gaussian distribution<br/> | ||

| Line 1,682: | Line 1,798: | ||

char *destFileName = argv[2]; | char *destFileName = argv[2]; | ||

| − | #endif /* | + | #endif /* RUN_GPROF */ |

if (showUsage) | if (showUsage) | ||

| Line 1,782: | Line 1,898: | ||

To compile and run the program: | To compile and run the program: | ||

# Navigate to the directory you want to run the program in. | # Navigate to the directory you want to run the program in. | ||

| − | # Save [http://matrix.senecac.on.ca/~cpaul12/cinque_terre.bmp this] image and place it directory you will be running the program from. | + | # Save [http://matrix.senecac.on.ca/~cpaul12/cinque_terre.bmp this] image and place it into the directory you will be running the program from. |

# Copy the Linux version of the main source code above and paste it into a [your chosen file name].cpp file. | # Copy the Linux version of the main source code above and paste it into a [your chosen file name].cpp file. | ||

# Copy the Linux version of the header source code above and paste it into a file named windows.h. | # Copy the Linux version of the header source code above and paste it into a file named windows.h. | ||

| Line 1,795: | Line 1,911: | ||

To compile and run the program: | To compile and run the program: | ||

# Navigate to the directory you want to run the program in. | # Navigate to the directory you want to run the program in. | ||

| − | # Save [http://matrix.senecac.on.ca/~cpaul12/cinque_terre.bmp this] image and place it directory you will be running the program from. | + | # Save [http://matrix.senecac.on.ca/~cpaul12/cinque_terre.bmp this] image and place it into the directory you will be running the program from. |

# Copy the Linux version of the main source code above and paste it into a [your chosen file name].cpp file. | # Copy the Linux version of the main source code above and paste it into a [your chosen file name].cpp file. | ||

# Copy the Linux version of the header source code above and paste it into a file named windows.h. | # Copy the Linux version of the header source code above and paste it into a file named windows.h. | ||

| Line 1,940: | Line 2,056: | ||

for parallelization using CUDA. The sigma (σ) and the kernel size can be increased in order to make the computation stressful on the GPU to get a significant benchmark. | for parallelization using CUDA. The sigma (σ) and the kernel size can be increased in order to make the computation stressful on the GPU to get a significant benchmark. | ||

| − | = Assignment 2 - Parallelize = | + | = Assignment 2/3 - Parallelize & Optimize = |

| + | * For gaussian blur we say it's unoptimized because we feel that there is more that can be done to reduce the execution times.<br/> | ||

| + | The code displayed in the code snippets does use CUDA parallel constructs and fine tuning techniques such as streaming - async. | ||

| + | == Gaussian Blur == | ||

{| class="wikitable mw-collapsible mw-collapsed" | {| class="wikitable mw-collapsible mw-collapsed" | ||

| − | ! | + | ! Unoptimized* - BlurImage( ... ) |

|- | |- | ||

| | | | ||

| Line 1,956: | Line 2,075: | ||

#include <windows.h> // for bitmap headers. | #include <windows.h> // for bitmap headers. | ||

#include <algorithm> | #include <algorithm> | ||

| + | #include <chrono> | ||

#include <cuda_runtime.h> | #include <cuda_runtime.h> | ||

| Line 1,962: | Line 2,082: | ||

#include <device_functions.h> | #include <device_functions.h> | ||

| − | //# | + | //#ifdef __CUDACC__ |

| − | + | //#if __CUDACC_VER_MAJOR__ == 1 | |

| − | //# | ||

//const int ntpb = 512; | //const int ntpb = 512; | ||

| + | //#else | ||

| + | //const int ntpb = 1024; | ||

| + | //#endif | ||

//#endif | //#endif | ||

| − | + | const int ntpb = 1024; | |

| − | const | + | const int STREAMS = 32; |

void check(cudaError_t error) { | void check(cudaError_t error) { | ||

| Line 2,118: | Line 2,240: | ||

} | } | ||

| − | + | struct BGRPixel { | |

| − | { | + | float b; |

| − | + | float g; | |

| − | + | float r; | |

| − | + | }; | |

| − | |||

| − | |||

| − | |||

| − | + | __global__ void blur_kernel(BGRPixel* imageIn, BGRPixel* imageOut, float* blur, int n_blur, int x, int start, int jump) { | |

| − | + | int idx = blockDim.x*blockIdx.x + threadIdx.x; // Location on the row | |

| − | + | if (idx < x) { | |

| − | + | int id = start + idx; | |

| − | + | int bstart = id - (n_blur / 2)*jump; | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | BGRPixel pixel{ 0.0f, 0.0f, 0.0f }; | |

| − | |||

| − | |||

| − | |||

| − | + | for (int i = 0; i < n_blur; ++i) { | |

| − | + | int bid = bstart + i*jump; | |

| − | + | float iblur = blur[i]; | |

| − | |||

| − | + | pixel.b += imageIn[bid].b * iblur; | |

| − | + | pixel.g += imageIn[bid].g * iblur; | |

| − | + | pixel.r += imageIn[bid].r * iblur; | |

| − | + | } | |

| − | + | imageOut[id].b = pixel.b; | |

| − | + | imageOut[id].g = pixel.g; | |

| − | + | imageOut[id].r = pixel.r; | |

| − | + | } | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

} | } | ||

void BlurImage(const SImageData& srcImage, SImageData &destImage, float xblursigma, float yblursigma, unsigned int xblursize, unsigned int yblursize) | void BlurImage(const SImageData& srcImage, SImageData &destImage, float xblursigma, float yblursigma, unsigned int xblursize, unsigned int yblursize) | ||

{ | { | ||

| − | + | int xImage = srcImage.m_width; // Width of image | |

| − | + | int yImage = srcImage.m_height; // Height of image | |

| − | float* | + | int imageSize = xImage*yImage; |

| + | |||

| + | int xPadded = xImage + (xblursize - 1); // Width including padding | ||

| + | int yPadded = yImage + (yblursize - 1); // Height including padding | ||

| + | int paddedSize = xPadded*yPadded; | ||

| + | |||

| + | int xPad = xblursize / 2; // Number of padding columns on each side | ||

| + | int yPad = yblursize / 2; | ||

| + | int padOffset = xPadded*yPad + xPad; // Offset to first pixel in padded image | ||

| + | |||

| + | float* pinnedImage = nullptr; | ||

| + | BGRPixel* d_padded1 = nullptr; | ||

| + | BGRPixel* d_padded2 = nullptr; | ||

| + | |||

| + | float* d_xblur = nullptr; // XBlur integrals | ||

| + | int n_xblur; // N | ||

| − | + | float* d_yblur = nullptr; // YBlur integrals | |

| − | int | + | int n_yblur; // N |

| − | + | // Allocate memory for host and device | |

| − | + | check(cudaHostAlloc((void**)&pinnedImage, 3 * imageSize * sizeof(float), 0)); | |

| + | check(cudaMalloc((void**)&d_padded1, paddedSize * sizeof(BGRPixel))); | ||

| + | check(cudaMalloc((void**)&d_padded2, paddedSize * sizeof(BGRPixel))); | ||

| − | + | // Copy image to pinned memory | |

| − | + | for (int i = 0; i < 3 * imageSize; ++i) { | |

| + | pinnedImage[i] = (float)srcImage.m_pixels[i]; | ||

| + | } | ||

| + | // Allocate and assign intergrals | ||

{ | { | ||

| − | + | auto row_blur = GaussianKernelIntegrals(xblursigma, xblursize); | |

| − | + | auto col_blur = GaussianKernelIntegrals(yblursigma, yblursize); | |

| − | + | ||

| − | + | // ROW | |

| + | n_xblur = row_blur.size(); | ||

| + | check(cudaMalloc((void**)&d_xblur, n_xblur * sizeof(float))); | ||

| + | check(cudaMemcpy(d_xblur, row_blur.data(), n_xblur * sizeof(float), cudaMemcpyHostToDevice)); | ||

| − | check(cudaMemcpy( | + | // COLUMN |

| + | n_yblur = col_blur.size(); | ||

| + | check(cudaMalloc((void**)&d_yblur, n_yblur * sizeof(float))); | ||

| + | check(cudaMemcpy(d_yblur, col_blur.data(), n_yblur * sizeof(float), cudaMemcpyHostToDevice)); | ||

} | } | ||

| − | |||

| − | |||

| − | |||

| − | + | cudaStream_t stream[STREAMS]; | |

| − | + | ||

| − | + | int nblks = (xImage + (ntpb - 1)) / ntpb; | |

| − | + | ||

| + | for (int i = 0; i < STREAMS; ++i) { | ||

| + | check(cudaStreamCreate(&stream[i])); | ||

| + | } | ||

| − | + | for (int i = 0; i < yImage;) { | |

| − | + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | |

| + | cudaMemcpyAsync(d_padded1 + padOffset + i*xPadded, pinnedImage + (3 * i*xImage), 3 * xImage * sizeof(float), cudaMemcpyHostToDevice, stream[j]); | ||

} | } | ||

| + | } | ||

| − | + | for (int i = 0; i < yImage;) { | |

| + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | ||

| + | blur_kernel << <nblks, ntpb, 0, stream[j] >> > (d_padded1, d_padded2, d_xblur, n_xblur, xImage, padOffset + i*xPadded, 1); | ||

| + | } | ||

| + | } | ||

| − | + | for (int i = 0; i < yImage;) { | |

| − | + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | |

| + | blur_kernel << <nblks, ntpb, 0, stream[j] >> > (d_padded2, d_padded1, d_yblur, n_yblur, xImage, padOffset + i*xPadded, xPadded); | ||

| + | } | ||

| + | } | ||

| − | check( | + | for (int i = 0; i < yImage;) { |

| + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | ||

| + | check(cudaMemcpyAsync(pinnedImage + (3 * i*xImage), d_padded1 + padOffset + i*xPadded, xImage * sizeof(BGRPixel), cudaMemcpyDeviceToHost, stream[j])); | ||

| + | } | ||

| + | } | ||

| + | |||

| + | for (int i = 0; i < STREAMS; ++i) { | ||

| + | check(cudaStreamSynchronize(stream[i])); | ||

| + | check(cudaStreamDestroy(stream[i])); | ||

} | } | ||

destImage.m_width = srcImage.m_width; | destImage.m_width = srcImage.m_width; | ||

destImage.m_height = srcImage.m_height; | destImage.m_height = srcImage.m_height; | ||

| − | destImage.m_pitch = srcImage.m_pitch; | + | destImage.m_pitch = srcImage.m_pitch; |

| − | destImage.m_pixels.resize | + | destImage.m_pixels.resize(srcImage.m_pixels.size()); |

| − | + | ||

| − | + | for (int i = 0; i < 3 * imageSize; i++) { | |

| − | + | destImage.m_pixels[i] = (uint8_t)pinnedImage[i]; | |

| − | + | }; | |

| − | + | ||

| − | + | check(cudaFree(d_xblur)); | |

| − | + | check(cudaFree(d_yblur)); | |

| − | + | ||

| − | + | check(cudaFreeHost(pinnedImage)); | |

| − | check(cudaFree( | + | check(cudaFree(d_padded1)); |

| − | check( | + | check(cudaFree(d_padded2)); |

| − | + | ||

| − | + | check(cudaDeviceReset()); | |

| − | + | } | |

| − | + | ||

| − | + | int main(int argc, char **argv) | |

| − | + | { | |

| − | + | float xblursigma, yblursigma; | |

| − | + | ||

| − | + | bool showUsage = argc < 5 || | |

| − | + | (sscanf(argv[3], "%f", &xblursigma) != 1) || | |

| − | + | (sscanf(argv[4], "%f", &yblursigma) != 1); | |

| − | + | ||

| − | // | + | char *srcFileName = argv[1]; |

| − | + | char *destFileName = argv[2]; | |

| − | + | ||

| − | + | if (showUsage) | |

| − | + | { | |

| − | + | printf("Usage: <source> <dest> <xblur> <yblur>\nBlur values are sigma\n\n"); | |

| − | + | WaitForEnter(); | |

| − | + | return 1; | |

| − | + | } | |

| − | + | ||

| − | + | // calculate pixel sizes, and make sure they are odd | |

| − | + | int xblursize = PixelsNeededForSigma(xblursigma) | 1; | |

| − | + | int yblursize = PixelsNeededForSigma(yblursigma) | 1; | |

| − | + | ||

| − | + | printf("Attempting to blur a 24 bit image.\n"); | |

| − | + | printf(" Source=%s\n Dest=%s\n blur=[%0.1f, %0.1f] px=[%d,%d]\n\n", srcFileName, destFileName, xblursigma, yblursigma, xblursize, yblursize); | |

| − | + | ||

| − | + | SImageData srcImage; | |

| − | + | if (LoadImage(srcFileName, srcImage)) | |

| − | + | { | |

| − | + | printf("%s loaded\n", srcFileName); | |

| − | + | SImageData destImage; | |

| − | + | ||

| − | + | auto t1 = std::chrono::high_resolution_clock::now(); | |

| − | + | BlurImage(srcImage, destImage, xblursigma, yblursigma, xblursize, yblursize); | |

| − | + | auto t2 = std::chrono::high_resolution_clock::now(); | |

| − | + | ||

| − | + | std::cout << "BlurImage time: " << std::chrono::duration_cast<std::chrono::microseconds>(t2 - t1).count() << "us" << std::endl; | |

| − | + | ||

| − | + | ||

| − | + | if (SaveImage(destFileName, destImage)) | |

| − | + | printf("Blurred image saved as %s\n", destFileName); | |

| − | + | else | |

| − | + | { | |

| − | + | printf("Could not save blurred image as %s\n", destFileName); | |

| − | + | WaitForEnter(); | |

| − | + | return 1; | |

| − | + | } | |

| − | + | } | |

| − | + | else | |

| − | + | { | |

| − | + | printf("could not read 24 bit bmp file %s\n\n", srcFileName); | |

| − | + | WaitForEnter(); | |

| − | + | return 1; | |

| − | + | } | |

| − | // | + | return 0; |

| − | + | } | |

| − | + | </syntaxhighlight> | |

| − | + | ||

| − | + | |} | |

| − | // | + | |

| − | + | == Objectives == | |

| − | + | The main objective was to not change the main function. This objective was met, although code had to be added for profiling. | |

| − | + | ||

| − | + | == Steps == | |

| − | + | === Host Memory Management === | |

| − | // | + | In the original program a bmp is loaded into an vector of uint8_t. This is not ideal for CUDA, therefore an array of pinned memory was allocated. This array contains the same amount of elements but stores them as a structure, "BGRPixel" which is three contiguous floats. The vector is then transferred over to pinned memory. |

| − | + | {| class="wikitable mw-collapsible mw-collapsed" | |

| − | } | + | ! Host Memory Management - Code( ... ) |

| + | |- | ||

| + | | | ||

| + | <syntaxhighlight lang="cpp"> | ||

| + | struct SImageData | ||

| + | { | ||

| + | SImageData() | ||

| + | : m_width(0) | ||

| + | , m_height(0) | ||

| + | { } | ||

| + | |||

| + | long m_width; | ||

| + | long m_height; | ||

| + | long m_pitch; | ||

| + | std::vector<uint8_t> m_pixels; | ||

| + | }; | ||

| + | |||

| + | struct BGRPixel { | ||

| + | float b; | ||

| + | float g; | ||

| + | float r; | ||

| + | }; | ||

| + | |||

| + | |||

| + | void BlurImage(const SImageData& srcImage, SImageData &destImage, float xblursigma, float yblursigma, unsigned int xblursize, unsigned int yblursize) | ||

| + | { | ||

| + | int xImage = srcImage.m_width; // Width of image | ||

| + | int yImage = srcImage.m_height; // Height of image | ||

| + | int imageSize = xImage*yImage; | ||

| + | |||

| + | int xPadded = xImage + (xblursize - 1); // Width including padding | ||

| + | int yPadded = yImage + (yblursize - 1); // Height including padding | ||

| + | int paddedSize = xPadded*yPadded; | ||

| + | |||

| + | int xPad = xblursize / 2; // Number of padding columns on each side | ||

| + | int yPad = yblursize / 2; | ||

| + | int padOffset = xPadded*yPad + xPad; // Offset to first pixel in padded image | ||

| + | |||

| + | float* pinnedImage = nullptr; | ||

| + | BGRPixel* d_padded1 = nullptr; | ||

| + | BGRPixel* d_padded2 = nullptr; | ||

| + | |||

| + | // ... | ||

| + | |||

| + | // Allocate memory for host and device | ||

| + | check(cudaHostAlloc((void**)&pinnedImage, 3 * imageSize * sizeof(float), 0)); | ||

| + | check(cudaMalloc((void**)&d_padded1, paddedSize * sizeof(BGRPixel))); | ||

| + | check(cudaMalloc((void**)&d_padded2, paddedSize * sizeof(BGRPixel))); | ||

| + | |||

| + | // Copy image to pinned memory | ||

| + | for (int i = 0; i < 3 * imageSize; ++i) { | ||

| + | pinnedImage[i] = (float)srcImage.m_pixels[i]; | ||

| + | } | ||

| + | |||

| + | // ... | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | |} | ||

| + | |||

| + | === Device Memory Management === | ||

| + | To get a blurred pixel the surrounding pixels must be sampled, in some cases this means sampling pixels outside the bounds of the image. In the original, a simple if check was used to determine if the pixel was outside the bounds or the image, if it was a black pixel was returned instead. This if statement most likely would have caused massive thread divergence in a kernel, therefore the images created in device memory featured additional padding of black pixels to compensate for this. Two such images were created, one to perform horizontal blur and one to perform vertical blur. Other small device arrays were also needed to store the Gaussian integrals that are used to produce the blurring effect.<br> | ||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | ! Padding example | ||

| + | |- | ||

| + | | | ||

| + | |||

| + | <div style="display:inline;"> | ||

| + | [[File:shrunk.png]] | ||

| + | </div> | ||

| + | <div style="display:inline;"> | ||

| + | [[File:pad.png]] | ||

| + | </div> | ||

| + | <br> | ||

| + | This is how the image would be padded for 3x3 sigma blur. | ||

| + | |||

| + | The original image is 2560x1600 -> 11.7MB | ||

| + | |||

| + | With blur sigmas [x = 3, y = 3] and conversion to float the padded images will be 2600x1640 -> 48.8MB | ||

| + | |||

| + | Increase of 4.1% pixels and with the conversion for uint8_t to float total increase of 317% in memory requirements on the GPU | ||

| + | |||

| + | Since two padded images are needed at least 97.6MB will be on the GPU | ||

| + | |||

| + | |} | ||

| + | |||

| + | === Host to Device === | ||

| + | To copy the pinned image to the device an array of streams was used to asynchronously copy each row of the image over. Doing so allowed the rows to be easily copied over while avoiding infringing on the extra padding pixels. | ||

| + | === Kernels === | ||

| + | First one image is blurred horizontally. One image is used as a reference while the other is written to. Kernels are also executed using the streams, so that each stream will blur a single row at a time. After the horizontal blur is finished the vertical blur is launched in the same manner, except that the previously written to image is used as a reference while the previous reference is now written to. The two blur are able to use the same kernel due to the fact that the pixel sampling technique works by iterating through pixels because of this the step size can be changed to sample across the row or down the column. | ||

| + | === Device to Host === | ||

| + | After that is done the image is copied back using the streams in the same way it was copied over. | ||

| + | === Code === | ||

| + | |||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | ! Unoptimized* - BlurImage -- Exert( ... ) | ||

| + | |- | ||

| + | | | ||

| + | <syntaxhighlight lang="cpp"> | ||

| + | const int ntpb = 1024; | ||

| + | const int STREAMS = 32; | ||

| + | |||

| + | void check(cudaError_t error) { | ||

| + | if (error != cudaSuccess) { | ||

| + | throw std::exception(cudaGetErrorString(error)); | ||

| + | } | ||

| + | } | ||

| + | |||

| + | struct SImageData | ||

| + | { | ||

| + | SImageData() | ||

| + | : m_width(0) | ||

| + | , m_height(0) | ||

| + | { } | ||

| + | |||

| + | long m_width; | ||

| + | long m_height; | ||

| + | long m_pitch; | ||

| + | std::vector<uint8_t> m_pixels; | ||

| + | }; | ||

| + | |||

| + | float Gaussian(float sigma, float x) | ||

| + | { | ||

| + | return expf(-(x*x) / (2.0f * sigma*sigma)); | ||

| + | } | ||

| + | |||

| + | float GaussianSimpsonIntegration(float sigma, float a, float b) | ||

| + | { | ||

| + | return | ||

| + | ((b - a) / 6.0f) * | ||

| + | (Gaussian(sigma, a) + 4.0f * Gaussian(sigma, (a + b) / 2.0f) + Gaussian(sigma, b)); | ||

| + | } | ||

| + | |||

| + | std::vector<float> GaussianKernelIntegrals(float sigma, int taps) | ||

| + | { | ||

| + | std::vector<float> ret; | ||

| + | float total = 0.0f; | ||

| + | for (int i = 0; i < taps; ++i) | ||

| + | { | ||

| + | float x = float(i) - float(taps / 2); | ||

| + | float value = GaussianSimpsonIntegration(sigma, x - 0.5f, x + 0.5f); | ||

| + | ret.push_back(value); | ||

| + | total += value; | ||

| + | } | ||

| + | // normalize it | ||

| + | for (unsigned int i = 0; i < ret.size(); ++i) | ||

| + | { | ||

| + | ret[i] /= total; | ||

| + | } | ||

| + | return ret; | ||

| + | } | ||

| + | |||

| + | struct BGRPixel { | ||

| + | float b; | ||

| + | float g; | ||

| + | float r; | ||

| + | }; | ||

| + | |||

| + | __global__ void blur_kernel(BGRPixel* imageIn, BGRPixel* imageOut, float* blur, int n_blur, int x, int start, int jump) { | ||

| + | int idx = blockDim.x*blockIdx.x + threadIdx.x; // Location on the row | ||

| + | |||

| + | if (idx < x) { | ||

| + | int id = start + idx; | ||

| + | int bstart = id - (n_blur / 2)*jump; | ||

| + | |||

| + | BGRPixel pixel{ 0.0f, 0.0f, 0.0f }; | ||

| + | |||

| + | for (int i = 0; i < n_blur; ++i) { | ||

| + | int bid = bstart + i*jump; | ||

| + | float iblur = blur[i]; | ||

| + | |||

| + | pixel.b += imageIn[bid].b * iblur; | ||

| + | pixel.g += imageIn[bid].g * iblur; | ||

| + | pixel.r += imageIn[bid].r * iblur; | ||

| + | } | ||

| + | |||

| + | imageOut[id].b = pixel.b; | ||

| + | imageOut[id].g = pixel.g; | ||

| + | imageOut[id].r = pixel.r; | ||

| + | } | ||

| + | } | ||

| + | |||

| + | void BlurImage(const SImageData& srcImage, SImageData &destImage, float xblursigma, float yblursigma, unsigned int xblursize, unsigned int yblursize) | ||

| + | { | ||

| + | int xImage = srcImage.m_width; // Width of image | ||

| + | int yImage = srcImage.m_height; // Height of image | ||

| + | int imageSize = xImage*yImage; | ||

| + | |||

| + | int xPadded = xImage + (xblursize - 1); // Width including padding | ||

| + | int yPadded = yImage + (yblursize - 1); // Height including padding | ||

| + | int paddedSize = xPadded*yPadded; | ||

| + | |||

| + | int xPad = xblursize / 2; // Number of padding columns on each side | ||

| + | int yPad = yblursize / 2; | ||

| + | int padOffset = xPadded*yPad + xPad; // Offset to first pixel in padded image | ||

| + | |||

| + | float* pinnedImage = nullptr; | ||

| + | BGRPixel* d_padded1 = nullptr; | ||

| + | BGRPixel* d_padded2 = nullptr; | ||

| + | |||

| + | float* d_xblur = nullptr; // XBlur integrals | ||

| + | int n_xblur; // N | ||

| + | |||

| + | float* d_yblur = nullptr; // YBlur integrals | ||

| + | int n_yblur; // N | ||

| + | |||

| + | // Allocate memory for host and device | ||

| + | check(cudaHostAlloc((void**)&pinnedImage, 3 * imageSize * sizeof(float), 0)); | ||

| + | check(cudaMalloc((void**)&d_padded1, paddedSize * sizeof(BGRPixel))); | ||

| + | check(cudaMalloc((void**)&d_padded2, paddedSize * sizeof(BGRPixel))); | ||

| + | |||

| + | // Copy image to pinned memory | ||

| + | for (int i = 0; i < 3 * imageSize; ++i) { | ||

| + | pinnedImage[i] = (float)srcImage.m_pixels[i]; | ||

| + | } | ||

| + | |||

| + | // Allocate and assign intergrals | ||

| + | { | ||

| + | auto row_blur = GaussianKernelIntegrals(xblursigma, xblursize); | ||

| + | auto col_blur = GaussianKernelIntegrals(yblursigma, yblursize); | ||

| + | |||

| + | // ROW | ||

| + | n_xblur = row_blur.size(); | ||

| + | check(cudaMalloc((void**)&d_xblur, n_xblur * sizeof(float))); | ||

| + | check(cudaMemcpy(d_xblur, row_blur.data(), n_xblur * sizeof(float), cudaMemcpyHostToDevice)); | ||

| + | |||

| + | // COLUMN | ||

| + | n_yblur = col_blur.size(); | ||

| + | check(cudaMalloc((void**)&d_yblur, n_yblur * sizeof(float))); | ||

| + | check(cudaMemcpy(d_yblur, col_blur.data(), n_yblur * sizeof(float), cudaMemcpyHostToDevice)); | ||

| + | } | ||

| + | |||

| + | |||

| + | cudaStream_t stream[STREAMS]; | ||

| + | |||

| + | int nblks = (xImage + (ntpb - 1)) / ntpb; | ||

| + | |||

| + | for (int i = 0; i < STREAMS; ++i) { | ||

| + | check(cudaStreamCreate(&stream[i])); | ||

| + | } | ||

| + | |||

| + | for (int i = 0; i < yImage;) { | ||

| + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | ||

| + | cudaMemcpyAsync(d_padded1 + padOffset + i*xPadded, pinnedImage + (3 * i*xImage), 3 * xImage * sizeof(float), cudaMemcpyHostToDevice, stream[j]); | ||

| + | } | ||

| + | } | ||

| + | |||

| + | for (int i = 0; i < yImage;) { | ||

| + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | ||

| + | blur_kernel << <nblks, ntpb, 0, stream[j] >> > (d_padded1, d_padded2, d_xblur, n_xblur, xImage, padOffset + i*xPadded, 1); | ||

| + | } | ||

| + | } | ||

| + | |||

| + | for (int i = 0; i < yImage;) { | ||

| + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | ||

| + | blur_kernel << <nblks, ntpb, 0, stream[j] >> > (d_padded2, d_padded1, d_yblur, n_yblur, xImage, padOffset + i*xPadded, xPadded); | ||

| + | } | ||

| + | } | ||

| + | |||

| + | for (int i = 0; i < yImage;) { | ||

| + | for (int j = 0; j < STREAMS && i < yImage; ++j, ++i) { | ||

| + | check(cudaMemcpyAsync(pinnedImage + (3 * i*xImage), d_padded1 + padOffset + i*xPadded, xImage * sizeof(BGRPixel), cudaMemcpyDeviceToHost, stream[j])); | ||

| + | } | ||

| + | } | ||

| + | |||

| + | for (int i = 0; i < STREAMS; ++i) { | ||

| + | check(cudaStreamSynchronize(stream[i])); | ||

| + | check(cudaStreamDestroy(stream[i])); | ||

| + | } | ||

| + | |||

| + | destImage.m_width = srcImage.m_width; | ||

| + | destImage.m_height = srcImage.m_height; | ||

| + | destImage.m_pitch = srcImage.m_pitch; | ||

| + | destImage.m_pixels.resize(srcImage.m_pixels.size()); | ||

| + | |||

| + | for (int i = 0; i < 3 * imageSize; i++) { | ||

| + | destImage.m_pixels[i] = (uint8_t)pinnedImage[i]; | ||

| + | }; | ||

| + | |||

| + | check(cudaFree(d_xblur)); | ||

| + | check(cudaFree(d_yblur)); | ||

| + | |||

| + | check(cudaFreeHost(pinnedImage)); | ||

| + | check(cudaFree(d_padded1)); | ||

| + | check(cudaFree(d_padded2)); | ||

| + | |||

| + | check(cudaDeviceReset()); | ||

| + | } | ||

| + | |||

| + | </syntaxhighlight> | ||

| + | |||

| + | |} | ||

| + | |||

| + | == Results == | ||

| + | Obtained using Quadro K620<br> | ||

| + | [[File:uvso2.png]] | ||

| + | [[File:usession.png]] | ||

| + | [[File:ktimes.png]] | ||

| + | <br> | ||

| + | Using a Quadro K2000 | ||

| + | <br> | ||

| + | [[File:streams.png]] | ||

| + | |||

| + | == Output Images == | ||

| + | [http://imgur.com/a/CtMOc Image Gallery] | ||

| + | [https://seneca-my.sharepoint.com/personal/jkraitberg_myseneca_ca/_layouts/15/guestaccess.aspx?docid=099a13c42168943b587de4b59e4634e06&authkey=Afl_iMqjNyFhoYu3bopOw5E 135MB Image] | ||

| + | [https://seneca-my.sharepoint.com/personal/jkraitberg_myseneca_ca/_layouts/15/guestaccess.aspx?docid=007880dac1dd74d09b74fc448dc3fac38&authkey=AdqHCKEjZCXzlyftjZWxFCA 135MB 3x3 Result] | ||

| + | |||

| + | == Mandelbrot == | ||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | ! Unoptimized - Mandelbrot( ... ) | ||

| + | |- | ||

| + | | | ||

| + | <syntaxhighlight lang="cpp"> | ||

| + | //C++ Includes | ||

| + | #include <iostream> | ||

| + | #include <complex> | ||

| + | #include <vector> | ||

| + | #include <chrono> | ||

| + | #include <functional> | ||

| + | #include <cuda_runtime.h> | ||

| + | |||

| + | //CUDA Complex Numbers | ||

| + | #include <cuComplex.h> | ||

| + | |||

| + | //Helper Includes | ||

| + | #include "window.h" | ||

| + | #include "save_image.h" | ||

| + | #include "utils.h" | ||

| + | |||

| + | const int ntpb = 32; | ||

| + | |||

| + | //Compute Color for each pixel | ||

| + | __global__ void computeMandelbrot( int iter_max, int* d_colors, | ||

| + | int fract_width, int fract_height, | ||

| + | int scr_width, int scr_height, | ||

| + | int fract_xmin, int fract_ymin){ | ||

| + | |||

| + | int row = blockIdx.y * blockDim.y + threadIdx.y; //Row | ||

| + | int col = blockIdx.x * blockDim.x + threadIdx.x; //Col | ||

| + | |||

| + | int idx = row * scr_width + col; //Pixel Index | ||

| + | |||

| + | if(col < scr_width && row < scr_height){ | ||

| + | |||

| + | //Use Floating Complex Numbers to calculate color for each pixel | ||

| + | int result = 0; | ||

| + | cuFloatComplex c = make_cuFloatComplex((float)col, (float)row); | ||

| + | cuFloatComplex d = make_cuFloatComplex(cuCrealf(c) / (float)scr_width * fract_width + fract_xmin , cuCimagf(c) / (float)scr_height * fract_height + fract_ymin); | ||

| + | cuFloatComplex z = make_cuFloatComplex(0.0f, 0.0f); | ||

| + | |||

| + | while((cuCabsf(z) < 2.0f) && (result < iter_max)){ | ||

| + | z = (cuCaddf(cuCmulf(z,z),d)); | ||

| + | result++; | ||

| + | } | ||

| + | d_colors[idx] = result; //Output | ||

| + | } | ||

| + | } | ||

| + | |||

| + | void mandelbrot(){ | ||

| + | window<int> scr(0, 1000, 0, 1000); //Image Size | ||

| + | window<float> fract(-2.2,1.2,-1.7,1.7); //Fractal Size | ||

| + | int iter_max = 500; //Iterations | ||

| + | const char* fname = "mandlebrot_gpu.png"; //Output File Name | ||

| + | bool smooth_color = true; //Color Smoothing | ||

| + | |||

| + | int nblks = (scr.width() + ntpb - 1)/ ntpb; //Blocks | ||

| + | std::vector<int> colors(scr.size()); //Output Vector | ||

| + | |||

| + | //Allocate Device Memory | ||

| + | int* d_colors; | ||

| + | cudaMalloc((void**)&d_colors, scr.size() * sizeof(int)); | ||

| + | |||

| + | //Grid Layout | ||

| + | dim3 dGrid(nblks, nblks); | ||

| + | dim3 dBlock(ntpb, ntpb); | ||

| + | |||

| + | //Execute Kernel | ||

| + | auto start = std::chrono::steady_clock::now(); | ||

| + | computeMandelbrot<<<dGrid, dBlock>>>(iter_max, d_colors, fract.width(), fract.height(), scr.width(), scr.height(), fract.x_min(), fract.y_min()); | ||

| + | cudaDeviceSynchronize(); | ||

| + | auto end = std::chrono::steady_clock::now(); | ||

| + | |||

| + | //Output Time | ||

| + | std::cout << "Time to generate " << fname << " = " << std::chrono::duration <float, std::milli> (end - start).count() << " [ms]" << std::endl; | ||

| + | |||

| + | //Copy Data back to Host | ||

| + | cudaMemcpy(colors.data(), d_colors, scr.size() * sizeof(int), cudaMemcpyDeviceToHost); | ||

| + | |||

| + | //Plot Data and Free Memory | ||

| + | plot(scr, colors, iter_max, fname, smooth_color); | ||

| + | cudaFree(d_colors); | ||

| + | } | ||

| + | |||

| + | int main(){ | ||

| + | mandelbrot(); | ||

| + | return 0; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| − | + | === Objectives === | |

| − | + | The main objective was refactor the get_number_iterations() function and the subsequent functions called that created the nested loops. The objective was met as all the functions were refactored into a single device function that did the calculation for a single pixel of the image. As the original program was done with doubles, all of the doubles were changed to floats. | |

| − | |||

| − | + | === Steps === | |

| − | |||

| − | |||

| − | + | === Host Memory Management === | |

| − | + | No changes were needed to the Host Memory as no data is copied from the host to the device. The vector on the host that contains the data was not changed and data from the device was copied to this vector to be output the plot file. | |

| − | + | === Device Memory Management === | |

| − | + | Only a single array to hold the value for each pixel was created on the device. This array has a size of image width * image height and the row and columns for each image are calculated from this which are used in the complex number calculations along with the values that specify the parameters of the fractal. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | === Kernels === | |

| − | + | The three functions from the original code ( get_number_iterations() , escape() and scale() were refactored into a single computeMandelbrot() function. The device kernel calculates the row and column for the pixel and then uses the row and colmn values along with the picture width and fractal parameters to calculate the value. Complex floating point numbers are used using the cuComplex.h header file which also includes the operations for the complex numbers as well. As threads are not reliant on each other for any data, no use of __syncthreads() is required. As threads complete computing the values, they output the value to the d_colors array. | |

| − | |||

| − | + | === Device to Host === | |

| − | + | After that is done the image is copied back using a single memcpy to the host. | |

| − | + | === Results === | |

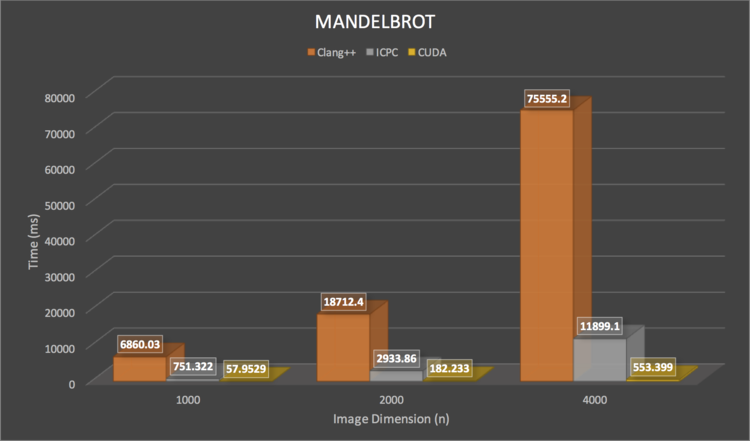

| − | + | The program was compiled using clang++ , icpc (Intel Parallel Studio Compiler) and NVCC for the GPU. Runtimes for the standard clang++ version were extremely slow as the size of the resultant image increased. Compiling the program using the icpc compiler brought in significant changes without modifying any code and reduced runtimes drastically for running purely on a CPU. Using the parallel version based on CUDA improved the runtime massively over the clang++ compiled version and even the icpc version as more values could be calculated in parallel. | |

| − | + | [[Image:Mandelbrot.png | 750px]] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | === Output Images === | |

| + | [http://imgur.com/a/R3ZAH Image Output] | ||

| − | = | + | === Future Optimizations === |

| + | As there isn't any data intensive tasks in this program, further optimizations would include creating streams of kernels and having them execute concurrently in order to improve runtime of the current solution. | ||

Latest revision as of 20:30, 12 April 2017

Contents

[hide]- 1 Assignment 1 - Select and Assess

- 2 Assignment 2/3 - Parallelize & Optimize

Assignment 1 - Select and Assess

Introduction : GPU Benchmarking/Testing using Mandelbrot Sets : Kartik Nagarajan

This program generates Mandelbrot sets using CPU's and then saves them to the folder as png's using the freeimage library.

The program is open-source and can be fetched directly from GitHub from https://github.com/sol-prog/Mandelbrot_Set

To compile the program, FreeImage is required to be installed.

Compilation Instructions:

For Unix based systems:

g++ -std=c++11 save_image.cpp utils.cpp mandel.cpp -lfreeimage

OSX:

clang++ -std=c++11 save_image.cpp utils.cpp mandel.cpp -lfreeimage

The program can then be executed by running the compiled binary and it will display the time it took to generate the Mandelbrot set and save the pictures.

| [Expand] Mandelbrot CPU( ... ) |

|---|

Observations

The program takes a significant amount of time to run as the calculations are being done on the CPU. There are nested loops present within the program that can be parallelized to make the program faster.

The code also has the size of the image and the iterations hard-coded which can be modified to make the program significantly longer to process and make it tough on the GPU's for benchmarking and stability testing by running the process in a loop. The code is relatively straight forward and the parallelization should also be easy to implement and test.

Hotspot

Hotspot for the program was found in the fractal() function which calls the get_iterations() function that contains 2-nested for loops and a call to escape() which contains a while loop. Profiling the runtime with Instruments on OSX displayed that the fractal() function took up the most amount of runtime and this is the function that will be parallelized using CUDA. Once the function is parallelized, the iterations and size of the image can be increased in order to make the computation relatively stressful on the GPU to get a benchmark or looped in order to do stress testing for GPUs.

Profiling Data Screenshots

Profile - Profile

Hotspot Code - Hotspot Code

Introduction : GPU Benchmarking/Testing for NBody : Joshua Kraitberg

This program uses Newtonian mechanics and a four-order symplectic Candy-Rozmus integration (a symplectic algorithm guarantees exact conservation of energy and angular momentum). The initial conditions are obtained from JPL Horizons, ahd constants (like masses, gravitational constant) are those recommended by the International Astronomical Union. The program currently does not take into account effects like general relativity, the non-spherical shapes of celestial objects, tidal effects on Earth, etc. It also does not take the 500 asteroids used by JPL Horizons into accound in its model of the Solar System.

Compilation Instructions:

For Unix/Linux based systems:

g++ -std=c++11 c++/nbody.cpp

Observations

The program is quite fast for being a single-threaded CPU application. Almost all the CPU time is spent manipulating data and iterating in vectors.

Hotspot

Essentially all the time spent running is spent in the doing calculation on vectors. The dowork function iteratively calls the CRO_step function found in integrators.h file. The CRO_step function is where most of the vector calculations take place. A large amount of is also done in the calculate_a function which is used to calulate the acceleration on all the planets.

Profiling Data and Screenshots

| [Expand] NBody Hot Functions |

|---|

| [Expand] NBody Hot Spot Data |

|---|

| [Expand] NBody gprof Complete Data (Warning: long) |

|---|

Introduction : GPU Benchmarking/Gaussian Blur Filter : Colin Paul

What is Gaussian blurring?

At a high level, Gaussian blurring works just like box blurring in that there is a weight per pixel and that for each pixel, you apply the weights to that pixel and it’s neighbors to come up

with the final value for the blurred pixel. It uses a convolution pattern which is a linear stencil that applies fixed weights to the elements of a neighborhood in the combination operation.

With true Gaussian blurring however, the function that defines the weights for each pixel technically never reaches zero, but gets smaller and smaller over distance. In theory, this makes a

Gaussian kernel infinitely large. In practice though, you can choose a cut-off point and set the bounds.

The parameters to a Gaussian blur are:

- Sigma (σ) – This defines how much blur there is. A larger number is a higher amount of blur.

- Radius – The size of the kernel in pixels. The appropriate pixel size can be calculated for a specific sigma, but more information on that lower down.

Just like box blur, a Gaussian blur is separable which means that you can either apply a 2D convolution kernel, or you can apply a 1D convolution kernel on each axis. Doing a single 2D convolution

means more calculations, but you only need one buffer to put the results into. Doing two 1D convolutions (one on each axis), ends up being fewer calculations, but requires two buffers to put the results

into (one intermediate buffer to hold the first axis results).

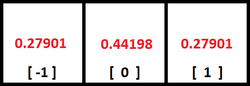

Here is a 3 pixel 1D Gaussian Kernel for a sigma of 1.0:

This kernel is useful for a two pass algorithm: First, perform a horizontal blur with the weights below and then perform a vertical blur on the resulting image (or vice versa).

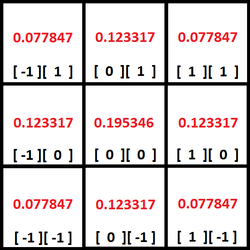

Below is a 3×3 pixel 2D Gaussian Kernel also with a sigma of 1.0. Note that this can be calculated as an outer product (tensor product) of 1D kernels:

These weights below be used directly in a single pass blur algorithm: n2 samples per pixel.

An interesting property of Gaussian blurs is that you can apply multiple smaller blurs and it will come up with the result as if you did a larger Blur. Unfortunately it’s more

calculations doing multiple smaller blurs so is not usually worth while.

If you apply multiple blurs, the equivalent blur is the square root of the sum of the squares of the blur. Taking wikipedia’s example, if you applied a blur with radius 6 and a blur

with a radius of 8, you’d end up with the equivelant of a radius 10 blur. This is because √ ( 62 + 82 ) = 10

Calculating The Kernel

There are a couple ways to calculate a Gaussian kernel.

Pascal’s triangle approaches the Gaussian bell curve as the row number reaches infinity. Pascal’s triangle also represents the numbers that each term

is calculated by after expanding binomials (x + y)N. So technically, you could use a row from Pascal’s triangle as a 1D kernel and normalize the result, but it isn’t the most accurate.

A better way is to use the Gaussian function which is this: e-x2/(2 * σ2)

Where the sigma is your blur amount and x ranges across your values from the negative to the positive. For instance, if your kernel was 5 values, it would range from -2 to +2.

An even better way would be to integrate the Gaussian function instead of just taking point samples. Refer to the two graphs on the right.

The graphs plot the continuous distribution function and the discrete kernel approximation. One thing to look out for are the tails of the distribution vs. kernel weight:

For the current configuration, we have 13.36% of the curve’s area outside the discrete kernel. Note that the weights are renormalized such that the sum of all weights is one. Or in other words:

the probability mass outside the discrete kernel is redistributed evenly to all pixels within the kernel. The weights are calculated by numerical integration of the continuous gaussian distribution

over each discrete kernel tap.

Make sure to normalize the result so that the weights add up to 1. This makes sure that your blurring doesn’t make the image get brighter (greater than 1) or dimmer (less than 1).

Calculating The Kernel Size

Given a sigma value, you can calculate the size of the kernel you need by using this formula:1 + 2 √ ( -2σ2 ln 0.0005 )

That formula makes a Kernel large enough such that it cuts off when the value in the kernel is less than 0.5%. You can adjust the number in there to higher or lower depending on your desires for

speed versus quality.

Running the program

Code

| [Expand] Windows source- Gassusan Blur Filter Main (Visual Studio) |

|---|

Ported to Linux:

| [Expand] Linux source - Gassusan Blur Filter Main (Command Line) |

|---|

| [Expand] Linux source - Gassusan Blur Filter Header (Linux cannot use Windows API, replicated the required structs. Ref: MSDN 12) |

|---|

Windows

To compile and run the program:

- Set-up an empty Visual C++ - Visual Studio project.

- Save this image and place it in your projects directory.

- Copy the Windows version of the main source code above and paste it into a [your chosen file name].cpp file.

- Go into you Debug properties of your project.

- Add four (4) values into the Debugging -> Command Arguments (outlined below)

- Run in Release x64

The command line arguments are structured as follows:

[input image filename].bmp [output image filename].bmp [x - sigma value] [y - sigmea value] => cinque_terre.bmp cinque_terre_BLURRED.bmp 3.0 3.0

Linux

To compile and run the program:

- Navigate to the directory you want to run the program in.

- Save this image and place it into the directory you will be running the program from.

- Copy the Linux version of the main source code above and paste it into a [your chosen file name].cpp file.

- Copy the Linux version of the header source code above and paste it into a file named windows.h.

Compile the binaries using the following command:

g++ -O2 -std=c++0x -Wall -pedantic [your chosen file name].cpp -o gblur

The command line arguments are structured as follows:

[input image filename].bmp [output image filename].bmp [x - sigma value] [y - sigmea value]

Run the compiled program with the required arguments

./gblur cinque_terre.bmp cinque_terre_BLURRED.bmp 3.0 3.0

Mac OS X

To compile and run the program:

- Navigate to the directory you want to run the program in.

- Save this image and place it into the directory you will be running the program from.

- Copy the Linux version of the main source code above and paste it into a [your chosen file name].cpp file.

- Copy the Linux version of the header source code above and paste it into a file named windows.h.

Compile the binaries using the following command:

clang++ -O2 -std=c++0x -Wall -pedantic [your chosen file name].cpp -o gblur

The command line arguments are structured as follows:

[input image filename].bmp [output image filename].bmp [x - sigma value] [y - sigmea value]

Run the compiled program with the required arguments

./gblur cinque_terre.bmp cinque_terre_BLURRED.bmp 3.0 3.0

Analysis

Flat profile: Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls ns/call ns/call name 61.38 3.37 3.37 BlurImage(SImageData const&, SImageData&, float, float, unsigned int, unsigned int) 38.62 5.49 2.12 172032000 12.32 12.32 GetPixelOrBlack(SImageData const&, int, int) 0.00 5.49 0.00 126 0.00 0.00 Gaussian(float, float) 0.00 5.49 0.00 42 0.00 0.00 GaussianSimpsonIntegration(float, float, float) 0.00 5.49 0.00 12 0.00 0.00 void std::vector<float, std::allocator<float> >::_M_insert_aux<float const&>(__gnu_cxx::__normal_iterator<float*, std::vector<float, std::allocator<float> > >, float const&&&) 0.00 5.49 0.00 3 0.00 0.00 std::vector<unsigned char, std::allocator<unsigned char> >::_M_default_append(unsigned int) 0.00 5.49 0.00 2 0.00 0.00 GaussianKernelIntegrals(float, int) 0.00 5.49 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z12WaitForEnterv

Call graph:

granularity: each sample hit covers 4 byte(s) for 0.18% of 5.49 seconds

index % time self children called name

<spontaneous>

[1] 100.0 3.37 2.12 BlurImage(SImageData const&, SImageData&, float, float, unsigned int, unsigned int) [1]

2.12 0.00 172032000/172032000 GetPixelOrBlack(SImageData const&, int, int) [2]

0.00 0.00 2/2 GaussianKernelIntegrals(float, int) [11]

0.00 0.00 2/3 std::vector<unsigned char, std::allocator<unsigned char> >::_M_default_append(unsigned int) [10]

-----------------------------------------------

2.12 0.00 172032000/172032000 BlurImage(SImageData const&, SImageData&, float, float, unsigned int, unsigned int) [1]

[2] 38.6 2.12 0.00 172032000 GetPixelOrBlack(SImageData const&, int, int) [2]

-----------------------------------------------

0.00 0.00 126/126 GaussianSimpsonIntegration(float, float, float) [8]

[7] 0.0 0.00 0.00 126 Gaussian(float, float) [7]

-----------------------------------------------

0.00 0.00 42/42 GaussianKernelIntegrals(float, int) [11]

[8] 0.0 0.00 0.00 42 GaussianSimpsonIntegration(float, float, float) [8]

0.00 0.00 126/126 Gaussian(float, float) [7]

-----------------------------------------------

0.00 0.00 12/12 GaussianKernelIntegrals(float, int) [11]

[9] 0.0 0.00 0.00 12 void std::vector<float, std::allocator<float> >::_M_insert_aux<float const&>(__gnu_cxx::__normal_iterator<float*, std::vector<float, std::allocator<float> > >, float const&&&) [9]

-----------------------------------------------

0.00 0.00 1/3 LoadImage(char const*, SImageData&) [15]

0.00 0.00 2/3 BlurImage(SImageData const&, SImageData&, float, float, unsigned int, unsigned int) [1]

[10] 0.0 0.00 0.00 3 std::vector<unsigned char, std::allocator<unsigned char> >::_M_default_append(unsigned int) [10]

-----------------------------------------------

0.00 0.00 2/2 BlurImage(SImageData const&, SImageData&, float, float, unsigned int, unsigned int) [1]

[11] 0.0 0.00 0.00 2 GaussianKernelIntegrals(float, int) [11]

0.00 0.00 42/42 GaussianSimpsonIntegration(float, float, float) [8]

0.00 0.00 12/12 void std::vector<float, std::allocator<float> >::_M_insert_aux<float const&>(__gnu_cxx::__normal_iterator<float*, std::vector<float, std::allocator<float> > >, float const&&&) [9]

-----------------------------------------------

0.00 0.00 1/1 __do_global_ctors_aux [18]

[12] 0.0 0.00 0.00 1 _GLOBAL__sub_I__Z12WaitForEnterv [12]

-----------------------------------------------

Index by function name

[12] _GLOBAL__sub_I__Z12WaitForEnterv (gaussian.cpp) [8] GaussianSimpsonIntegration(float, float, float) [9] void std::vector<float, std::allocator<float> >::_M_insert_aux<float const&>(__gnu_cxx::__normal_iterator<float*, std::vector<float, std::allocator<float> > >, float const&&&)

[2] GetPixelOrBlack(SImageData const&, int, int) [7] Gaussian(float, float) [10] std::vector<unsigned char, std::allocator<unsigned char> >::_M_default_append(unsigned int)

[11] GaussianKernelIntegrals(float, int) [1] BlurImage(SImageData const&, SImageData&, float, float, unsigned int, unsigned int)

Observations

The program does not take a long time to run, but run-time depends on the values of sigma (σ) and the kernel block size. If you specify larger values for these parameters the runtime increases

significantly. The code is straight forward and parallelization should be easy to implement.

Hotspot

| [Expand] Culptit - BlurImage( ... ) |

|---|

According to the Flat profile, 61.38% of the time is spent in the BlurImage function. This function contains a set of triply-nested for-loops which equates to a run-time of T(n) is O(n3).

Referring to the Call graph we can see more supporting evidence that this application spends nearly all of its execution time in the BlurImage function. Therefore this function is the prime candidate

for parallelization using CUDA. The sigma (σ) and the kernel size can be increased in order to make the computation stressful on the GPU to get a significant benchmark.

Assignment 2/3 - Parallelize & Optimize

* For gaussian blur we say it's unoptimized because we feel that there is more that can be done to reduce the execution times.

The code displayed in the code snippets does use CUDA parallel constructs and fine tuning techniques such as streaming - async.

Gaussian Blur

| [Expand] Unoptimized* - BlurImage( ... ) |

|---|

Objectives

The main objective was to not change the main function. This objective was met, although code had to be added for profiling.

Steps

Host Memory Management

In the original program a bmp is loaded into an vector of uint8_t. This is not ideal for CUDA, therefore an array of pinned memory was allocated. This array contains the same amount of elements but stores them as a structure, "BGRPixel" which is three contiguous floats. The vector is then transferred over to pinned memory.

| [Expand] Host Memory Management - Code( ... ) |

|---|

Device Memory Management

To get a blurred pixel the surrounding pixels must be sampled, in some cases this means sampling pixels outside the bounds of the image. In the original, a simple if check was used to determine if the pixel was outside the bounds or the image, if it was a black pixel was returned instead. This if statement most likely would have caused massive thread divergence in a kernel, therefore the images created in device memory featured additional padding of black pixels to compensate for this. Two such images were created, one to perform horizontal blur and one to perform vertical blur. Other small device arrays were also needed to store the Gaussian integrals that are used to produce the blurring effect.

| [Expand] Padding example |

|---|

Host to Device

To copy the pinned image to the device an array of streams was used to asynchronously copy each row of the image over. Doing so allowed the rows to be easily copied over while avoiding infringing on the extra padding pixels.

Kernels

First one image is blurred horizontally. One image is used as a reference while the other is written to. Kernels are also executed using the streams, so that each stream will blur a single row at a time. After the horizontal blur is finished the vertical blur is launched in the same manner, except that the previously written to image is used as a reference while the previous reference is now written to. The two blur are able to use the same kernel due to the fact that the pixel sampling technique works by iterating through pixels because of this the step size can be changed to sample across the row or down the column.

Device to Host

After that is done the image is copied back using the streams in the same way it was copied over.

Code

| [Expand] Unoptimized* - BlurImage -- Exert( ... ) |

|---|

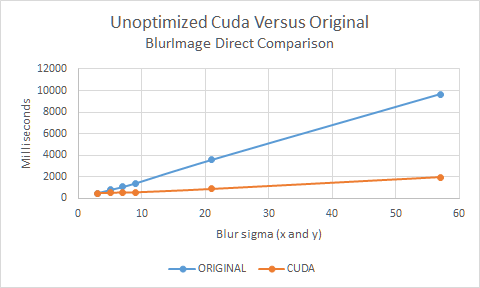

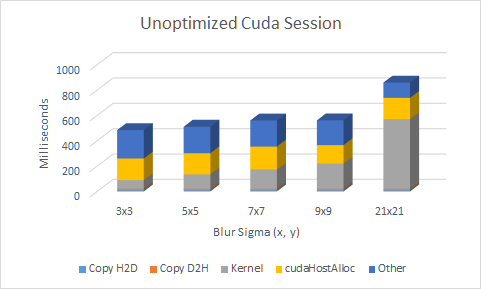

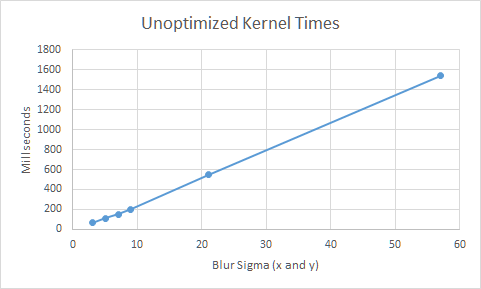

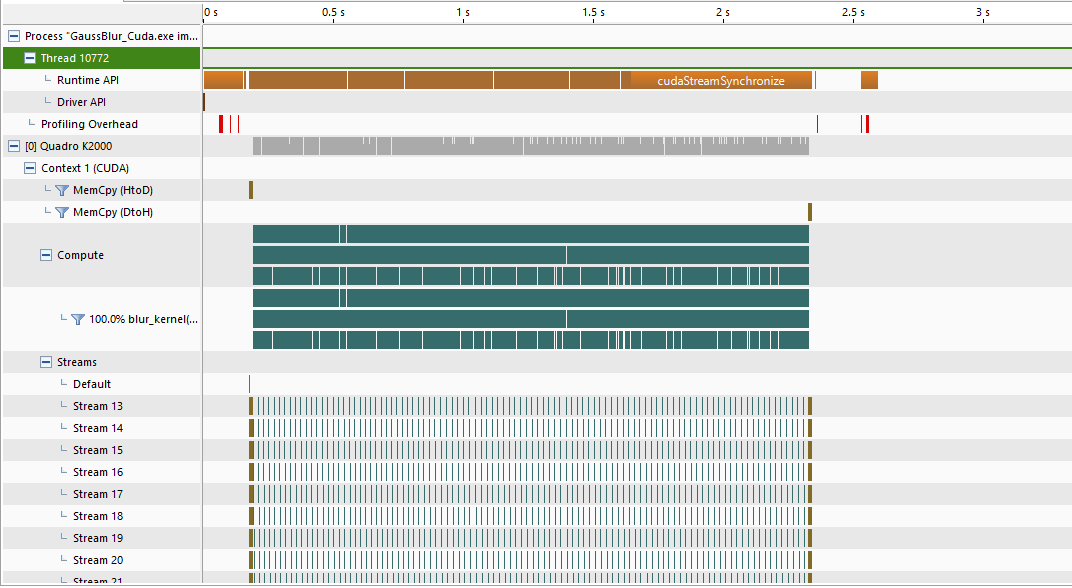

Results

Obtained using Quadro K620

Using a Quadro K2000

Output Images