Difference between revisions of "WhySoSerial?"

(→Assignment 1) |

|||

| (35 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=== Assignment 1 === | === Assignment 1 === | ||

| − | + | ||

| − | - The Application | + | == - The Application == |

| + | == '''WordProcessor''' == | ||

| + | |||

This application was built for assignment one. It is a sort of string editor/ translator was made. It accepts files to be processed by taking words from the file itself and using "codex" or dictionary, to match them to specific meanings or words. Then it replaces the string for that line and moves on to the next. Its intended purpose is to take large files and translate them into other languages. I believe this can be a highly parallelizable problem as many of the steps seem that they can be done in synchronous. | This application was built for assignment one. It is a sort of string editor/ translator was made. It accepts files to be processed by taking words from the file itself and using "codex" or dictionary, to match them to specific meanings or words. Then it replaces the string for that line and moves on to the next. Its intended purpose is to take large files and translate them into other languages. I believe this can be a highly parallelizable problem as many of the steps seem that they can be done in synchronous. | ||

| + | |||

The parts to this application are as follows; | The parts to this application are as follows; | ||

| − | |||

| − | |||

| − | |||

| − | -Issues | + | '''The codex''' - a file with word pairs separated by a delimiter |

| + | |||

| + | '''The book/file''' - a file that will be parsed and 'translated' | ||

| + | |||

| + | '''WordProcessor''' - the application that performs a tokenization of the input string, mapping of the codex, and replacing of string to file. In future, upgrades will allow WordProcessor to take in multiple files at a time and generate a translation | ||

| + | |||

| + | == -Issues == | ||

| + | |||

| + | |||

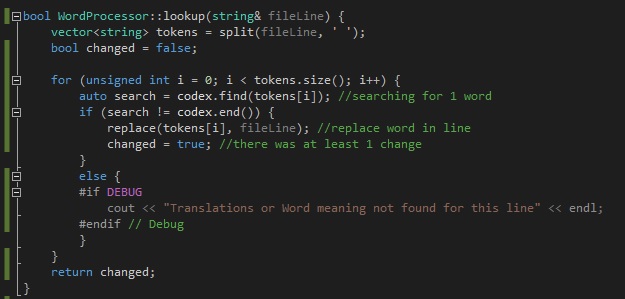

The main concern with this program is that often times it spends much of its time is looking something up and comparing it to each token generated by a split. This can be quite time consuming as it then has to go through a list of translations to find a matching pair. To increase speeds further, I had implemented the codex as a map. | The main concern with this program is that often times it spends much of its time is looking something up and comparing it to each token generated by a split. This can be quite time consuming as it then has to go through a list of translations to find a matching pair. To increase speeds further, I had implemented the codex as a map. | ||

| − | -Run-time Analysis Big-O | + | |

| + | == -Run-time Analysis Big-O == | ||

| + | |||

| + | |||

split() | split() | ||

n : Linear | n : Linear | ||

| + | |||

lookup() - Composite function, has 3 separate function calls | lookup() - Composite function, has 3 separate function calls | ||

n^2(log n) : Log Quadratic worst/ Log Linear best | n^2(log n) : Log Quadratic worst/ Log Linear best | ||

| + | |||

replace() | replace() | ||

| − | n : The complexity of string::Replace is linear according to cplusplus.com/reference/string/string/replace/ | + | n : The complexity of ''string::Replace'' is linear according to [http://http://www.cplusplus.com/reference/string/string/replace/ CPlusPlus Reference] |

| − | Replace and Split were added to this analysis because they may be improved in the future in order to add features to the application itself. This will more than likely increase their complexity and therefore its runtimes. In addition, these functions will be kept on watch as they are called from lookup(). | + | Replace and Split were added to this analysis because they may be improved in the future in order to add features to the application itself. This will more than likely increase their complexity and therefore its runtimes. In addition, these functions will be kept on watch as they are called from ''lookup()''. |

| − | -Profile Results | + | |

| − | gprof - More profiles can be found at https://github.com/Pooch11/DPS915/tree/master/TestFiles/Profiles | + | |

| + | == -Profile Results == | ||

| + | |||

| + | gprof - More profiles can be found at [https://github.com/Pooch11/DPS915/tree/master/TestFiles/Profiles this link] | ||

| + | |||

| + | [[File:lookupfunc_hotspot.jpeg]] | ||

| + | |||

| + | '''Flat profile''' | ||

Each sample counts as 0.01 seconds. | Each sample counts as 0.01 seconds. | ||

| Line 28: | Line 48: | ||

time seconds seconds calls ns/call ns/call name | time seconds seconds calls ns/call ns/call name | ||

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ||

| − | 60.00 0.06 0.06 | + | 60.00 0.06 0.06 '''WordProcessor::lookup'''(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >&) |

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ||

30.00 0.09 0.03 472125 63.54 71.53 [''Split''] void std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::_M_emplace_back_aux<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&>(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&&&) | 30.00 0.09 0.03 472125 63.54 71.53 [''Split''] void std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::_M_emplace_back_aux<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&>(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&&&) | ||

| Line 36: | Line 56: | ||

0.00 0.10 0.00 112230 0.00 0.00 '''WordProcessor::replace'''(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >&) | 0.00 0.10 0.00 112230 0.00 0.00 '''WordProcessor::replace'''(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >&) | ||

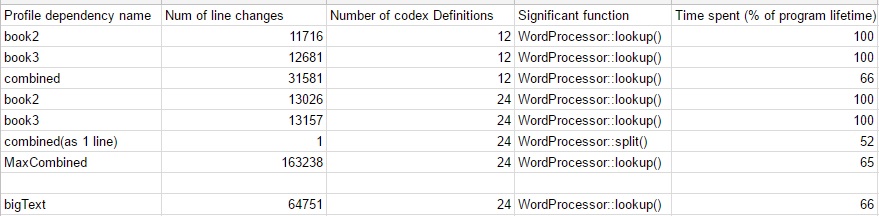

| − | Highlighting one of the | + | Highlighting one of the profiles; a significant amount of time can be observed in one particular method - ''lookup()''. It belongs to the class WordProcessor and consumes most of the lifetime of the application. It is provided each line of the file, and sends off additional calls to help parse, tokenize and replace strings within the given line. |

| + | |||

| + | Looking at the overall test data, this is a very prominent trend. ''Lookup()'' is always the most resource intensive method. This confirms assumptions made for Big-O regarding this function | ||

| − | |||

-Profile Overview | -Profile Overview | ||

| − | |||

| − | -Amdahls Predicted speed increase | + | [[File:Profilesataglance.jpg]] |

| + | |||

| + | == -Amdahls Predicted speed increase == | ||

| + | |||

| + | |||

Given the formula S(n) = 1 / 1 - P + P / N | Given the formula S(n) = 1 / 1 - P + P / N | ||

| + | |||

P = the percentage portion of parallizable code | P = the percentage portion of parallizable code | ||

| + | |||

N = the number of cores to distribute on | N = the number of cores to distribute on | ||

| + | |||

S(n) = the final speed increase as a multiplicative | S(n) = the final speed increase as a multiplicative | ||

| − | For this analysis - the Nvidia | + | For this analysis - the Nvidia GeForce GTX1070 GPU is available for testing and has a total of 1920 CUDA cores(absolute max for available hardware). |

| − | S(n) = 1 / 1 - (0.6667) + 0.6667 / 1920 | + | |

| − | + | - ~2/3 of time spent was deduced by taking a weighted average of results. Files that had low byte sizes or less lines of text were not taken into consideration . | |

| − | + | ||

| − | + | - interesting to note that when a block of text was combined as one line , 52% of time was spent splitting the string, and still ~47% of time was spent in lookup(). | |

| + | |||

| + | S(n) = 1 / 1 - (0.6667) + 0.6667 / 1920 | ||

| + | = 1 / 0.3333 + 0.00034723 | ||

| + | = 1 / 0.333647239 | ||

| + | = 2.99x | ||

| + | |||

Therefore by Amdahl's law, there is a potential speed increase of 2.99x if this component were to be paralleled. | Therefore by Amdahl's law, there is a potential speed increase of 2.99x if this component were to be paralleled. | ||

| − | -Additional Info Available @ | + | == -Additional Info & Code Available @ == |

| − | - [https://github.com/Pooch11/DPS915] GitHub Link | + | |

| + | |||

| + | - [https://github.com/Pooch11/DPS915 GitHub Link] | ||

| + | |||

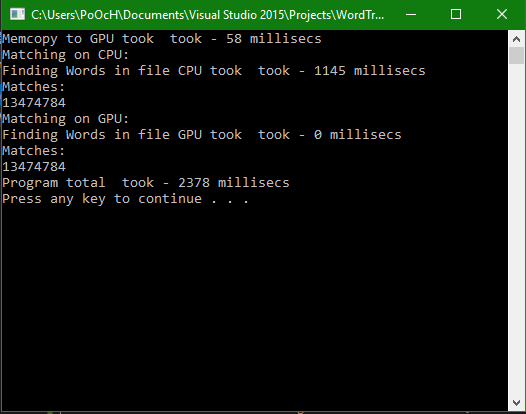

| + | === Assignment 2 === | ||

| + | |||

| + | == WordTranslator - Parallel Approach == | ||

| + | |||

| + | [https://github.com/Pooch11/DPS915/tree/Parallel Parallel Solution Branch of GitHub ] | ||

| + | |||

| + | Issues arose when attempting to change data within a kernel on device memory | ||

| + | Learning outcomes: | ||

| + | |||

| + | 1. Kernels do not accept complex objects from the host ( Maps, vectors, strings) | ||

| + | |||

| + | 2. Kernels load and execute on sequential memory. Device Pointers | ||

| + | |||

| + | 3. Replacing the data using a character pointer (char*) proved exceedingly difficult. | ||

| + | |||

| + | |||

| + | Thus the solution had to be modified in order to accommodate these issues. A couple of options were available to overcome this. | ||

| + | |||

| + | 1. We will match a pattern found by the kernel | ||

| + | |||

| + | 2. We will record where the result was found and the position that we found this match. This would allow another more sophisticated device function to make these translations. This function on a CPU would be at most O(n^2) | ||

| + | |||

| + | 3. Instead, introduce a structure to manage our complex data. (See Below) | ||

| + | |||

| + | |||

| + | [[File:Struct.PNG]] | ||

| + | |||

| + | Structures can be passed into the Kernel, but initialization and access are exceedingly difficult and slow. | ||

| + | |||

| + | '''New CPU Code to Parallelize''' | ||

| + | |||

| + | [[File:Matching_CPU.PNG]] | ||

| + | |||

| + | New CPU code - has an approximate runtime growth rate of O(n^2). | ||

| + | |||

| + | '''GPU Kernel''' | ||

| + | |||

| + | [[File:Matching_GPU.PNG]] | ||

| + | |||

| + | Internally Optimized to use shared memory for our result array. The kernel freely changes these values to true and false depending on where matches are found. | ||

| + | |||

| + | |||

| + | Notes: | ||

| + | |||

| + | Instead of using a result array __ballot(PREDICATE) could be considered (More research on this to be done). | ||

| + | |||

| + | MPI - More knowledge of Message Passing Interface might be needed for a full solution to this problem. See [https://en.wikipedia.org/wiki/Message_Passing_Interface#Dynamic_process_management MPI Wikipedia] | ||

| + | |||

| + | === Assignment 3 === | ||

| + | |||

| + | ==Optimizations== | ||

| + | |||

| + | Optimizations were different in nature than typical Optimizations for Kernel Launches. | ||

| + | |||

| + | '''Launch Configurations''' | ||

| + | First the configuration was optimized. At first threads were launched based on the length of the target text. Later, it was found more useful to launch as many threads as the device could hold for a single block. | ||

| + | |||

| + | [[File:Configurations.PNG]] | ||

| + | |||

| + | '''Pattern Matching''' | ||

| + | The algorithm used in this approach could be revised considerably since there is lots of overlap between the characters checked by threads. | ||

| + | |||

| + | |||

| + | '''''Knuth Morris-Pratt''''' | ||

| + | |||

| + | [https://en.wikipedia.org/wiki/Knuth%E2%80%93Morris%E2%80%93Pratt_algorithm Link to Wiki explanation] | ||

| + | |||

| + | '''''Horspool''''' | ||

| + | |||

| + | [https://en.wikipedia.org/wiki/Boyer%E2%80%93Moore%E2%80%93Horspool_algorithm Link to Wiki explanation] | ||

| + | |||

| + | '''''Shift Or''''' | ||

| + | |||

| + | [https://en.wikipedia.org/wiki/Bitap_algorithm Link to Wiki explanation] | ||

| + | |||

| + | '''Runtime''' | ||

| + | |||

| + | [[File:Runtime_CPUvsGPU.png]] | ||

| + | |||

| + | |||

| + | [[File:CPUvsGPU_Timing_Matching.PNG]] | ||

| − | + | '''Streaming Kernel Launch''' | |

| + | Instead of making changes to increase the efficiency of the Kernel, changes were made to incorporate the spirit of the original solution. Taking in multiple words from a lexicon and making changes to a large text. | ||

| − | + | Thus, streaming the launch of this kernel can be introduce to split the target data into manageable partitions. In addition, if the patterns are the same size, we can use streaming to look for more than one word concurrently. | |

| − | + | [[File:Streaming_Kernel.PNG]] | |

Latest revision as of 20:08, 11 April 2017

Contents

Assignment 1

- The Application

WordProcessor

This application was built for assignment one. It is a sort of string editor/ translator was made. It accepts files to be processed by taking words from the file itself and using "codex" or dictionary, to match them to specific meanings or words. Then it replaces the string for that line and moves on to the next. Its intended purpose is to take large files and translate them into other languages. I believe this can be a highly parallelizable problem as many of the steps seem that they can be done in synchronous.

The parts to this application are as follows;

The codex - a file with word pairs separated by a delimiter

The book/file - a file that will be parsed and 'translated'

WordProcessor - the application that performs a tokenization of the input string, mapping of the codex, and replacing of string to file. In future, upgrades will allow WordProcessor to take in multiple files at a time and generate a translation

-Issues

The main concern with this program is that often times it spends much of its time is looking something up and comparing it to each token generated by a split. This can be quite time consuming as it then has to go through a list of translations to find a matching pair. To increase speeds further, I had implemented the codex as a map.

-Run-time Analysis Big-O

split() n : Linear

lookup() - Composite function, has 3 separate function calls n^2(log n) : Log Quadratic worst/ Log Linear best

replace() n : The complexity of string::Replace is linear according to CPlusPlus Reference

Replace and Split were added to this analysis because they may be improved in the future in order to add features to the application itself. This will more than likely increase their complexity and therefore its runtimes. In addition, these functions will be kept on watch as they are called from lookup().

-Profile Results

gprof - More profiles can be found at this link

Flat profile

Each sample counts as 0.01 seconds.

% cumulative self self total time seconds seconds calls ns/call ns/call name

60.00 0.06 0.06 WordProcessor::lookup(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >&)

30.00 0.09 0.03 472125 63.54 71.53 [Split] void std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::_M_emplace_back_aux<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&>(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&&&)

10.00 0.10 0.01 1252187 7.99 7.99 [mapping] std::_Rb_tree<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::_Select1st<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >, std::less<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > >::operator=(std::_Rb_tree<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::_Select1st<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >, std::less<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > const&)

0.00 0.10 0.00 112230 0.00 0.00 WordProcessor::replace(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >&)

Highlighting one of the profiles; a significant amount of time can be observed in one particular method - lookup(). It belongs to the class WordProcessor and consumes most of the lifetime of the application. It is provided each line of the file, and sends off additional calls to help parse, tokenize and replace strings within the given line.

Looking at the overall test data, this is a very prominent trend. Lookup() is always the most resource intensive method. This confirms assumptions made for Big-O regarding this function

-Profile Overview

-Amdahls Predicted speed increase

Given the formula S(n) = 1 / 1 - P + P / N

P = the percentage portion of parallizable code

N = the number of cores to distribute on

S(n) = the final speed increase as a multiplicative

For this analysis - the Nvidia GeForce GTX1070 GPU is available for testing and has a total of 1920 CUDA cores(absolute max for available hardware).

- ~2/3 of time spent was deduced by taking a weighted average of results. Files that had low byte sizes or less lines of text were not taken into consideration .

- interesting to note that when a block of text was combined as one line , 52% of time was spent splitting the string, and still ~47% of time was spent in lookup().

S(n) = 1 / 1 - (0.6667) + 0.6667 / 1920

= 1 / 0.3333 + 0.00034723

= 1 / 0.333647239

= 2.99x

Therefore by Amdahl's law, there is a potential speed increase of 2.99x if this component were to be paralleled.

-Additional Info & Code Available @

Assignment 2

WordTranslator - Parallel Approach

Parallel Solution Branch of GitHub

Issues arose when attempting to change data within a kernel on device memory Learning outcomes:

1. Kernels do not accept complex objects from the host ( Maps, vectors, strings)

2. Kernels load and execute on sequential memory. Device Pointers

3. Replacing the data using a character pointer (char*) proved exceedingly difficult.

Thus the solution had to be modified in order to accommodate these issues. A couple of options were available to overcome this.

1. We will match a pattern found by the kernel

2. We will record where the result was found and the position that we found this match. This would allow another more sophisticated device function to make these translations. This function on a CPU would be at most O(n^2)

3. Instead, introduce a structure to manage our complex data. (See Below)

Structures can be passed into the Kernel, but initialization and access are exceedingly difficult and slow.

New CPU Code to Parallelize

New CPU code - has an approximate runtime growth rate of O(n^2).

GPU Kernel

Internally Optimized to use shared memory for our result array. The kernel freely changes these values to true and false depending on where matches are found.

Notes:

Instead of using a result array __ballot(PREDICATE) could be considered (More research on this to be done).

MPI - More knowledge of Message Passing Interface might be needed for a full solution to this problem. See MPI Wikipedia

Assignment 3

Optimizations

Optimizations were different in nature than typical Optimizations for Kernel Launches.

Launch Configurations First the configuration was optimized. At first threads were launched based on the length of the target text. Later, it was found more useful to launch as many threads as the device could hold for a single block.

Pattern Matching The algorithm used in this approach could be revised considerably since there is lots of overlap between the characters checked by threads.

Knuth Morris-Pratt

Horspool

Shift Or

Runtime

Streaming Kernel Launch

Instead of making changes to increase the efficiency of the Kernel, changes were made to incorporate the spirit of the original solution. Taking in multiple words from a lexicon and making changes to a large text.

Thus, streaming the launch of this kernel can be introduce to split the target data into manageable partitions. In addition, if the patterns are the same size, we can use streaming to look for more than one word concurrently.