Difference between revisions of "Sudo"

Mateya Lucic (talk | contribs) (→Progress) |

Mateya Lucic (talk | contribs) (→Progress) |

||

| (10 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

= Sudo = | = Sudo = | ||

== Team Members == | == Team Members == | ||

| − | |||

# [mailto:mlucic3@senecacollege.ca?subject=gpu610 mlucic3], Mateya Lucic | # [mailto:mlucic3@senecacollege.ca?subject=gpu610 mlucic3], Mateya Lucic | ||

| − | |||

| − | |||

== Progress == | == Progress == | ||

=== Assignment 1 === | === Assignment 1 === | ||

| + | |||

Catmull-Clark Mesh Subdivision | Catmull-Clark Mesh Subdivision | ||

| + | |||

After browsing C++ repositories on GitHub in search for a 3D model viewer application, I came across the following repository: https://github.com/tsong/viewer | After browsing C++ repositories on GitHub in search for a 3D model viewer application, I came across the following repository: https://github.com/tsong/viewer | ||

| + | |||

This repository caught my interest as it was amongst the more straight forward 3D model viewing applications with available source code. | This repository caught my interest as it was amongst the more straight forward 3D model viewing applications with available source code. | ||

| + | |||

Upon compiling and running the application I played around with it to determine any procedures which seemed like they would benefit from an increase in speed. I found that when calling for subdivision the loaded mesh took a very long time to complete. As a result, I’ve decided to take a look at the code which is involved in that process to determine whether it can benefit from parallelizing. | Upon compiling and running the application I played around with it to determine any procedures which seemed like they would benefit from an increase in speed. I found that when calling for subdivision the loaded mesh took a very long time to complete. As a result, I’ve decided to take a look at the code which is involved in that process to determine whether it can benefit from parallelizing. | ||

| + | |||

I made some changes to how the application runs in order to profile only the subdivision procedure. I removed the GUI component, and made it so that the only thing the application would do is load a mesh, and then perform the subdivision 4 times. | I made some changes to how the application runs in order to profile only the subdivision procedure. I removed the GUI component, and made it so that the only thing the application would do is load a mesh, and then perform the subdivision 4 times. | ||

| Line 23: | Line 25: | ||

[[File:Subdivision_output.jpg]] | [[File:Subdivision_output.jpg]] | ||

| + | |||

| + | |||

'''<u>Flat Profile</u>''' | '''<u>Flat Profile</u>''' | ||

[[File:Subdivision_flat.jpg]] | [[File:Subdivision_flat.jpg]] | ||

| + | |||

'''<u>Subdivide Method</u>''' | '''<u>Subdivide Method</u>''' | ||

[[File:Subdivision_subdivide.jpg]] | [[File:Subdivision_subdivide.jpg]] | ||

| + | |||

'''<u>AddFace Method</u>''' | '''<u>AddFace Method</u>''' | ||

[[File:Subdivision_addFace.jpg]] | [[File:Subdivision_addFace.jpg]] | ||

| + | |||

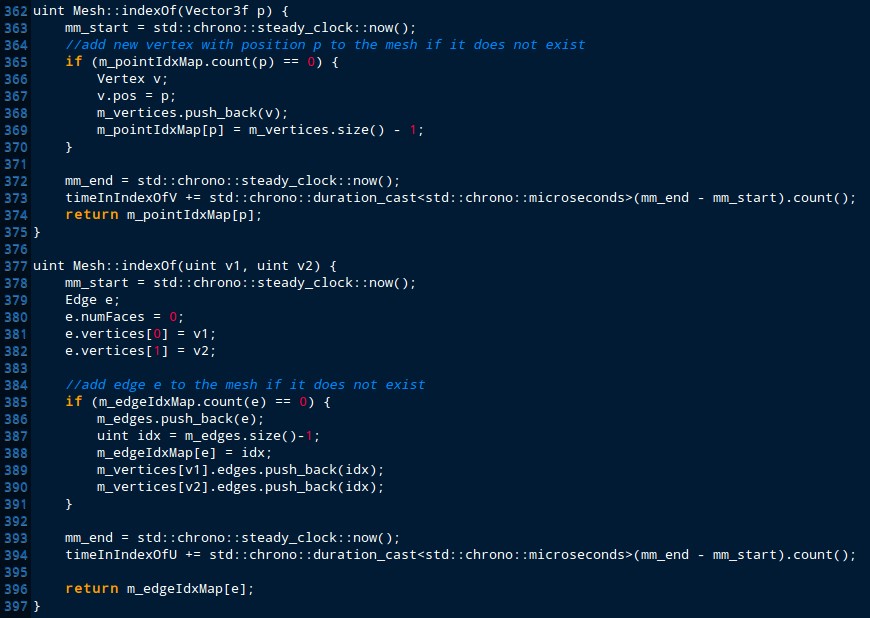

'''<u>IndexOf Methods</u>''' | '''<u>IndexOf Methods</u>''' | ||

[[File:Subdivision_indexOf.jpg]] | [[File:Subdivision_indexOf.jpg]] | ||

| + | |||

| + | |||

I did not trust the flat profile as it was not accurately reflecting the amount of time that the application took to run, so I used the std::chrono library to measure how long the various functions and sections of code took. | I did not trust the flat profile as it was not accurately reflecting the amount of time that the application took to run, so I used the std::chrono library to measure how long the various functions and sections of code took. | ||

| Line 44: | Line 53: | ||

Analyzing the code, it can be seen that the call structure goes as following: | Analyzing the code, it can be seen that the call structure goes as following: | ||

| − | -> Scene.subdivide(4) | + | -> Scene.subdivide(4) |

| − | + | -> For(4 steps) { mesh.subdivide() } | |

| − | + | -> For(each face in the current mesh) addFaces() | |

| + | |||

| + | -> indexOf() | ||

| − | |||

The vast majority of time is being occupied within the addFaces method, where a vast part of that time is occupied getting the index of the edges in the mesh. | The vast majority of time is being occupied within the addFaces method, where a vast part of that time is occupied getting the index of the edges in the mesh. | ||

| Line 56: | Line 66: | ||

| − | Parallelizable? | + | |

| + | <u>'''Parallelizable?'''</u> | ||

If the code is reorganized to first find whether a vertex or edge exists in the mesh and then separate the ones that exist from the ones that don’t and perform the necessary operations on them separately and in parallel, it could possibly result in an improvement in run-time. | If the code is reorganized to first find whether a vertex or edge exists in the mesh and then separate the ones that exist from the ones that don’t and perform the necessary operations on them separately and in parallel, it could possibly result in an improvement in run-time. | ||

| + | |||

| + | |||

| + | |||

=== Assignment 2 === | === Assignment 2 === | ||

| + | |||

| + | |||

| + | |||

| + | The application which I had profiled in assignment 1 didn't end up being very viable for parallelization due to the design of the application. I was forced to search for a new application to parallelize, and I managed to come across a small steganography application which upon profiling it became quite apparent that by performing the encoding on the GPU would yield improvements in runtime.In order to fairly compare the serial application with the parallelized version I had to rewrite the serial code slightly to perform many of the same operations, with the only difference being the encoding algorithm. | ||

| + | |||

| + | |||

| + | |||

| + | |||

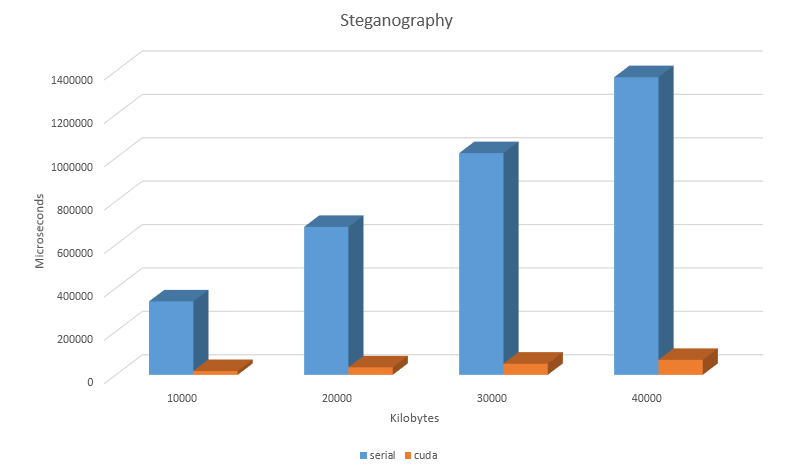

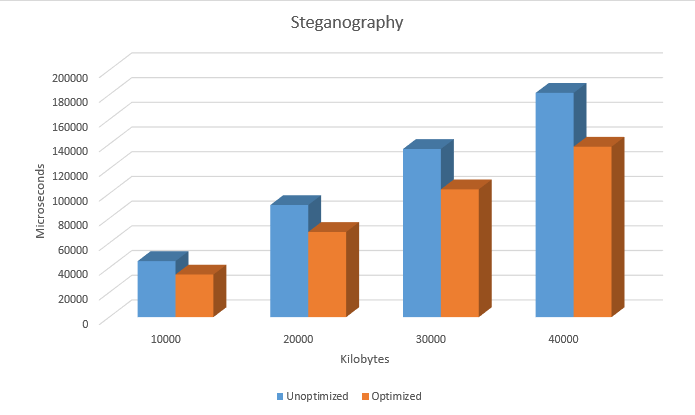

| + | The following graph displays the runtime (measured in microseconds) of encoding various txt file sizes (measured in kilobytes). | ||

| + | |||

| + | [[File:CudaSteganographyRuntime.png]] | ||

| + | |||

| + | |||

| + | |||

| + | |||

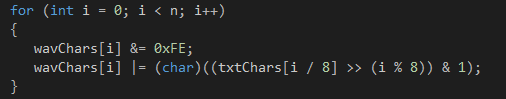

| + | The following is the serial code: | ||

| + | |||

| + | [[File:CudaSteganographySerial.png]] | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

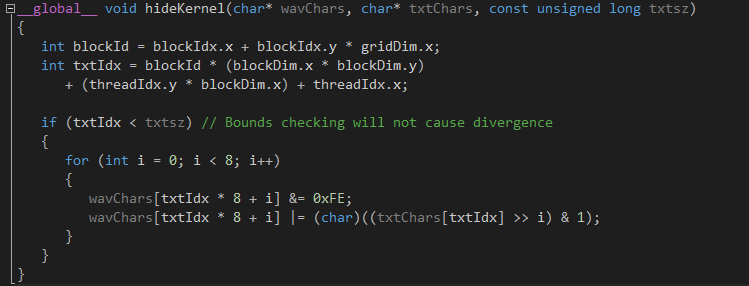

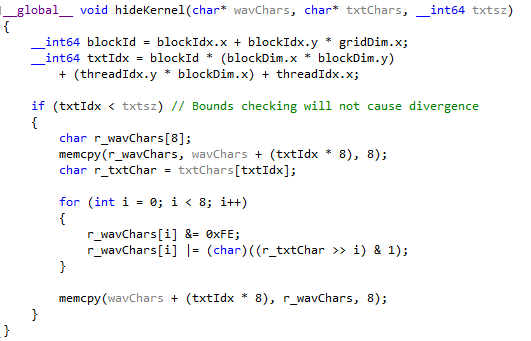

| + | The following is the kernel I wrote: | ||

| + | |||

| + | [[File:CudaSteganographyKernel.png]] | ||

| + | |||

=== Assignment 3 === | === Assignment 3 === | ||

| + | |||

| + | |||

| + | I improved on my previous application by cleaning some logic and adding optimization to it. I noticed that even on cards with a compute capability of 3.0 that it did not accept a grid with x dimensions larger than 65535, therefore I had to rewrite my code to adhere to the limitation. Within the kernel itself, there was opportunity to pre-fetch values into register memory, in order to reduce latency during operations on those values. There was no requirement for shared memory due to the fact that threads did not need to share any memory at all. | ||

| + | |||

| + | |||

| + | The following is the new kernel : | ||

| + | |||

| + | |||

| + | [[File:CudaSteganographyKernelA3.png]] | ||

| + | |||

| + | |||

| + | |||

| + | The following is run-time comparison between my old and my new kernel : | ||

| + | |||

| + | |||

| + | |||

| + | [[File:CudaSteganographyRuntimeA3.png]] | ||

Latest revision as of 18:39, 10 December 2015

Contents

Sudo

Team Members

- mlucic3, Mateya Lucic

Progress

Assignment 1

Catmull-Clark Mesh Subdivision

After browsing C++ repositories on GitHub in search for a 3D model viewer application, I came across the following repository: https://github.com/tsong/viewer

This repository caught my interest as it was amongst the more straight forward 3D model viewing applications with available source code.

Upon compiling and running the application I played around with it to determine any procedures which seemed like they would benefit from an increase in speed. I found that when calling for subdivision the loaded mesh took a very long time to complete. As a result, I’ve decided to take a look at the code which is involved in that process to determine whether it can benefit from parallelizing.

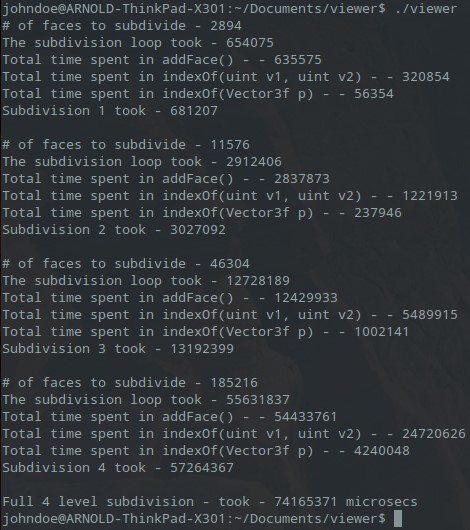

I made some changes to how the application runs in order to profile only the subdivision procedure. I removed the GUI component, and made it so that the only thing the application would do is load a mesh, and then perform the subdivision 4 times.

Output

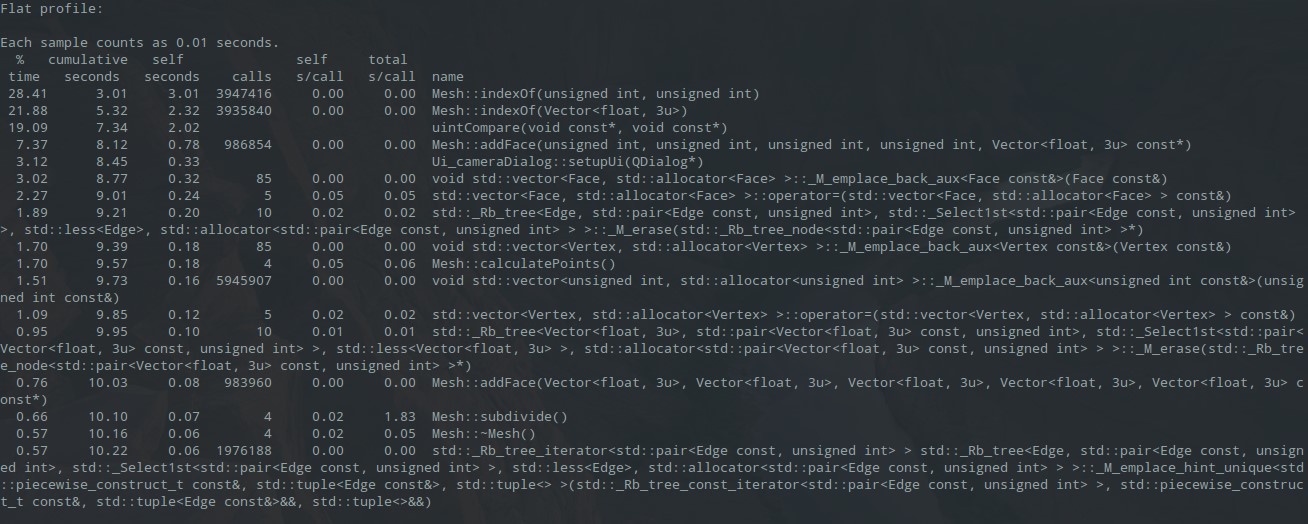

Flat Profile

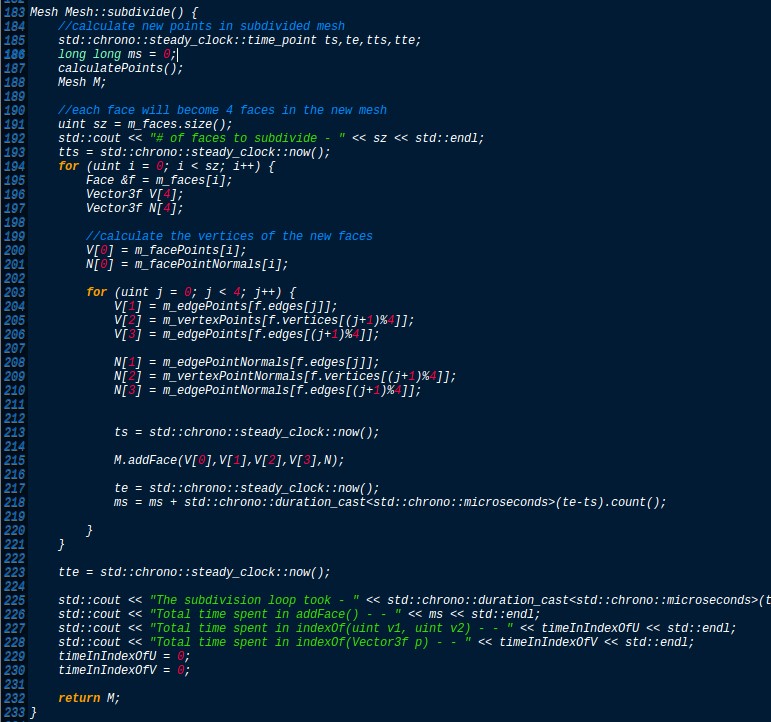

Subdivide Method

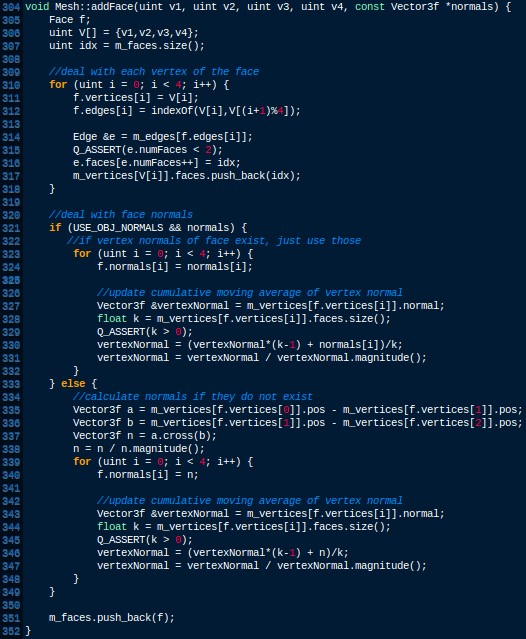

AddFace Method

IndexOf Methods

I did not trust the flat profile as it was not accurately reflecting the amount of time that the application took to run, so I used the std::chrono library to measure how long the various functions and sections of code took.

Analyzing the code, it can be seen that the call structure goes as following:

-> Scene.subdivide(4)

-> For(4 steps) { mesh.subdivide() }

-> For(each face in the current mesh) addFaces()

-> indexOf()

The vast majority of time is being occupied within the addFaces method, where a vast part of that time is occupied getting the index of the edges in the mesh.

Parallelizable?

If the code is reorganized to first find whether a vertex or edge exists in the mesh and then separate the ones that exist from the ones that don’t and perform the necessary operations on them separately and in parallel, it could possibly result in an improvement in run-time.

Assignment 2

The application which I had profiled in assignment 1 didn't end up being very viable for parallelization due to the design of the application. I was forced to search for a new application to parallelize, and I managed to come across a small steganography application which upon profiling it became quite apparent that by performing the encoding on the GPU would yield improvements in runtime.In order to fairly compare the serial application with the parallelized version I had to rewrite the serial code slightly to perform many of the same operations, with the only difference being the encoding algorithm.

The following graph displays the runtime (measured in microseconds) of encoding various txt file sizes (measured in kilobytes).

The following is the serial code:

The following is the kernel I wrote:

Assignment 3

I improved on my previous application by cleaning some logic and adding optimization to it. I noticed that even on cards with a compute capability of 3.0 that it did not accept a grid with x dimensions larger than 65535, therefore I had to rewrite my code to adhere to the limitation. Within the kernel itself, there was opportunity to pre-fetch values into register memory, in order to reduce latency during operations on those values. There was no requirement for shared memory due to the fact that threads did not need to share any memory at all.

The following is the new kernel :

The following is run-time comparison between my old and my new kernel :