Difference between revisions of "GPU610/TeamEh"

(→Assignment 3) |

(→Assignment 3) |

||

| (4 intermediate revisions by the same user not shown) | |||

| Line 200: | Line 200: | ||

Optimization presented some interesting challenges. The following were important factors in the attempt to optimize: | Optimization presented some interesting challenges. The following were important factors in the attempt to optimize: | ||

| − | + | * Filtering problems were independent of other filters | |

| − | + | * Filtering is 2D in nature | |

| − | + | * Filters can and are regularly convolved to form new filters | |

| − | + | * Filters require an apron of neighboring pixels to calculate the filter | |

| − | |||

| − | |||

| − | |||

| − | |||

The greatest gains were made in the convolution, and canny kernels with minimal gains in grey world, auto contrast and no gains in resize. No changes were made to either Gaussian as they were implemented as convolution kernels. | The greatest gains were made in the convolution, and canny kernels with minimal gains in grey world, auto contrast and no gains in resize. No changes were made to either Gaussian as they were implemented as convolution kernels. | ||

| − | [[ | + | [[File:A3.png]] |

| − | One change that improved performance everywhere | + | One change that improved performance everywhere was changing all variables from double to float. This improved performance across the board. |

| − | Shared Memory | + | <b><font style="font-size:140%"> Shared Memory </font></b> |

Due to the nature of the algorithms, shared memory was not an option for any kernel. | Due to the nature of the algorithms, shared memory was not an option for any kernel. | ||

| Line 233: | Line 229: | ||

First, is to pad, or use different types in your data structures. | First, is to pad, or use different types in your data structures. | ||

| + | <pre> | ||

struct RGBApixel { | struct RGBApixel { | ||

| − | + | unsigned char Blue; | |

| − | unsigned char Blue; | + | unsigned char Green; |

| − | + | unsigned char Red; | |

| − | unsigned char Green; | + | unsigned char Alpha; |

| − | |||

| − | unsigned char Red; | ||

| − | |||

| − | unsigned char Alpha; | ||

| − | |||

} | } | ||

| + | </pre> | ||

This struct is stored in one bank in shared memory since each char takes up 8 bits. If you need access to the individual elements of this struct, it will cause a 4 way bank conflict since the tread has to read from the bank 4 times. To prevent this, we could do this | This struct is stored in one bank in shared memory since each char takes up 8 bits. If you need access to the individual elements of this struct, it will cause a 4 way bank conflict since the tread has to read from the bank 4 times. To prevent this, we could do this | ||

| + | <pre> | ||

struct RGBApixel { | struct RGBApixel { | ||

| − | + | unsigned char32_t Blue; | |

| − | unsigned char32_t Blue; | + | unsigned char32_t Green; |

| − | + | unsigned char32_t Red; | |

| − | unsigned char32_t Green; | + | unsigned char32_t Alpha; |

| − | |||

| − | unsigned char32_t Red; | ||

| − | |||

| − | unsigned char32_t Alpha; | ||

| − | |||

} | } | ||

| + | </pre> | ||

This struct is now spread across 4 banks since char now 32 bits, or one full bank each. With this, bank conflicts are eliminated. | This struct is now spread across 4 banks since char now 32 bits, or one full bank each. With this, bank conflicts are eliminated. | ||

Convolution Kernel | Convolution Kernel | ||

| + | |||

| + | <b><font style="font-size:140%"> Convolution Kernel </font></b> | ||

The biggest gains in optimization came from the convolution kernels. | The biggest gains in optimization came from the convolution kernels. | ||

| Line 268: | Line 260: | ||

old: | old: | ||

| − | + | <pre> | |

| − | |||

cudaMalloc((void**)&_gpuKernel, _kernelXSize * _kernelYSize * sizeof(float)); | cudaMalloc((void**)&_gpuKernel, _kernelXSize * _kernelYSize * sizeof(float)); | ||

| − | |||

cudaMemcpy(_gpuKernel, _kernel, _kernelXSize * _kernelYSize * sizeof(float), | cudaMemcpy(_gpuKernel, _kernel, _kernelXSize * _kernelYSize * sizeof(float), | ||

| − | |||

cudaMemcpyHostToDevice); | cudaMemcpyHostToDevice); | ||

| + | </pre> | ||

new | new | ||

| + | |||

| + | <pre> | ||

const int MAX_KERNEL_RADIUS = 7; | const int MAX_KERNEL_RADIUS = 7; | ||

| − | |||

__constant__ float convolutionKernel[(MAX_KERNEL_RADIUS * 2) * (MAX_KERNEL_RADIUS * 2) + 1]; | __constant__ float convolutionKernel[(MAX_KERNEL_RADIUS * 2) * (MAX_KERNEL_RADIUS * 2) + 1]; | ||

| − | + | checkError(cudaMemcpyToSymbol(convolutionKernel, _kernel, _kernelXSize * _kernelYSize * sizeof(float)), "initializing kernel"); | |

| − | + | </pre> | |

| − | |||

| − | checkError(cudaMemcpyToSymbol(convolutionKernel, _kernel, | ||

| − | |||

| − | _kernelXSize * _kernelYSize * sizeof(float)), "initializing kernel"); | ||

Due to the nature of some of the kernels, thread divergence was a major slowdown due to having to do edge checks. This was eliminated by padding the outside of the image. | Due to the nature of some of the kernels, thread divergence was a major slowdown due to having to do edge checks. This was eliminated by padding the outside of the image. | ||

| − | __global__ void padImageKernel( | + | <pre> |

| − | + | __global__ void padImageKernel(const GPU_RGBApixel* img, GPU_RGBApixel* output, int width, int height,int wPad, int hPad){ | |

| − | const GPU_RGBApixel* img, GPU_RGBApixel* output, int width, int height, | + | int x = blockIdx.x * blockDim.x + threadIdx.x; |

| − | + | int y = blockIdx.y * blockDim.y + threadIdx.y; | |

| − | int wPad, int hPad) | + | int paddedWidth = width + wPad * 2; |

| − | + | int paddedHeight = height + hPad * 2; | |

| − | { | + | if (x >= paddedWidth || y >= paddedHeight){ |

| − | + | return; | |

| − | int x = blockIdx.x * blockDim.x + threadIdx.x; | + | } |

| − | |||

| − | int y = blockIdx.y * blockDim.y + threadIdx.y; | ||

| − | |||

| − | int paddedWidth = width + wPad * 2; | ||

| − | |||

| − | int paddedHeight = height + hPad * 2; | ||

| − | |||

| − | if (x >= paddedWidth || y >= paddedHeight) | ||

| − | |||

| − | { | ||

| − | |||

| − | return; | ||

| − | |||

| − | } | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | int xCorr = (x < wPad) ? width + x : x; | |

| + | xCorr = (xCorr >= width + wPad) ? xCorr - width : xCorr; | ||

| + | |||

| + | int yCorr = (y < hPad) ? height + y : y; | ||

| + | yCorr = (yCorr >= height + hPad) ? yCorr - height : yCorr; | ||

| + | output[imageIndex(x, y, paddedHeight)] = img[imageIndex(xCorr, yCorr, height)]; | ||

} | } | ||

| − | + | </pre> | |

This removed the edge checks from the convolution kernel allowing for a dramatic increase in Gaussian. | This removed the edge checks from the convolution kernel allowing for a dramatic increase in Gaussian. | ||

| + | <pre> | ||

//old | //old | ||

| + | for (int i = -kXRad; i <= kXRad; i++){ | ||

| + | for (int j = -kYRad; j <= kYRad; j++){ | ||

| + | //wrap image | ||

| − | + | int xCorr = (x + i < 0) ? width + i : x + i; | |

| − | + | xCorr = (xCorr >= width) ? xCorr - width : xCorr; | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | int xCorr = (x + i < 0) ? width + i : x + i; | ||

| − | |||

| − | xCorr = (xCorr >= width) ? xCorr - width : xCorr | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | int yCorr = (y + j < 0) ? height + j : y + j; | |

| + | yCorr = (yCorr >= height) ? yCorr - height : yCorr; | ||

| − | + | const float kernelElement = kernel[kernelIndex(i, j, kernelXSize, kernelYSize)]; | |

| − | + | const GPU_RGBApixel& imageElement = img[imageIndex(xCorr, yCorr, height)]; | |

| − | |||

| − | |||

| − | |||

| + | outPixel.Red += kernelElement * imageElement.Red; | ||

| + | outPixel.Green += kernelElement * imageElement.Green; | ||

| + | outPixel.Blue += kernelElement * imageElement.Blue; | ||

| + | } | ||

} | } | ||

| + | </pre> | ||

| + | <pre> | ||

//new | //new | ||

| − | for (int i = -kXRad; i <= kXRad; i++) | + | for (int i = -kXRad; i <= kXRad; i++){ |

| − | + | for (int j = -kYRad; j <= kYRad; j++){ | |

| − | { | ||

| − | |||

| − | for (int j = -kYRad; j <= kYRad; j++) | ||

| − | |||

| − | { | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | const float kernelElement = convolutionKernel[kernelIndex(i, j, kernelXSize, kernelYSize)]; | ||

| + | const GPU_RGBApixel imageElement = img[imageIndex(xCorr + i, yCorr + j, height + kernelYSize - 1)]; | ||

| + | |||

| + | outPixel.Red += kernelElement * imageElement.Red; | ||

| + | outPixel.Green += kernelElement * imageElement.Green; | ||

| + | outPixel.Blue += kernelElement * imageElement.Blue; | ||

| + | } | ||

} | } | ||

| + | </pre> | ||

| − | + | <b><font style="font-size:140%"> Canny </font></b> | |

| − | |||

| − | Canny | ||

| − | |||

Canny was optimized not through the kernel itself, but through some of the functions canny used. The functions were rewritten with a little rearrangement of code and slight manipulation of their logic in order for performance gains. | Canny was optimized not through the kernel itself, but through some of the functions canny used. The functions were rewritten with a little rearrangement of code and slight manipulation of their logic in order for performance gains. | ||

notMaxSuppression used to have a large if block in the middle of the kernel that could potentially split into 4 threads. It was rewritten using a pre-computed table of outcomes and a little “mathemagic” to eliminate the if statements. It has reduced it reduces it from four paths to two. | notMaxSuppression used to have a large if block in the middle of the kernel that could potentially split into 4 threads. It was rewritten using a pre-computed table of outcomes and a little “mathemagic” to eliminate the if statements. It has reduced it reduces it from four paths to two. | ||

| + | <pre> | ||

//changed the angle finding logic | //changed the angle finding logic | ||

| − | |||

//old | //old | ||

int index = imageIndex(x, y, height); | int index = imageIndex(x, y, height); | ||

| − | |||

const float& gM = gradMagnitude[index]; | const float& gM = gradMagnitude[index]; | ||

| − | |||

result[index] = gM; | result[index] = gM; | ||

//don't touch the edges | //don't touch the edges | ||

| − | if (x < 1 || y < 1 || x >= width - 1 || y >= height - 1) | + | if (x < 1 || y < 1 || x >= width - 1 || y >= height - 1){ |

| − | + | return; | |

| − | { | ||

| − | |||

| − | return; | ||

| − | |||

} | } | ||

const float& gA = gradAngle[index]; | const float& gA = gradAngle[index]; | ||

| − | |||

int i; | int i; | ||

| − | |||

int j; | int j; | ||

result[index] = gradMagnitude[index]; | result[index] = gradMagnitude[index]; | ||

| − | if (((gA >= -M_PId8) && (gA <= M_PId8)) || (gA >= 7 * M_PId8) || (gA <= -7 * M_PId8)) | + | if (((gA >= -M_PId8) && (gA <= M_PId8)) || (gA >= 7 * M_PId8) || (gA <= -7 * M_PId8)){ |

| − | + | i = 0; | |

| − | { | + | j = -1; |

| − | + | }else if (((gA <= 3 * M_PId8) && (gA > M_PId8)) || ((gA <= -5 * M_PId8) && (gA > -7 * M_PId8))){ | |

| − | i = | + | i = -1; |

| − | + | j = 1; | |

| − | + | }else if (((gA >= 5 * M_PId8) && (gA < 7 * M_PId8)) || ((gA < -M_PId8) && (gA >= -3 * M_PId8))){ | |

| − | + | i = -1; | |

| + | j = -1; | ||

| + | }else{ | ||

| + | i = -1; | ||

| + | j = 0; | ||

} | } | ||

| − | + | if (!((gM <= gradMagnitude[imageIndex(x + i, y + j, height)]) || (gM <= gradMagnitude[imageIndex(x - 1, y - j, height)]))){ | |

| − | + | result[index] = gM; | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | j | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | { | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

} | } | ||

| − | + | </pre> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | <pre> | |

| − | |||

| − | |||

//new | //new | ||

int xCorr = x + 1; | int xCorr = x + 1; | ||

| − | |||

int yCorr = y + 1; | int yCorr = y + 1; | ||

| − | |||

int heightCorr = height + 2; | int heightCorr = height + 2; | ||

| − | |||

int index = imageIndex(xCorr, yCorr, heightCorr); | int index = imageIndex(xCorr, yCorr, heightCorr); | ||

const float gM = gradMagnitude[index]; | const float gM = gradMagnitude[index]; | ||

| − | |||

const int sectorConvertX[9] = { 0, -1, -1, -1, -1, -1, -1, 0 , 0 }; | const int sectorConvertX[9] = { 0, -1, -1, -1, -1, -1, -1, 0 , 0 }; | ||

| − | |||

const int sectorConvertY[9] = { -1, 1, 1, -1, -1, 0, 0, -1 , -1 }; | const int sectorConvertY[9] = { -1, 1, 1, -1, -1, 0, 0, -1 , -1 }; | ||

| − | |||

const float gA = fabsf(gradAngle[imageIndex(x, y, height)]); | const float gA = fabsf(gradAngle[imageIndex(x, y, height)]); | ||

| − | |||

const int sector = int(gA / M_PI) * 8; | const int sector = int(gA / M_PI) * 8; | ||

int i = sectorConvertX[sector]; | int i = sectorConvertX[sector]; | ||

| − | |||

int j = sectorConvertY[sector]; | int j = sectorConvertY[sector]; | ||

| − | if (!((gM <= gradMagnitude[imageIndex(xCorr + i, yCorr + j, heightCorr)]) | + | if (!((gM <= gradMagnitude[imageIndex(xCorr + i, yCorr + j, heightCorr)])|| (gM <= gradMagnitude[imageIndex(xCorr - 1, yCorr - j, heightCorr)]))){ |

| − | + | result[index] = gM; | |

| − | || (gM <= gradMagnitude[imageIndex(xCorr - 1, yCorr - j, heightCorr)]))) | + | }else{ |

| − | + | result[index] = 0.0; | |

| − | { | ||

| − | |||

| − | result[index] = | ||

| − | |||

} | } | ||

| − | + | </pre> | |

| − | + | hysterisisSecondPass was padded and had its inner double loop unrolled and the inner loop was replaced with divisions to eliminate branching. | |

| − | |||

| − | |||

| − | |||

| − | |||

| + | <pre> | ||

//old | //old | ||

| − | if (pixel >= lowerThreshold && pixel < upperThreshold) | + | if (pixel >= lowerThreshold && pixel < upperThreshold){ |

| − | + | int iLower = (x == 0) ? 0 : -1; | |

| − | { | + | int iUpper = (x == width - 1) ? 0 : 1; |

| − | + | int jLower = (height == 0) ? 0 : -1; | |

| − | int iLower = (x == 0) ? 0 : -1; | + | int jUpper = (x == width - 1) ? 0 : 1; |

| − | + | ret = 0.0; | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | ret = 0.0 | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | for (int i = iLower; i <= iUpper; i++){ | ||

| + | for (int j = jLower; j <= jUpper; j++){ | ||

| + | if (image[imageIndex(x + i, y + j, height)]){ | ||

| + | ret = upperThreshold + 1; | ||

| + | } | ||

| + | } | ||

| + | } | ||

} | } | ||

| − | + | </pre> | |

| − | + | <pre> | |

| − | |||

| − | |||

//new | //new | ||

| − | if (level >= lowerThreshold && level < upperThreshold) | + | if (level >= lowerThreshold && level < upperThreshold){ |

| − | + | level = 0.0; | |

| − | + | level += image[imageIndex(xCorr + 1, yCorr + 1, heightCorr)] / upperThreshold; | |

| − | level = 0.0; | + | level += image[imageIndex(xCorr + 0, yCorr + 1, heightCorr)] / upperThreshold; |

| − | + | level += image[imageIndex(xCorr + -1, yCorr + 1, heightCorr)] / upperThreshold; | |

| − | level += image[imageIndex(xCorr + 1, yCorr + 1, heightCorr)] / upperThreshold; | + | level += image[imageIndex(xCorr + 1, yCorr + 0, heightCorr)] / upperThreshold; |

| − | + | level += image[imageIndex(xCorr + -1, yCorr + 0, heightCorr)] / upperThreshold; | |

| − | level += image[imageIndex(xCorr + 0, yCorr + 1, heightCorr)] / upperThreshold; | + | level += image[imageIndex(xCorr + 1, yCorr + -1, heightCorr)] / upperThreshold; |

| − | + | level += image[imageIndex(xCorr + 0, yCorr + -1, heightCorr)] / upperThreshold; | |

| − | level += image[imageIndex(xCorr + -1, yCorr + 1, heightCorr)] / upperThreshold; | + | level += image[imageIndex(xCorr + -1, yCorr + -1, heightCorr)] / upperThreshold; |

| − | + | level = level == 0 ? 0 : 255; | |

| − | level += image[imageIndex(xCorr + 1, yCorr + 0, heightCorr)] / upperThreshold; | + | } |

| − | |||

| − | level += image[imageIndex(xCorr + -1, yCorr + 0, heightCorr)] / upperThreshold; | ||

| − | |||

| − | level += image[imageIndex(xCorr + 1, yCorr + -1, heightCorr)] / upperThreshold; | ||

| − | |||

| − | level += image[imageIndex(xCorr + 0, yCorr + -1, heightCorr)] / upperThreshold; | ||

| − | |||

| − | level += image[imageIndex(xCorr + -1, yCorr + -1, heightCorr)] / upperThreshold; | ||

| − | |||

| − | level = level == 0 ? 0 : 255; | ||

| − | |||

| − | |||

| − | + | </pre> | |

| − | + | <b><font style="font-size:140%"> Grey world </font></b> | |

The optimization for grey world was minimal, and in turn provided a minimal increase in execution. | The optimization for grey world was minimal, and in turn provided a minimal increase in execution. | ||

Global memory calls were reduced from twelve to one, as well as reducing branching if statements. | Global memory calls were reduced from twelve to one, as well as reducing branching if statements. | ||

| + | |||

| + | <pre> | ||

if(averageR > 0.05){ | if(averageR > 0.05){ | ||

| − | + | newGrey[ imageIndex(x, y, height) ].Red = (255 < Original[ imageIndex(x, y, height) ].Red * averageAll / averageR)? 255 : Original[ imageIndex(x, y, height) ].Red * averageAll / averageR; | |

| − | newGrey[ imageIndex(x, y, height) ].Red = (255 < Original[ imageIndex(x, y, height) ].Red * averageAll / averageR)? | + | }else{ |

| − | + | newGrey[ imageIndex(x, y, height) ].Red = (255 < Original[ imageIndex(x, y, height) ].Red + averageAll)? 255 : Original[ imageIndex(x, y, height) ].Red + averageAll; | |

| − | 255 : Original[ imageIndex(x, y, height) ].Red * averageAll / averageR; | ||

| − | |||

| − | } | ||

| − | |||

| − | else | ||

| − | |||

| − | { | ||

| − | |||

| − | newGrey[ imageIndex(x, y, height) ].Red = (255 < Original[ imageIndex(x, y, height) ].Red + averageAll)? | ||

| − | |||

| − | 255 : Original[ imageIndex(x, y, height) ].Red + averageAll; | ||

| − | |||

} | } | ||

if(averageB > 0.05){ | if(averageB > 0.05){ | ||

| − | + | newGrey[ imageIndex(x, y, height) ].Blue = (255 < Original[ imageIndex(x, y, height) ].Blue * averageAll / averageB)? 255 : Original[ imageIndex(x, y, height) ].Blue * averageAll / averageB; | |

| − | newGrey[ imageIndex(x, y, height) ].Blue = (255 < Original[ imageIndex(x, y, height) ].Blue * averageAll / averageB)? | + | }else{ |

| − | + | newGrey[ imageIndex(x, y, height) ].Blue = (255 < Original[ imageIndex(x, y, height) ].Blue + averageAll)? 255 : Original[ imageIndex(x, y, height) ].Blue + averageAll; | |

| − | 255 : Original[ imageIndex(x, y, height) ].Blue * averageAll / averageB; | ||

| − | |||

| − | } | ||

| − | |||

| − | else | ||

| − | |||

| − | { | ||

| − | |||

| − | newGrey[ imageIndex(x, y, height) ].Blue = (255 < Original[ imageIndex(x, y, height) ].Blue + averageAll)? | ||

| − | |||

| − | 255 : Original[ imageIndex(x, y, height) ].Blue + averageAll; | ||

| − | |||

} | } | ||

if(averageG > 0.05){ | if(averageG > 0.05){ | ||

| − | + | newGrey[ imageIndex(x, y, height) ].Green = (255 < Original[ imageIndex(x, y, height) ].Green * averageAll / averageG)? 255 : Original[ imageIndex(x, y, height) ].Green * averageAll / averageG; | |

| − | newGrey[ imageIndex(x, y, height) ].Green = (255 < Original[ imageIndex(x, y, height) ].Green * averageAll / averageG)? | + | }else{ |

| − | + | newGrey[ imageIndex(x, y, height) ].Green = (255 < Original[ imageIndex(x, y, height) ].Green + averageAll)? 255 : Original[ imageIndex(x, y, height) ].Green + averageAll; | |

| − | 255 : Original[ imageIndex(x, y, height) ].Green * averageAll / averageG; | ||

| − | |||

| − | } | ||

| − | |||

| − | else | ||

| − | |||

| − | { | ||

| − | |||

| − | newGrey[ imageIndex(x, y, height) ].Green = (255 < Original[ imageIndex(x, y, height) ].Green + averageAll)? | ||

| − | |||

| − | 255 : Original[ imageIndex(x, y, height) ].Green + averageAll; | ||

| − | |||

} | } | ||

GPU_RGBApixel orig = Original[imageIndex(x, y, height)]; | GPU_RGBApixel orig = Original[imageIndex(x, y, height)]; | ||

| − | |||

GPU_RGBApixel grey = { 0, 0, 0 }; | GPU_RGBApixel grey = { 0, 0, 0 }; | ||

| − | if(averageR > 0.05) | + | if(averageR > 0.05){ |

| − | + | grey.Red = orig.Red * averageAll / averageR; | |

| − | { | + | }else{ |

| − | + | grey.Red = orig.Red + averageAll; | |

| − | grey.Red = orig.Red * averageAll / averageR; | ||

| − | |||

} | } | ||

| − | + | if(averageB > 0.05){ | |

| − | + | grey.Blue = orig.Blue * averageAll / averageB; | |

| − | { | + | }else{ |

| − | + | grey.Blue = orig.Blue + averageAll; | |

| − | grey. | ||

| − | |||

} | } | ||

| − | + | if(averageG > 0.05){ | |

| − | + | grey.Green = orig.Green * averageAll / averageG; | |

| − | + | }else{ | |

| − | + | grey.Green = orig.Green + averageAll; | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | if(averageG > 0.05) | ||

| − | |||

| − | { | ||

| − | |||

| − | grey.Green = orig.Green * averageAll / averageG; | ||

| − | |||

| − | } | ||

| − | |||

| − | else | ||

| − | |||

| − | { | ||

| − | |||

| − | grey.Green = orig.Green + averageAll; | ||

| − | |||

} | } | ||

if(grey.Red > 255) grey.Red = 255; | if(grey.Red > 255) grey.Red = 255; | ||

| − | |||

if(grey.Blue > 255) grey.Blue = 255; | if(grey.Blue > 255) grey.Blue = 255; | ||

| − | |||

if(grey.Green > 255) grey.Green = 255; | if(grey.Green > 255) grey.Green = 255; | ||

newGrey[imageIndex(x, y, height)] = grey; | newGrey[imageIndex(x, y, height)] = grey; | ||

| − | Autocontrast | + | </pre> |

| + | |||

| + | <b><font style="font-size:140%"> Autocontrast </font></b> | ||

The only optimization made was reducing global memory access from 2 to 1. | The only optimization made was reducing global memory access from 2 to 1. | ||

| − | + | <pre> | |

const RGBApixel& imgPixel = img[imageIndex(x, y, height)]; | const RGBApixel& imgPixel = img[imageIndex(x, y, height)]; | ||

| Line 713: | Line 519: | ||

RGBApixel& resultPixel = result[imageIndex(x, y, height)]; | RGBApixel& resultPixel = result[imageIndex(x, y, height)]; | ||

| − | + | </pre> | |

| − | + | <pre> | |

| + | int index = imageIndex(x, y, height); | ||

const RGBApixel& imgPixel = img[index]; | const RGBApixel& imgPixel = img[index]; | ||

| + | RGBApixel& resultPixel = result[index]; | ||

| − | + | </pre> | |

| − | + | <b><font style="font-size:140%"> Resize </font></b> | |

When optimizing resize, on the development machine where the code was being written, there seemed to be an increase of performance but when ran on the benchmark system there was no performance increase shown. | When optimizing resize, on the development machine where the code was being written, there seemed to be an increase of performance but when ran on the benchmark system there was no performance increase shown. | ||

| Line 727: | Line 535: | ||

Logic was tinkered with, and some redundant caculations were eliminated storing the reults in local registers. As well, global memory access was reduced from 12 times a thread to 4. Finally, the result from the pixel calculation was stored in a temp local register and then that was writted to global memory, reducing the writes to global memory. | Logic was tinkered with, and some redundant caculations were eliminated storing the reults in local registers. As well, global memory access was reduced from 12 times a thread to 4. Finally, the result from the pixel calculation was stored in a temp local register and then that was writted to global memory, reducing the writes to global memory. | ||

| + | <pre> | ||

newImage[i * NewWidth + j].Red = | newImage[i * NewWidth + j].Red = | ||

| − | + | (ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Red) | |

| − | (ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Red) | + | + (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Red) |

| − | + | + (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Red) | |

| − | + (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Red) | + | + (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Red)); |

| − | |||

| − | + (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Red) | ||

| − | |||

| − | + (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Red)); | ||

newImage[i * NewWidth + j].Green = | newImage[i * NewWidth + j].Green = | ||

| − | + | (ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Green) | |

| − | (ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Green) | + | + (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Green) |

| − | + | + (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Green) | |

| − | + (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Green) | + | + (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Green)); |

| − | |||

| − | + (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Green) | ||

| − | |||

| − | + (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Green)); | ||

newImage[i * NewWidth + j].Blue = | newImage[i * NewWidth + j].Blue = | ||

| + | (ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Blue) | ||

| + | + (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Blue) | ||

| + | + (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Blue) | ||

| + | + (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Blue)); | ||

| − | + | </pre> | |

| − | |||

| − | |||

| − | + | <pre> | |

| − | |||

| − | |||

float t4 = ThetaI*ThetaJ; | float t4 = ThetaI*ThetaJ; | ||

| − | |||

float t1 = 1.0 - ThetaI - ThetaJ + t4; | float t1 = 1.0 - ThetaI - ThetaJ + t4; | ||

| − | |||

float t2 = ThetaI - t4; | float t2 = ThetaI - t4; | ||

| − | |||

float t3 = ThetaJ - t4; | float t3 = ThetaJ - t4; | ||

int p1 = I * OldWidth + J; | int p1 = I * OldWidth + J; | ||

| − | |||

int p2 = (I + 1) * OldWidth + J; | int p2 = (I + 1) * OldWidth + J; | ||

| − | |||

int p3 = I * OldWidth + J + 1; | int p3 = I * OldWidth + J + 1; | ||

| − | |||

int p4 = (I + 1) * OldWidth + J + 1; | int p4 = (I + 1) * OldWidth + J + 1; | ||

RGBApixel temp; | RGBApixel temp; | ||

| − | |||

RGBApixel temp1 = OldImage[p1]; | RGBApixel temp1 = OldImage[p1]; | ||

| − | |||

RGBApixel temp3 = OldImage[p3]; | RGBApixel temp3 = OldImage[p3]; | ||

| − | |||

RGBApixel temp2 = OldImage[p2]; | RGBApixel temp2 = OldImage[p2]; | ||

| − | |||

RGBApixel temp4 = OldImage[p4]; | RGBApixel temp4 = OldImage[p4]; | ||

| − | temp.Red =(ebmpBYTE)((t1)*(temp1.Red) | + | temp.Red =(ebmpBYTE)((t1)*(temp1.Red) + (t2)*(temp2.Red) + (t3)*(temp3.Red) + (t4)*(temp4.Red)); |

| − | + | temp.Green = (ebmpBYTE)((t1)*(temp1.Green) + (t2)*(temp2.Green) + (t3)*(temp3.Green) + (t4)*(temp4.Green)); | |

| − | + (t2)*(temp2.Red) | + | temp.Blue = (ebmpBYTE)((t1)*(temp1.Blue) + (t2)*(temp2.Blue) + (t3)*(temp3.Blue) + (t4)*(temp4.Blue)); |

| − | |||

| − | + (t3)*(temp3.Red) | ||

| − | |||

| − | + (t4)*(temp4.Red)); | ||

| − | |||

| − | temp.Green = | ||

| − | |||

| − | (ebmpBYTE)((t1)*(temp1.Green) | ||

| − | |||

| − | + (t2)*(temp2.Green) | ||

| − | |||

| − | + (t3)*(temp3.Green) | ||

| − | |||

| − | + (t4)*(temp4.Green)); | ||

| − | |||

| − | temp.Blue = | ||

| − | |||

| − | (ebmpBYTE)((t1)*(temp1.Blue) | ||

| − | |||

| − | + (t2)*(temp2.Blue) | ||

| − | |||

| − | + (t3)*(temp3.Blue) | ||

| − | + | </pre> | |

During the optimization of resize, an attempt was made to make use of share memory. While eventually it was made to work, there was a 250% performance decrease. Here was the last working attempt at shared memory use. This method introduced branching in order to check for edge detection of the blocks. This method caused severe bank conflicts, and after further analysis revealed that it would not work due to each resultant pixels calculations were independent of every other pixel thus shared memory use was not possible. | During the optimization of resize, an attempt was made to make use of share memory. While eventually it was made to work, there was a 250% performance decrease. Here was the last working attempt at shared memory use. This method introduced branching in order to check for edge detection of the blocks. This method caused severe bank conflicts, and after further analysis revealed that it would not work due to each resultant pixels calculations were independent of every other pixel thus shared memory use was not possible. | ||

| − | __global__ void c_newPixel(RGBApixel * OldImage, RGBApixel *newImage, | + | <pre> |

| − | + | __global__ void c_newPixel(RGBApixel * OldImage, RGBApixel *newImage,int OldWidth, int OldHeight, int NewWidth, int NewHeight){ | |

| − | int OldWidth, int OldHeight, int NewWidth, int NewHeight) | ||

| − | |||

| − | { | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | int j = blockIdx.x * blockDim.x + threadIdx.x; | |

| + | int i = blockIdx.y * blockDim.y + threadIdx.y; | ||

| + | int tx = threadIdx.x; | ||

| + | int ty = threadIdx.y; | ||

| − | + | if (i >= NewHeight - 1 || j >= NewWidth - 1){ | |

| + | return; | ||

| + | } | ||

| − | J = (int)floor(ThetaJ); | + | int I, J; |

| + | float ThetaI, ThetaJ; | ||

| + | ThetaJ = (float)(j*(OldHeight - 1.0)) / (float)(NewHeight - 1.0); | ||

| + | J = (int)floor(ThetaJ); | ||

| + | ThetaJ -= J; | ||

| + | |||

| + | ThetaI = (float)(i*(OldWidth - 1.0)) / (float)(NewWidth - 1.0); | ||

| + | I = (int)floor(ThetaI); | ||

| + | ThetaI -= I; | ||

| + | |||

| + | int blkArraySize = (blockDim.x + 1) * (blockDim.x + 1); | ||

| + | int blkArrayWidth = (blockDim.x + 1) * ty; | ||

| − | + | extern __shared__ RGBApixel pixel[]; | |

| − | + | pixel[tx + blkArrayWidth] = OldImage[I * OldWidth + J]; | |

| + | pixel[tx + blkArrayWidth + blkArraySize] = OldImage[(I + 1) * OldWidth + J]; | ||

| + | |||

| + | if (ty == blockDim.y - 1){ | ||

| + | int I1; | ||

| + | float ThetaI1; | ||

| + | ThetaI1 = (float)((i + 1)*(OldWidth - 1.0)) / (float)(NewWidth - 1.0); | ||

| + | I1 = (int)floor(ThetaI1); | ||

| + | |||

| + | int J1; | ||

| + | float ThetaJ1; | ||

| + | ThetaJ1 = (float)((j)*(OldHeight - 1.0)) / (float)(NewHeight - 1.0); | ||

| + | J1 = (int)floor(ThetaJ1); | ||

| + | |||

| + | pixel[tx + blockDim.x + blkArrayWidth] = OldImage[I1 * OldWidth + J1]; | ||

| + | pixel[tx + blockDim.x + blkArrayWidth + blkArraySize] = OldImage[(I1 + 1) * OldWidth + J1]; | ||

| + | } | ||

| − | + | if (tx == blockDim.x - 1){ | |

| + | int J1; | ||

| + | float ThetaJ1; | ||

| + | ThetaJ1 = (float)((j + 1)*(OldHeight - 1.0)) / (float)(NewHeight - 1.0); | ||

| + | J1 = (int)floor(ThetaJ1); | ||

| − | + | int I1; | |

| + | float ThetaI1; | ||

| + | ThetaI1 = (float)((i)*(OldWidth - 1.0)) / (float)(NewWidth - 1.0); | ||

| + | I1 = (int)floor(ThetaI1); | ||

| − | + | pixel[tx + blkArrayWidth + 1] = OldImage[I1 * OldWidth + J1]; | |

| + | pixel[tx + blkArrayWidth + 1 + blkArraySize] = OldImage[(I1 + 1) * OldWidth + J1]; | ||

| + | } | ||

| − | + | __syncthreads(); | |

| − | + | RGBApixel pixelResult; | |

| − | + | float t4 = ThetaI*ThetaJ; | |

| + | float t1 = 1.0 - ThetaI - ThetaJ + t4; | ||

| + | float t2 = ThetaI - t4; | ||

| + | float t3 = ThetaJ - t4; | ||

| − | + | int ps1 = tx + blkArrayWidth; | |

| + | int ps2 = tx + blkArraySize + blkArrayWidth; | ||

| + | int ps3 = ps1 + 1; | ||

| + | int ps4 = ps2 + 1; | ||

| − | + | pixelResult.Red =(ebmpBYTE)((t1)*(pixel[ps1].Red) | |

| + | + (t2)*(pixel[ps2].Red) | ||

| + | + (t3)*(pixel[ps3].Red) | ||

| + | + (t4)*(pixel[ps4].Red)); | ||

| − | + | pixelResult.Green =(ebmpBYTE)((t1)*(pixel[ps1].Green) | |

| − | + | + (t2)*(pixel[ps2].Green) | |

| − | + | + (t3)*(pixel[ps3].Green) | |

| − | + | + (t4)*(pixel[ps4].Green)); | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | pixelResult.Green = | ||

| − | |||

| − | (ebmpBYTE)((t1)*(pixel[ps1].Green) | ||

| − | |||

| − | + (t2)*(pixel[ps2].Green) | ||

| − | |||

| − | + (t3)*(pixel[ps3].Green) | ||

| − | |||

| − | + (t4)*(pixel[ps4].Green | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | pixelResult.Blue = (ebmpBYTE)((t1)*(pixel[ps1].Blue) | ||

| + | + (t2)*(pixel[ps2].Blue) | ||

| + | + (t3)*(pixel[ps3].Blue) | ||

| + | + (t4)*(pixel[ps4].Blue)); | ||

| + | |||

newImage[i * NewWidth + j] = pixelResult; | newImage[i * NewWidth + j] = pixelResult; | ||

| − | |||

} | } | ||

| + | </pre> | ||

Latest revision as of 16:50, 5 December 2014

GPU610/DPS915 | Student List | Group and Project Index | Student Resources | Glossary

Contents

[hide]Team Eh

Team Members

- Benjamin Snively, Some responsibility

- Brad Hoover, Some other responsibility

- Balint Czunyi, Some other responsibility

- ...

Progress

Assignment 1

Benjamin Snively's Results

Introduction

This image processing program was found on github. It processes and manipulates images using convolutions matrices (kernels). It has several different functions including aligning and sharpening images.

To convolve an image the kernel is applied to each pixel. Using the kernel, the pixel's value is combined with that of its neighbors to create a new pixel value. This program implements the filter using two loops to loop over each pixel in sequence. For a given an image convolution is an O(rows x columns) function. As blurring operation on each pixel is independent of the others, therefore it is a perfect candidate for parallelization.

To profile the application, I created a large bitmap file (about 800 x 800, 2MB) and ran it through three different operations. To conserve space, I have not included a profile of all of the available operations.

Gassian Blur

Command: --gassian 5

Each sample counts as 0.01 seconds.

% cumulative self self total time seconds seconds calls s/call s/call name 44.81 88.57 88.57 _mcount_private 31.92 151.66 63.09 __fentry__ 4.85 161.25 9.59 1 9.59 45.84 Gauss_filter::smooth_ord(Matrix<std::tuple<unsigned int, unsigned int, unsigned int> >&) 1.92 165.05 3.80 633231640 0.00 0.00 Matrix<std::tuple<unsigned int, unsigned int, unsigned int> >::operator()(unsigned int, unsigned int) 1.43 167.88 2.83 633887736 0.00 0.00 std::__shared_ptr<std::tuple<unsigned int, unsigned int, unsigned int>, (__gnu_cxx::_Lock_policy)2>::get() const 1.37 170.58 2.70 630508256 0.00 0.00 std::_Tuple_impl<0ul, int&, int&, int&>& std::_Tuple_impl<0ul, int&, int&, int&>::operator=<unsigned int, unsigned int, unsigned int>(std::_Tuple_impl<0ul, unsigned int, unsigned int, unsigned int> const&) 0.92 172.40 1.82 630508256 0.00 0.00 std::_Head_base<0ul, int&, false>::_Head_base(int&) 0.87 174.12 1.72 630508256 0.00 0.00 std::_Tuple_impl<2ul, int&>& std::_Tuple_impl<2ul, int&>::operator=<unsigned int>(std::_Tuple_impl<2ul, unsigned int> const&) 0.86 175.81 1.69 630508256 0.00 0.00 std::_Head_base<1ul, int&, false>::_Head_base(int&) 0.84 177.47 1.66 630508256 0.00 0.00 std::_Tuple_impl<1ul, int&, int&>& std::_Tuple_impl<1ul, int&, int&>::operator=<unsigned int, unsigned int>(std::_Tuple_impl<1ul, unsigned int, unsigned int> const&) 0.78 179.02 1.55 630508256 0.00 0.00 std::_Head_base<2ul, int&, false>::_Head_base(int&) 0.77 180.54 1.52 630508256 0.00 0.00 std::tuple<int&, int&, int&> std::tie<int, int, int>(int&, int&, int&) 0.74 182.00 1.46 630508256 0.00 0.00 std::_Tuple_impl<2ul, int&>::_Tuple_impl(int&) 0.65 183.28 1.28 630508256 0.00 0.00 std::tuple<int&, int&, int&>& std::tuple<int&, int&, int&>::operator=<unsigned int, unsigned int, unsigned int, void>(std::tuple<unsigned int, unsigned int, unsigned int> const&) 0.57 184.41 1.13 630508256 0.00 0.00 std::tuple<int&, int&, int&>::tuple(int&, int&, int&) 0.55 185.50 1.09 630508256 0.00 0.00 std::_Head_base<0ul, int&, false>::_M_head(std::_Head_base<0ul, int&, false>&) 0.52 186.53 1.03 630508256 0.00 0.00 std::_Tuple_impl<0ul, int&, int&, int&>::_Tuple_impl(int&, int&, int&)

Sharpen

Command: --unsharp

Each sample counts as 0.01 seconds.

% cumulative self self total time seconds seconds calls ms/call ms/call name 44.44 0.96 0.96 _mcount_private 27.31 1.55 0.59 __fentry__ 7.41 1.71 0.16 1 160.00 458.44 unsharp(Matrix<std::tuple<unsigned int, unsigned int, unsigned int> >) 5.56 1.83 0.12 20345464 0.00 0.00 Matrix<std::tuple<unsigned int, unsigned int, unsigned int> >::operator()(unsigned int, unsigned int) 1.39 1.86 0.03 21001560 0.00 0.00 std::__shared_ptr<std::tuple<unsigned int, unsigned int, unsigned int>, (__gnu_cxx::_Lock_policy)2>::get() const 1.39 1.89 0.03 7876396 0.00 0.00 std::_Tuple_impl<0ul, unsigned int, unsigned int, unsigned int>::_M_head(std::_Tuple_impl<0ul, unsigned int, unsigned int, unsigned int>&) 1.39 1.92 0.03 656096 0.00 0.00 std::_Tuple_impl<2ul, unsigned int>& std::_Tuple_impl<2ul, unsigned int>::operator=<unsigned char>(std::_Tuple_impl<2ul, unsigned char>&&) 0.93 1.94 0.02 7876396 0.00 0.00 std::_Tuple_impl<1ul, unsigned int, unsigned int>::_M_head(std::_Tuple_impl<1ul, unsigned int, unsigned int>&) 0.93 1.96 0.02 1968288 0.00 0.00 unsigned char&& std::forward<unsigned char>(std::remove_reference<unsigned char>::type&) 0.93 1.98 0.02 656096 0.00 0.00 std::_Head_base<0ul, unsigned char, false>::_M_head(std::_Head_base<0ul, unsigned char, false>&)

Identity

command: --custom '0,0,0,0,1,0,0,0,0'

Each sample counts as 0.01 seconds.

% cumulative self self total time seconds seconds calls ms/call ms/call name 53.61 1.71 1.71 _mcount_private 28.21 2.61 0.90 __fentry__ 4.39 2.75 0.14 2 70.00 218.45 Use_kernel::new_im() 1.88 2.81 0.06 8542240 0.00 0.00 Matrix<std::tuple<unsigned int, unsigned int, unsigned int> >::operator()(unsigned int, unsigned int) 0.94 2.84 0.03 5904864 0.00 0.00 std::_Tuple_impl<0ul, int&, int&, int&>::_Tuple_impl(int&, int&, int&) 0.63 2.86 0.02 13126802 0.00 0.00 __gnu_cxx::__enable_if<std::__is_integer<int>::__value, double>::__type std::floor<int>(int) 0.63 2.88 0.02 9841440 0.00 0.00 double& std::forward<double&>(std::remove_reference<double&>::type&) 0.63 2.90 0.02 7223552 0.00 0.00 std::_Head_base<0ul, unsigned int, false>::_M_head(std::_Head_base<0ul, unsigned int, false> const&) 0.63 2.92 0.02 5904864 0.00 0.00 std::_Tuple_impl<1ul, int&, int&>& std::_Tuple_impl<1ul, int&, int&>::operator=<unsigned int, unsigned int>(std::_Tuple_impl<1ul, unsigned int, unsigned int> const&) 0.63 2.94 0.02 5904864 0.00 0.00 std::_Tuple_impl<2ul, int&>::_Tuple_impl(int&)

Summary

The functions that perform the filtering are Gauss_filter::smooth_ord, unsharp and Use_kernel::new_im(). These functions are all O(r x c) with respect to image dimensions and thus where the biggest gains from parallelization will be found.

Bradly Hoovers Results

Introduction

The SHA-1 algorithm used in this project was implemented by Paul E. Jones[1]. It is a C++ version of the algorithm. The recursive permutation algorithm was taken from a user submission[2] on stack exchange.

[1]http://www.packetizer.com/security/sha1/

[2] http://codereview.stackexchange.com/questions/38474/brute-force-algorithm-in-c

After some tweaking to integrate the two to work in conjunction, I ran the program using the Upper and lower case alphabet for the permutation, with a length of 5, as input. The length and character set are hard coded.

Length of 5, upper and lowercase

Command: ./brutis

Each sample counts as 0.01 seconds.

Each sample counts as 0.01 seconds. % cumulative self self total time seconds seconds calls s/call s/call name 83.38 109.96 109.96 387659012 0.00 0.00 SHA1::ProcessMessageBlock() 8.77 121.52 11.56 387659012 0.00 0.00 SHA1::PadMessage() 4.01 126.81 5.28 1930693908 0.00 0.00 SHA1::Input(unsigned char const*, unsigned int) 1.48 128.76 1.96 387659012 0.00 0.00 SHA1::operator<<(char const*) 1.19 130.33 1.57 387659012 0.00 0.00 SHA1::Result(unsigned int*) 0.73 131.29 0.96 387659012 0.00 0.00 SHA1::Reset() 0.36 131.76 0.47 5 0.09 26.35 SHA1::check(char*, int, int, int, char const*) 0.05 131.83 0.07 SHA1::~SHA1() 0.03 131.88 0.05 SHA1::SHA1() 0.03 131.92 0.05 SHA1::operator<<(unsigned char) 0.02 131.94 0.02 SHA1::Input(char) 0.00 131.94 0.00 1 0.00 0.00 _GLOBAL__sub_I__ZN4SHA1C2Ev 0.00 131.94 0.00 1 0.00 0.00 _GLOBAL__sub_I_main

Summary

The total runtime for this test was approximately 132 seconds. Using Amdahl's law to calculate the speed up I would obtain on my laptop, the equation is:

S384 = 1 / ((1 - 0.8338) + (0.8338/384)) = 5.9393

The maximum expected speed is 5.9393. My laptop’s 650M GPU only has 384 cores. This is not a significant increase in speed.

Using my desktop’s GTX780 has 2304 core. Using my desktop’s gpu the resulting speed up would be:

S2304 = 1 / ((1 - 0.8338) + (0.8338/2304)) = 6.004

After observing these results, and further analysis of the algorithm, I have found that the SHA-1 algorithm is a sequential algorithm not entirely suitable for parallelisation.

Due to this, I choose Ben's image processing for parallelisation.

Balint Czunyi's Results

Introduction

My Project used a Heat Equation calculator.

Source: https://github.com/MyCodes/Heat-Equation

After some changes to the Makefile to work with the profiler and testing several different parameters for the calculations I have come up with the following results:

Explicit from -15 to +15

Each sample counts as 0.01 seconds.

% cumulative self self total time seconds seconds calls ms/call ms/call name 34.78 0.08 0.08 __fentry__ 26.09 0.14 0.06 2 30.00 31.67 Heat::writePlot() 26.09 0.20 0.06 _mcount_private 8.70 0.22 0.02 8986009 0.00 0.00 std::vector<double, std::allocator<double> >::operator[](unsigned long) 4.35 0.23 0.01 1 10.00 26.66 Heat::solveEquation(char) 0.00 0.23 0.00 8986009 0.00 0.00 std::vector<std::vector<double, std::allocator<double> >, std::allocator<std::vector<double, std::allocator<double> > > >::operator[](unsigned long) 0.00 0.23 0.00 1496502 0.00 0.00 heatFunction(double, double)

. . .

Summary

As you can see the std::vector<double, std::allocator<double> >::operator[](unsigned long) function is where 8.7 % of the time is spent and thus this is where the program would most benefit from parallelisation.

S1920 = (1 / ( 1 - 0.087 + 0.087 / 1920 ) = 0.913

As this program wont get much faster even with my high number of cores I also choose Ben's Image Processor for parallelisation.

Assignment 2

We chose to parallelize the image processing program. Image processing is easy to parallelize but this project was a challenge due to the number of functions the program has.

Benchmarking

Intel Core i7 2600K (standard clock) NVidia GeForce GTX 750 Ti

Two images were used to test each image function, a large one and a small one.

The small sample test image:

The sample after being processed by the canny filter:

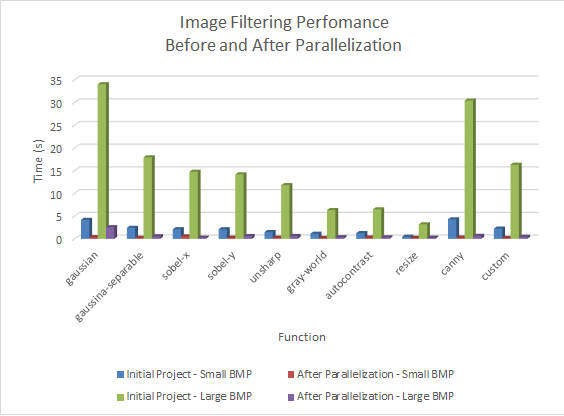

Results

This chart compares the run times for the original and parallelized image processing functions. We saw dramatic improvements to image filtering performance. All functions are down to constant time with respect to image dimensions, down from O(n^2).

Problems Encountered

Many of the operations were composed of several different kernels and other operations. To avoid repeated copies to and from the device we wrapped each kernel in a function that took device pointers. That way images could be loaded once and passed through multiple filters without returning them to the host.

Several of the operations scan a pixels neighbors to determine the pixels value. This creates a problem when a pixel is near the edge of an image. To solve this problem we interpenetrated the image not as a flat surface but as a torus. Anytime a thread would access an off image pixel it would wrap around and use a pixel from the opposite side of the image.

Assignment 3

Optimization presented some interesting challenges. The following were important factors in the attempt to optimize:

* Filtering problems were independent of other filters * Filtering is 2D in nature * Filters can and are regularly convolved to form new filters * Filters require an apron of neighboring pixels to calculate the filter

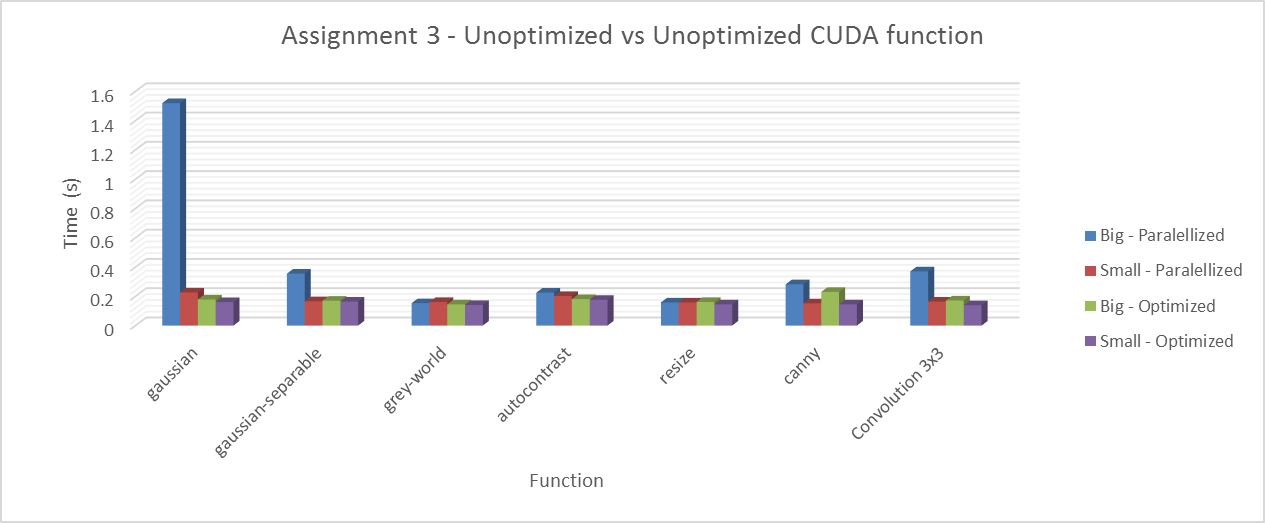

The greatest gains were made in the convolution, and canny kernels with minimal gains in grey world, auto contrast and no gains in resize. No changes were made to either Gaussian as they were implemented as convolution kernels.

One change that improved performance everywhere was changing all variables from double to float. This improved performance across the board.

Shared Memory

Due to the nature of the algorithms, shared memory was not an option for any kernel.

In all of the kernels, the calculations performed for a resultant pixel were independent from all other resultant pixels. This meant that one pixels result was completely independent of all other pixels. An attempt was made in the resize kernel but after careful analysis of shared memory access and further understanding of the algorithm, it was determined that shared memory would only result in performance loss.

While researching shared memory, we did come across the problem of bank conflicts which was not covered in class.

Shared memory is divided into banks, each bank is made up of 4bytes (32 bits).

Bank 1 2 3 4

Byte | 1 2 3 4 | 1 2 3 4 | 1 2 3 4 | 1 2 3 4 |

If multiple threads try to access the same bank, a conflict occurs, and access has to be serialized. This can be prevented in multiple ways.

First, is to pad, or use different types in your data structures.

struct RGBApixel {

unsigned char Blue;

unsigned char Green;

unsigned char Red;

unsigned char Alpha;

}

This struct is stored in one bank in shared memory since each char takes up 8 bits. If you need access to the individual elements of this struct, it will cause a 4 way bank conflict since the tread has to read from the bank 4 times. To prevent this, we could do this

struct RGBApixel {

unsigned char32_t Blue;

unsigned char32_t Green;

unsigned char32_t Red;

unsigned char32_t Alpha;

}

This struct is now spread across 4 banks since char now 32 bits, or one full bank each. With this, bank conflicts are eliminated.

Convolution Kernel

Convolution Kernel

The biggest gains in optimization came from the convolution kernels.

Constant memory use improved the access times in the convolution kernel.

old:

cudaMalloc((void**)&_gpuKernel, _kernelXSize * _kernelYSize * sizeof(float)); cudaMemcpy(_gpuKernel, _kernel, _kernelXSize * _kernelYSize * sizeof(float), cudaMemcpyHostToDevice);

new

const int MAX_KERNEL_RADIUS = 7; __constant__ float convolutionKernel[(MAX_KERNEL_RADIUS * 2) * (MAX_KERNEL_RADIUS * 2) + 1]; checkError(cudaMemcpyToSymbol(convolutionKernel, _kernel, _kernelXSize * _kernelYSize * sizeof(float)), "initializing kernel");

Due to the nature of some of the kernels, thread divergence was a major slowdown due to having to do edge checks. This was eliminated by padding the outside of the image.

__global__ void padImageKernel(const GPU_RGBApixel* img, GPU_RGBApixel* output, int width, int height,int wPad, int hPad){

int x = blockIdx.x * blockDim.x + threadIdx.x;

int y = blockIdx.y * blockDim.y + threadIdx.y;

int paddedWidth = width + wPad * 2;

int paddedHeight = height + hPad * 2;

if (x >= paddedWidth || y >= paddedHeight){

return;

}

int xCorr = (x < wPad) ? width + x : x;

xCorr = (xCorr >= width + wPad) ? xCorr - width : xCorr;

int yCorr = (y < hPad) ? height + y : y;

yCorr = (yCorr >= height + hPad) ? yCorr - height : yCorr;

output[imageIndex(x, y, paddedHeight)] = img[imageIndex(xCorr, yCorr, height)];

}

This removed the edge checks from the convolution kernel allowing for a dramatic increase in Gaussian.

//old

for (int i = -kXRad; i <= kXRad; i++){

for (int j = -kYRad; j <= kYRad; j++){

//wrap image

int xCorr = (x + i < 0) ? width + i : x + i;

xCorr = (xCorr >= width) ? xCorr - width : xCorr;

int yCorr = (y + j < 0) ? height + j : y + j;

yCorr = (yCorr >= height) ? yCorr - height : yCorr;

const float kernelElement = kernel[kernelIndex(i, j, kernelXSize, kernelYSize)];

const GPU_RGBApixel& imageElement = img[imageIndex(xCorr, yCorr, height)];

outPixel.Red += kernelElement * imageElement.Red;

outPixel.Green += kernelElement * imageElement.Green;

outPixel.Blue += kernelElement * imageElement.Blue;

}

}

//new

for (int i = -kXRad; i <= kXRad; i++){

for (int j = -kYRad; j <= kYRad; j++){

const float kernelElement = convolutionKernel[kernelIndex(i, j, kernelXSize, kernelYSize)];

const GPU_RGBApixel imageElement = img[imageIndex(xCorr + i, yCorr + j, height + kernelYSize - 1)];

outPixel.Red += kernelElement * imageElement.Red;

outPixel.Green += kernelElement * imageElement.Green;

outPixel.Blue += kernelElement * imageElement.Blue;

}

}

Canny Canny was optimized not through the kernel itself, but through some of the functions canny used. The functions were rewritten with a little rearrangement of code and slight manipulation of their logic in order for performance gains.

notMaxSuppression used to have a large if block in the middle of the kernel that could potentially split into 4 threads. It was rewritten using a pre-computed table of outcomes and a little “mathemagic” to eliminate the if statements. It has reduced it reduces it from four paths to two.

//changed the angle finding logic

//old

int index = imageIndex(x, y, height);

const float& gM = gradMagnitude[index];

result[index] = gM;

//don't touch the edges

if (x < 1 || y < 1 || x >= width - 1 || y >= height - 1){

return;

}

const float& gA = gradAngle[index];

int i;

int j;

result[index] = gradMagnitude[index];

if (((gA >= -M_PId8) && (gA <= M_PId8)) || (gA >= 7 * M_PId8) || (gA <= -7 * M_PId8)){

i = 0;

j = -1;

}else if (((gA <= 3 * M_PId8) && (gA > M_PId8)) || ((gA <= -5 * M_PId8) && (gA > -7 * M_PId8))){

i = -1;

j = 1;

}else if (((gA >= 5 * M_PId8) && (gA < 7 * M_PId8)) || ((gA < -M_PId8) && (gA >= -3 * M_PId8))){

i = -1;

j = -1;

}else{

i = -1;

j = 0;

}

if (!((gM <= gradMagnitude[imageIndex(x + i, y + j, height)]) || (gM <= gradMagnitude[imageIndex(x - 1, y - j, height)]))){

result[index] = gM;

}

//new

int xCorr = x + 1;

int yCorr = y + 1;

int heightCorr = height + 2;

int index = imageIndex(xCorr, yCorr, heightCorr);

const float gM = gradMagnitude[index];

const int sectorConvertX[9] = { 0, -1, -1, -1, -1, -1, -1, 0 , 0 };

const int sectorConvertY[9] = { -1, 1, 1, -1, -1, 0, 0, -1 , -1 };

const float gA = fabsf(gradAngle[imageIndex(x, y, height)]);

const int sector = int(gA / M_PI) * 8;

int i = sectorConvertX[sector];

int j = sectorConvertY[sector];

if (!((gM <= gradMagnitude[imageIndex(xCorr + i, yCorr + j, heightCorr)])|| (gM <= gradMagnitude[imageIndex(xCorr - 1, yCorr - j, heightCorr)]))){

result[index] = gM;

}else{

result[index] = 0.0;

}

hysterisisSecondPass was padded and had its inner double loop unrolled and the inner loop was replaced with divisions to eliminate branching.

//old

if (pixel >= lowerThreshold && pixel < upperThreshold){

int iLower = (x == 0) ? 0 : -1;

int iUpper = (x == width - 1) ? 0 : 1;

int jLower = (height == 0) ? 0 : -1;

int jUpper = (x == width - 1) ? 0 : 1;

ret = 0.0;

for (int i = iLower; i <= iUpper; i++){

for (int j = jLower; j <= jUpper; j++){

if (image[imageIndex(x + i, y + j, height)]){

ret = upperThreshold + 1;

}

}

}

}

//new

if (level >= lowerThreshold && level < upperThreshold){

level = 0.0;

level += image[imageIndex(xCorr + 1, yCorr + 1, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + 0, yCorr + 1, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + -1, yCorr + 1, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + 1, yCorr + 0, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + -1, yCorr + 0, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + 1, yCorr + -1, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + 0, yCorr + -1, heightCorr)] / upperThreshold;

level += image[imageIndex(xCorr + -1, yCorr + -1, heightCorr)] / upperThreshold;

level = level == 0 ? 0 : 255;

}

Grey world

The optimization for grey world was minimal, and in turn provided a minimal increase in execution.

Global memory calls were reduced from twelve to one, as well as reducing branching if statements.

if(averageR > 0.05){

newGrey[ imageIndex(x, y, height) ].Red = (255 < Original[ imageIndex(x, y, height) ].Red * averageAll / averageR)? 255 : Original[ imageIndex(x, y, height) ].Red * averageAll / averageR;

}else{

newGrey[ imageIndex(x, y, height) ].Red = (255 < Original[ imageIndex(x, y, height) ].Red + averageAll)? 255 : Original[ imageIndex(x, y, height) ].Red + averageAll;

}

if(averageB > 0.05){

newGrey[ imageIndex(x, y, height) ].Blue = (255 < Original[ imageIndex(x, y, height) ].Blue * averageAll / averageB)? 255 : Original[ imageIndex(x, y, height) ].Blue * averageAll / averageB;

}else{

newGrey[ imageIndex(x, y, height) ].Blue = (255 < Original[ imageIndex(x, y, height) ].Blue + averageAll)? 255 : Original[ imageIndex(x, y, height) ].Blue + averageAll;

}

if(averageG > 0.05){

newGrey[ imageIndex(x, y, height) ].Green = (255 < Original[ imageIndex(x, y, height) ].Green * averageAll / averageG)? 255 : Original[ imageIndex(x, y, height) ].Green * averageAll / averageG;

}else{

newGrey[ imageIndex(x, y, height) ].Green = (255 < Original[ imageIndex(x, y, height) ].Green + averageAll)? 255 : Original[ imageIndex(x, y, height) ].Green + averageAll;

}

GPU_RGBApixel orig = Original[imageIndex(x, y, height)];

GPU_RGBApixel grey = { 0, 0, 0 };

if(averageR > 0.05){

grey.Red = orig.Red * averageAll / averageR;

}else{

grey.Red = orig.Red + averageAll;

}

if(averageB > 0.05){

grey.Blue = orig.Blue * averageAll / averageB;

}else{

grey.Blue = orig.Blue + averageAll;

}

if(averageG > 0.05){

grey.Green = orig.Green * averageAll / averageG;

}else{

grey.Green = orig.Green + averageAll;

}

if(grey.Red > 255) grey.Red = 255;

if(grey.Blue > 255) grey.Blue = 255;

if(grey.Green > 255) grey.Green = 255;

newGrey[imageIndex(x, y, height)] = grey;

Autocontrast

The only optimization made was reducing global memory access from 2 to 1.

const RGBApixel& imgPixel = img[imageIndex(x, y, height)]; RGBApixel& resultPixel = result[imageIndex(x, y, height)];

int index = imageIndex(x, y, height); const RGBApixel& imgPixel = img[index]; RGBApixel& resultPixel = result[index];

Resize

When optimizing resize, on the development machine where the code was being written, there seemed to be an increase of performance but when ran on the benchmark system there was no performance increase shown.

Logic was tinkered with, and some redundant caculations were eliminated storing the reults in local registers. As well, global memory access was reduced from 12 times a thread to 4. Finally, the result from the pixel calculation was stored in a temp local register and then that was writted to global memory, reducing the writes to global memory.

newImage[i * NewWidth + j].Red =

(ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Red)

+ (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Red)

+ (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Red)

+ (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Red));

newImage[i * NewWidth + j].Green =

(ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Green)

+ (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Green)

+ (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Green)

+ (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Green));

newImage[i * NewWidth + j].Blue =

(ebmpBYTE)((1.0 - ThetaI - ThetaJ + ThetaI*ThetaJ)*(OldImage[I * OldWidth + J].Blue)

+ (ThetaI - ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J].Blue)

+ (ThetaJ - ThetaI*ThetaJ)*(OldImage[I * OldWidth + J + 1].Blue)

+ (ThetaI*ThetaJ)*(OldImage[(I + 1) * OldWidth + J + 1].Blue));

float t4 = ThetaI*ThetaJ; float t1 = 1.0 - ThetaI - ThetaJ + t4; float t2 = ThetaI - t4; float t3 = ThetaJ - t4; int p1 = I * OldWidth + J; int p2 = (I + 1) * OldWidth + J; int p3 = I * OldWidth + J + 1; int p4 = (I + 1) * OldWidth + J + 1; RGBApixel temp; RGBApixel temp1 = OldImage[p1]; RGBApixel temp3 = OldImage[p3]; RGBApixel temp2 = OldImage[p2]; RGBApixel temp4 = OldImage[p4]; temp.Red =(ebmpBYTE)((t1)*(temp1.Red) + (t2)*(temp2.Red) + (t3)*(temp3.Red) + (t4)*(temp4.Red)); temp.Green = (ebmpBYTE)((t1)*(temp1.Green) + (t2)*(temp2.Green) + (t3)*(temp3.Green) + (t4)*(temp4.Green)); temp.Blue = (ebmpBYTE)((t1)*(temp1.Blue) + (t2)*(temp2.Blue) + (t3)*(temp3.Blue) + (t4)*(temp4.Blue));

During the optimization of resize, an attempt was made to make use of share memory. While eventually it was made to work, there was a 250% performance decrease. Here was the last working attempt at shared memory use. This method introduced branching in order to check for edge detection of the blocks. This method caused severe bank conflicts, and after further analysis revealed that it would not work due to each resultant pixels calculations were independent of every other pixel thus shared memory use was not possible.

__global__ void c_newPixel(RGBApixel * OldImage, RGBApixel *newImage,int OldWidth, int OldHeight, int NewWidth, int NewHeight){

int j = blockIdx.x * blockDim.x + threadIdx.x;

int i = blockIdx.y * blockDim.y + threadIdx.y;

int tx = threadIdx.x;

int ty = threadIdx.y;

if (i >= NewHeight - 1 || j >= NewWidth - 1){

return;

}

int I, J;

float ThetaI, ThetaJ;

ThetaJ = (float)(j*(OldHeight - 1.0)) / (float)(NewHeight - 1.0);

J = (int)floor(ThetaJ);

ThetaJ -= J;

ThetaI = (float)(i*(OldWidth - 1.0)) / (float)(NewWidth - 1.0);

I = (int)floor(ThetaI);

ThetaI -= I;

int blkArraySize = (blockDim.x + 1) * (blockDim.x + 1);

int blkArrayWidth = (blockDim.x + 1) * ty;

extern __shared__ RGBApixel pixel[];

pixel[tx + blkArrayWidth] = OldImage[I * OldWidth + J];

pixel[tx + blkArrayWidth + blkArraySize] = OldImage[(I + 1) * OldWidth + J];

if (ty == blockDim.y - 1){

int I1;

float ThetaI1;

ThetaI1 = (float)((i + 1)*(OldWidth - 1.0)) / (float)(NewWidth - 1.0);

I1 = (int)floor(ThetaI1);

int J1;

float ThetaJ1;

ThetaJ1 = (float)((j)*(OldHeight - 1.0)) / (float)(NewHeight - 1.0);

J1 = (int)floor(ThetaJ1);

pixel[tx + blockDim.x + blkArrayWidth] = OldImage[I1 * OldWidth + J1];

pixel[tx + blockDim.x + blkArrayWidth + blkArraySize] = OldImage[(I1 + 1) * OldWidth + J1];

}

if (tx == blockDim.x - 1){

int J1;

float ThetaJ1;

ThetaJ1 = (float)((j + 1)*(OldHeight - 1.0)) / (float)(NewHeight - 1.0);

J1 = (int)floor(ThetaJ1);

int I1;

float ThetaI1;

ThetaI1 = (float)((i)*(OldWidth - 1.0)) / (float)(NewWidth - 1.0);

I1 = (int)floor(ThetaI1);

pixel[tx + blkArrayWidth + 1] = OldImage[I1 * OldWidth + J1];

pixel[tx + blkArrayWidth + 1 + blkArraySize] = OldImage[(I1 + 1) * OldWidth + J1];

}

__syncthreads();

RGBApixel pixelResult;

float t4 = ThetaI*ThetaJ;

float t1 = 1.0 - ThetaI - ThetaJ + t4;

float t2 = ThetaI - t4;

float t3 = ThetaJ - t4;

int ps1 = tx + blkArrayWidth;

int ps2 = tx + blkArraySize + blkArrayWidth;

int ps3 = ps1 + 1;

int ps4 = ps2 + 1;

pixelResult.Red =(ebmpBYTE)((t1)*(pixel[ps1].Red)

+ (t2)*(pixel[ps2].Red)

+ (t3)*(pixel[ps3].Red)

+ (t4)*(pixel[ps4].Red));

pixelResult.Green =(ebmpBYTE)((t1)*(pixel[ps1].Green)

+ (t2)*(pixel[ps2].Green)

+ (t3)*(pixel[ps3].Green)

+ (t4)*(pixel[ps4].Green));

pixelResult.Blue = (ebmpBYTE)((t1)*(pixel[ps1].Blue)

+ (t2)*(pixel[ps2].Blue)

+ (t3)*(pixel[ps3].Blue)

+ (t4)*(pixel[ps4].Blue));

newImage[i * NewWidth + j] = pixelResult;

}