Difference between revisions of "GPU610/TeamKCM"

Byungho Kim (talk | contribs) (→Profile) |

Byungho Kim (talk | contribs) (→Profile Result) |

||

| Line 416: | Line 416: | ||

==== Profile Result ==== | ==== Profile Result ==== | ||

| − | ===== | + | ===== Sample Data = 250 ===== |

| − | ===== | + | ===== Sample Data = 1000 ===== |

| − | ===== | + | ===== Sample Data = 5000 ===== |

| − | ===== | + | ===== Sample Data = 10000 ===== |

==== Chart ==== | ==== Chart ==== | ||

Revision as of 21:17, 1 December 2014

GPU610/DPS915 | Student List | Group and Project Index | Student Resources | Glossary

Contents

- 1 Team KCM

- 1.1 Team Members

- 1.2 Progress

- 1.2.1 Assignment 1

- 1.2.2 Assignment 2

- 1.2.3 Assignment 3

Team KCM

Team Members

- Byungho Kim, Some responsibility

- Taeyang Chung, Some responsibility

- SeungYeon Moon, Some responsibility

Progress

Assignment 1

Byungho's Findings

Introduction

To enhance image quality, most of S/W use convolution algorithm with sharpen kernel. The convolution algorithm is based on pixel window and kernel multiplication and the kernel which consists of 3 x 3 matrix or 5 x 5 matrix. Therefore, as we learned at the GPU610 class, I can speed up the program using CUDA library

| Original Image | Enhanced Image |

|---|---|

These two images show the result of the S/W. Left picture is the original image and right picture is obtained by the S/W. 3x3 matrix sharpen kernel is used.

Convolution

| IP11 | IP12 | IP13 | IP14 | IP15 | IP16 |

| IP21 | IP22 | IP23 | IP24 | IP25 | IP26 |

| IP31 | IP32 | IP33 | IP34 | IP35 | IP36 |

| IP41 | IP42 | IP43 | IP44 | IP45 | IP46 |

| K0 | K1 | K2 |

| K3 | K4 | k5 |

| k6 | k7 | k8 |

The 33th output pixel will be given by

OP33 = IP22 x K0 + IP23 x K1 + IP24 x K2 + IP32 x K3 + IP33 x K4 + IP34 x K5 + IP42 x K6 + IP43 x K7 + IP44 x K8

Analysis

The profile shows that filter function occupies the most of processing time.

% cumulative self self total time seconds seconds calls ns/call ns/call name 93.59 1.46 1.46 filter(image_t*, double*, int, double, double) 6.41 1.56 0.10 5038848 19.85 19.85 put_pixel_unsafe(image_t*, unsigned int, unsigned int, unsigned char, unsigned char, unsigned char) 0.00 1.56 0.00 2 0.00 0.00 alloc_img(unsigned int, unsigned int) 0.00 1.56 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z7get_ppmP8_IO_FILE 0.00 1.56 0.00 1 0.00 0.00 get_ppm(_IO_FILE*)

In the filter function, 3 x 3 mask walk through all RGB pixels and calculate convolution of them.

image filter(image im, double *K, int Ks, double divisor, double offset)

{

image oi;

unsigned int ix, iy, l;

int kx, ky;

double cp[3];

oi = alloc_img(im->width, im->height);

if ( oi != NULL ) {

for(ix=0; ix < im->width; ix++) {

for(iy=0; iy < im->height; iy++) {

cp[0] = cp[1] = cp[2] = 0.0;

for(kx=-Ks; kx <= Ks; kx++) {

for(ky=-Ks; ky <= Ks; ky++) {

for(l=0; l<3; l++)

cp[l] += (K[(kx+Ks) +

(ky+Ks)*(2*Ks+1)]/divisor) *

((double)GET_PIXEL_CHECK(im, ix+kx, iy+ky, l)) + offset;

}

}

for(l=0; l<3; l++)

cp[l] = (cp[l]>255.0) ? 255.0 : ((cp[l]<0.0) ? 0.0 : cp[l]) ;

put_pixel_unsafe(oi, ix, iy,

(color_component)cp[1],

(color_component)cp[2],

(color_component)cp[0]);

}

}

return oi;

}

return NULL;

}

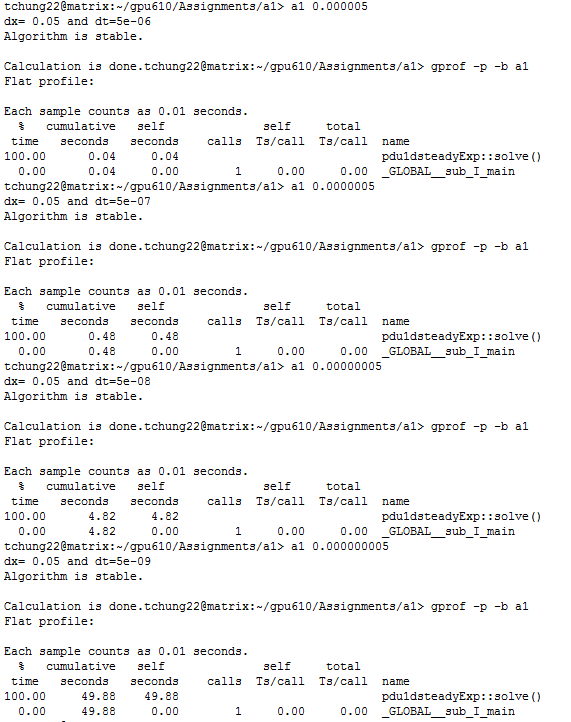

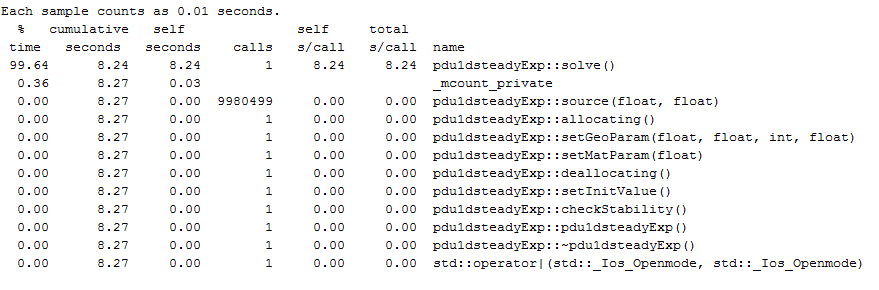

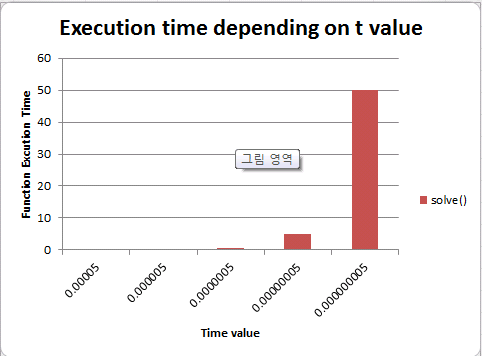

Taeyang's Findings

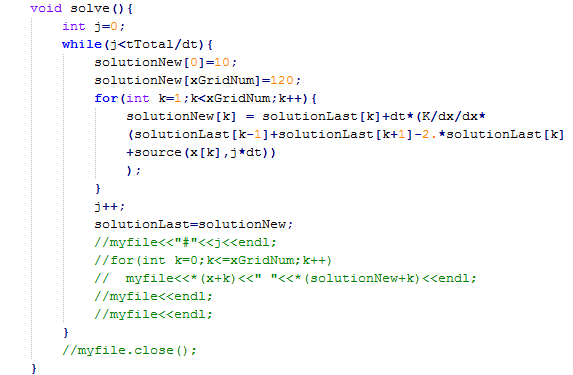

My program is to solve the heat transfer problem using explicit method. I found this source code at http://www.pengfeidu.net/Media/pdf/1dUnsteadyHeatTransferCode.pdf

The 'Solve()' function takes 3 arguments and as t-value decreases the processing time increases as shown below

The 'Solve()' function:

void solve(){

int j=0;

while(j<tTotal/dt){

solutionNew[0]=10;

solutionNew[xGridNum]=120;

for(int k=1;k<xGridNum;k++){

solutionNew[k] = solutionLast[k]+dt*(K/dx/dx*

(solutionLast[k-1]+solutionLast[k+1]-2.*solutionLast[k]

+source(x[k],j*dt)));

}

j++;

solutionLast=solutionNew;

}

}

By Implementing parallel solution, I believe I can shorten the processing time.

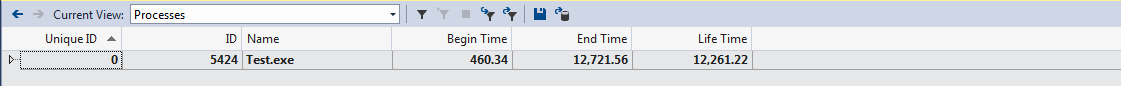

SeungYeon's Findings

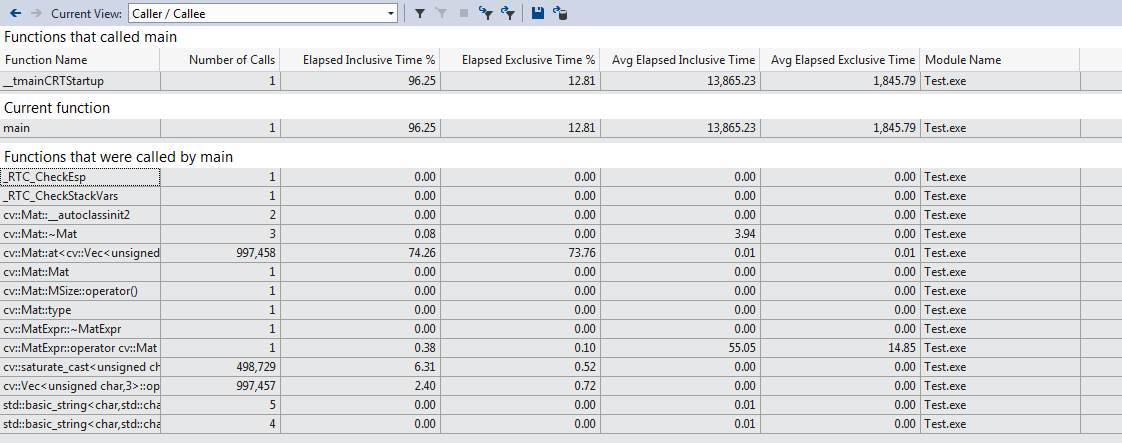

There are many types of image processing or operations can be done. Some of examples are rotating,re-sizing,blurring, etc... For Assignment 1, I decided to work with one of the operation that can be done with an image in c++, which deals with brightness and contrast of an image. I was able to find a open source code from "openCV" website it stands for Open Source Computer Vision, is open source libraries developed by Intel for image and video processing. The libraries can be installed in any platform, and i was able to install it in my windows platform, and all test runs all done using visual studio 2013, Test file is compiled and profiled using performance wizard vs13. Following website openCV.org is an official documentation website of openCV libraries, with code examples for many image and video type operations

The open source code for the program requires 3 user inputs which are path to an image and alpha,beta values which will be multiplied and added to each pixels' in the image. To make sure test run is accurate, i had to change some code in the program and hard code in the values for alpha and beta.

Here is two test runs using same image but in different file size

Test run with an image size of 500KB

Total Time takes is 12 seconds

Test run with an image size of 2MB

Total Time takes is 14 seconds

SeungYeons' observation for As_1

Changing contrast and brightness of an image in the function is implemented with a scale * matrix operation. M_A(x,y) = ALPHA * M_B(x,y) + BETA;

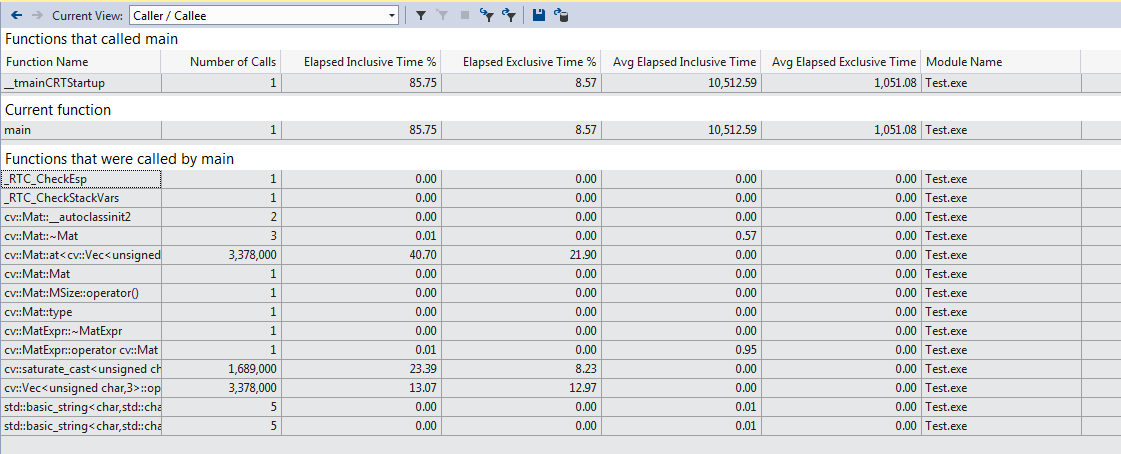

Assignment 2

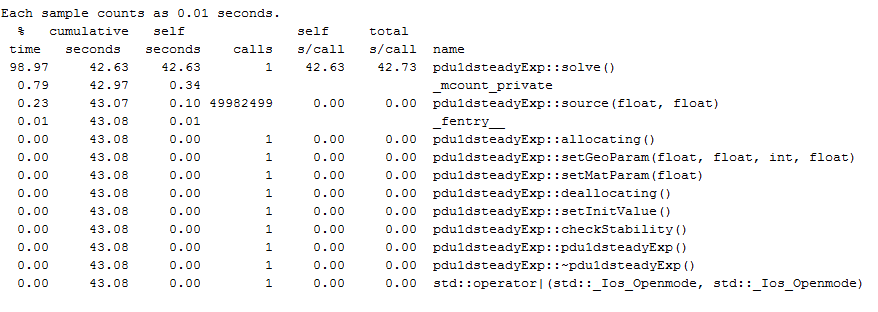

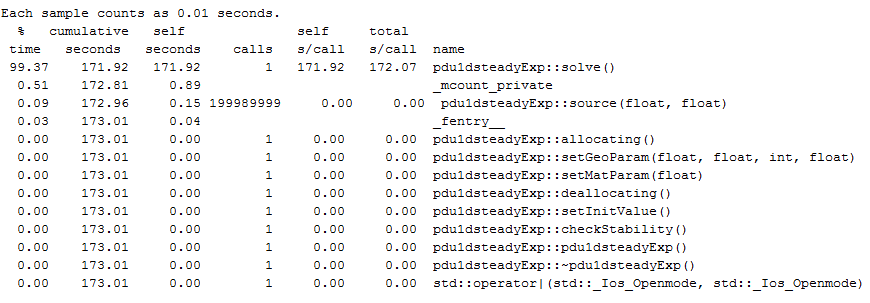

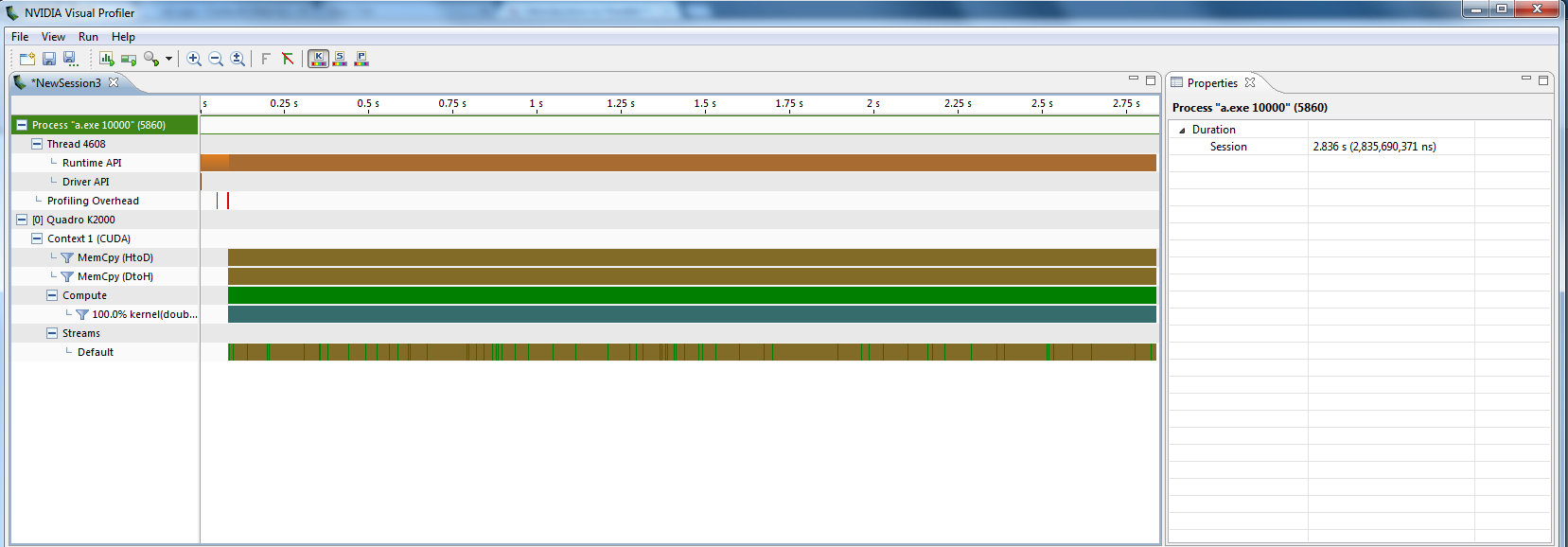

For our Assignment 2 we decided to work with heat transfer problem.

To compare the speed difference between the original code and parallelized code, we first profiled the original code in matrix environment an parallelized code in school computer. (using gporf and nvvp)

Profile results

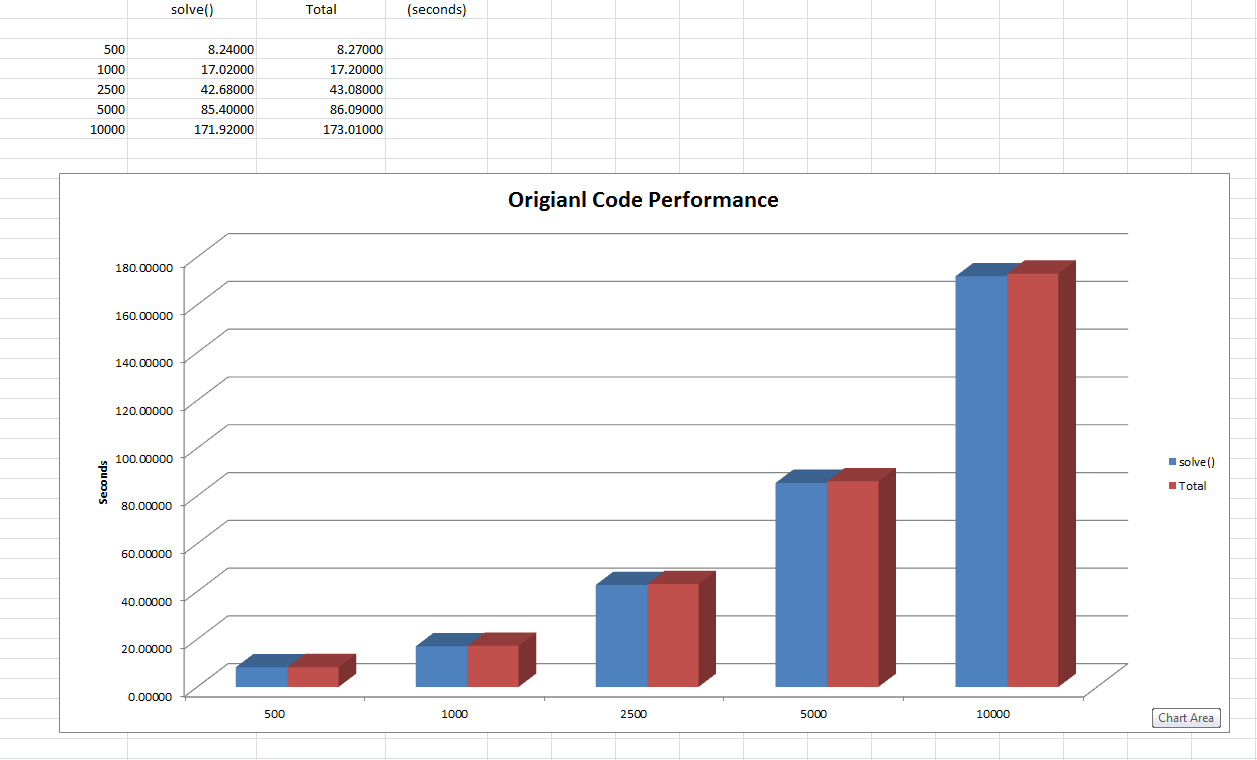

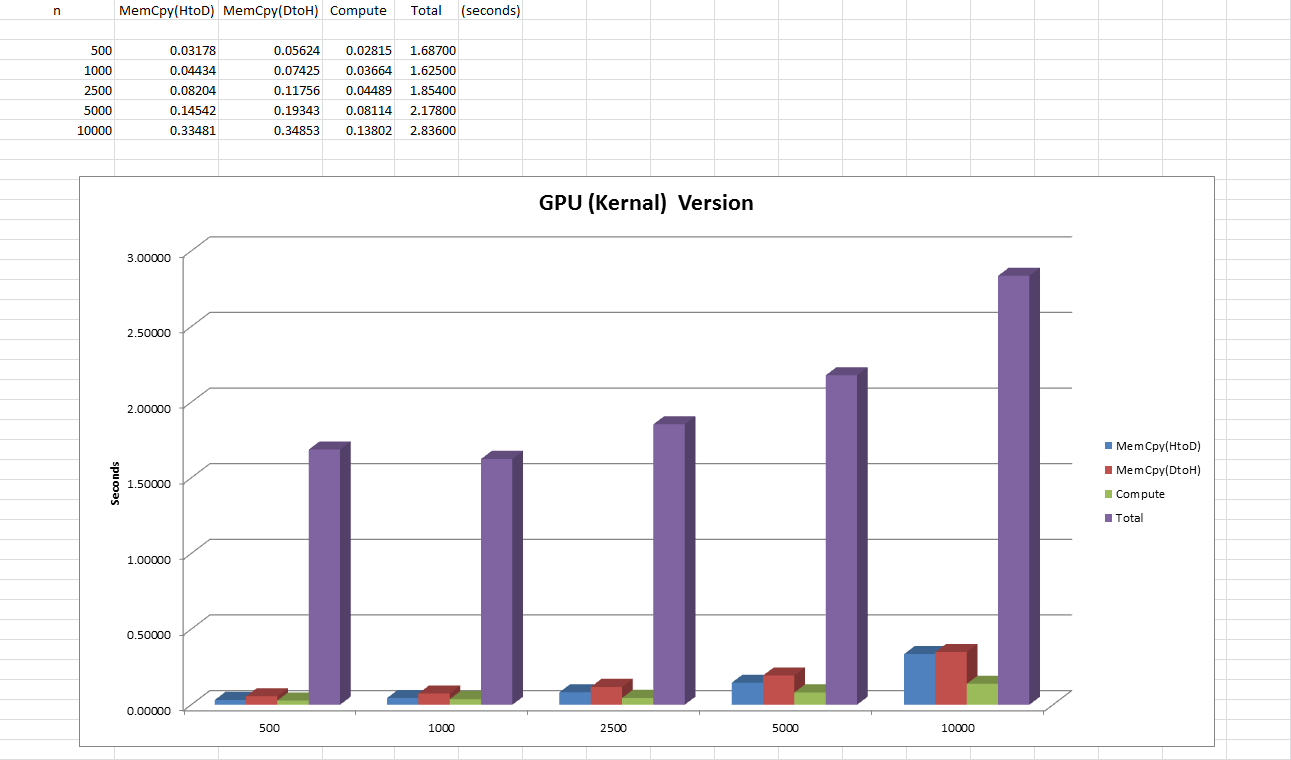

Each Program was tested with 5 different input data.(500,1000,2500,5000,10000)

Profile #1 (Original code)

Sample Data = 500

Sample Data = 2500

Sample Data = 10000

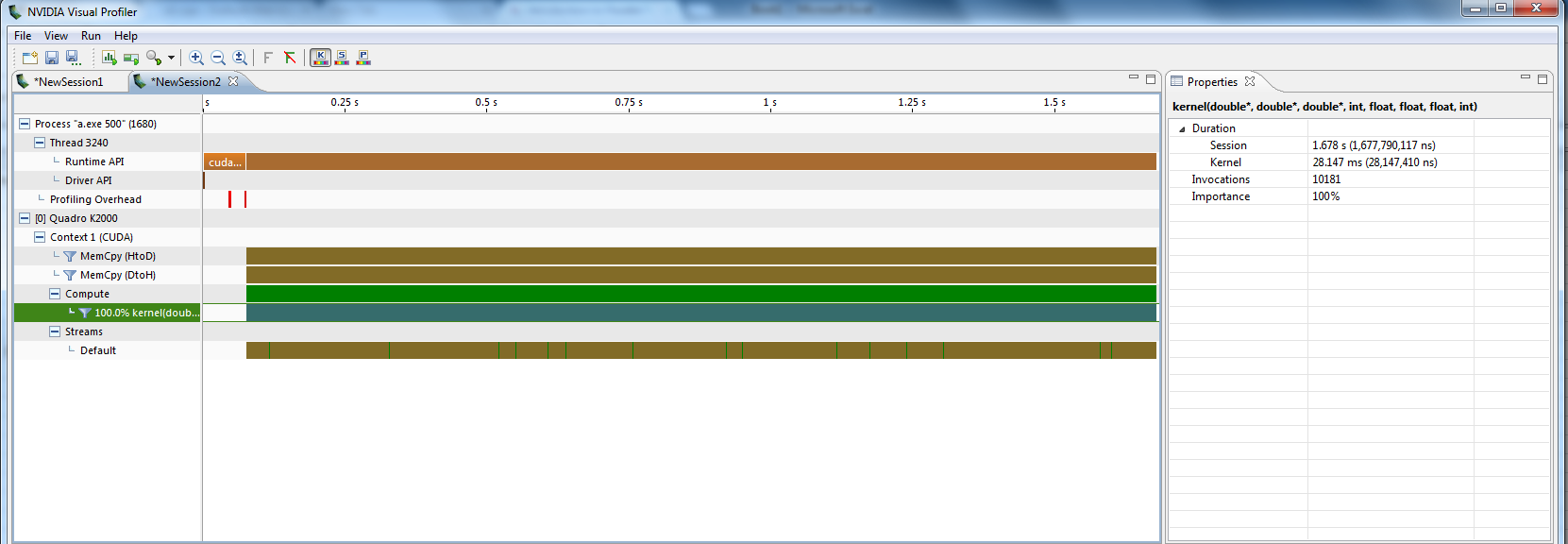

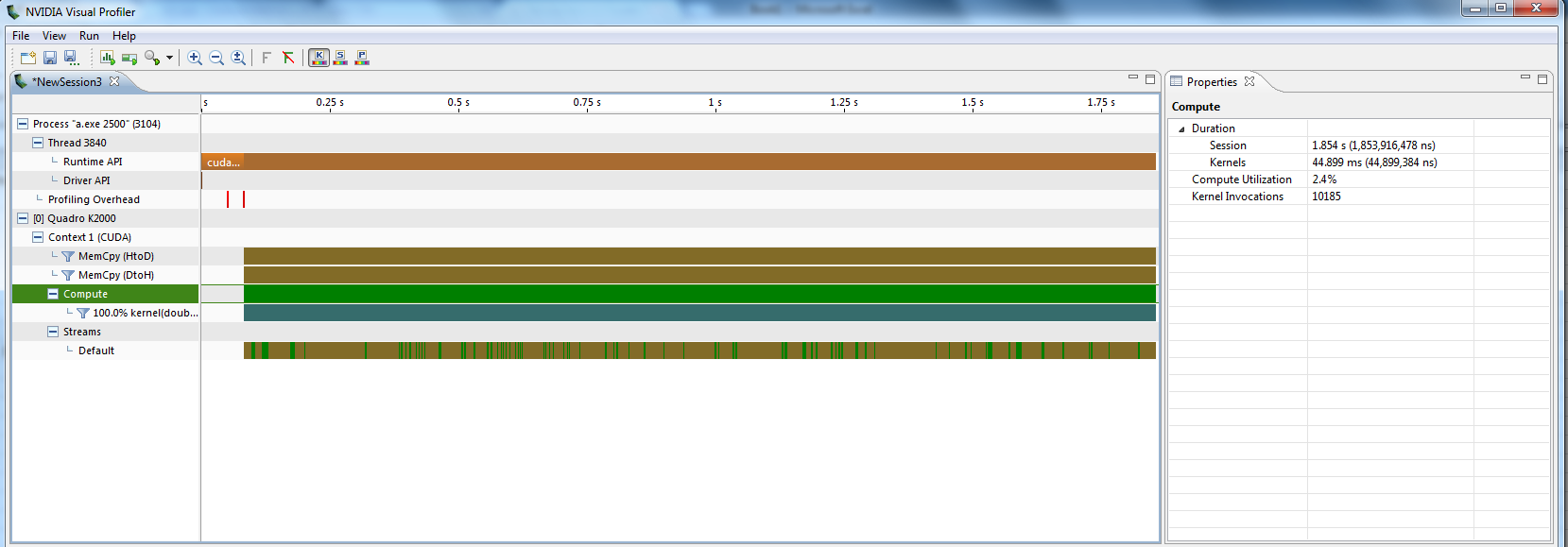

Profile #2 (GPU implementation version)

Sample Data = 500

Sample Data = 2500

Sample Data = 10000

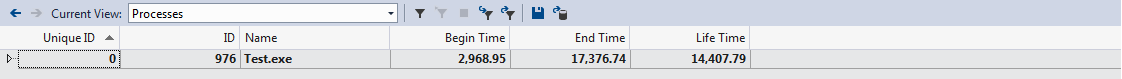

Summary of profiling

We tested CPU and GPU implementation version with same data sizes and showed significant speedup in result after using kernel.

Summary of profiling in Chart

Charts with some of main operation(function) calls for CPU version and GPU(kernel)

Original

GPU (kernel)

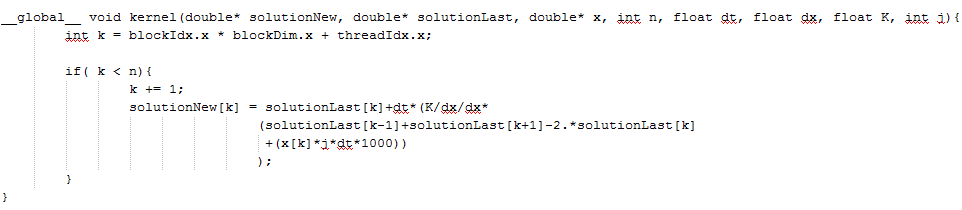

Screen shot of solve function in CPU and GPU(kernel) versions

CPU

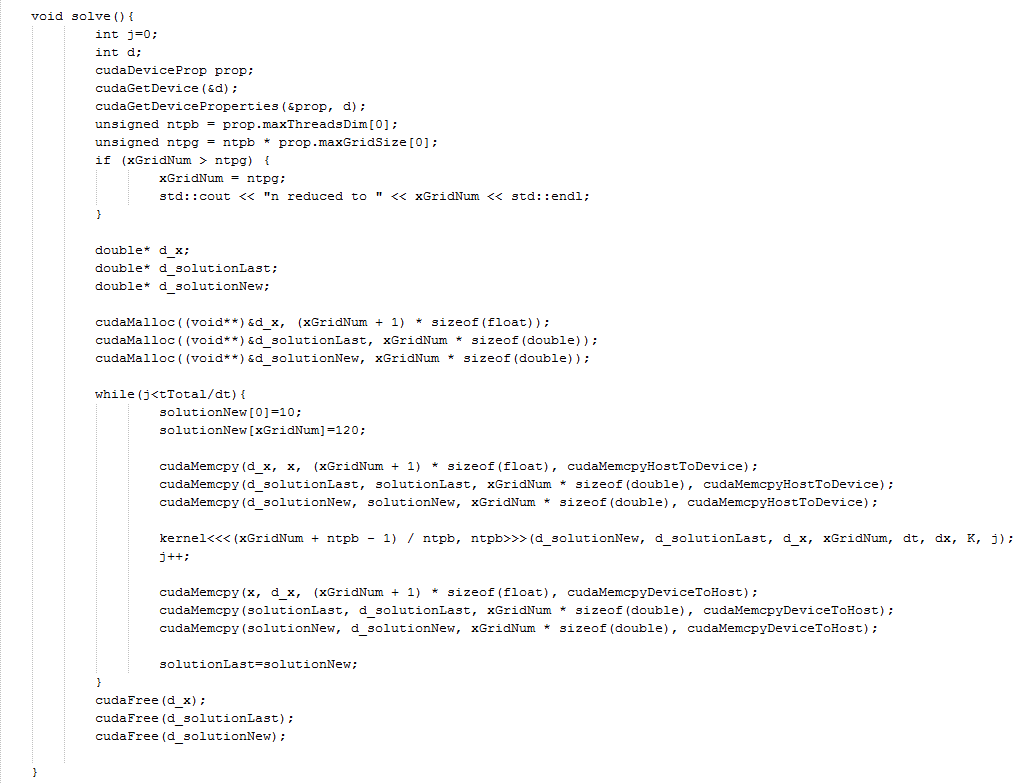

GPU(Solve) and Kernel

Assignment 3

For assignment 3, we were checking everything we can do to improve the performance and found 2 thing we can do. Firstly, in the while loop, there were 6 times of memory copy functions called, and we found that we can reduce 6 times to 1 time by using device address pointer switching. Furthermore, we found that if the sample number n is less that 1024, we can use shared memory in the kernel.

Reducing Memory Copy Calls

Assignment 2 Code

void solve(){

int j=0;

int d;

cudaDeviceProp prop;

cudaGetDevice(&d);

cudaGetDeviceProperties(&prop, d);

unsigned ntpb = prop.maxThreadsDim[0];

unsigned ntpg = ntpb * prop.maxGridSize[0];

if (xGridNum > ntpg) {

xGridNum = ntpg;

std::cout << "n reduced to " << xGridNum << std::endl;

}

double* d_x;

double* d_solutionLast;

double* d_solutionNew;

cudaMalloc((void**)&d_x, (xGridNum + 1) * sizeof(float));

cudaMalloc((void**)&d_solutionLast, xGridNum * sizeof(double));

cudaMalloc((void**)&d_solutionNew, xGridNum * sizeof(double));

while(j<tTotal/dt){

solutionNew[0]=10;

solutionNew[xGridNum]=120;

cudaMemcpy(d_x, x, (xGridNum + 1) * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_solutionLast, solutionLast, xGridNum * sizeof(double), cudaMemcpyHostToDevice);

cudaMemcpy(d_solutionNew, solutionNew, xGridNum * sizeof(double), cudaMemcpyHostToDevice);

kernel<<<(xGridNum + ntpb - 1) / ntpb, ntpb>>>(d_solutionNew, d_solutionLast, d_x, xGridNum, dt, dx, K, j);

j++;

cudaMemcpy(x, d_x, (xGridNum + 1) * sizeof(float), cudaMemcpyDeviceToHost);

cudaMemcpy(solutionLast, d_solutionLast, xGridNum * sizeof(double), cudaMemcpyDeviceToHost);

cudaMemcpy(solutionNew, d_solutionNew, xGridNum * sizeof(double), cudaMemcpyDeviceToHost);

solutionLast=solutionNew;

}

for(j = 0; j <= xGridNum; j++){

std::cout << solutionLast[j] << endl;

}

cudaFree(d_x);

cudaFree(d_solutionLast);

cudaFree(d_solutionNew);

}

Improved Code

void solve(){

int j=0;

int d;

cudaDeviceProp prop;

cudaGetDevice(&d);

cudaGetDeviceProperties(&prop, d);

unsigned ntpb = prop.maxThreadsDim[0];

unsigned ntpg = ntpb * prop.maxGridSize[0];

if (xGridNum > ntpg) {

xGridNum = ntpg;

std::cout << "n reduced to " << xGridNum << std::endl;

}

double* d_x;

double* d_solutionA;

double* d_solutionB;

cudaMalloc((void**)&d_x, (xGridNum + 1) * sizeof(float));

cudaMalloc((void**)&d_solutionA, xGridNum * sizeof(double));

cudaMalloc((void**)&d_solutionB, xGridNum * sizeof(double));

double* d_solutionTemp;

cudaMemcpy(d_x, x, (xGridNum + 1) * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_solutionA, solutionNew, xGridNum * sizeof(double), cudaMemcpyHostToDevice);

cudaMemcpy(d_solutionB, solutionLast, xGridNum * sizeof(double), cudaMemcpyHostToDevice);

std::ofstream myfile;

myfile.open ("output.txt");

// A = new, B = last

while( j < tTotal / dt){

kernel<<<(xGridNum + ntpb - 1) / ntpb, ntpb>>>(d_solutionA, d_solutionB, d_x, xGridNum, dt, dx, K, j);

j++;

cudaMemcpy(solutionNew, d_solutionA, xGridNum * sizeof(double), cudaMemcpyDeviceToHost);

/*

myfile << "Time" << tTotal/dt << std::endl;

for(int i = 0; i <= xGridNum; i++){

myfile << solutionNew[i] << ":";

}

*/

d_solutionTemp = d_solutionA;

d_solutionA = d_solutionB;

d_solutionB = d_solutionTemp;

}

myfile.close();

cudaFree(d_x);

cudaFree(d_solutionA);

cudaFree(d_solutionB);

cudaDeviceReset(); // Important

}

Assignment 2 Code

__global__ void kernel(double* solutionNew, double* solutionLast, double* x, int n, float dt, float dx, float K, int j){

int k = blockIdx.x * blockDim.x + threadIdx.x;

if( k < n){

k += 1;

solutionNew[k] = solutionLast[k]+dt*(K/dx/dx*

(solutionLast[k-1]+solutionLast[k+1]-2.*solutionLast[k]

+(x[k]*j*dt*1000))

);

}

}Improved Code

__global__ void kernel(double* solutionNew, double* solutionLast, double* x, int n, float dt, float dx, float K, int j){

int k = blockIdx.x * blockDim.x + threadIdx.x;

double *t_c;

__shared__ double s_c[1024];

if ( n < 1024 && k < (n + 1))

s_c[k] = solutionLast[k];

__syncthreads();

if( n < 1024) t_c = s_c;

else t_c = solutionLast;

if( k == 0){

solutionNew[k] = 10;

} else if( k == n){

solutionNew[k] = 120;

}

if( k < n){

k += 1;

solutionNew[k] = t_c[k]+dt*(K/dx/dx*

(t_c[k-1]+t_c[k+1]-2.*t_c[k]

+(x[k]*j*dt*1000))

);

}

}